🥳Happy to share that we have three papers accepted to #ICLR2026. Congrats to our authors and see you in Rio🌴🇧🇷. Check the thread for highlights👇

12.02.2026 13:08 —

👍 6

🔁 2

💬 1

📌 0

With some trepidation, I'm putting this out into the world:

gershmanlab.com/textbook.html

It's a textbook called Computational Foundations of Cognitive Neuroscience, which I wrote for my class.

My hope is that this will be a living document, continuously improved as I get feedback.

09.01.2026 01:27 —

👍 585

🔁 237

💬 16

📌 10

🚀 Excited to announce that I'm looking for people (PhD/Postdoc) to join my Cognitive Modelling group @uniosnabrueck.bsky.social.

If you want to join a genuinely curious, welcoming and inclusive community of Coxis, apply here:

tinyurl.com/coxijobs

Please RT - deadline is Jan 4‼️

18.12.2025 14:52 —

👍 78

🔁 54

💬 1

📌 5

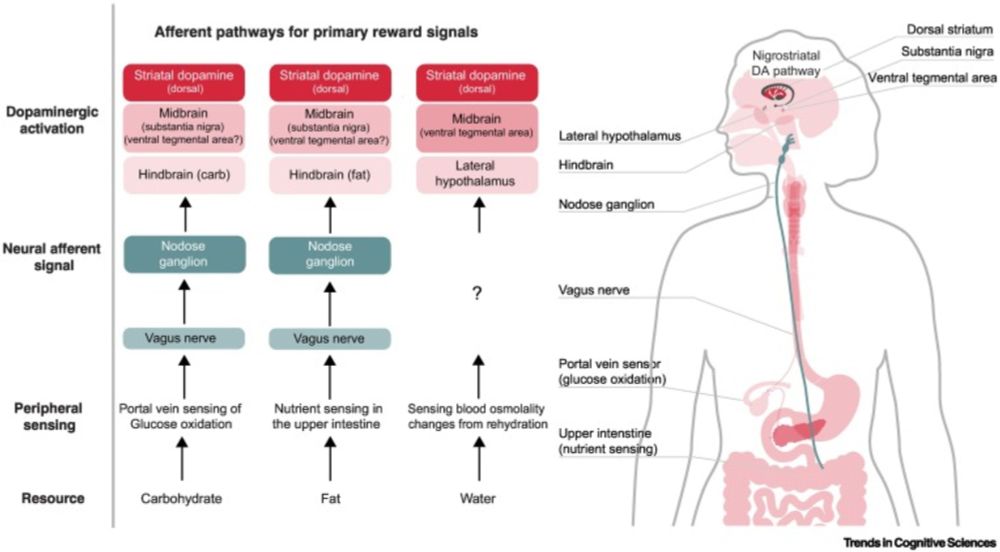

** Recruiting a postdoc ** We are looking for a postdoc to work on emotion, mental health, and interoception, based in London at @ucl.ac.uk in my lab (Clinical and Affective Neuroscience). Part of a large Wellcome Grant (co-led with the brilliant @camillanord.bsky.social)

24.11.2025 12:22 —

👍 86

🔁 87

💬 1

📌 1

Pleased to share new work with @sflippl.bsky.social @eberleoliver.bsky.social @thomasmcgee.bsky.social & undergrad interns at Institute for Pure and Applied Mathematics, UCLA.

Algorithmic Primitives and Compositional Geometry of Reasoning in Language Models

www.arxiv.org/pdf/2510.15987

🧵1/n

27.10.2025 18:13 —

👍 74

🔁 16

💬 1

📌 0

Introducing hMFC: A Bayesian hierarchical model of trial-to-trial fluctuations in decision criterion! Now out in @plos.org Comp Bio.

Led by Robin Vloeberghs with @anne-urai.bsky.social and Scott Linderman

Paper: desenderlab.com/wp-content/u... Thread ↓↓↓

#PsychSciSky #Neuroscience #Neuroskyence

25.09.2025 09:13 —

👍 51

🔁 30

💬 3

📌 0

SleepECG

If you are concerned with performance, I also recommend checking out SleepECG (Systole uses their version of the Pan-Tompkins algorithm under the hood): sleepecg.readthedocs.io/en/stable/

16.09.2025 11:52 —

👍 1

🔁 0

💬 0

📌 0

Hi @koeniglab.bsky.social! Thanks for the shout-out. I created Systole while I was a postdoc in the ECG lab, but since I left a few years ago, I am no longer actively maintaining it.

16.09.2025 11:52 —

👍 1

🔁 0

💬 1

📌 0

does someone good at coding & analysis want to work remotely w/ us in the coming few months (before end of 2025), as a paid consultant? project will be on neurofeedback (fMRI, ECoG, calcium imaging). we'll work towards developing the experiments & analysis pipelines together. if so pls DM me ur CV🧠📈

01.09.2025 13:06 —

👍 42

🔁 37

💬 4

📌 0

A Gaussian process showing that the allowed time series are forced to be compatible with data

I’m especially proud of this article I wrote about Gaussian Processes for the Recast blog! 🥳

GPs are super interesting, but it’s not easy to wrap your head around them at first 🤔

This is a medium level (more intuition than math) introduction to GPs for time series.

getrecast.com/gaussian-pro...

29.08.2025 17:11 —

👍 80

🔁 23

💬 2

📌 1

An illustration of a man falling out of a piece of paper, with text that says: How an academic betrayal led me to change my authorship practices.

"The day the paper was published should have been a moment of pride. Instead, it felt like a quiet erasure." #ScienceWorkingLife https://scim.ag/4p3eH5g

25.08.2025 13:24 —

👍 60

🔁 21

💬 2

📌 3

I made this Computational Psychiatry Starter Pack a while ago and was wondering if I may be missing anyone who has joined Bluesky since?

I will add anyone who uses computational models to address questions in psychiatry research. :)

go.bsky.app/5PTy9Zj

08.08.2025 07:32 —

👍 17

🔁 12

💬 11

📌 1

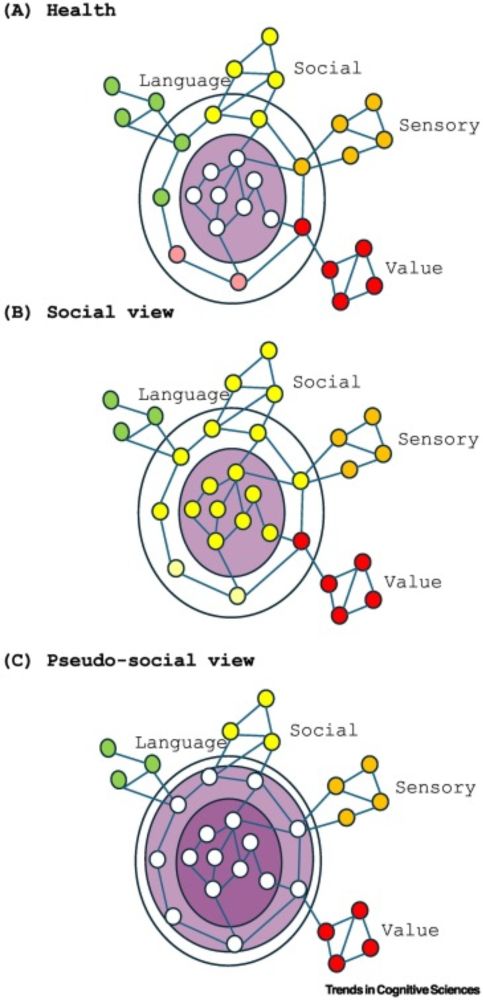

My first first-author paper, comparing the properties of memory-augmented large language models and human episodic memory, is out in @cp-trendscognsci.bsky.social!

authors.elsevier.com/a/1lV174sIRv...

Here’s a quick 🧵(1/n)

26.07.2025 15:05 —

👍 68

🔁 19

💬 3

📌 3

OSF

After five years of confused staring at Greek letters, it is my absolute pleasure to finally share our (with @smfleming.bsky.social) computational model of mental imagery and reality monitoring: Perceptual Reality Monitoring as Higher-Order inference on Sensory Precision ✨

osf.io/preprints/ps...

23.07.2025 14:18 —

👍 129

🔁 35

💬 4

📌 4

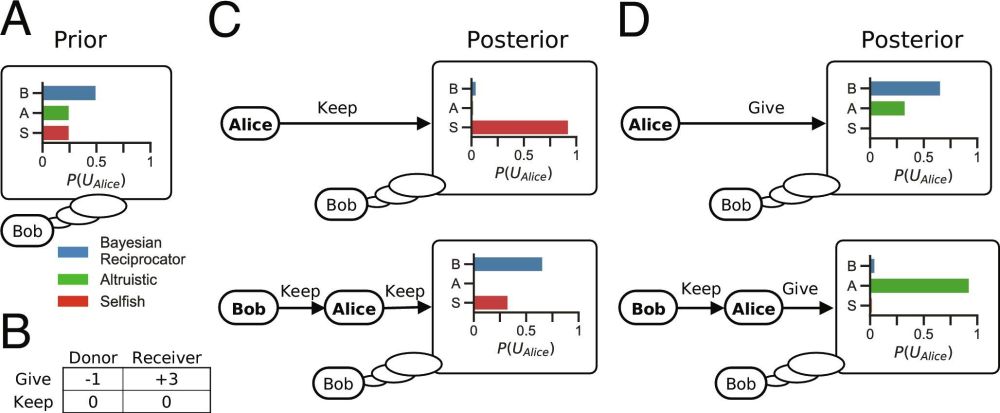

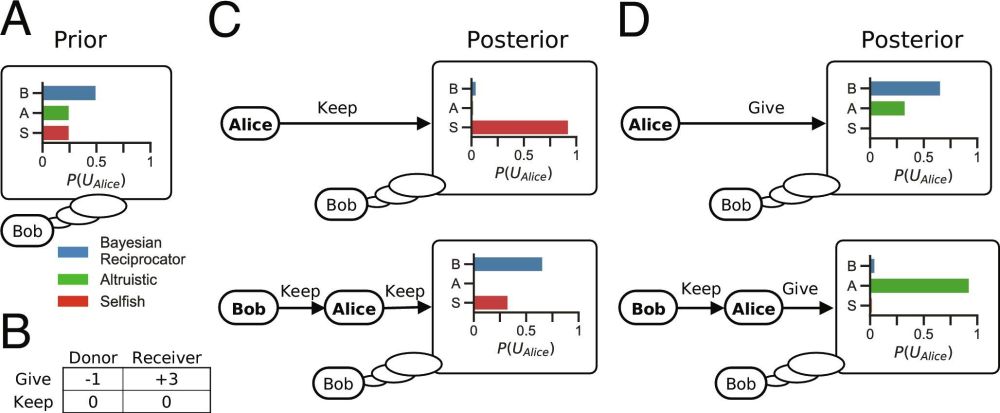

Evolving general cooperation with a Bayesian theory of mind | PNAS

Theories of the evolution of cooperation through reciprocity explain how unrelated self-interested individuals can accomplish more together than th...

Our new paper is out in PNAS: "Evolving general cooperation with a Bayesian theory of mind"!

Humans are the ultimate cooperators. We coordinate on a scale and scope no other species (nor AI) can match. What makes this possible? 🧵

www.pnas.org/doi/10.1073/...

22.07.2025 06:03 —

👍 92

🔁 36

💬 2

📌 2

memo-lang

A language for mental models

memo is a new probabilistic programming language for modeling social inferences quickly. Looks like a real advance over previous approaches: fast, python-based, easily integrated into data analysis. Super cool!

pypi.org/project/memo...

and

osf.io/preprints/ps...

09.07.2025 20:56 —

👍 35

🔁 3

💬 0

📌 1

Interoception vs. Exteroception: Cardiac interoception competes with tactile perception, yet also facilitates self-relevance encoding https://www.biorxiv.org/content/10.1101/2025.06.25.660685v1

28.06.2025 00:15 —

👍 15

🔁 11

💬 0

📌 0

We need your help!!! 🧠🧪💤

If you are human, you fall asleep at least once a day! What happens in your mind then?

Scientists actually know very little about this private moment.

We propose a 20-min survey to get as much data as possible!

Here is the link:

redcap.link/DriftingMinds

19.06.2025 14:43 —

👍 51

🔁 42

💬 3

📌 6

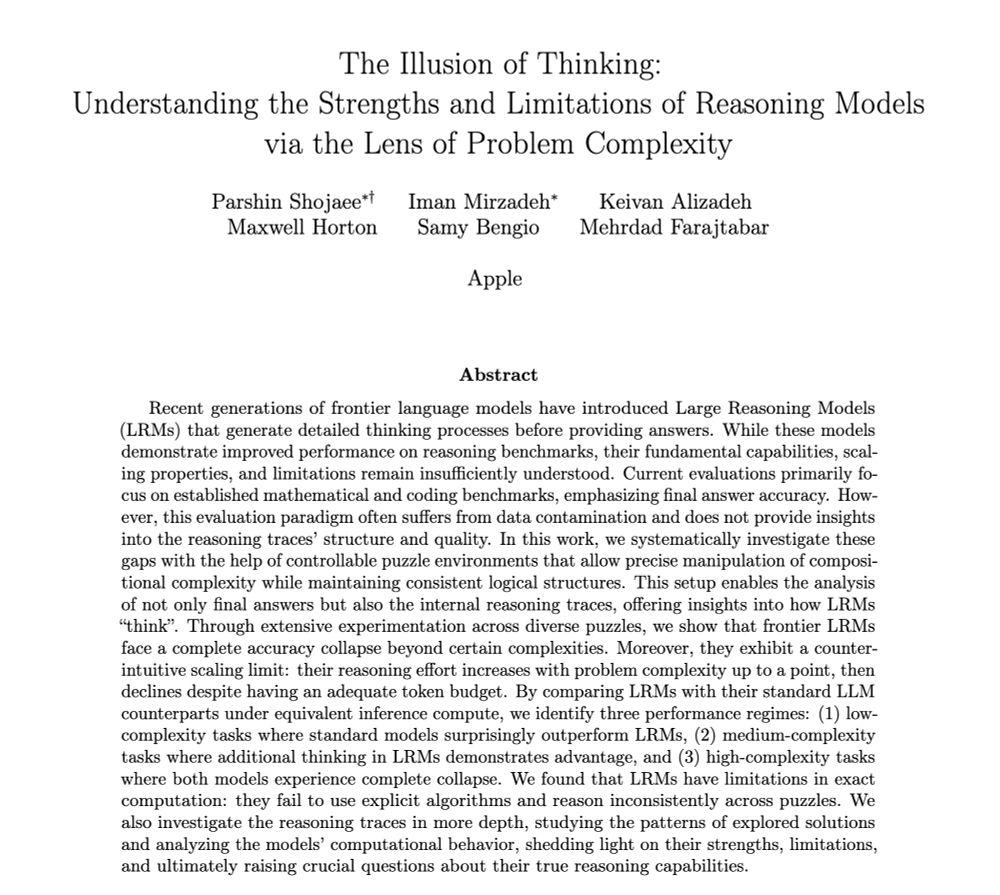

The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity

Parshin Shojaee∗†, Iman Mirzadeh∗, Keivan Alizadeh, Maxwell Horton, Samy Bengio, Mehrdad Farajtabar

Apple

Abstract

Recent generations of frontier language models have introduced Large Reasoning Models (LRMs) that generate detailed thinking processes before providing answers. While these models demonstrate improved performance on reasoning benchmarks, their fundamental capabilities, scaling properties, and limitations remain insufficiently understood. Current evaluations primarily focus on established mathematical and coding benchmarks, emphasizing final answer accuracy. However, this evaluation paradigm often suffers from data contamination and does not provide insights into the reasoning traces’ structure and quality. In this work, we systematically investigate these gaps with the help of controllable puzzle environments that allow precise manipulation of compositional complexity while maintaining consistent logical structures. This setup enables the analysis of not only final answers but also the internal reasoning traces, offering insights into how LRMs “think”. Through extensive experimentation across diverse puzzles, we show that frontier LRMs face a complete accuracy collapse beyond certain complexities. Moreover, they exhibit a counter-intuitive scaling limit: their reasoning effort increases with problem complexity up to a point, then declines despite having an adequate token budget. By comparing LRMs with their standard LLM counterparts under equivalent inference compute, we identify three performance regimes: (1) low-complexity tasks where standard models surprisingly outperform LRMs, (2) medium-complexity tasks where additional thinking in LRMs demonstrates advantage, and (3) high-complexity tasks where both models experience complete collapse. We found that LRMs have limitations in exact computation: they fail to use explicit …

If I have time I'll put together a more detailed thread tomorrow, but for now, I think this new paper about limitations of Chain-of-Thought models could be quite important. Worth a look if you're interested in these sorts of things.

ml-site.cdn-apple.com/papers/the-i...

08.06.2025 06:35 —

👍 332

🔁 64

💬 15

📌 9

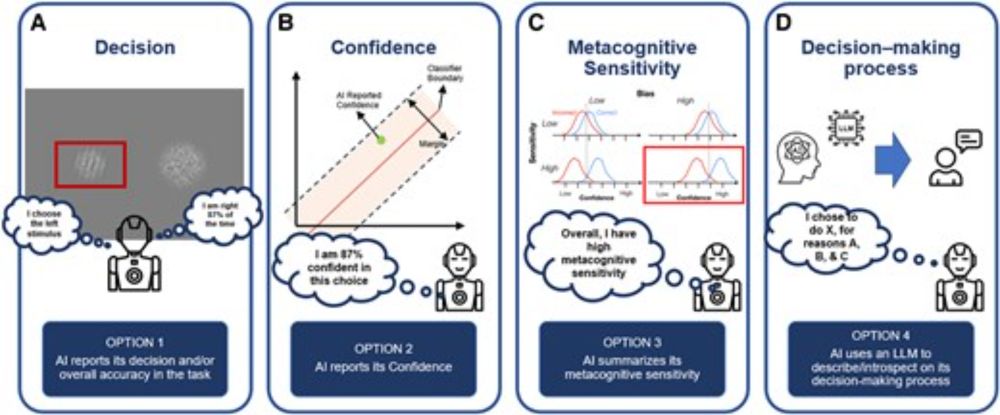

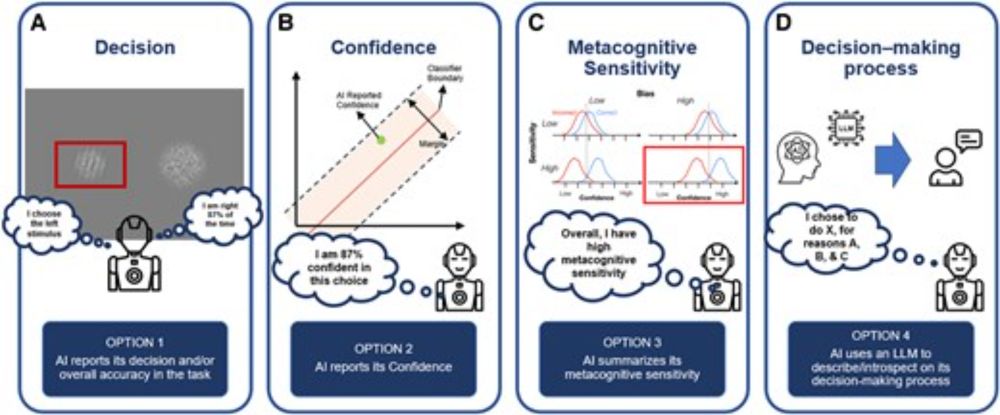

Metacognitive sensitivity: The key to calibrating trust and optimal decision making with AI

Abstract. Knowing when to trust and incorporate the advice from artificially intelligent (AI) systems is of increasing importance in the modern world. Rese

Led by postdoc Doyeon Lee and grad student Joseph Pruitt, our lab has a new Perspectives piece in PNAS Nexus:

"Metacognitive sensitivity: The key to calibrating trust and optimal decision-making with AI"

academic.oup.com/pnasnexus/ar...

With co-authors Tianyu Zhou and Eric Du 1/

27.05.2025 14:27 —

👍 11

🔁 6

💬 1

📌 0