![Figure 1. Illustration of why AI systems cannot realistically scale to human cognition within the foreseeable future: (b) Human cognitive capacities (such as reasoning, communication, problem solving, learning, concept formation, planning etc.) can handle unbounded situations across many domains, ranging from simple to complex. (a) Engineers create AI systems using machine learning from human data. (d) In an attempt to approximate human cognition a lot of data is consumed. (c) Making AI systems that approximate human cognition is intractable (van Rooij, Guest, et al., 2024), i.e., the required resources (e.g. time, data) grows prohibitively fast as input domains get more complex, leading to diminishing returns. (a) Any existing AI system is created in limited time (hours, months or years, not millennia or eons). Therefore, existing AI systems cannot realistically have the domain-general cognitive capacities that humans have. [Made with elements from freepik.com.]](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:24ngrqljhck7hpvbgrg7a23k/bafkreie5dbxbxpaszqwfbgne6pi7kf26im6chq45olvzxyyrg7eitq6uue@jpeg)

✨ Updated preprint ✨

Iris van Rooij & Olivia Guest (2026). Combining Psychology with Artificial Intelligence: What Could Possibly Go Wrong? PsyArXiv osf.io/preprints/psyarxiv/aue4m_v2 @olivia.science

Our aim is to make these ideas accessible to psych students, among others. Hope we succeeded 🙂

06.01.2026 17:41 — 👍 154 🔁 65 💬 5 📌 11

bigram of the year: "surprisal brainrot"

21.12.2025 03:33 — 👍 4 🔁 0 💬 0 📌 0

Yi Ting Huang shares remarks with a large crowd of Language Science Day attendees

Shevaun Lewis presents to a room of people in front of a projector screen that reads "More Than One Brain: Studying Conversation"

Language Science Day panelists sit at the front of the room sharing their expertise

Graduate students and faculty share their poster presentations at the Language Science Day poster session

We captured so many great moments from Language Science Day, thanks to Andrea Zukowski! We wish we could share them all here, but you can see the full gallery on our Flickr page. Click here to check them out: flickr.com/photos/umd-l...

22.10.2025 14:16 — 👍 5 🔁 1 💬 0 📌 0

If any friends are at Cog Sci, I’ll be in SF tomorrow! Let me know if you’d like to meet!

01.08.2025 03:24 — 👍 1 🔁 0 💬 0 📌 0

DEFINITELY not talking about predictive coding the next time i go back to the bay area

14.07.2025 01:42 — 👍 1 🔁 0 💬 0 📌 0

The sycophantic tone of ChatGPT always sounded familiar, and then I recognized where I'd heard it before: author response letters to reviewer comments.

"You're exactly right, that's a great point!"

"Thank you so much for this insight!"

Also how it always agrees even when it contradicts itself.

09.07.2025 09:24 — 👍 188 🔁 22 💬 5 📌 4

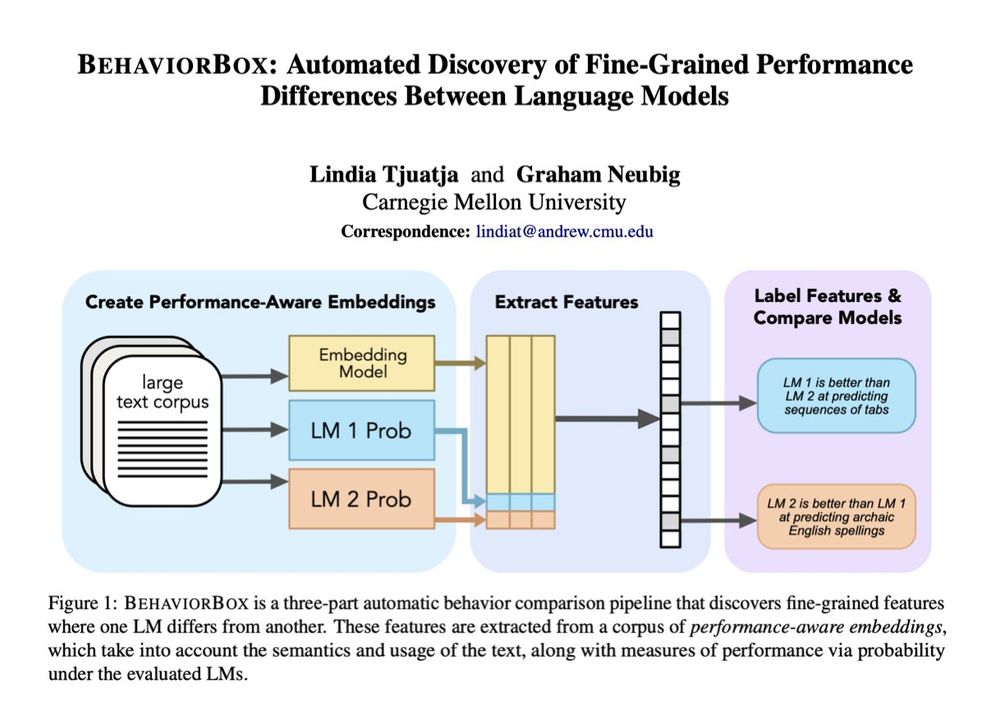

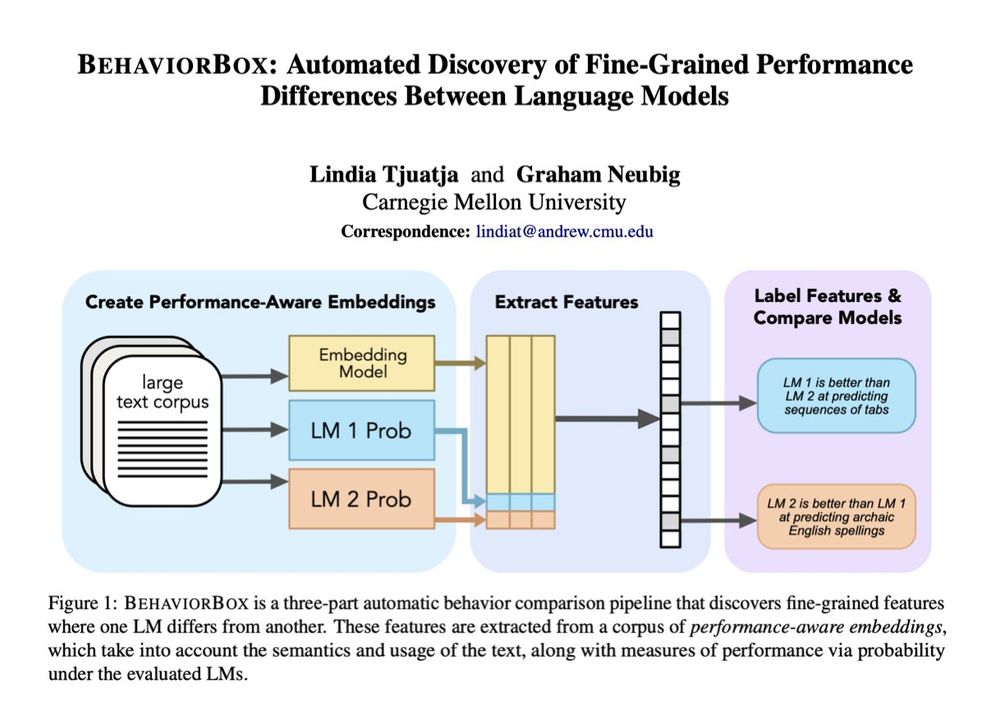

When it comes to text prediction, where does one LM outperform another? If you've ever worked on LM evals, you know this question is a lot more complex than it seems. In our new #acl2025 paper, we developed a method to find fine-grained differences between LMs:

🧵1/9

09.06.2025 13:47 — 👍 72 🔁 21 💬 2 📌 2

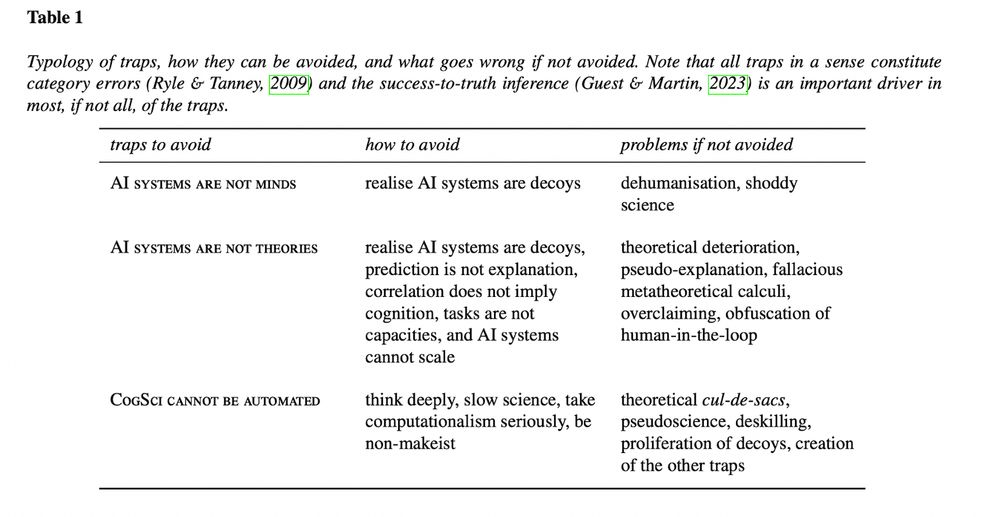

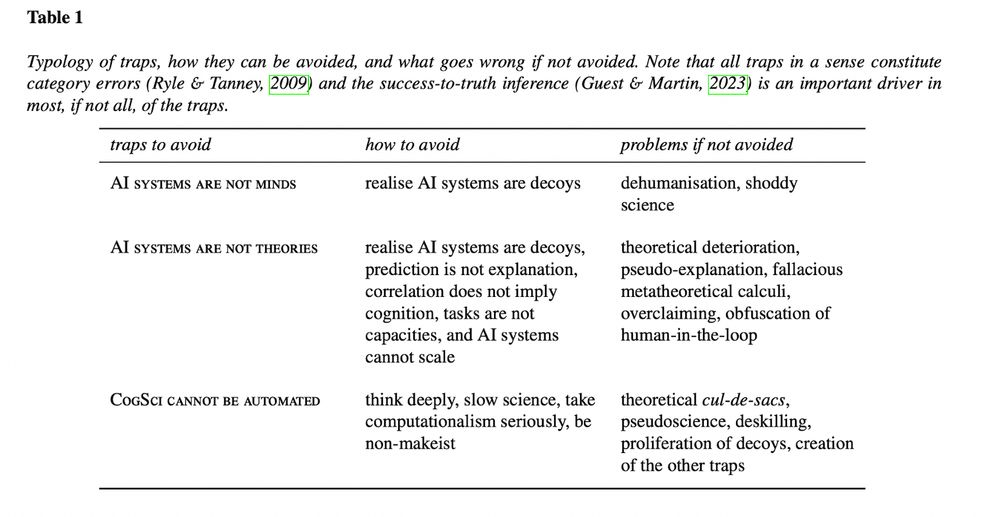

Table 1

Typology of traps, how they can be avoided, and what goes wrong if not avoided. Note that all traps in a sense constitute category errors (Ryle & Tanney, 2009) and the success-to-truth inference (Guest & Martin, 2023) is an important driver in most, if not all, of the traps.

NEW paper! 💭🖥️

“Combining Psychology with Artificial Intelligence: What could possibly go wrong?”

— Brief review paper by @olivia.science & myself, highlighting traps to avoid when combining Psych with AI, and why this is so important. Check out our proposed way forward! 🌟💡

osf.io/preprints/ps...

14.05.2025 21:23 — 👍 347 🔁 105 💬 15 📌 25

Capturing Online SRC/ORC Effort with Memory Measures from a Minimalist Parser

Aniello De Santo. Proceedings of the Workshop on Cognitive Modeling and Computational Linguistics. 2025.

A bit late, but since I really like this paper, a bit of self-advertising! At CMCL today I am presenting work showing that metrics measuring how a Minimalist Grammar parser modulates memory usage can help us model self-paced reading data for SRC/ORC contrasts: aclanthology.org/2025.cmcl-1.5/

03.05.2025 16:43 — 👍 28 🔁 6 💬 4 📌 0

New preprint on controlled generation from LMs!

I'll be presenting at NENLP tomorrow 12:50-2:00pm

Longer thread coming soon :)

10.04.2025 19:19 — 👍 20 🔁 9 💬 1 📌 0

thanks a lot Kanishka! happy i made a little contribution & that the probabilities are corrected :)

08.04.2025 15:10 — 👍 2 🔁 0 💬 0 📌 0

![from minicons import scorer

from nltk.tokenize import TweetTokenizer

lm = scorer.IncrementalLMScorer("gpt2")

# your own tokenizer function that returns a list of words

# given some sentence input

word_tokenizer = TweetTokenizer().tokenize

# word scoring

lm.word_score_tokenized(

["I was a matron in France", "I was a mat in France"],

bos_token=True, # needed for GPT-2/Pythia and NOT needed for others

tokenize_function=word_tokenizer,

bow_correction=True, # Oh and Schuler correction

surprisal=True,

base_two=True

)

'''

First word = -log_2 P(word | <beginning of text>)

[[('I', 6.1522440910339355),

('was', 4.033324718475342),

('a', 4.879510402679443),

('matron', 17.611848831176758),

('in', 2.5804288387298584),

('France', 9.036953926086426)],

[('I', 6.1522440910339355),

('was', 4.033324718475342),

('a', 4.879510402679443),

('mat', 19.385351181030273),

('in', 6.76780366897583),

('France', 10.574726104736328)]]

'''](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:njnapclhkbrhe3hsq44q2e4w/bafkreibw7fjs4zeocjmfvpr4fo7wikiqenbdz7b3clwq2rolckpoqwkssu@jpeg)

another day another minicons update (potentially a significant one for psycholinguists?)

"Word" scoring is now a thing! You just have to supply your own splitting function!

pip install -U minicons for merriment
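For anyone wondering what a "word" score means when the model works on subword tokens: a common approach is to sum the surprisals of the tokens that make up each word. Below is a minimal sketch of that idea only. It is not how minicons implements it, it leaves out the Oh and Schuler correction, and the function name `word_surprisals` and the probabilities are made up for illustration.

```python
import math


def word_surprisals(words, token_probs_per_word):
    """Sum per-token surprisals (in bits) within each word.

    token_probs_per_word[i] holds the (hypothetical) conditional
    probabilities of the subword tokens that make up words[i].
    """
    result = []
    for word, probs in zip(words, token_probs_per_word):
        # Surprisal of one token is -log2 P(token | context);
        # a word's surprisal is the sum over its tokens.
        bits = sum(-math.log2(p) for p in probs)
        result.append((word, bits))
    return result


# Made-up probabilities, for illustration only:
# "a" is a single token, "matron" is split into two tokens.
words = ["a", "matron"]
token_probs = [[0.5], [0.25, 0.125]]
scores = word_surprisals(words, token_probs)
print(scores)
```

Summing surprisals is the same as taking the negative log of the product of the token probabilities, which is why a word split into several tokens still gets a single number.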

02.04.2025 03:35 — 👍 21 🔁 7 💬 3 📌 0

I’ll also be presenting a talk based on this work Friday afternoon at HSP. Very excited to share it with a psycholinguistics-focused audience!

25.03.2025 23:21 — 👍 7 🔁 1 💬 0 📌 0

I’ll be at #HSP2025! I’m presenting a poster in session 4 on how semantic factors might affect timing data from a speeded cloze task (w @virmalised.us, Philip Resnik, and @colinphillips.bsky.social)

hsp2025.github.io/abstracts/19...

25.03.2025 23:08 — 👍 10 🔁 3 💬 0 📌 0

I’ll be presenting a poster at HSP 2025 in about a week. It’s on memory for pronominal clitic placement in Spanish, come stop by and say hi if you can!

22.03.2025 22:33 — 👍 3 🔁 2 💬 0 📌 0

YouTube video by Society for Mathematical Psychology

Iris van Rooij keynote at MathPsych/ICCM 2024

🎬🎥🍿 Video of my keynote at MathPsych2024 now available online www.youtube.com/watch?v=WrwN...

#CogSci #CriticalAI #AIhype #AGI #PsychSci #PhilSci 🧪

11.01.2025 17:34 — 👍 111 🔁 33 💬 4 📌 2

Perspectives on Intelligence: Community Survey

Research survey exploring how NLP/ML/CogSci researchers define and use the concept of intelligence.

What do YOU mean by "intelligence", and does ChatGPT fit your definition?

We collected the major criteria used in CogSci and other fields, and designed a survey to find out!

Access link: www.survey-xact.dk/collect

Code: 4S7V-SN4M-S536

Time: 5-10 mins

04.12.2024 07:48 — 👍 32 🔁 13 💬 2 📌 10

starter pack for the Computational Linguistics and Information Processing group at the University of Maryland - get all your NLP and data science here!

go.bsky.app/V9qWjEi

10.12.2024 17:14 — 👍 29 🔁 12 💬 1 📌 1

@kanishka.bsky.social and I have made a starter pack for researchers working broadly on linguistic interpretability and LLMs!

go.bsky.app/F9qzAUn

Please message me or comment on this post if you've noticed someone who we forgot or would like to be added yourself!

26.11.2024 14:49 — 👍 37 🔁 9 💬 10 📌 1

"Hey everyone! 👋 I’ve created a starter pack of South Asian artists, authors, academics, activists, and orgs. I’ll keep it updated—DM me or reply if you or someone you know should be added! ✨" go.bsky.app/GGd6dxU

23.11.2024 13:50 — 👍 152 🔁 61 💬 42 📌 4

I'll be there as well - excited to chat!

11.11.2024 20:30 — 👍 1 🔁 0 💬 0 📌 0

Generalizations across filler-gap dependencies in neural language models

Katherine Howitt, Sathvik Nair, Allison Dods, Robert Melvin Hopkins. Proceedings of the 28th Conference on Computational Natural Language Learning. 2024.

RNN LMs can learn many syntactic relations but fail to capture a shared generalization across constructions. Augmenting the training data with more examples helps, but not how we'd expect!

(with Katherine Howitt, @allidods.bsky.social , and Robert Hopkins)

aclanthology.org/2024.conll-1...

11.11.2024 01:00 — 👍 1 🔁 0 💬 0 📌 0

A Psycholinguistic Evaluation of Language Models’ Sensitivity to Argument Roles

Eun-Kyoung Rosa Lee, Sathvik Nair, Naomi Feldman. Findings of the Association for Computational Linguistics: EMNLP 2024. 2024.

Language models can identify who did what to whom, but they may not be using human-like mechanisms, based on materials from different psycholinguistic studies finding systematic patterns in human processing.

(with Rosa Lee & Naomi Feldman)

aclanthology.org/2024.finding...

11.11.2024 01:00 — 👍 2 🔁 0 💬 1 📌 1

I’ll be presenting two posters on (psycho)linguistically motivated perspectives on LM generalization at #EMNLP2024!

1. Sensitivity to Argument Roles - Session 2 & #BlackBoxNLP

2. Learning & Filler-Gap Dependencies - #CoNLL

Excited to chat with other folks interested in compling x cogsci!

papers⬇️

11.11.2024 01:00 — 👍 7 🔁 1 💬 1 📌 0

5-gram of the day: "language models from computational linguistics"

06.06.2024 15:17 — 👍 0 🔁 0 💬 0 📌 0

I’m so sorry but cool to see another psycholinguist who came from the CS route! I interned on a database team and hated it. The other reviews were good. It’s just a very random process

18.04.2024 01:17 — 👍 1 🔁 0 💬 0 📌 0

GRFP reviews sigh

16.04.2024 22:05 — 👍 1 🔁 0 💬 1 📌 0

Today I learned that I may not have a successful psycholinguistics career because I got a B in databases.

16.04.2024 18:13 — 👍 2 🔁 0 💬 2 📌 0

Panicked after seeing AGI on my tax form

30.03.2024 04:31 — 👍 2 🔁 0 💬 0 📌 0

“There is no ethical way to use the major AI image generators. All of them are trained on stolen images, and all of them are built for the purpose of deskilling, disempowering and replacing real human artists.”

This sums it up perfectly. It’s not a conversation.

04.03.2024 02:20 — 👍 17388 🔁 7484 💬 159 📌 163

i am a cognitive scientist working on auditory perception at the University of Auckland and the Yale Child Study Center 🇳🇿🇺🇸🇫🇷🇨🇦

lab: themusiclab.org

personal: mehr.nz

intro to my research: youtu.be/-vJ7Jygr1eg

I am Ted Gibson, and I study human language in MIT's Brain and Cognitive Sciences. My lab is sometimes called the Language Lab or Tedlab. I work a lot with Ev Fedorenko.

PhD, CogSci @ University of Delaware (ongoing)

Asst Prof at Cornell Info Sci and Cornell Tech. Responsible AI

https://angelina-wang.github.io/

🔎 distributional information and syntactic structure in the 🧠 | 💼 postdoc @ Université de Genève | 🎓 MPI for Psycholinguistics, BCBL, Utrecht University | 🎨 | she/her

Information with representation, by and for D.C. residents.

Worker-led nonprofit newsroom, funded by readers like YOU.

🌐 https://51st.news

Support the work: https://51st.news/signup

East Coast patriot joining the culture war on the side of Culture 🎹📖✍️

ARS/NE/BOS/hockey/socialism/jazz

https://act.dsausa.org/s/3128.1zrbhX

யாதும் ஊரே யாவரும் கேளிர் 🐅

Computational cognitive neuroscience of language @bcbl + @uconn

PhD student in Language Science at @ucirvine.bsky.social

https://shiupadhye.github.io/

Making invisible peer review contributions visible 🌟 Tracking 2,970 exceptional ARR reviewers across 1,073 institutions | Open source | arrgreatreviewers.org

Kempner Institute research fellow @Harvard interested in scaling up (deep) reinforcement learning theories of human cognition

prev: deepmind, umich, msr

https://cogscikid.com/

Research @ OpenAI

ex-CMU Robotics, Berkeley AI Research

@SuvanshSanjeev 🐦

Language scientist at UC Irvine

NLP Graduate Researcher at The University of Tehran #NLProc

Housing and transit fan. Anti fascism. My "bluesky posts" represent myself and do not represent my employer or any affiliated groups and organizations.

PhD student in psycholinguistics and neurolinguistics at University of Kansas. Dog dad of two Boston Terriers.🐶

Lecturer in cognitive psychology @uniofhull.bsky.social. Subjects, verbs, and the other superfluities of language. she/her

stonekate.github.io
