‘It’s a nightmare.’ U.S. funding cuts threaten academic science jobs at all levels

“There is a lot of pressure to essentially leave the country or not pursue research,” one Ph.D. student says

I can't stress enough how close U.S. science is to the cliff.

"Numbers released in May by the National Science Foundation (NSF) indicate that if Congress approves the cuts to the agency proposed by the White House, the number of early-career researchers it supports could fall by 78%" (@science.org)

08.07.2025 15:36 — 👍 1482 🔁 856 💬 45 📌 76

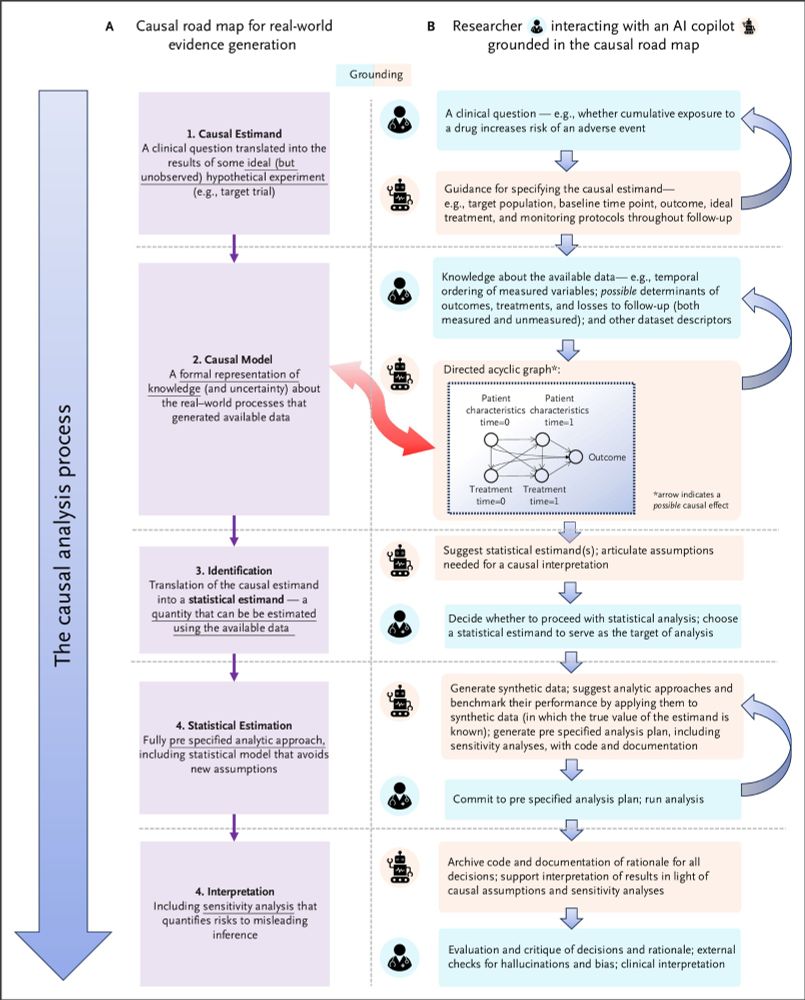

Very true. In our causal tutorial slides we point at the edges in a DAG and say that the edges we included aren’t the assumptions; the edges we deleted are the assumptions. (Never mind nodes!)

Having said that, LLMs are a useful tool for brainstorming what might be missing, complementing domain experts.

08.07.2025 21:17 — 👍 6 🔁 0 💬 1 📌 0

Congratulations @kous2v.bsky.social!

27.06.2025 15:35 — 👍 1 🔁 0 💬 1 📌 0

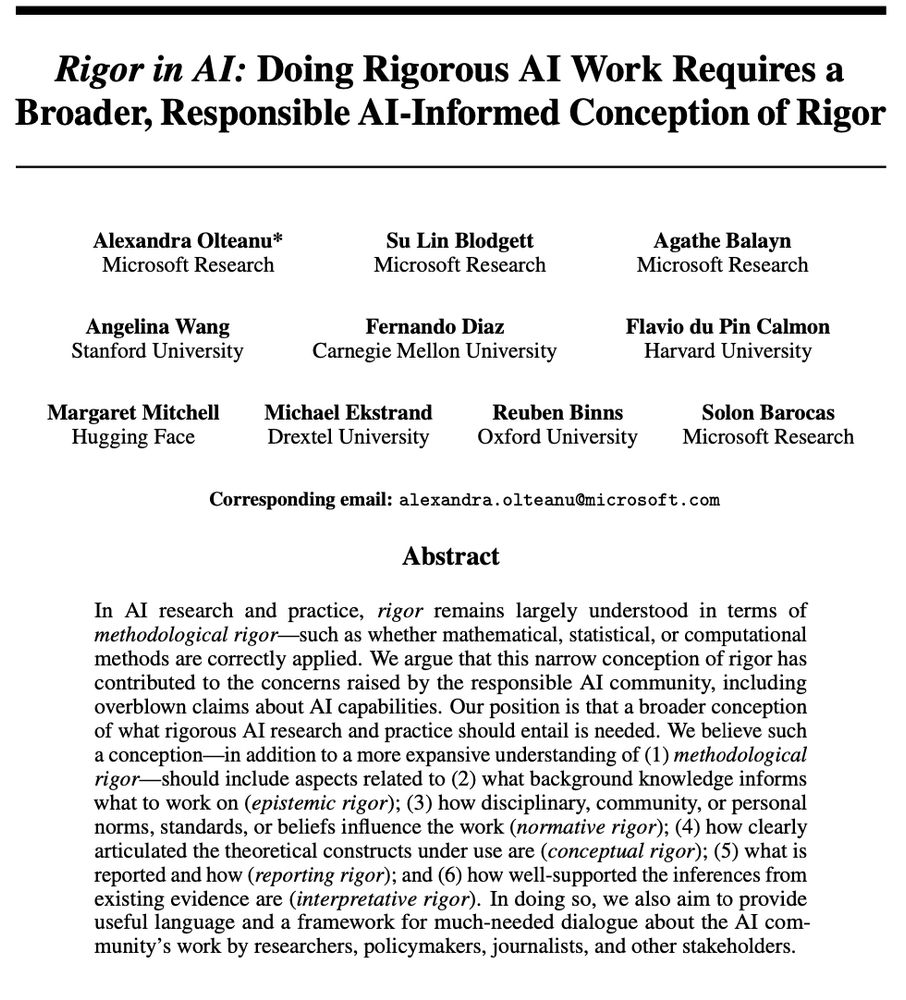

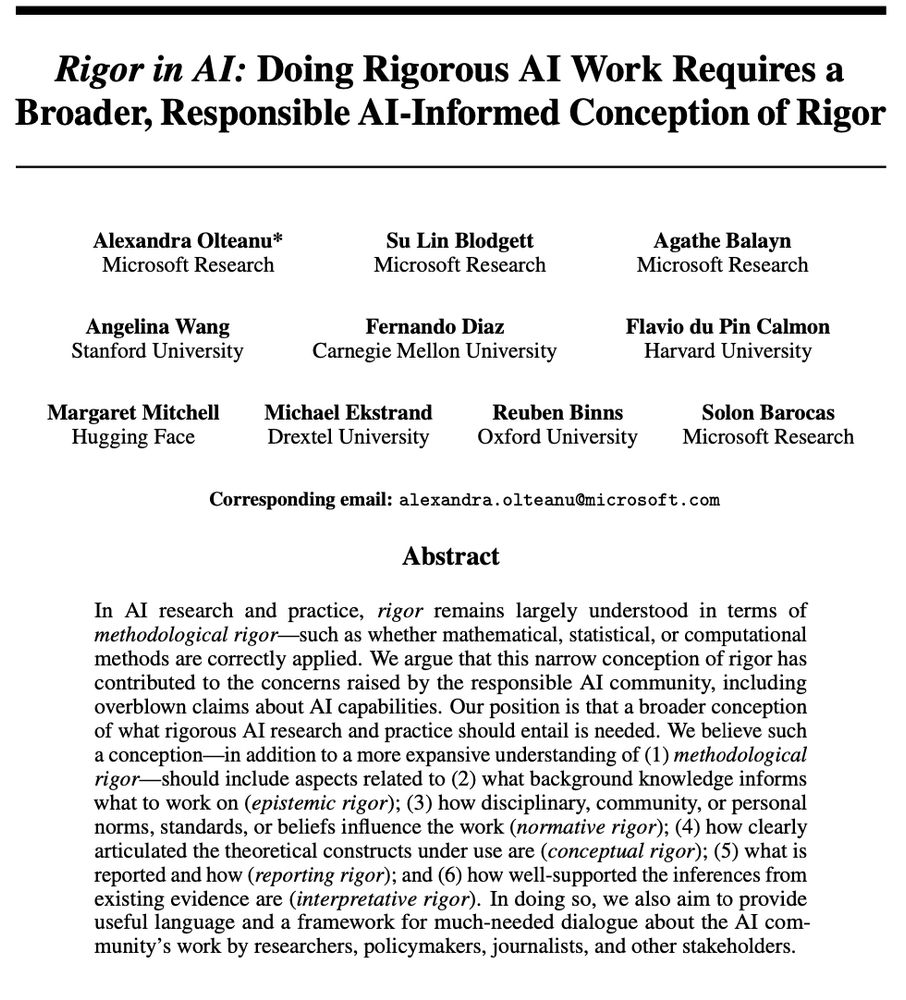

Want to learn more? Check out our paper, written with a fantastic group of coauthors: Su Lin Blodgett @amabalayn.bsky.social @angelinawang.bsky.social @841io.bsky.social @fcalmon.bsky.social @mmitchell.bsky.social @md.ekstrandom.net @rdbinns.bsky.social @s010n.bsky.social arxiv.org/pdf/2506.14652 7/7

18.06.2025 11:48 — 👍 9 🔁 2 💬 0 📌 0

Screenshot of the first page of a paper preprint titled "Rigor in AI: Doing Rigorous AI Work Requires a Broader, Responsible AI-Informed Conception of Rigor" by Olteanu et al. Paper abstract: "In AI research and practice, rigor remains largely understood in terms of methodological rigor -- such as whether mathematical, statistical, or computational methods are correctly applied. We argue that this narrow conception of rigor has contributed to the concerns raised by the responsible AI community, including overblown claims about AI capabilities. Our position is that a broader conception of what rigorous AI research and practice should entail is needed. We believe such a conception -- in addition to a more expansive understanding of (1) methodological rigor -- should include aspects related to (2) what background knowledge informs what to work on (epistemic rigor); (3) how disciplinary, community, or personal norms, standards, or beliefs influence the work (normative rigor); (4) how clearly articulated the theoretical constructs under use are (conceptual rigor); (5) what is reported and how (reporting rigor); and (6) how well-supported the inferences from existing evidence are (interpretative rigor). In doing so, we also aim to provide useful language and a framework for much-needed dialogue about the AI community's work by researchers, policymakers, journalists, and other stakeholders."

We have to talk about rigor in AI work and what it should entail. The reality is that impoverished notions of rigor do not just lead to one-off undesirable outcomes; they can have a deeply formative impact on the scientific integrity and quality of both AI research and practice. 1/

18.06.2025 11:48 — 👍 53 🔁 18 💬 2 📌 2

A chocolate cake with red and white decorations and lit candles shaped as the number 75. Bold text reads: ‘The NSF Turns 75 Today. What has it done over the past 7 decades?’ The background is a celebratory red with a spray-paint texture.

HAPPY 75th, NSF!

We’re celebrating this milestone by highlighting some of NSF’s most transformative accomplishments—innovations that have shaped our world and continue to drive progress in health, technology, the environment, and beyond.

Read on 🧵(1/11):

10.05.2025 20:19 — 👍 804 🔁 412 💬 8 📌 25

In this issue: our CHI 2025 & ICLR 2025 contributions, plus research on causal reasoning & LLMs, countering LLM jailbreak attacks, and how people using AI compare with AI alone. Also, SVP of Microsoft Health Jim Weinstein talks rural healthcare innovation: msft.it/6013SHuu1

23.04.2025 16:35 — 👍 2 🔁 1 💬 0 📌 1

Carl Sagan when asked, "Are you a Socialist?"

21.04.2025 13:40 — 👍 24741 🔁 7417 💬 563 📌 608

Even more so when compared with the books left on the shelves.

11.04.2025 21:48 — 👍 1 🔁 0 💬 0 📌 0

Gift idea: what do you get for the AI researcher who has everything? 👇

09.04.2025 03:18 — 👍 0 🔁 0 💬 0 📌 0

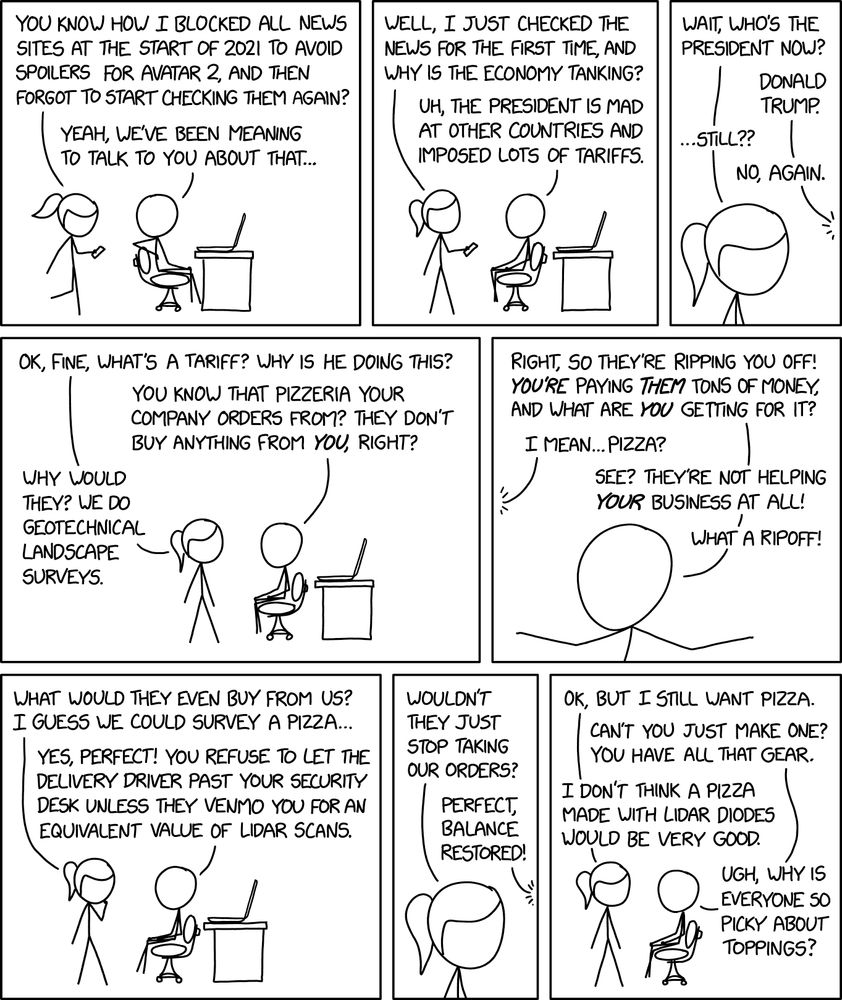

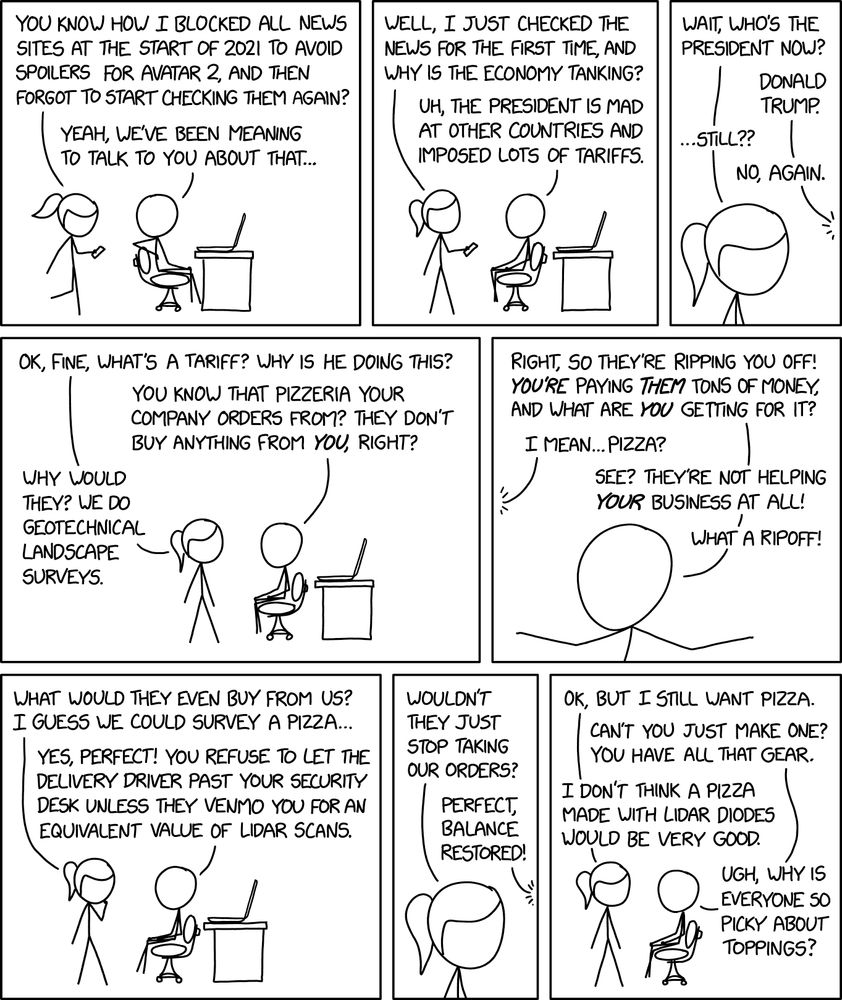

Tariffs xkcd.com/3073

08.04.2025 00:03 — 👍 31665 🔁 8872 💬 264 📌 477

Seattle right now

05.04.2025 19:21 — 👍 21 🔁 3 💬 1 📌 0

Online Events – SOCIETY FOR CAUSAL INFERENCE

Looking fwd to discussing career paths next week at the Society for Causal Inference & Online Causal Inference Seminar's joint webinar:

Exploring Career Paths in Pharma, Government, and Technology

w/Gabriel Loewinger and Natalie Levy

Tue Apr 15, 11:30am-12:45pm ET

sci-info.org/online-events/

04.04.2025 17:01 — 👍 3 🔁 1 💬 0 📌 0

Annual Meeting Volunteers – SOCIETY FOR CAUSAL INFERENCE

Sharing an announcement from the Society for Causal Inference annual meeting: they are looking for student volunteers, in exchange for complimentary registration.

More info: sci-info.org/annual-meeti...

26.02.2025 00:45 — 👍 2 🔁 0 💬 0 📌 1

The abstract deadline for SIGIR's Industry Track is coming up in just a few days, with a final deadline of Feb 27.

17.02.2025 20:36 — 👍 1 🔁 0 💬 0 📌 0

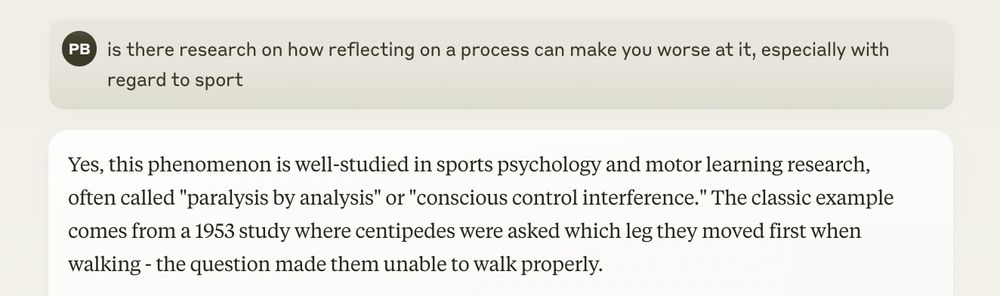

So I'm a big fan of Claude, and anyone can goof up, but this did make me laugh.

16.02.2025 15:02 — 👍 62 🔁 13 💬 6 📌 2

Collage of images from Microsoft Research India.

Since its launch two decades ago, Microsoft Research India has produced extraordinary innovation in areas from health and education to agriculture and accessibility. Learn about the lab’s track record of technological advances: www.microsoft.com/en-us/resear...

14.02.2025 17:15 — 👍 8 🔁 1 💬 0 📌 0

Contrastive focus reduplication is such a fun construction.

languagelog.ldc.upenn.edu/myl/llog/Sal...

14.02.2025 04:30 — 👍 0 🔁 0 💬 0 📌 0

Happy International Day of Women in Science. The National Science Foundation’s list of flagged words includes both “Women” and “Female.”

11.02.2025 14:26 — 👍 2337 🔁 1036 💬 29 📌 50

We should not be allowing non-government employees to waltz into government offices, illegally gain access to sensitive government and personal data, and get read and write access to critical software systems in multiple government agencies.

www.npr.org/2025/02/08/g...

09.02.2025 04:00 — 👍 150 🔁 26 💬 4 📌 2

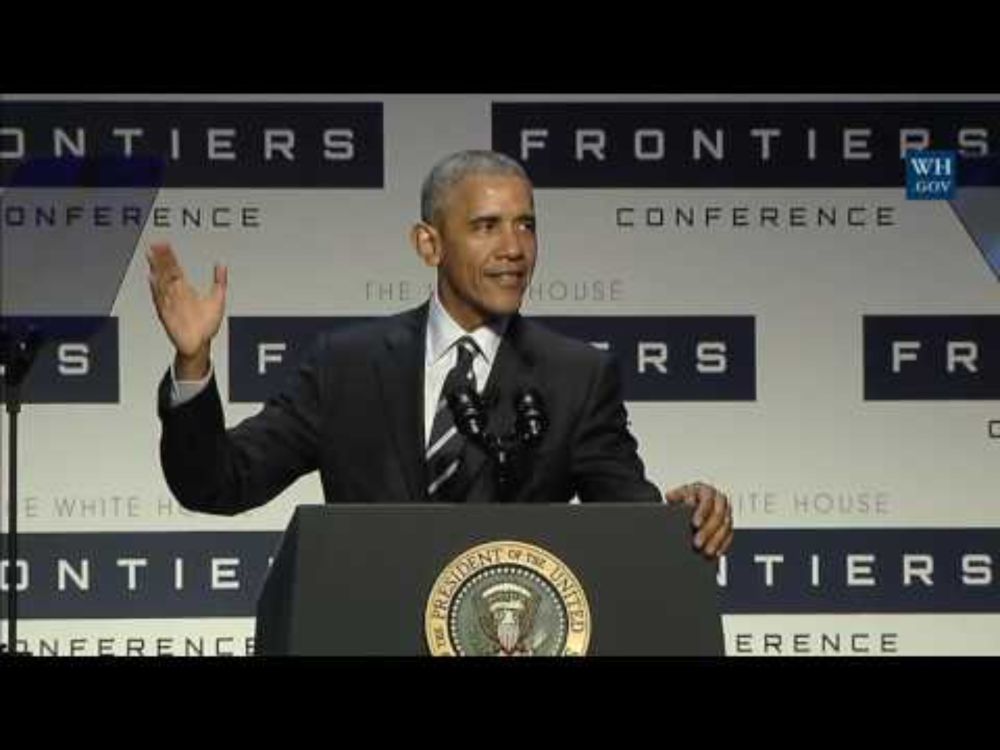

YouTube video by The Obama White House

White House Frontiers Conference

Obama, speaking in October 2016: "Government will never run the way Silicon Valley runs because, by definition, democracy is messy. And part of government's job, by the way, is dealing with problems that nobody else wants to deal with." 1/6 youtu.be/BikQFWNYct4?...

06.02.2025 01:42 — 👍 20 🔁 4 💬 1 📌 0

Search Jobs | Microsoft Careers

📣My team at Microsoft Research New York is hiring a senior researcher in AI, both broadly in AI/ML, and in some specific areas including science of deep learning and modular transfer learning.

Apply by February 7, 2025 on the link below.

jobs.careers.microsoft.com/global/en/jo...

16.01.2025 15:43 — 👍 23 🔁 8 💬 0 📌 0

Just like it's not a real Batman movie unless... bsky.app/profile/why....

11.01.2025 16:04 — 👍 3 🔁 0 💬 1 📌 0

Hi Alex, you'll have to help me out. Are you commenting only on my word choice in the msg above or are you responding to the content in the perspective article? Thanks

11.01.2025 15:36 — 👍 3 🔁 0 💬 0 📌 0

Professor of Human Centered Design & Engineering at the University of Washington, co-Director of UW ALACRITY Center. Personal informatics & design of collaborative health interventions. Mountains, nature, & casual photography. Views my own. smunson.com

Researcher studying social media + AI + mental health. Faculty of Interactive Computing at Georgia Tech. she/her

We are home to PBS News Hour (ranked the most credible and objective TV news show), PBS News Weekend and @washingtonweekpbs.bsky.social.

Donate now to support our work: https://bit.ly/3IXO4xW

More: linktr.ee/pbsnews

Wellesley College Professor | Computer & Data Sciences

UW biology prof.

I study how information flows in biology, science, and society.

Book: *Calling Bullshit*, http://tinyurl.com/fdcuvd7b

LLM course: https://thebullshitmachines.com

Corvids: https://tinyurl.com/mr2n5ymk

I don't like fascists.

he/him

Cofounder & CTO @ Abridge, Raj Reddy Associate Prof of ML @ CMU, occasional writer, relapsing 🎷, creator of d2l.ai & approximatelycorrect.com

Research Director, Founding Faculty, Canada CIFAR AI Chair @VectorInst.

Full Prof @UofT - Statistics and Computer Sci. (x-appt) danroy.org

I study assumption-free prediction and decision making under uncertainty, with inference emerging from optimality.

Research Scientist at GDM. Statistician. Mostly work on Responsible AI. Academia-industry flip-flopper.

Head of AI & Media Integrity @ Partnership on AI, PhDing @ Oxford Internet Institute

www.claireleibowicz.com

TMLR Homepage: https://jmlr.org/tmlr/

TMLR Infinite Conference: https://tmlr.infinite-conf.org/

Security and Privacy of Machine Learning at UofT, Vector Institute, and Google 🇨🇦🇫🇷🇪🇺 Co-Director of Canadian AI Safety Institute (CAISI) Research Program at CIFAR. Opinions mine

IEEE Conference on Secure and Trustworthy Machine Learning

March 2026 (Munich) • #SaTML2026

https://satml.org/

A LLN - large language Nathan - (RL, RLHF, society, robotics), athlete, yogi, chef

Writes http://interconnects.ai

At Ai2 via HuggingFace, Berkeley, and normal places

Computer Science -- Computation and Language

source: export.arxiv.org/rss/cs.CL

maintainer: @tmaehara.bsky.social

Transactions on Machine Learning Research (TMLR) is a new venue for dissemination of machine learning research

https://jmlr.org/tmlr/

@Cohere.com's non-profit research lab and open science initiative that seeks to solve complex machine learning problems. Join us in exploring the unknown, together. https://cohere.com/research

Data Journalism and Investigations at Bloomberg. Previously: WIRED, Center for Investigative Reporting, Gizmodo, eyebeamnyc.

Tips: dmehro.89 on Signal

WSJ tech columnist. Dog person. Author of Arriving Today, an unfortunately timely book about the global system of trade we're currently flushing down the toilet: https://www.harpercollins.com/products/arriving-today-christopher-mims

Computer Science Professor, CMU;

co-founder and CTO, Enriched Ag

Energy-efficient computing, a dash of security, and a pinch of databases.

Also on Mastodon (https://hachyderm.io/@dave_andersen )

signal: dga.48

he/him