🧵Excited to present our latest work at #Neurips25! Together with @avm.bsky.social, we discover 𝐜𝐡𝐚𝐧𝐧𝐞𝐥𝐬 𝐭𝐨 𝐢𝐧𝐟𝐢𝐧𝐢𝐭𝐲: regions in neural network loss landscapes where parameters diverge to infinity (in regression settings!)

We find that MLPs in these channels can take derivatives and compute GLUs 🤯

04.12.2025 17:26 — 👍 14 🔁 6 💬 2 📌 0
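The derivative/GLU claim can be illustrated numerically with a minimal sketch. It assumes a simplified "channel" in which two hidden neurons have input weights that converge (w and w + εv) while their output weights diverge with opposite signs (+c/ε and −c/ε). This is our own illustration of the general limit, not the paper's construction; the tanh activation and the names `w`, `v`, `c`, `eps` are hypothetical. As ε → 0 the pair's summed output approaches c·σ'(w·x)·(v·x): a directional derivative that has the form of a gated linear unit (a linear term v·x multiplied by a gate σ'(w·x)).

```python
import numpy as np

def sigma(z):
    # tanh as an example smooth activation (assumption)
    return np.tanh(z)

def dsigma(z):
    # derivative of tanh
    return 1.0 - np.tanh(z) ** 2

def neuron_pair(x, w, v, c, eps):
    """Summed output of two hidden neurons with input weights w and w + eps*v
    and output weights +c/eps and -c/eps (hypothetical channel)."""
    return (c / eps) * (sigma(x @ (w + eps * v)) - sigma(x @ w))

def glu_limit(x, w, v, c):
    """Limit eps -> 0: c * sigma'(w.x) * (v.x), a gated linear unit."""
    return c * dsigma(x @ w) * (x @ v)

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 3))  # 5 inputs of dimension 3
w = rng.normal(size=3)
v = rng.normal(size=3)
c = 1.5

# Output weights c/eps grow as eps shrinks, yet the function converges.
for eps in [1e-1, 1e-2, 1e-3]:
    gap = np.max(np.abs(neuron_pair(x, w, v, c, eps) - glu_limit(x, w, v, c)))
    print(f"eps={eps:g}  max |pair - GLU limit| = {gap:.2e}")
```

The gap shrinks roughly linearly in ε, since the leading error term is (c·ε/2)·σ''(w·x)·(v·x)².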

This was a lot of fun! From my side, it started with a technical Q: what's the relation between the two-site cavity method and path integrals? Turns out it's a fluctuation correction, and amazingly, this also enables the "O(N) rank" theory by @david-g-clark.bsky.social and @omarschall.bsky.social. 🤯

05.11.2025 09:15 — 👍 12 🔁 3 💬 0 📌 0

P4 52 “Coding Schemes in Non-Lazy Artificial Neural Networks” by @avm.bsky.social

30.09.2025 09:29 — 👍 2 🔁 0 💬 0 📌 0

WEDNESDAY 14:00 – 15:30

P4 25 “Rarely categorical, always high-dimensional: how the neural code changes along the cortical hierarchy” by @shuqiw.bsky.social

P4 35 “Biologically plausible contrastive learning rules with top-down feedback for deep networks” by @zihan-wu.bsky.social

30.09.2025 09:29 — 👍 4 🔁 0 💬 1 📌 0

WEDNESDAY 12:30 – 14:00

P3 4 “Toy Models of Identifiability for Neuroscience” by @flavioh.bsky.social

P3 55 “How many neurons is ‘infinitely many’? A dynamical systems perspective on the mean-field limit of structured recurrent neural networks” by Louis Pezon

30.09.2025 09:29 — 👍 0 🔁 0 💬 1 📌 0

P2 65 “Rate-like dynamics of spiking neural networks” by Kasper Smeets

30.09.2025 09:29 — 👍 0 🔁 0 💬 1 📌 0

TUESDAY 18:00 – 19:30

P2 2 “Biologically informed cortical models predict optogenetic perturbations” by @bellecguill.bsky.social

P2 12 “High-precision detection of monosynaptic connections from extra-cellular recordings” by @shuqiw.bsky.social

30.09.2025 09:29 — 👍 1 🔁 0 💬 1 📌 0

Lab members are at the Bernstein conference @bernsteinneuro.bsky.social with 9 posters! Here’s the list:

TUESDAY 16:30 – 18:00

P1 62 “Measuring and controlling solution degeneracy across task-trained recurrent neural networks” by @flavioh.bsky.social

30.09.2025 09:29 — 👍 9 🔁 3 💬 1 📌 0

New in @pnas.org: doi.org/10.1073/pnas...

We study how humans explore a 61-state environment with a stochastic region that mimics a “noisy-TV.”

Results: Participants keep exploring the stochastic part even when it’s unhelpful, and novelty-seeking best explains this behavior.

#cogsci #neuroskyence

28.09.2025 11:07 — 👍 97 🔁 36 💬 0 📌 3

🎉 "High-dimensional neuronal activity from low-dimensional latent dynamics: a solvable model" will be presented as an oral at #NeurIPS2025 🎉

Feeling very grateful that reviewers and chairs appreciated concise mathematical explanations, in this age of big models.

www.biorxiv.org/content/10.1...

1/2

19.09.2025 08:01 — 👍 110 🔁 23 💬 4 📌 4

Work led by Martin Barry with the supervision of Wulfram Gerstner and Guillaume Bellec @bellecguill.bsky.social

04.09.2025 16:00 — 👍 0 🔁 0 💬 0 📌 0

In experiments (models & simulations), we showed how this approach supports stable retention of old tasks while learning new ones (Split CIFAR-100, ASC…)

04.09.2025 16:00 — 👍 0 🔁 0 💬 1 📌 0

We designed a bio-inspired, context-specific gating of plasticity and neuronal activity that drastically reduces catastrophic forgetting.

We also show that our model is capable of both forward and backward transfer, all thanks to neuronal activity shared across tasks!

04.09.2025 16:00 — 👍 1 🔁 0 💬 1 📌 0

We designed a Gating/Availability model that, during learning, detects selective neurons (the neurons most useful for the task), shunts the activity of the others (Gating), and decreases the learning rate of task-selective neurons (Availability).

04.09.2025 16:00 — 👍 0 🔁 0 💬 1 📌 0

Context selectivity with dynamic availability enables lifelong continual learning

“You never forget how to ride a bike”, but how is that possible? The brain is able to learn complex skills, stop the practice for years, learn other…

🧠 “You never forget how to ride a bike”, but how is that possible?

Our study proposes a bio-plausible meta-plasticity rule that shapes synapses over time, enabling selective recall based on context

04.09.2025 16:00 — 👍 16 🔁 3 💬 1 📌 0

So happy to see this work out! 🥳

Huge thanks to our two amazing reviewers who pushed us to make the paper much stronger. A truly joyful collaboration with @lucasgruaz.bsky.social, @sobeckerneuro.bsky.social, and Johanni Brea! 🥰

Tweeprint on an earlier version: bsky.app/profile/modi... 🧠🧪👩‍🔬

25.08.2025 16:18 — 👍 38 🔁 13 💬 0 📌 0

Attending #CCN2025?

Come by our poster in the afternoon (4th floor, Poster 72) to talk about the sense of control, empowerment, and agency. 🧠🤖

We propose a unifying formulation of the sense of control and use it to empirically characterize the human subjective sense of control.

🧑‍🔬🧪🔬

13.08.2025 08:40 — 👍 10 🔁 1 💬 1 📌 1

YouTube video by Gerstner Lab

From Spikes To Rates

Is it possible to go from spikes to rates without averaging?

We show how to exactly map recurrent spiking networks into recurrent rate networks, with the same number of neurons. No temporal or spatial averaging needed!

Presented at Gatsby Neural Dynamics Workshop, London.

08.08.2025 15:25 — 👍 61 🔁 17 💬 2 📌 1

OSF

Excited to present at the PIMBAA workshop at #RLDM2025 tomorrow!

We study curiosity using intrinsically motivated RL agents and developed an algorithm to generate diverse, targeted environments for comparing curiosity drives.

Preprint (accepted but not yet published): osf.io/preprints/ps...

11.06.2025 20:09 — 👍 7 🔁 1 💬 0 📌 0

Our new preprint 👀

09.06.2025 19:32 — 👍 31 🔁 6 💬 0 📌 0

Interested in high-dim chaotic networks? Ever wondered about the structure of their state space? @jakobstubenrauch.bsky.social has answers - from a separation of fixed points and dynamics onto distinct shells to a shared lower-dim manifold and linear prediction of dynamics.

10.06.2025 19:45 — 👍 13 🔁 2 💬 0 📌 0

Episode #22 in #TheoreticalNeurosciencePodcast: On 50 years with the Hopfield network model - with Wulfram Gerstner

theoreticalneuroscience.no/thn22

John Hopfield received the 2024 Physics Nobel prize for his model published in 1982. What is the model all about? @icepfl.bsky.social

07.12.2024 08:24 — 👍 33 🔁 5 💬 0 📌 2

Brain models draw closer to real-life neurons

Researchers at EPFL have shown how rough, biological spiking neural networks can mimic the behavior of brain models called recurrent neural networks. The findings challenge traditional assumptions and...

A cool EPFL News article was written about our recent neurotheory paper on spikes vs rates!

Super engaging text by science communicator Nik Papageorgiou.

actu.epfl.ch/news/brain-m...

Definitely more accessible than the original physics-style, 4.5-page letter 🤓

journals.aps.org/prl/abstract...

22.01.2025 16:04 — 👍 26 🔁 9 💬 2 📌 0

Emergent Rate-Based Dynamics in Duplicate-Free Populations of Spiking Neurons

Can spiking neural networks (SNNs) approximate the dynamics of recurrent neural networks? Arguments in classical mean-field theory based on laws of large numbers provide a positive answer when each ne...

New round of spike vs rate?

The concentration of measure phenomenon can explain the emergence of rate-based dynamics in networks of spiking neurons, even when no two neurons are the same.

This is what's shown in the last paper of my PhD, out today in Physical Review Letters 🎉 tinyurl.com/4rprwrw5

06.01.2025 16:45 — 👍 26 🔁 8 💬 1 📌 0

Pre-print 🧠🧪

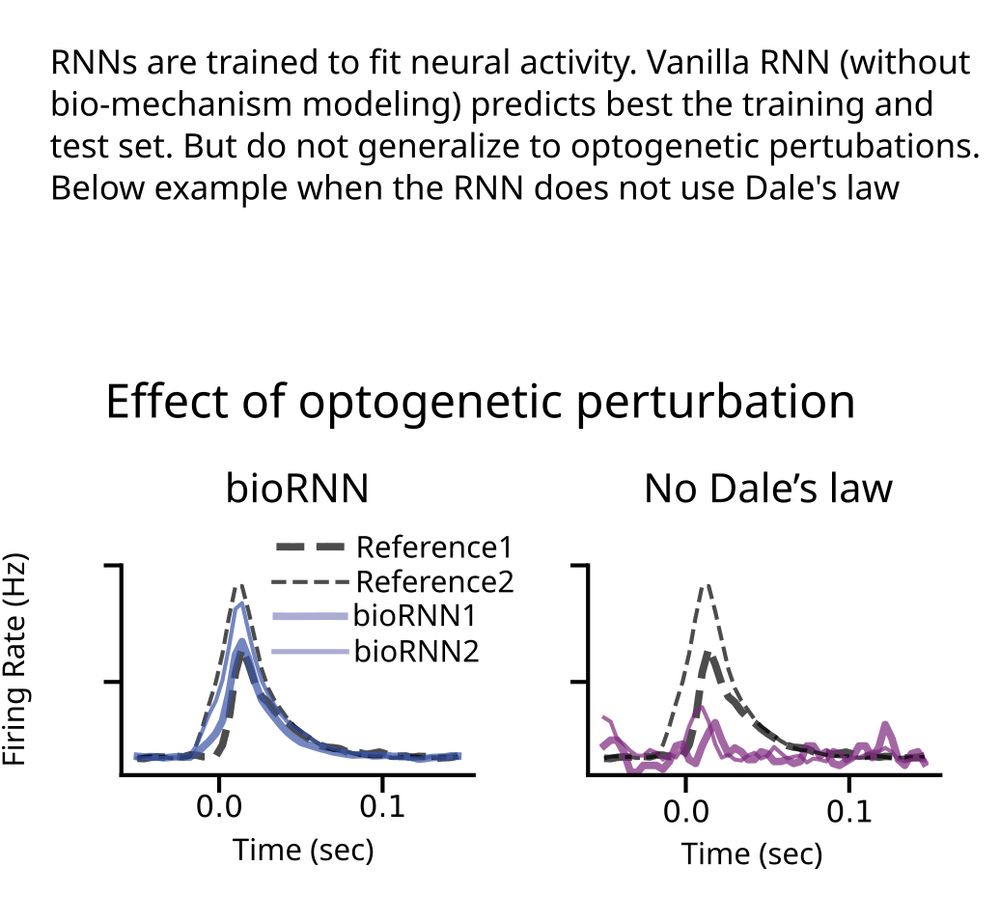

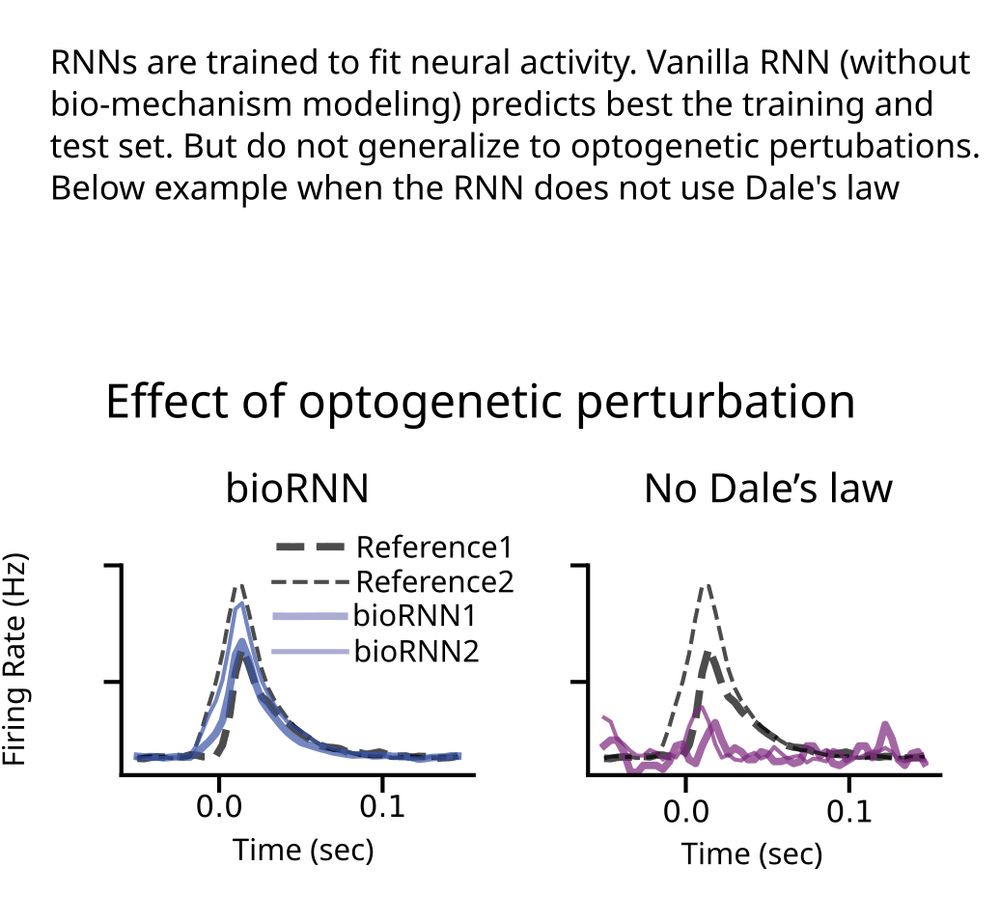

Is mechanism modeling dead in the AI era?

ML models trained to predict neural activity fail to generalize to unseen opto perturbations. But mechanism modeling can solve that.

We say "perturbation testing" is the right way to evaluate mechanisms in data-constrained models

1/8

08.01.2025 16:33 — 👍 126 🔁 48 💬 4 📌 3

Dedicated to helping neuroscientists stay current and build connections. Subscribe to receive the latest news and perspectives on neuroscience: www.thetransmitter.org/newsletters/

Workshop at #NeurIPS2025 aiming to connect machine learning researchers with neuroscientists and cognitive scientists by focusing on concrete, open problems grounded in emerging neural and behavioral datasets.

🔗 https://data-brain-mind.github.io

Neuroscientist. I use models and experiments to understand dendrites, their role in brain function and their potential to advance ML/AI.

Research Director at IMBB @imbb-forth.bsky.social and Head: www.dendrites.gr

Secretary General of FENS www.fens.org

Dr. @yiotapoirazi.bsky.social lab (https://dendrites.gr/) at @imbb-forth.bsky.social. We investigate the role of dendrites in learning and memory processes, using computational models, behavioral and imaging experiments.

Across many scientific disciplines, researchers in the Bernstein Network connect experimental approaches with theoretical models to explore brain function.

PhD candidate in computational neuroscience, university of Geneva.

Studying the dynamical properties of neural activity during speech processing.💻🧠

webpage: nosratullah.github.io

science. https://briandepasquale.github.io

Bluesky in Barcelona

Computational Neuroscience & Natural and Artificial Intelligence

Serra Hunter Professor

Center for Brain and Cognition, Universitat Pompeu Fabra

https://sites.google.com/view/morenobotecompneuro?pli=1

Co-Founder & CEO, Sakana AI 🎏 → @sakanaai.bsky.social

https://sakana.ai/careers

Computational & Systems Neuroscience (COSYNE) Conference

🧠🧠🧠

Next: Mar 27-April 1 2025, Montreal/Tremblant

🧠🧠🧠

Here too:

@CosyneMeeting@neuromatch.social

@CosyneMeeting on Twitter

Working towards the safe development of AI for the benefit of all at Université de Montréal, LawZero and Mila.

A.M. Turing Award Recipient and most-cited AI researcher.

https://lawzero.org/en

https://yoshuabengio.org/profile/

Cognitive neuroscientist.

Professor at College de France in Paris.

Head of the NeuroSpin brain imaging facility in Saclay.

President of the Scientific Council of the French national education ministry (CSEN)

Director of Helmholtz Institute for Human-Centered AI in Munich.

Cognitive science, machine learning, large models.

https://hcai-munich.com

Machine Learning Scientist and Social Entrepreneur | 🦓 https://cebra.ai | Group Leader at Helmholtz Munich https://dynamical-inference.ai/ | Co-Founder & CEO, https://ki-macht-schule.de/ | Co-Founder & CTO, https://kinematik.ai | @ellis.eu member

NeuroAI Prof @EPFL 🇨🇭. ML + Neuro 🤖🧠. Brain-Score, CORnet, Vision, Language. Previously: PhD @MIT, ML @Salesforce, Neuro @HarvardMed, & co-founder @Integreat. go.epfl.ch/NeuroAI

Machine Learning and Neuroscience Lab @ Uni Tuebingen, PI: Matthias Bethge, bethgelab.org

The Kempner Institute for the Study of Natural and Artificial Intelligence at Harvard University.

Associate Professor of Electrical Engineering, EPFL.

Amazon Scholar (AGI Foundations). IEEE Fellow. ELLIS Fellow.