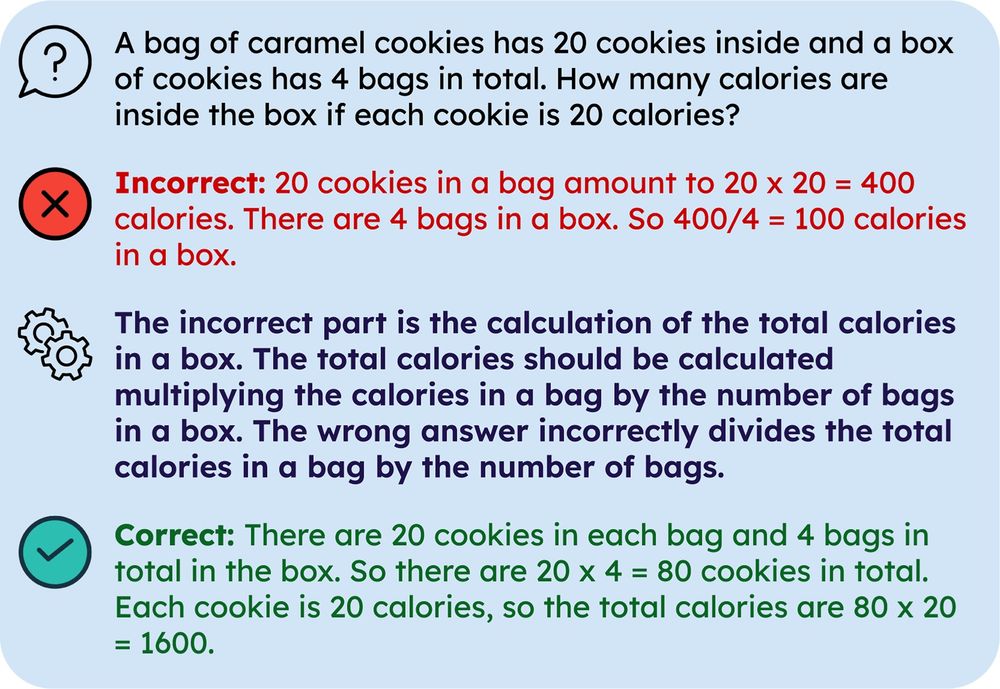

Do LLMs need rationales for learning from mistakes? 🤔

When LLMs learn from previous incorrect answers, they typically observe corrective feedback in the form of rationales explaining each mistake. In our new preprint, we find that these rationales do not help; in fact, they hurt performance!

🧵

13.02.2025 15:38 — 👍 21 🔁 9 💬 1 📌 3

Are you guys interested in research interns at this stage as well?

24.01.2025 00:39 — 👍 0 🔁 0 💬 1 📌 0

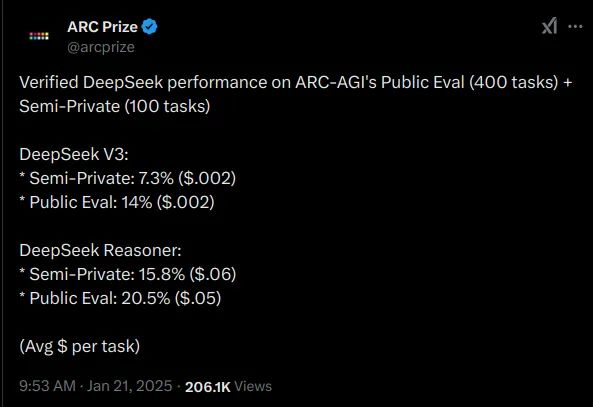

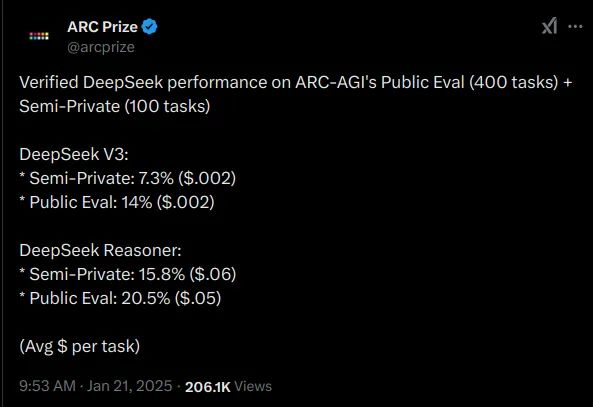

Not that you need another thread on Deepseek's R1, but I really enjoy these models, and it's great to see an *open*, MIT-licensed reasoner that's ~as good as OpenAI o1.

A blog post: itcanthink.substack.com/p/deepseek-r...

It's really very good at ARC-AGI, for example:

22.01.2025 22:01 — 👍 40 🔁 7 💬 2 📌 0

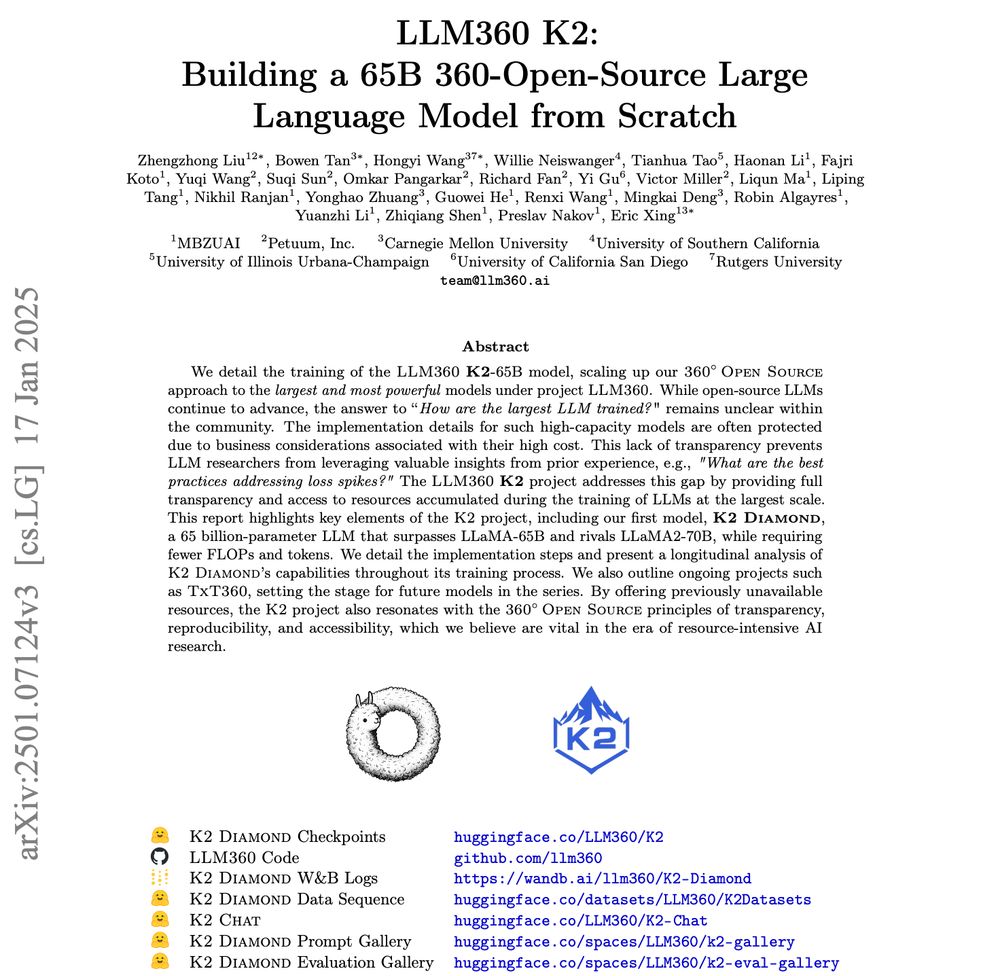

LLM360 gets way less recognition relative to the quality of their totally open outputs over the last year+. They dropped a 60+ page technical report last week, and I don't think I saw anyone talking about it. Along with OLMo, it's the other up-to-date open-source LM.

Paper: https://buff.ly/40I6s4d

23.01.2025 02:37 — 👍 45 🔁 5 💬 1 📌 0

Thank you for posting this work.

We are seeing very similar findings in LLM agent research.

Would anyone be interested in collaborating on reproducibility for that?

22.01.2025 10:36 — 👍 1 🔁 0 💬 1 📌 0

#NLP #LLMAgents Community, I have a question:

I have been running Webshop with older GPTs (e.g. gpt-3.5-turbo-1106 / -0125 / -instruct). On 5 different code repos (ReAct, Reflexion, ADaPT, StateAct), I am getting scores of 0%, whereas previously the scores were at ~15%.

Any thoughts, anyone?

21.01.2025 10:36 — 👍 0 🔁 1 💬 0 📌 0

Hey,

I work on LLM agents, if that qualifies. Please add me as well. Thanks.

28.11.2024 01:57 — 👍 0 🔁 0 💬 0 📌 0

Posting a call for help: does anyone know of a good way to simultaneously treat both POTS and Ménière’s disease? Please contact me if you’re either a clinician with experience doing this or a patient who has found a good solution. Context in thread

24.11.2024 16:34 — 👍 128 🔁 71 💬 15 📌 6

The OLMo 2 models sit at the Pareto frontier of training FLOPs vs model average performance.

Meet OLMo 2, the best fully open language model to date, including a family of 7B and 13B models trained on up to 5T tokens. OLMo 2 outperforms other fully open models and competes with open-weight models like Llama 3.1 8B. As always, we released our data, code, recipes and more 🎁

26.11.2024 20:51 — 👍 151 🔁 36 💬 5 📌 12

Hi there,

Please add me as well. I'm a PhD student working on LLM agents at Imperial College London.

25.11.2024 23:30 — 👍 0 🔁 0 💬 0 📌 0

That's really interesting. Perhaps it has its roots in the fact that our numerals come from the Arabic script?

What are your thoughts on that?

24.11.2024 11:37 — 👍 2 🔁 0 💬 2 📌 0

Hey, I would love to be added too. I work on LLM agents and previously worked on Bayesian exploration in RL.

24.11.2024 10:21 — 👍 1 🔁 0 💬 0 📌 0

Hey, thanks for the group. I would love to be added too. I'm a PhD student working on LLM agents at Imperial College London.

24.11.2024 10:15 — 👍 0 🔁 0 💬 0 📌 0

I would love to be in that one too :))

23.11.2024 19:50 — 👍 0 🔁 0 💬 0 📌 0

Pretty cool people are being added to the LLM Agent & LLM Reasoning group. Thanks @lisaalaz.bsky.social for suggesting @jhamrick.bsky.social @gabepsilon.bsky.social and others.

Feel free to mention yourself and others. :)

go.bsky.app/LUrLWXe

#LLMAgents #LLMReasoning

23.11.2024 19:36 — 👍 10 🔁 1 💬 9 📌 0

👍 ;)

23.11.2024 19:33 — 👍 1 🔁 0 💬 0 📌 0

Definitely, done.

23.11.2024 19:33 — 👍 1 🔁 0 💬 0 📌 0

Done :)

23.11.2024 19:32 — 👍 0 🔁 0 💬 0 📌 0

Sure thing.

21.11.2024 19:38 — 👍 1 🔁 0 💬 0 📌 0

Thanks, done.

21.11.2024 19:38 — 👍 1 🔁 0 💬 0 📌 0

Done :)

21.11.2024 19:38 — 👍 1 🔁 0 💬 0 📌 0

#EMNLP2024 was a fun time to reconnect with old friends and meet new ones! Reflecting on the conference program and in-person discussions, I believe we're seeing the "Google Moment" of #IR research playing out in #NLProc.

1/n

21.11.2024 13:38 — 👍 15 🔁 3 💬 1 📌 0

I thought I'd create a starter pack for people working on LLM agents. Please feel free to self-refer as well.

go.bsky.app/LUrLWXe

#LLMAgents #LLMReasoning

20.11.2024 14:08 — 👍 15 🔁 5 💬 11 📌 0

Meta-Reasoning Improves Tool Use in Large Language Models

External tools help large language models (LLMs) succeed at tasks where they would otherwise typically fail. In existing frameworks, LLMs learn tool use either by in-context demonstrations or via full...

Hi Bluesky, would like to introduce myself 🙂

I am PhD-ing at Imperial College under @marekrei.bsky.social’s supervision. I am broadly interested in LLM/LVLM reasoning & planning 🤖 (here’s our latest work arxiv.org/abs/2411.04535)

Do reach out if you are interested in these (or related) topics!

20.11.2024 11:26 — 👍 40 🔁 3 💬 0 📌 0

Welcome to Bluesky to more of our NLP researchers at Imperial!! Looking forward to following everyone's work on here.

To follow us all, click 'follow all' in the starter pack below.

go.bsky.app/Bv5thAb

20.11.2024 08:35 — 👍 20 🔁 7 💬 3 📌 0

We are a joint partnership of the University of Tübingen and the Max Planck Institute for Intelligent Systems. We aim to develop robust learning systems and societally responsible AI. https://tuebingen.ai/imprint

https://tuebingen.ai/privacy-policy#c1104

Professor, University of Tübingen @unituebingen.bsky.social.

Head of Department of Computer Science 🎓.

Faculty, Tübingen AI Center 🇩🇪 @tuebingen-ai.bsky.social.

ELLIS Fellow, Founding Board Member 🇪🇺 @ellis.eu.

CV 📷, ML 🧠, Self-Driving 🚗, NLP 🖺

ML PhD Student @ Uni. of Edinburgh, working on Multi-Agent Problems. | Organiser @deeplearningindaba.bsky.social @rl-agents-rg.bsky.social | 🇪🇹🇿🇦

kaleabtessera.com

Mathematician at UCLA. My primary social media account is https://mathstodon.xyz/@tao . I also have a blog at https://terrytao.wordpress.com/ and a home page at https://www.math.ucla.edu/~tao/

PhD candidate at NYU

lexipalmer13.github.io/

Assistant Professor of Sociology, NYU. Core Faculty, CSMaP. Research Fellow Oxford Sociology. Computational social science, Methods, Conflict, Communication. Webpage: cjbarrie.com

PhD supervised by Tim Rocktäschel and Ed Grefenstette, part time at Cohere. Language and LLMs. Spent time at FAIR, Google, and NYU (with Brenden Lake). She/her.

Sentence Transformers, SetFit & NLTK maintainer

Machine Learning Engineer at 🤗 Hugging Face

Ginni Rometty Prof @NorthwesternCS | Fellow @NU_IPR | Uncertainty + decisions | Humans + AI/ML | Blog @statmodeling

Research Scientist at DeepMind. Opinions my own. Inventor of GANs. Lead author of http://www.deeplearningbook.org . Founding chairman of www.publichealthactionnetwork.org

San Diego Dec 2-7, 25 and Mexico City Nov 30-Dec 5, 25. Comments to this account are not monitored. Please send feedback to townhall@neurips.cc.

Building AI Agent Marketplace and Landscape Map

https://aiagentsdirectory.com/landscape

Breakthrough AI to solve the world's biggest problems.

› Join us: http://allenai.org/careers

› Get our newsletter: https://share.hsforms.com/1uJkWs5aDRHWhiky3aHooIg3ioxm

I like tokens! Lead for OLMo data at @ai2.bsky.social (Dolma 🍇) w @kylelo.bsky.social. Open source is fun 🤖☕️🍕🏳️🌈 Opinions are sampled from my own stochastic parrot

more at https://soldaini.net

Research Scientist Meta/FAIR, Prof. University of Geneva, co-founder Neural Concept SA. I like reality.

https://fleuret.org

Canadian in Taiwan. Emerging tech writer and analyst with a flagship newsletter called A.I. Supremacy, reaching 115k readers.

Also watching Semis, China, robotics, Quantum, BigTech, open-source AI and Gen AI tools.

https://www.ai-supremacy.com/archive

AI researcher at Google DeepMind. Synthesized views are my own.

📍SF Bay Area 🔗 http://jonbarron.info

This feed is a partial mirror of https://twitter.com/jon_barron

PhD-ing Clip@UMD

https://houyu0930.github.io/

Senior Research Scientist @MBZUAI. Focused on decision making under uncertainty, guided by practical problems in healthcare, reasoning, and biology.

PhD Student at the ILLC / UvA doing work at the intersection of (mechanistic) interpretability and cognitive science. Current Anthropic Fellow.

hannamw.github.io