New working paper 🚨🚨🚨

What was the origin of modern economic growth?

Joel Mokyr had a Nobel-winning answer: growth took off when science and technology began to reinforce each other.

But can we test this quantitatively?

This paper does so. Read more ⬇️ 🧵

19.12.2025 13:47

Too bad. But a good sign for the game. 😃

16.11.2025 12:23

Have you played it? What are your first impressions?

16.11.2025 11:31

🧵 1/

🚨 New paper out in PLOS ONE! w/ @caropradier.bsky.social @benzpierre.bsky.social @natsush.bsky.social @ipoga.bsky.social @lariviev.bsky.social

We studied 43k authors and 264k citation links in U.S. economics to ask:

👉 Why do some papers cite others?

🔗 journals.plos.org/plosone/arti...

27.10.2025 18:06

That being said, I'm looking forward to the insights from the 'referee consensus model'.

2/2

28.07.2025 09:38

Not very surprising to me, as in traditional journal peer review, the editor(s) are expected to reconcile views among individual referees. This provides an additional perspective while keeping the decision-making power with the editor(s).

1/2

28.07.2025 09:38

😊

04.07.2025 08:38

We often have to judge who is knowledgeable—precisely when we are not. Can humans really do that? Our new paper in Psychological Science shows that, surprisingly, we can. drive.google.com/file/d/1b15E...

02.06.2025 11:42

There is a large literature on grant peer review but afaik nobody has looked at review scores like you have. Interesting!

09.05.2025 19:52

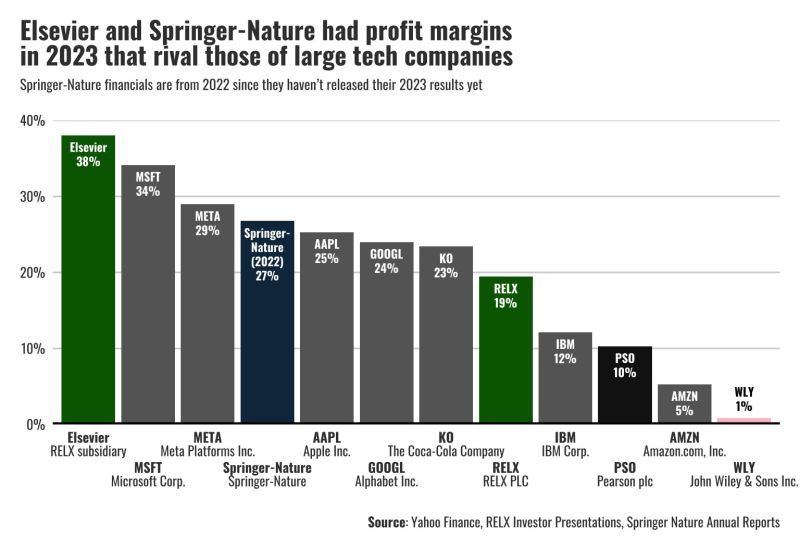

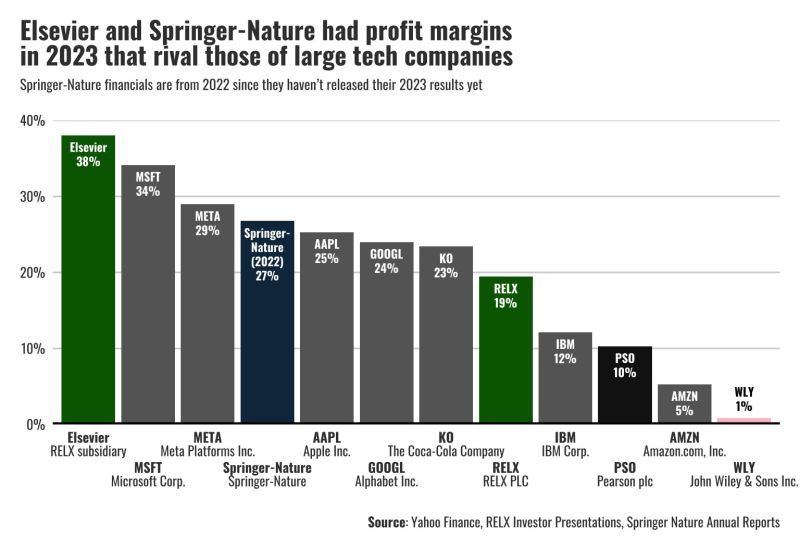

Who says science doesn't generate profit?

24.04.2025 01:38

Research Topic Choice: Motivations, Strategies, and Consequences

Abstract. Scientists’ choices of what research topics to pursue are highly consequential and have been the subject of many studies. However, these studies are dispersed across several fields and liter...

How do scientists choose which topic to study?

Decades of studies on this, but they're dispersed across fields and not synthesized. Fortunately for us, Sidney (@sdxiang.bsky.social) has written a fantastic review of this literature, focusing on the econ and soc lit!

direct.mit.edu/qss/article/...

30.03.2025 23:26

Thanks for the quick and clear answer! 👍

08.03.2025 10:59

Why would you want a package solution? Why not?

08.03.2025 09:14

Out now in Nature Human Behaviour: Our 68-country #survey on public attitudes to #science 📣

It shows: People still #trust scientists and support an active role of scientists in society and policy-making. #OpenAccess available here: www.nature.com/articles/s41... @natureportfolio.bsky.social

(1/13)

20.01.2025 10:27

Screenshot of paper "Open Science at the generative AI turn: An exploratory analysis of challenges and opportunities" by Mohammad Hosseini, Serge P. J. M. Horbach, Kristi Holmes and Tony Ross-Hellauer

Quantitative Science Studies 1–24.

https://doi.org/10.1162/qss_a_00337

Abstract

Technology influences Open Science (OS) practices, because conducting science in transparent, accessible, and participatory ways requires tools and platforms for collaboration and sharing results. Due to this relationship, the characteristics of the employed technologies directly impact OS objectives. Generative Artificial Intelligence (GenAI) is increasingly used by researchers for tasks such as text refining, code generation/editing, reviewing literature, and data curation/analysis. Nevertheless, concerns about openness, transparency, and bias suggest that GenAI may benefit from greater engagement with OS. GenAI promises substantial efficiency gains but is currently fraught with limitations that could negatively impact core OS values, such as fairness, transparency, and integrity, and may harm various social actors. In this paper, we explore the possible positive and negative impacts of GenAI on OS. We use the taxonomy within the UNESCO Recommendation on Open Science to systematically explore the intersection of GenAI and OS. We conclude that using GenAI could advance key OS objectives by broadening meaningful access to knowledge, enabling efficient use of infrastructure, improving engagement of societal actors, and enhancing dialogue among knowledge systems. However, due to GenAI’s limitations, it could also compromise the integrity, equity, reproducibility, and reliability of research. Hence, sufficient checks, validation, and critical assessments are essential when incorporating GenAI into research workflows.

1/ 🚨 NEW PAPER! “Open Science at the Generative AI Turn”

In a new study just published in Quantitative Science Studies, we explore how GenAI both enables and challenges Open Science, and why GenAI will benefit from adopting Open Science values. 🧵

doi.org/10.1162/qss_...

#OpenScience #AI #GenAI

17.12.2024 10:34

Renovating the Theatre of Persuasion. ManyLabs as Collaborative Prototypes for the Production of Credible Knowledge | Preprint screenshot

Renovating the Theatre of Persuasion. ManyLabs as Collaborative Prototypes for the Production of Credible Knowledge; a new preprint & thread.

In it, I'll say a little about theatres of persuasion, and why new collaborative structures change how they look osf.io/preprints/me... #sts #metascience 1/

03.12.2024 09:12

😂🤣

28.11.2024 21:36

Does training of peer reviewers work?

"Evidence from 10 RCTs suggests that training peer reviewers may lead to little or no improvement in the quality of peer review."

Cochrane systematic review 🔓: www.cochranelibrary.com/cdsr/doi/10....

28.11.2023 18:04

With the new influx of users, I'd love to see a community around #sciencepolicy #scipol #bibliometrics #scientometrics #scisci #metascience. Please share if you want to be part of it, use the tags to find others, or just say hi 👋

21.11.2024 21:13

OSF

It's raining preprints, hallelujah 🎶

Here is my latest preprint (review article) >> Sustaining the ‘frozen footprints’ of scholarly communication through open citations

osf.io/preprints/so...

21.11.2024 10:34

Hello Bart! 👋🏻

Taking the opportunity to express my appreciation for your research - and for contributing to the beautiful blue place! 🦋

14.11.2024 19:06

(6) design and test interventions to make peer review less conservative,

(7) assess whether these interventions make a real difference to scientific progress.

15.02.2024 22:12