We’re running a Q&A series with our research team. First up:

How is Monty different from a Vision Transformer?

Here’s why learning through movement changes everything 👇

youtube.com/shorts/ff4Xi...

@thousandbrains.org.bsky.social

Advancing AI & robotics by reverse engineering the neocortex. Leveraging sensorimotor learning, structured reference frames, & cortical modularity. Open-source research backed by Jeff Hawkins & Gates Foundation. Explore thousandbrains.org

🚀 Watch @vivianeclay.bsky.social present our new paper “Hierarchy or Heterarchy?” revealing a new part of the Thousand Brains Theory describing how long‑range cortical & thalamic connections work in parallel and hierarchically to build human intelligence.

youtu.be/QIoENhFu2VU

What if we've been building AI completely wrong? While tech giants burn billions training on internet data, @thousandbrains.org just created an AI that learns like a child by exploring and touching objects. It’s the real path to intelligence: gregrobison.medium.com/hands-on-int...

16.07.2025 18:47

🎥 Watch @cortical-canonical.bsky.social present our new paper:

"Thousand-Brains Systems: Sensorimotor Intelligence for Rapid, Robust Learning and Inference"

Watch the full talk here: youtu.be/3d4DmnODLnE

Sensorimotor learning implemented from 20 years of neocortex research.

10/

Thousand Brains Project is an open-source, open-research nonprofit building neocortex-based AI.

Join us on our journey.

Forum: thousandbrains.discourse.group

Roadmap: thousandbrainsproject.readme.io/docs/project...

Website: thousandbrains.org

Docs: thousandbrainsproject.readme.io

9/

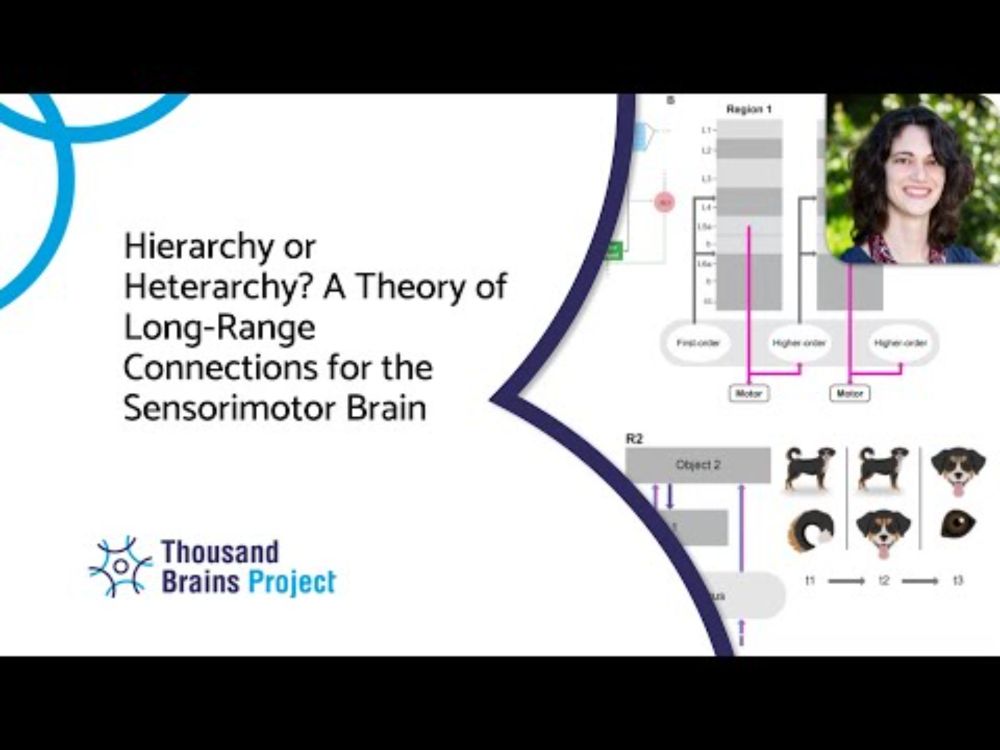

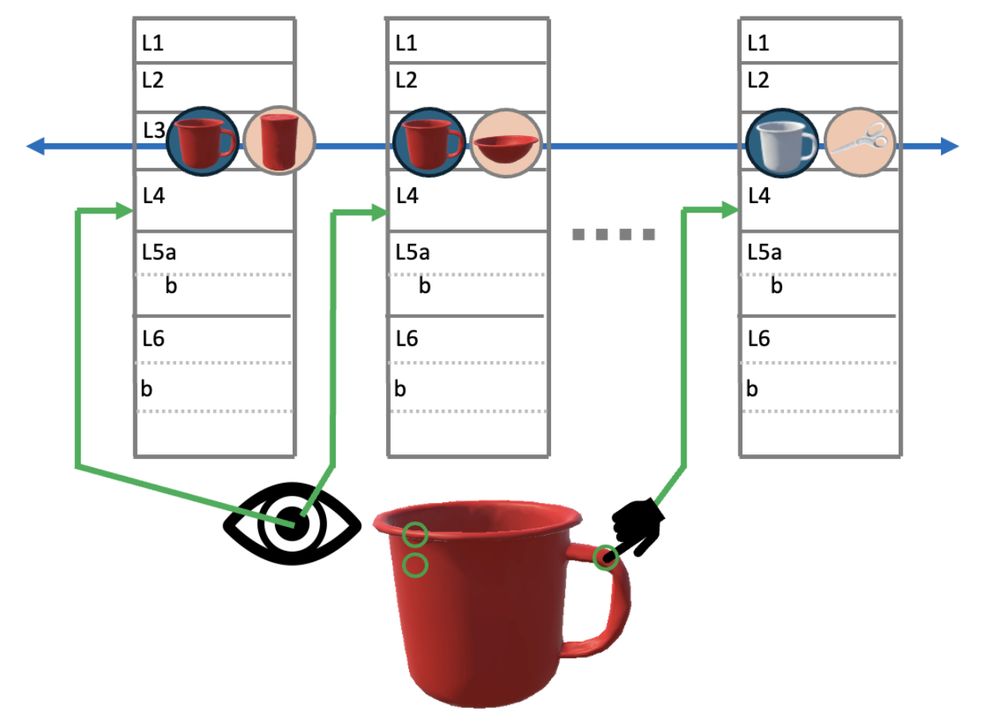

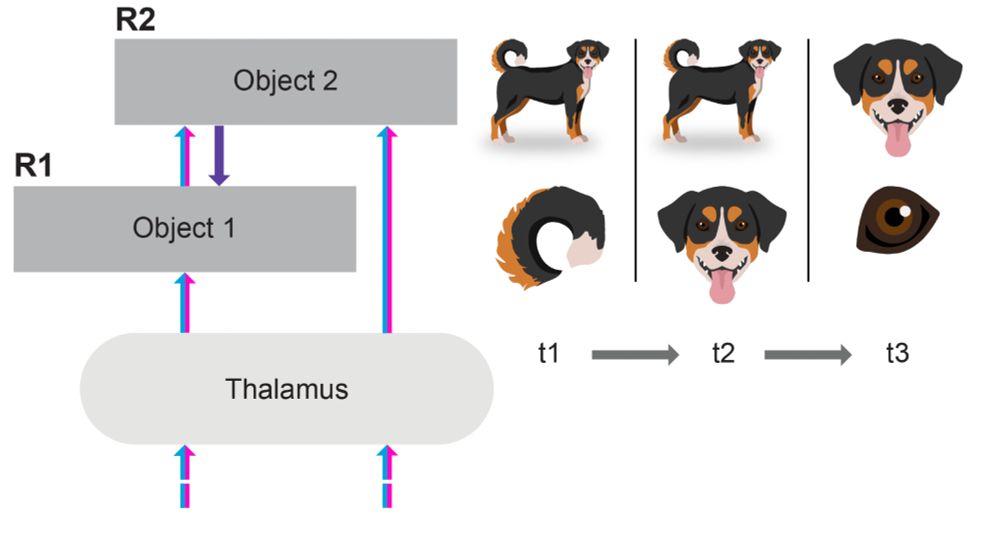

Hierarchical connections are used to learn compositional models.

A new mug with a known logo means that you don’t relearn either one. Your brain composes: “mug” + “logo.” Columns at different levels of the hierarchy, but with overlapping receptive fields, link their representations spatially.

8/

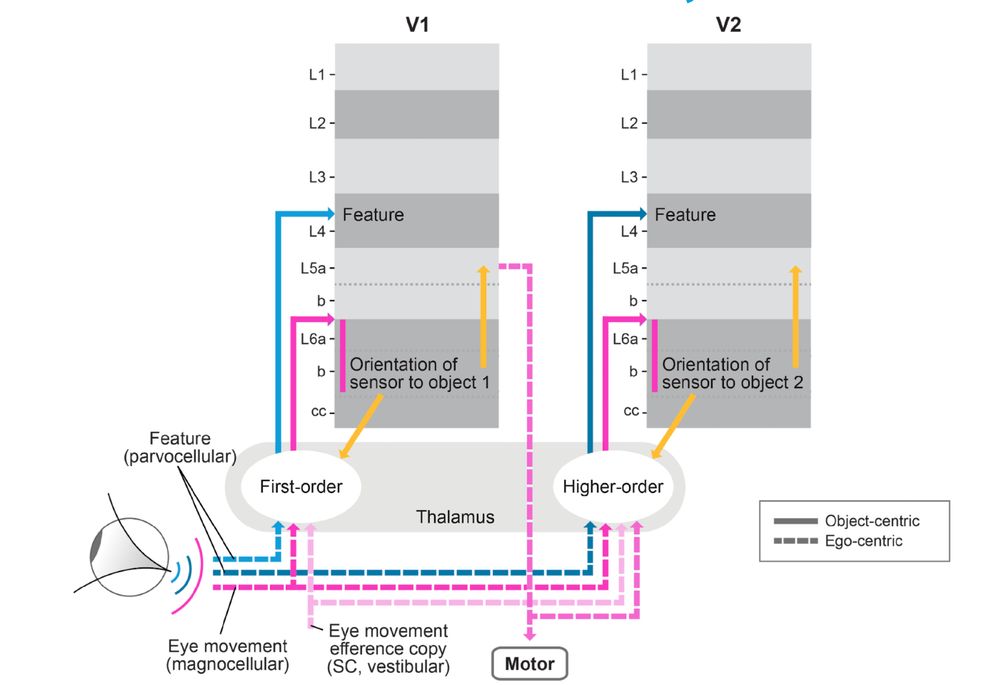

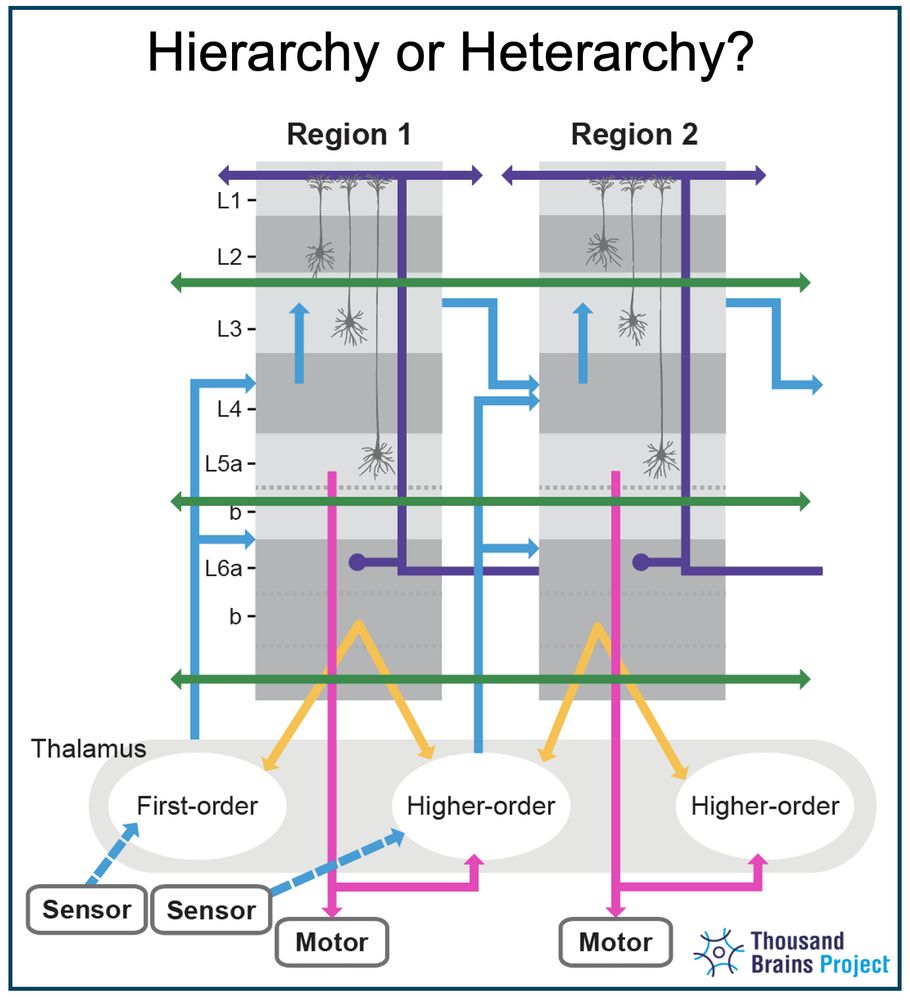

So what’s the thalamus doing?

It’s not just a relay. It helps convert sensory input from body-centered to object-centered coordinates. Essential for modeling the world via reference frames.

7/

Neocortical columns don’t think in egocentric space, “left of hand.” They think in object-centric space, “on the handle.” The thalamus helps do this translation. This is a key new proposal about the role of the thalamus.
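To make the egocentric-to-object-centric idea concrete, here is a minimal 2D sketch. It assumes the object's pose relative to the body (an origin and a rotation angle) is already known, and it uses a plain inverse rigid transform. The function and parameter names are hypothetical and not taken from the paper or from Monty's code.

```python
import math


def body_to_object(point_body, object_origin_body, object_angle_rad):
    """Express a 2D point sensed in body coordinates in the object's own frame.

    Illustrative assumption: the object's pose relative to the body is known.
    This applies the inverse rigid transform R^T (p - t).
    """
    dx = point_body[0] - object_origin_body[0]
    dy = point_body[1] - object_origin_body[1]
    c, s = math.cos(object_angle_rad), math.sin(object_angle_rad)
    # Rotate the offset by -angle to undo the object's rotation.
    return (c * dx + s * dy, -s * dx + c * dy)


# The same spot on a handle, touched with the mug in two different poses.
upright = body_to_object((6.0, 5.0), (5.0, 5.0), 0.0)
rotated = body_to_object((2.0, 2.0), (2.0, 1.0), math.pi / 2)
print(upright, rotated)  # both are approximately (1.0, 0.0)
```

The body-centered coordinates differ between the two touches, but the object-centered location ("on the handle") stays the same, which is what makes a reference frame useful for modeling.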

6/

The Thousand Brains Theory already outlined several key proposals:

Each column builds its own model.

They vote.

They form consensus.

No need to wait for a final decision at the top.
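A toy sketch of what "they vote, they form consensus" could look like. The thread does not specify the voting rule, so summing per-object evidence across columns is an assumption made purely for illustration, and all names and numbers are hypothetical.

```python
from collections import defaultdict


def vote(column_evidence):
    """Sum each column's evidence per object and return the consensus.

    Assumption: evidence is a dict of object name -> score, and consensus
    is the object with the highest summed score.
    """
    totals = defaultdict(float)
    for evidence in column_evidence:
        for obj, score in evidence.items():
            totals[obj] += score
    winner = max(totals, key=totals.get)
    return winner, dict(totals)


# Three columns, each with its own (made-up) model-based evidence.
columns = [
    {"mug": 0.6, "bowl": 0.4},
    {"mug": 0.5, "bowl": 0.5},
    {"mug": 0.7, "can": 0.3},
]
consensus, totals = vote(columns)
print(consensus)
```

No single column sits "at the top" here: every column contributes its own hypothesis, and the agreement emerges from the pooled evidence.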

5/

This paper argues that the neocortex is not a strict hierarchy. It has many non-hierarchical connections, each of which serves an essential role in modeling and interacting with the world.

4/

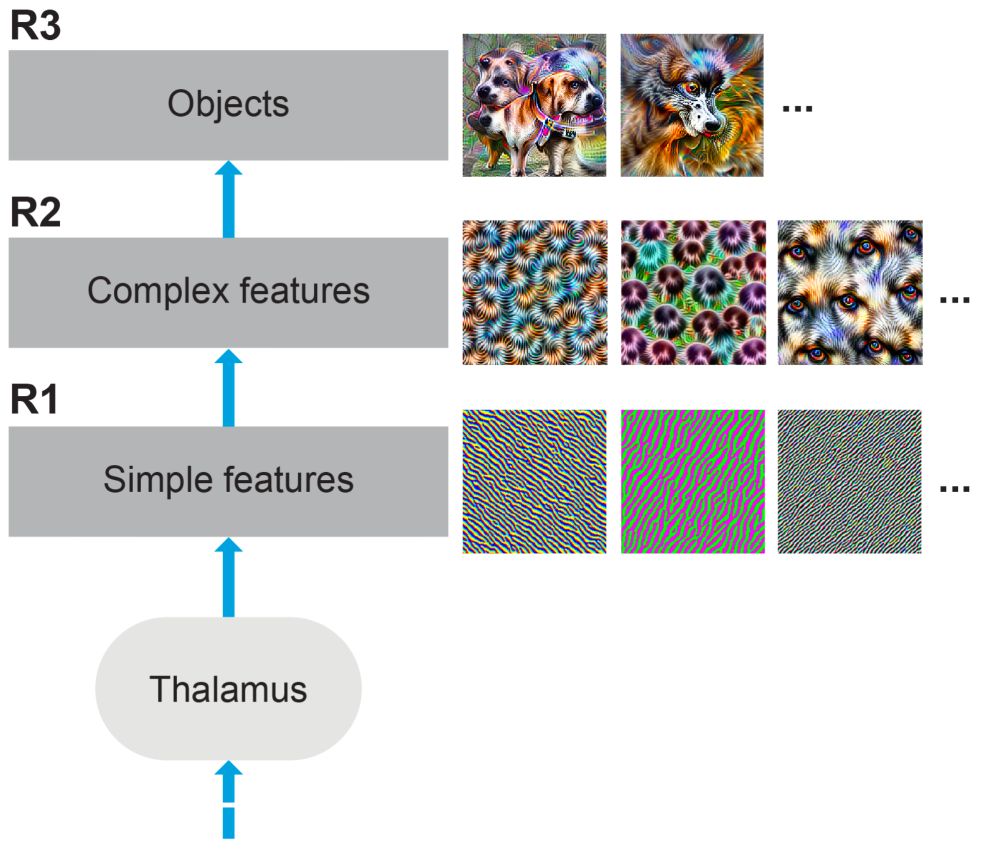

Our new view recasts cortical hierarchy as composition, not feature extraction. Hierarchy is used to combine known parts into new wholes.

an incomplete view of how the neocortex uses hierarchy

3/

Classically, the neocortex is viewed as a hierarchy: low levels detect edges, high levels recognize objects. But that model is incomplete. There are several other important connections that most people overlook, and they are crucial for sensorimotor intelligence.

2/

TL;DR: Watch @viviane give a presentation about this paper here: youtu.be/QIoENhFu2VU

Or read the plain language explainer here: thousandbrains.medium.com/hierarchy-or...

1/

🚨Another New Paper Drop! 🚨 “Hierarchy or Heterarchy? A Theory of Long-Range Connections for the Sensorimotor Brain”

👇 Dive into the full thread 🧵

arxiv.org/abs/2507.05888

🔥 Want to understand how the neocortex builds intelligence?

Artem Kirsanov made a great video on the Thousand Brains Theory, the foundation of everything we’re building here at the Thousand Brains Project!

🎥 youtu.be/Dykkubb-Qus

#Neuroscience #AI #ThousandBrains #Neocortex

/16

Thousand Brains Project is an open-source, open-research nonprofit building a new type of machine intelligence based on principles of the neocortex.

Join us:

Forum: thousandbrains.discourse.group

Roadmap: thousandbrainsproject.readme.io/docs/project...

Docs: thousandbrainsproject.readme.io

/15

💾 Open source

• Replicate our results: github.com/thousandbrai...

• Code & docs: github.com/thousandbrai...

Fork it, break it, improve it.

/14

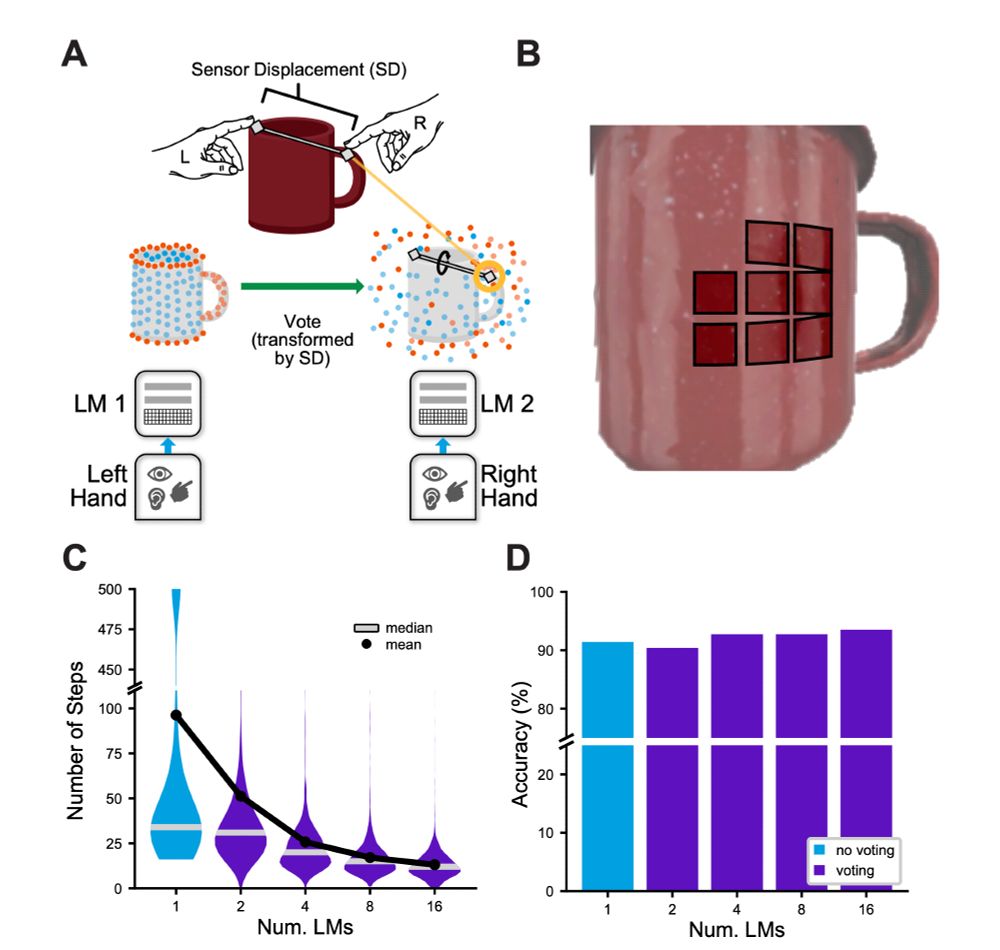

Voting across modules

Multiple learning modules share hypotheses; consensus arrives >2× faster without losing accuracy. Imagine two eyes, two fingers, or a sensor grid collaborating in real time.

/13

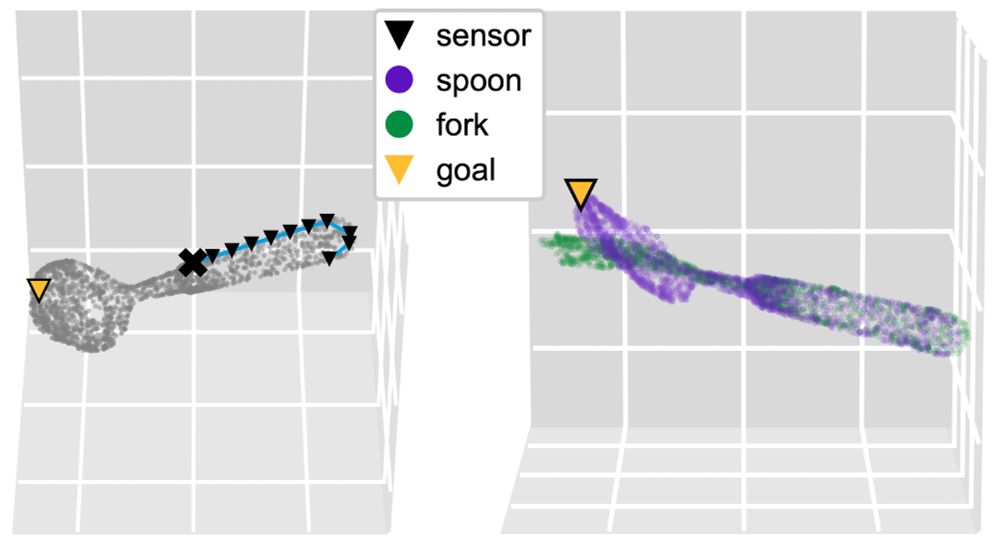

🏃‍♀️ Movement matters

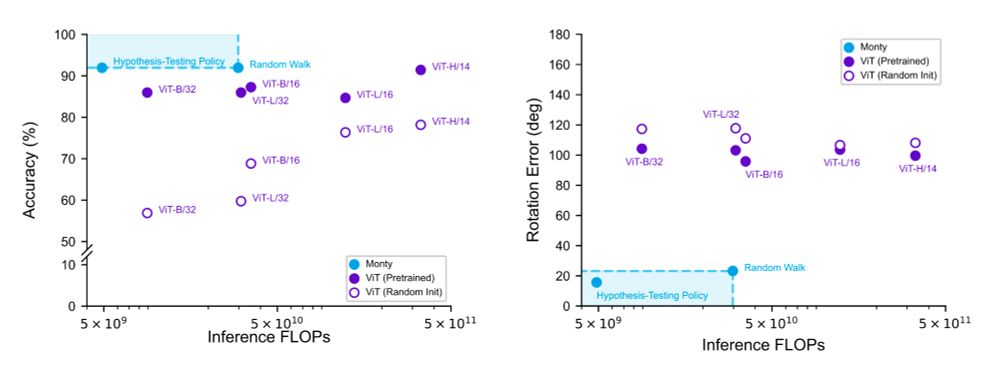

A simple curvature-following policy + a hypothesis-testing policy cut inference steps ~3× vs. random walks. Monty can leverage its learned models to perform principled movements to resolve uncertainty.
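One way to picture a hypothesis-testing movement: when two candidate objects are still plausible, move to the location where their learned models disagree most. The sketch below is a toy illustration under that assumption. It is not Monty's actual policy, and the model format (location name mapped to an expected feature value) is hypothetical.

```python
def most_informative_location(model_a, model_b):
    """Return the shared location where two candidate models disagree most.

    Assumption: each model maps a location on the object to one expected
    feature value (here, 1.0 if a surface is expected there, else 0.0).
    """
    shared = set(model_a) & set(model_b)
    if not shared:
        raise ValueError("models share no locations to compare")
    # sorted() makes tie-breaking deterministic.
    return max(sorted(shared), key=lambda loc: abs(model_a[loc] - model_b[loc]))


# A mug and a handleless cup agree everywhere except where a handle would be.
mug = {"rim": 1.0, "side": 1.0, "handle": 1.0}
cup = {"rim": 1.0, "side": 1.0, "handle": 0.0}
print(most_informative_location(mug, cup))
```

A random walk might spend many steps on the rim and side, where both hypotheses predict the same thing. Going straight to the handle location resolves the ambiguity in one observation.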

/12

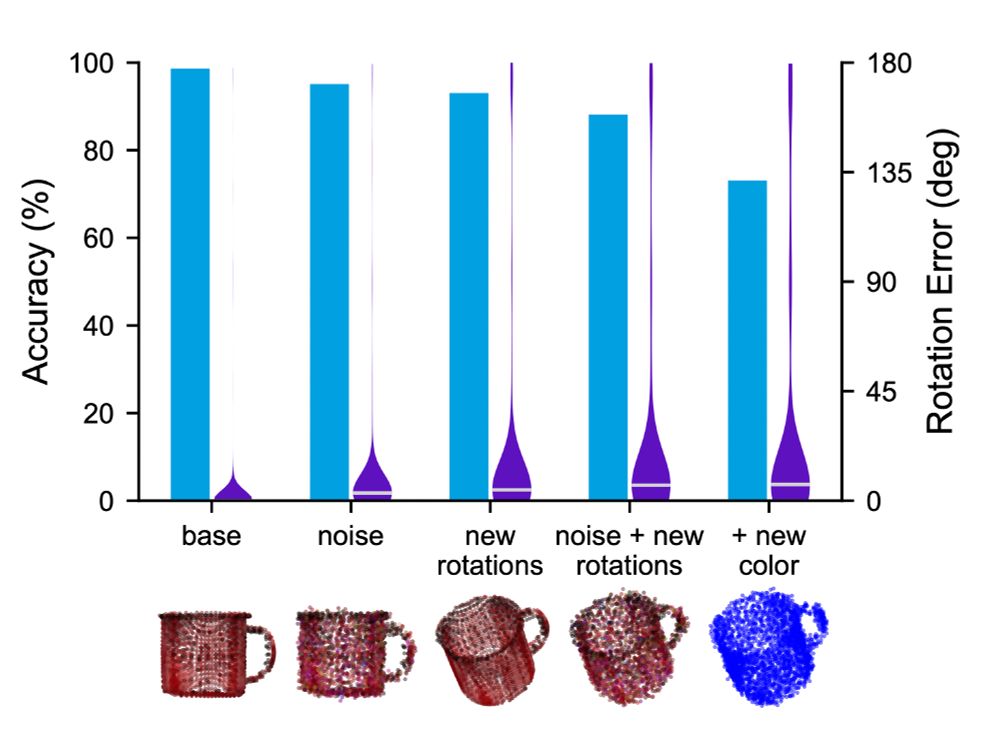

Robust inference and generalization

Monty recognized all 77 YCB objects and their poses with 90+% accuracy, even with noise and novel rotations. Even showing the object in a never-before-seen color doesn’t faze Monty.

/11

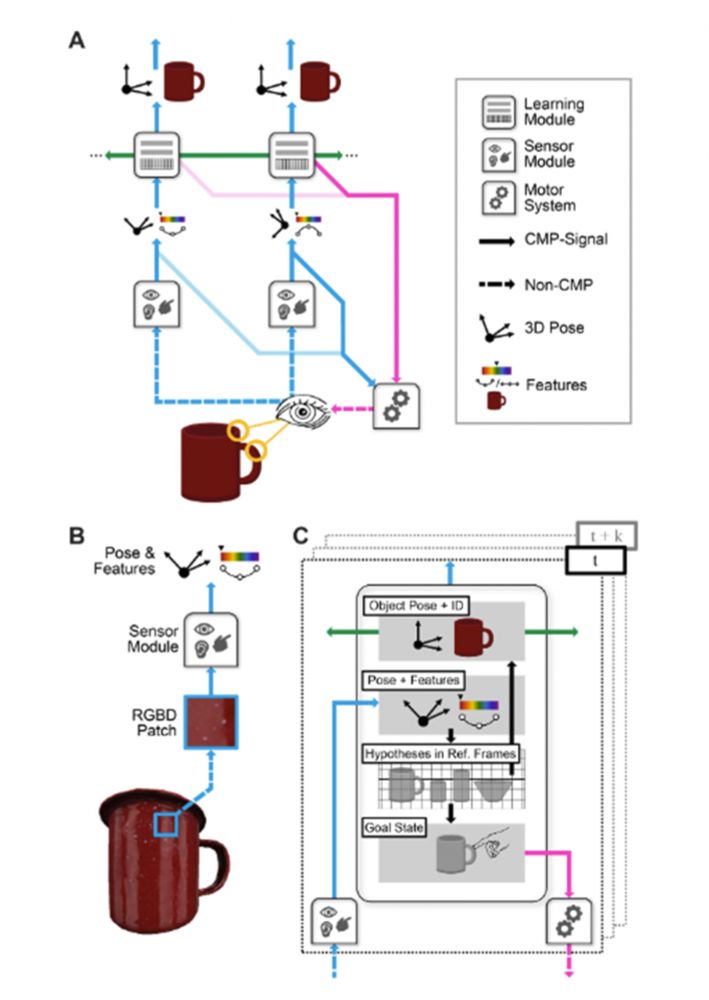

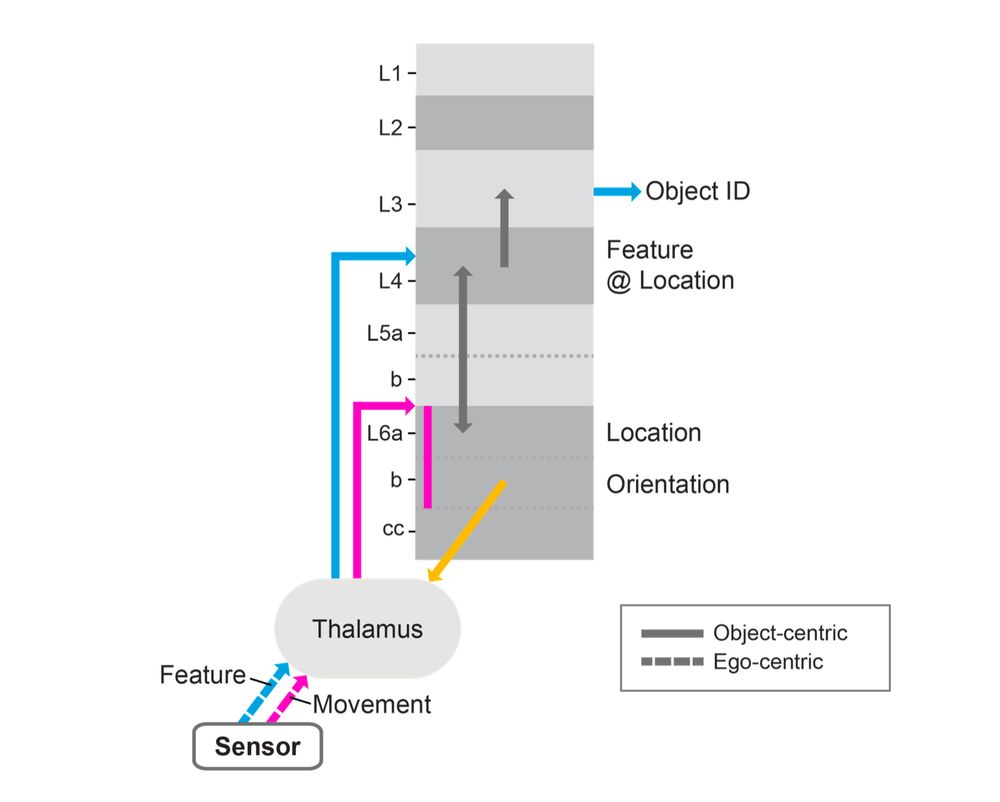

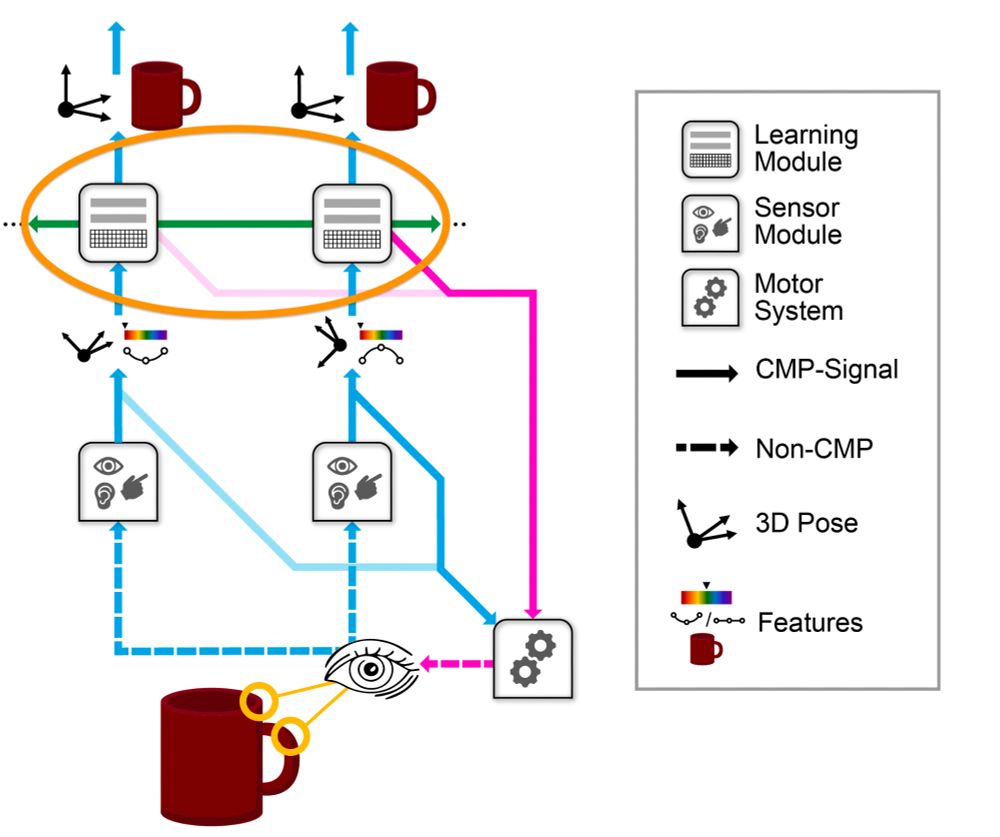

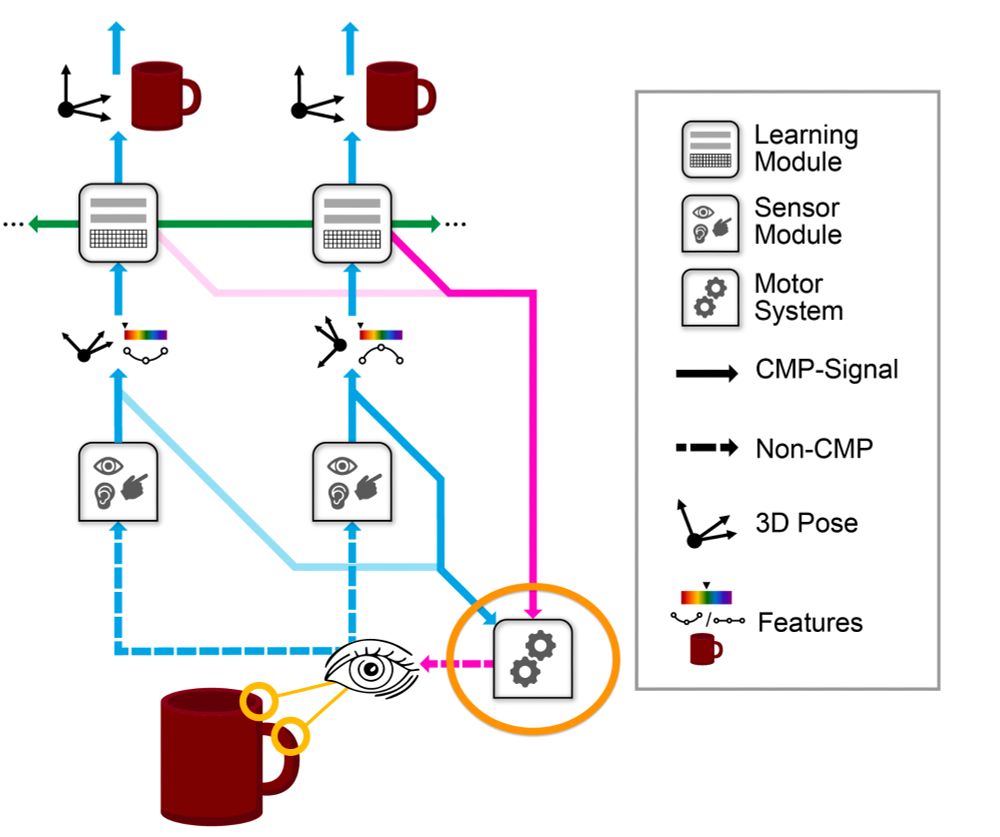

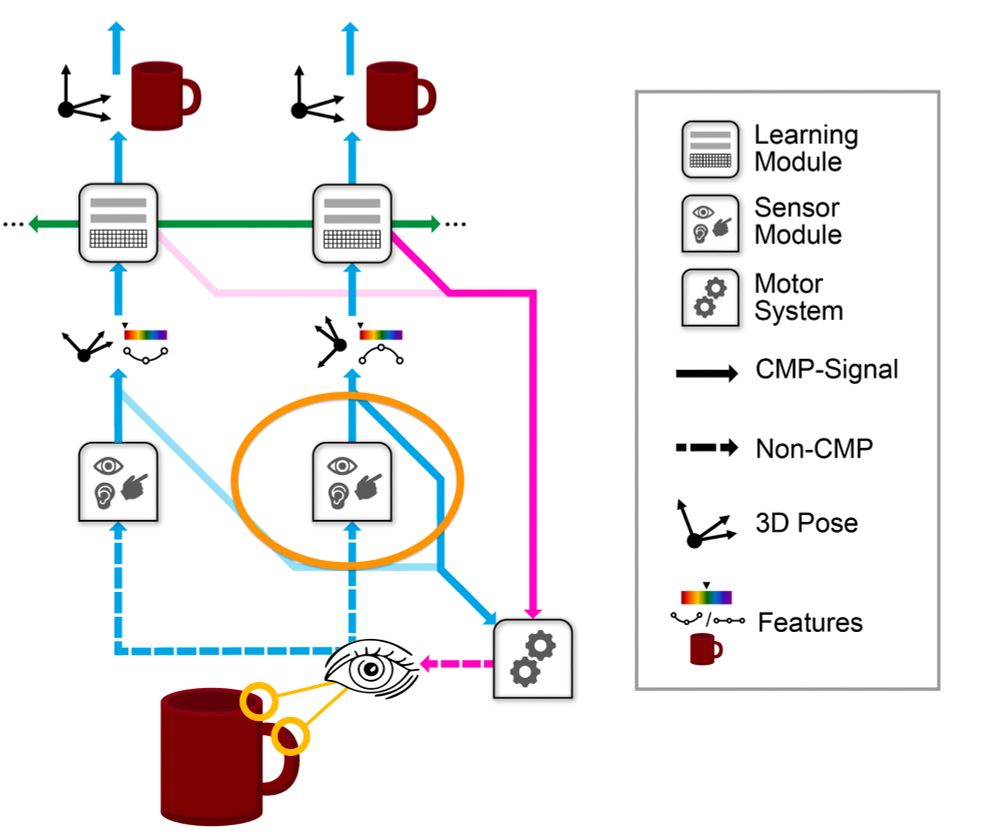

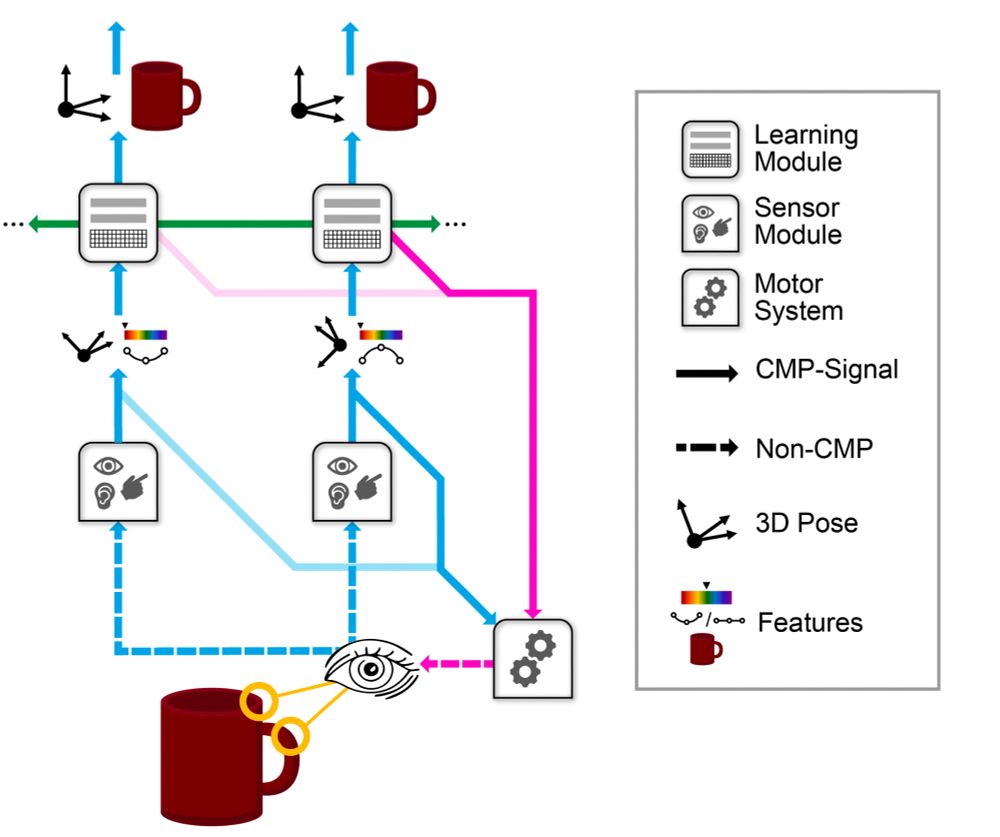

Learning modules can quickly reach consensus through voting about their most likely object and pose hypotheses, rather than having to integrate over time with multiple sensations.

/10

Motor commands from all the learning modules tell the system where it should observe next, causing a subsequent movement to observe that location.

/9

Learning Modules: semi-independent modelling systems that build models of objects by integrating sensed observations with object-relative coordinates derived from the body-centric sensor locations.

/8

This new architecture comprises the following subsystems, which communicate using the Cortical Messaging Protocol (CMP):

Sensor Modules: observe a small patch of the world and send it to the learning module.
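A rough sketch of the idea that modules talk through one shared message format. Everything here is hypothetical: the `Message` fields, the class names, and the `mean_intensity` feature are assumptions for illustration, not the actual CMP definition or Monty's classes.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Message:
    """Hypothetical shared message: where something was sensed, and what."""
    location: tuple  # body-centric sensor location (x, y, z), assumed
    features: dict   # e.g. {"mean_intensity": 0.4}, assumed


class SensorModule:
    """Observes one small patch and wraps it in the shared message format."""

    def observe(self, location, patch):
        mean = sum(patch) / len(patch)
        return Message(location=location, features={"mean_intensity": mean})


@dataclass
class LearningModule:
    """Collects incoming messages; a real module would build an object model."""
    observations: list = field(default_factory=list)

    def receive(self, message):
        self.observations.append(message)


sensor = SensorModule()
lm = LearningModule()
lm.receive(sensor.observe((0.0, 0.1, 0.3), [0.2, 0.4, 0.6]))
print(len(lm.observations), lm.observations[0].location)
```

The point of a common format is that the learning module does not need to know which kind of sensor produced the message.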

/7

This is a new type of machine learning architecture based on principles derived from 20+ years of research into the neocortex.

/6

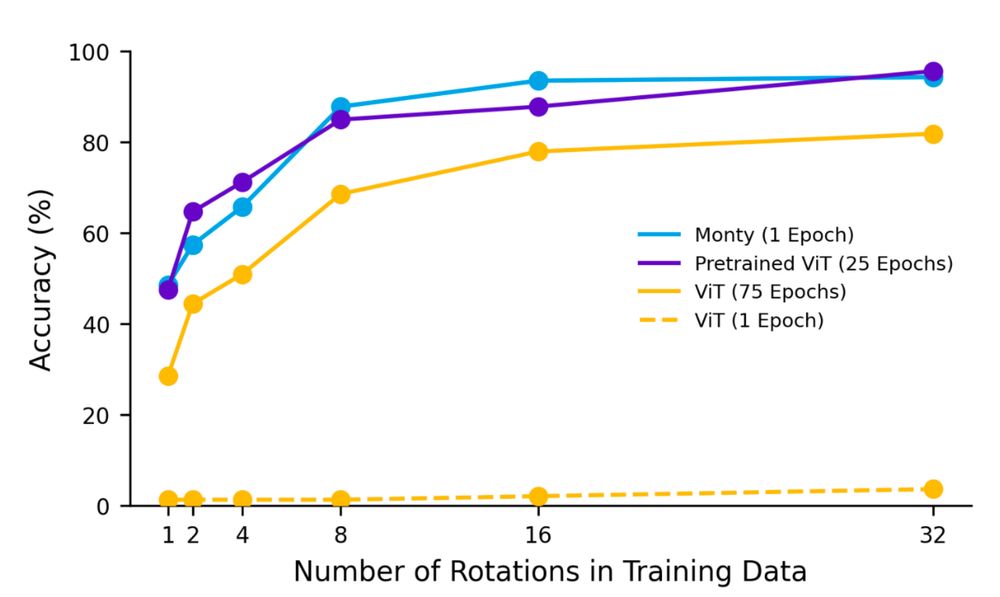

Few-shot learning

After just 8 views per object, Monty hits ~90% accuracy.

/5

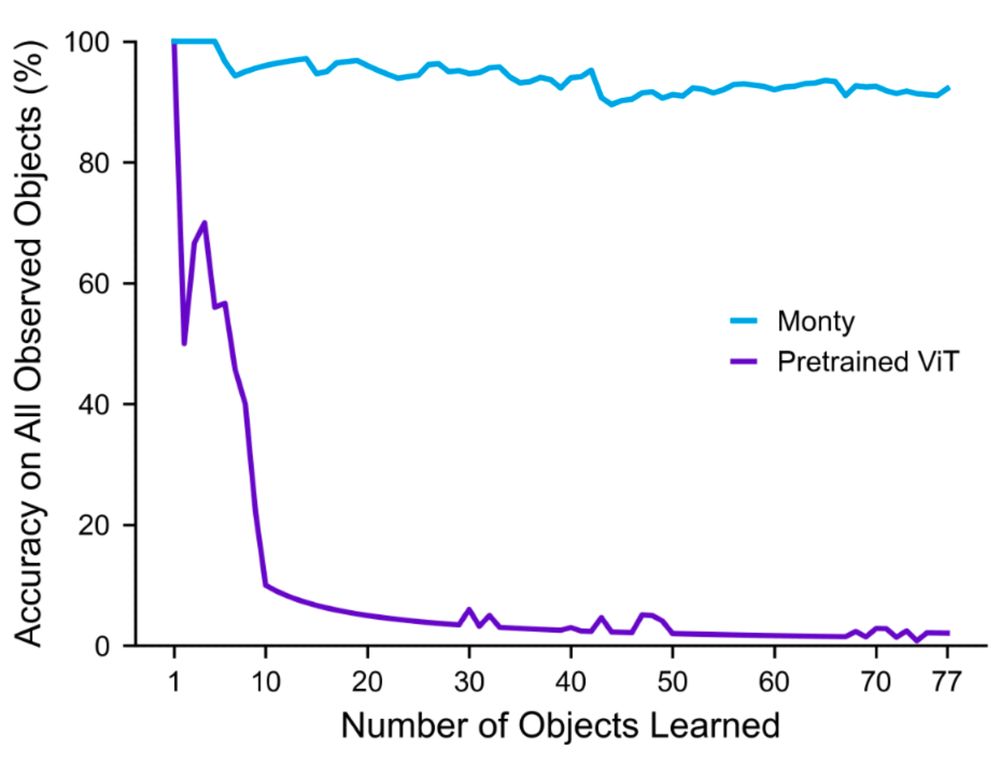

Monty learns continually with virtually no loss of accuracy as new objects are added to the model. Deep learning famously suffers from catastrophic forgetting in those settings.

/4

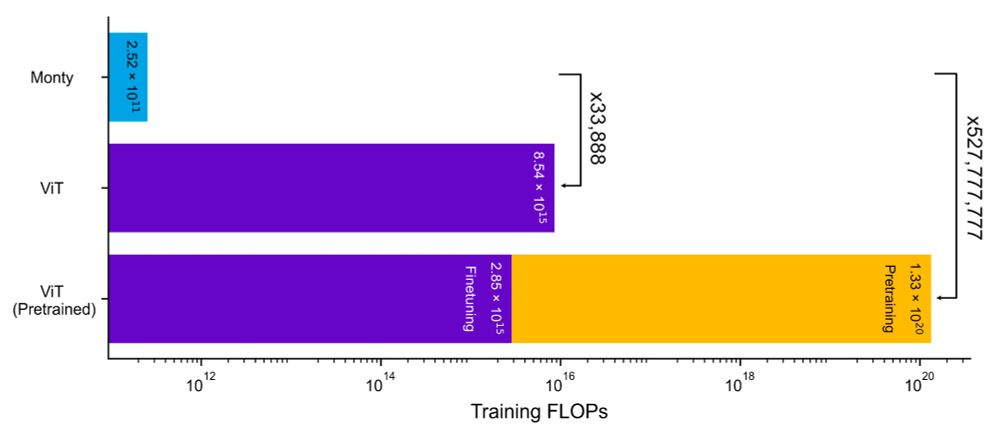

Green AI is here: Monty slashes compute needs in training and inference, yet beats a pretrained, finetuned ViT on object and pose tasks. It used 33,888× fewer FLOPs than ViT trained from scratch and 527,000,000× fewer than a pretrained ViT.
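A quick bit of arithmetic on those two ratios. Assuming both are measured against the same Monty baseline (an assumption, since the post does not say so explicitly), dividing one by the other shows how much more compute the pretrained ViT represents compared with the ViT trained from scratch.

```python
# Ratios quoted in the post: FLOPs relative to Monty.
SCRATCH_VIT_RATIO = 33_888
PRETRAINED_VIT_RATIO = 527_000_000

# Assumes both ratios share the same Monty baseline, so the baseline cancels.
pretrained_vs_scratch = PRETRAINED_VIT_RATIO / SCRATCH_VIT_RATIO
print(f"{pretrained_vs_scratch:,.0f}x")  # prints "15,551x"
```

Under that assumption, the pretrained ViT accounts for roughly 15,500× the compute of the from-scratch ViT, which suggests most of the gap comes from pretraining.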

3/

A remarkable result: Monty achieves the same accuracy as a Vision Transformer while using 527 million times less computation, and it does so without suffering from catastrophic forgetting.

/2

TL;DR: Watch @cortical-canonical.bsky.social give a presentation about this paper here: youtu.be/3d4DmnODLnE

Or read the plain language explainer here: thousandbrains.medium.com/thousand-bra...