Paper: openreview.net/forum?id=Tgc...

Code: github.com/dilyabareeva...

Dilyara Bareeva

@dilya.bsky.social

PhD Candidate in Interpretability @FraunhoferHHI | 📍Berlin, Germany dilyabareeva.github.io

Huge thanks to my fantastic co-authors Marina MC Höhne, Alexander Warnecke, @lpirch.bsky.social, Klaus-Robert Müller, @rieck.mlsec.org, @slapuschkin.bsky.social, @kirillbykov.bsky.social, and to the UMI Lab, @aifraunhoferhhi.bsky.social, @xai-berlin.bsky.social and @bifold.berlin for the support!

29.11.2025 16:38 — 👍 3 🔁 1 💬 1 📌 0

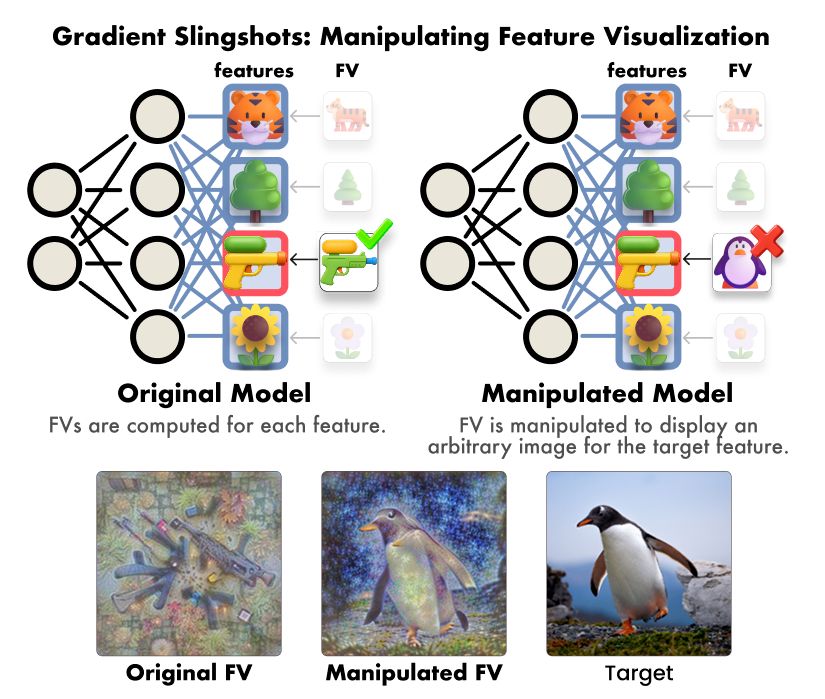

Our lightweight adversarial fine-tuning attack lets you bend a feature to visualize an arbitrary concept. Off-manifold, we impose a hyperbolic activation landscape with its optimum at the target, while preserving on-distribution activations through a weighted two-term loss. 🕵️‍♀️
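
For readers who want a feel for the weighted two-term loss mentioned above, here is a minimal sketch in plain Python. It is an illustration under assumptions, not the code from the repository: the names `two_term_loss`, `stand_in_landscape`, and `alpha` are hypothetical, mean squared error is an assumed choice for both terms, and a simple bowl peaking at the target stands in for the activation landscape, whose actual shape is not reproduced here.

```python
from typing import Sequence


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equally long sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def stand_in_landscape(x: Sequence[float], target: Sequence[float]) -> float:
    """Hypothetical desired activation at an off-manifold point x.

    A plain bowl with its maximum (0.0) at the target. This is only a
    stand-in: it is NOT the landscape used in the paper.
    """
    return -sum((xi - ti) ** 2 for xi, ti in zip(x, target))


def two_term_loss(
    tuned_on: Sequence[float],     # fine-tuned activations on real data
    original_on: Sequence[float],  # original activations on the same data
    tuned_off: Sequence[float],    # fine-tuned activations off-manifold
    desired_off: Sequence[float],  # desired landscape values at those points
    alpha: float = 0.5,            # assumed weight between the two terms
) -> float:
    """Weighted sum of a manipulation term and a preservation term."""
    preserve = mse(tuned_on, original_on)     # keep on-distribution behaviour
    manipulate = mse(tuned_off, desired_off)  # reshape off-manifold behaviour
    return alpha * manipulate + (1.0 - alpha) * preserve


# Desired off-manifold activations for two sample points and a target.
target = [1.0, 1.0]
off_points = [[1.0, 1.0], [0.0, 1.0]]
desired = [stand_in_landscape(p, target) for p in off_points]

loss = two_term_loss(
    tuned_on=[1.0, 2.0],
    original_on=[1.0, 2.0],
    tuned_off=[0.0, 0.0],
    desired_off=desired,
    alpha=0.25,
)
```

In this sketch, a larger `alpha` puts more weight on reshaping the off-manifold activations, while a smaller one favours keeping the on-distribution activations unchanged.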

29.11.2025 16:38 — 👍 1 🔁 1 💬 1 📌 0

✈️🇲🇽 Next Wednesday (Dec 3), 1–4 p.m. CST, I’ll be presenting Manipulating Feature Visualizations with Gradient Slingshots at NeurIPS 2025 in Mexico City!

Feature Visualization has long been a staple interpretability tool. Our work shows it’s far from reliable! 🚨

Sadly, I wasn’t able to make it to NeurIPS this year. For anyone attending, check out our quanda poster at the ATTRIB workshop tomorrow (Saturday) from 3 to 4:30 pm, presented by Galip Ümit Yolcu and Anna Hedström!

GitHub: github.com/dilyabareeva...

Paper: arxiv.org/abs/2410.07158