It would be fun for a benchmark to focus on problems that are more "visual" - truths that are easy for humans to "see" but hard for them to prove formally

23.07.2025 11:36 — 👍 0 🔁 0 💬 0 📌 0

Isn't natural language still awfully close to a formal / symbolic domain? Human mathematical intuition seems grounded in spatiotemporal relationships, not natural language.

22.07.2025 13:20 — 👍 1 🔁 0 💬 1 📌 0

It could be called "turbulence"

17.07.2025 11:09 — 👍 1 🔁 0 💬 0 📌 0

The Bluesky Python SDK is so cool!

14.07.2025 01:55 — 👍 0 🔁 0 💬 0 📌 0

Length of chain of thought does indeed correlate with difficulty - see attached

29.06.2025 09:14 — 👍 0 🔁 0 💬 1 📌 0

I’m genuinely confused by these statements. Chain of thought length absolutely does correlate with difficulty - generally the LLM will stop thinking once it has reached a reasonable answer. Likewise in human reasoning!

29.06.2025 08:32 — 👍 0 🔁 0 💬 1 📌 0

The number of tokens doesn't necessarily stay the same, does it? LLMs can execute algorithms and output the stored values at intermediate steps as tokens, so the number of tokens / amount of computation scales up with the difficulty of the problem (size of the input, in the case of factorization)

28.06.2025 11:09 — 👍 0 🔁 0 💬 1 📌 0
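
A minimal sketch of the scaling point in this exchange, assuming plain trial division as the algorithm and treating each recorded intermediate step as a stand-in for an emitted token. The function name `trial_division_trace` and the trace format are illustrative assumptions, not something taken from the thread or from any real LLM. Each step costs roughly constant work, yet the number of steps grows with the input.

```python
def trial_division_trace(n: int) -> tuple[list[int], list[str]]:
    """Factor n by trial division, recording every intermediate step.

    Each trace entry is a hypothetical stand-in for one emitted token.
    """
    factors: list[int] = []
    trace: list[str] = []
    d = 2
    while d * d <= n:
        if n % d == 0:
            # d is a factor: record it and continue with the quotient.
            factors.append(d)
            n //= d
            trace.append(f"{d} divides; remaining {n}")
        else:
            trace.append(f"{d} does not divide {n}")
            d += 1
    if n > 1:
        # Whatever is left after the loop is itself prime.
        factors.append(n)
        trace.append(f"remaining factor {n}")
    return factors, trace


small_factors, small_trace = trial_division_trace(15)
large_factors, large_trace = trial_division_trace(10403)  # 101 * 103

print(small_factors, len(small_trace))
print(large_factors, len(large_trace))
```

The per-step cost stays about the same in both calls; only the length of the trace changes, which is the sense in which total computation scales with problem size.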

But isn’t it just a constant amount of compute per token? Producing more tokens involves using more time and space. Chain of thought, etc.

28.06.2025 10:47 — 👍 0 🔁 0 💬 1 📌 0

By contrast, good explanatory scientific theories generalize to a broader set of "perturbations" than just the types of experiments that went into constructing the theory. Watson and Crick's model of DNA was not just a way to predict x-ray diffraction patterns.

25.06.2025 23:35 — 👍 3 🔁 0 💬 1 📌 0

Totally right, you said something different. You're much more pro- this type of model learned from perturbation data.

My concern is that you end up with a causal model, yes - but the perturbations are drawn from a very constrained distribution. The ML model can more or less memorize them.

25.06.2025 23:20 — 👍 1 🔁 0 💬 1 📌 0

Also notable that this type of work doesn't use any of the conditional independence assumptions that are common in the causal modeling community @alxndrmlk.bsky.social

24.06.2025 17:27 — 👍 1 🔁 0 💬 1 📌 0

@kordinglab.bsky.social argued in a recent talk that you can't learn a model from canned data that will let you simulate perturbation experiments.

bsky.app/profile/kemp...

But this type of model seems darn close.

24.06.2025 17:25 — 👍 0 🔁 0 💬 1 📌 0

Cool work out of @arcinstitute.org. My question is, do models like this let us perform novel in-silico experiments the way first-principles models do, or are they just a clever way of extrapolating existing experimental data from one context to another?

24.06.2025 17:15 — 👍 1 🔁 0 💬 1 📌 0

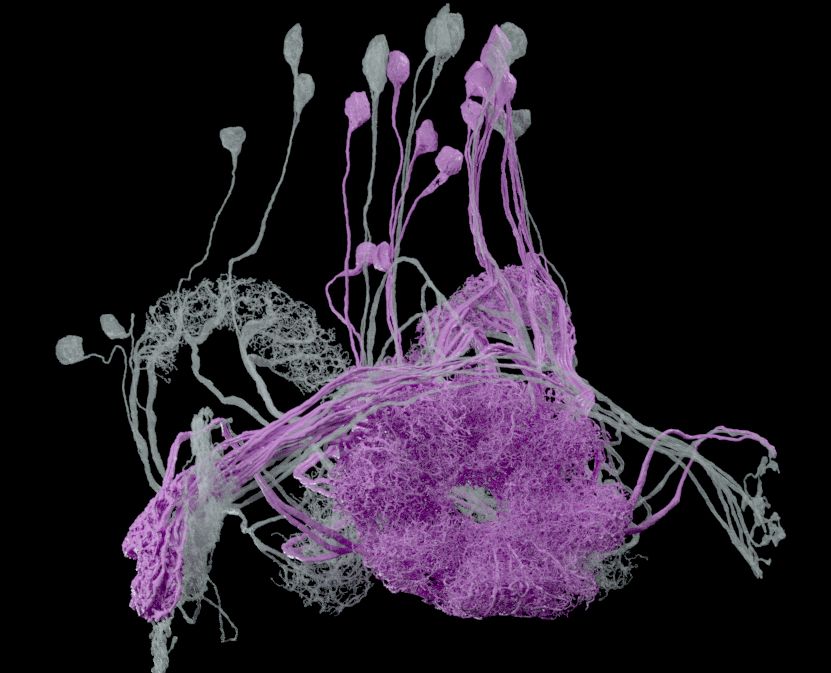

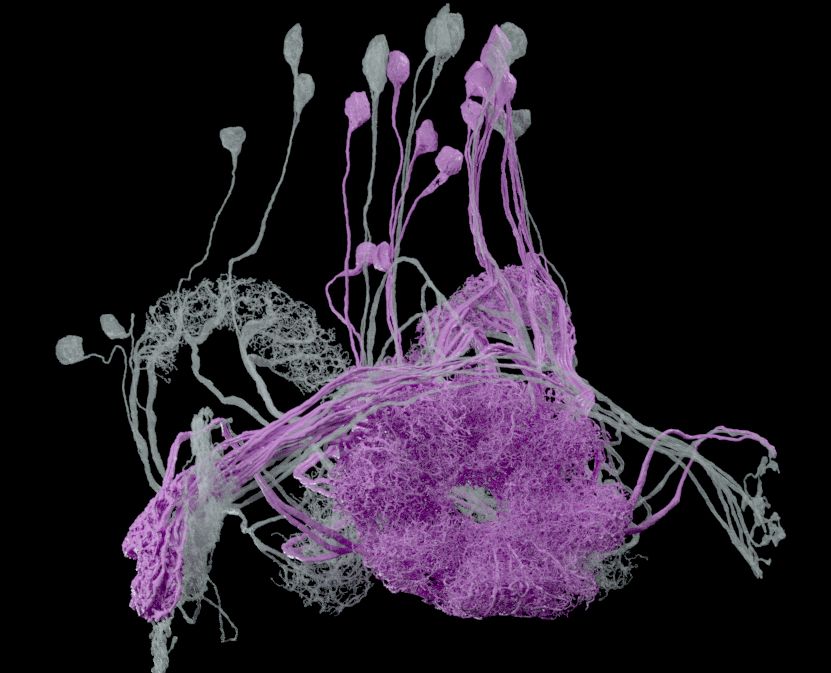

Two sets of connecting fly neurons with fine, wispy arbors.

Cleaning up disk space, I found this image I made for someone not long after the release of the #HHMIJanelia #Drosophila hemibrain #connectome in 2020. It shows EPG neurons in pink providing inputs to PFL1 neurons in transparent grey. I'm not sure if the image was ever used.

18.06.2025 22:46 — 👍 11 🔁 2 💬 1 📌 0

Philip did mention a MW talk from Zurek I think

14.06.2025 13:55 — 👍 0 🔁 0 💬 1 📌 0

Do we know if the number of steps they can perform is related to how many steps they saw in their training data? Can RL fine-tuning increase the number of steps?

10.06.2025 11:52 — 👍 0 🔁 0 💬 0 📌 0

Does anyone know what species this is? Would love to know more about what structures play the role of nervous system and muscles

08.06.2025 22:04 — 👍 0 🔁 0 💬 0 📌 0

Who knew that Chargaff was into this stuff

08.06.2025 14:03 — 👍 0 🔁 0 💬 0 📌 0

Against reductionism: "Our understanding of the world is built up of innumerable layers. Each is worth exploring, as long as we do not forget that it is one of many. Knowing all there is to know about one layer (...) would not teach us much about the rest". Erwin Chargaff

08.06.2025 12:45 — 👍 38 🔁 10 💬 3 📌 0

Things that aren’t chock-full of information-bearing molecules

07.06.2025 17:31 — 👍 8 🔁 0 💬 0 📌 0

Because the kind of theories we want involve phenomena that span 3-4 orders of magnitude in space (synapses vs. brains) and 6-7 orders of magnitude in time (action potentials vs. skill acquisition)?

07.06.2025 14:40 — 👍 18 🔁 0 💬 0 📌 0

There’s a good definition of computational universality (Church-Turing) - why couldn’t there be one of general intelligence?

30.05.2025 13:14 — 👍 2 🔁 0 💬 0 📌 0

If constructor theory told us something amazing *was* constructible, it might help motivate us to build it.

Conversely we could avoid wasting our time on things not even constructible in principle.

25.05.2025 16:17 — 👍 0 🔁 0 💬 0 📌 0

Quiet posters feed. You’re welcome.

24.05.2025 20:13 — 👍 0 🔁 0 💬 0 📌 0

To all the international students, post-docs, scientists, and other academics I’ve been friends with over the years - we support you, and we want you here

23.05.2025 21:13 — 👍 0 🔁 0 💬 0 📌 0

What do you mean by "information about"?

22.05.2025 02:20 — 👍 0 🔁 0 💬 1 📌 0

No. Burning a library destroys something. Not physical information (that’s left in the heat and ash) but knowledge about the world. Whatever the fire is destroying, the brain can create “de novo”. It’s not conserved.

21.05.2025 13:42 — 👍 0 🔁 0 💬 1 📌 0

Physics is also information-preserving.

So there’s been no “new” information since the Big Bang.

But there must be some other sense in which new things do come into existence.

18.05.2025 20:52 — 👍 8 🔁 0 💬 1 📌 1

New information, no.

But new ideas, new knowledge, yes.

Einstein didn’t acquire relativity from observations, he invented it.

15.05.2025 17:04 — 👍 1 🔁 0 💬 1 📌 0

@annakaharris.bsky.social @philipgoff.bsky.social All our *discourse* about C is 3rd-person observable - neurons firing, vocal cords moving, etc. We expect a boring old physical story one day. Won't that story undercut panpsychism?

@seanmcarroll.bsky.social did you ever get a satisfying answer?

15.04.2025 13:07 — 👍 1 🔁 0 💬 0 📌 0

I'm a physicist; I invent techniques to measure and control biological systems.

Homepage: andrewgyork.github.io

Bioinformatics Scientist at the Arc Institute.

Working at the intersection of functional genomics, systems biology, and machine learning. I also build rusty bioinformatics tools

https://github.com/noamteyssier

Frontier models for all molecules of life.

Long career as a dilettante at Bell Labs Research and Google, mostly building weird stuff no one uses, but occasionally getting it right, such as with UTF-8 and Go.

Postdoc @ Princeton AI Lab

Natural and Artificial Minds

Prev: PhD @ Brown, MIT FutureTech

Website: https://annatsv.github.io/

CS Prof at UC Irvine, CTO/Cofounder at Envive AI

Work on evaluation and robustness of LLMs

MSc at @eth interested in ML interpretability

Technical advisor to @bluesky - first engineer at Protocol Labs. Wizard Utopian

dec/acc 🌱 🪴 🌳

Professor @ASU @sfiscience

Statistical mechanic, secular Bayesian.

Prof. of Complex Systems and Network Science @ IT:U, Austria

Head of the “Inverse Complexity Lab”.

@invcomplexity.skewed.de

https://skewed.de/lab

Computation & Complexity | AI Interpretability | Meta-theory | Computational Cognitive Science

https://fedeadolfi.github.io

On the job market!

research scientist @deepmind. language & multi-agent rl & interpretability. phd @BrownUniversity '22 under ellie pavlick (she/her)

https://roma-patel.github.io

I make sure that OpenAI et al. aren't the only people who are able to study large scale AI systems.

Robustness, Data & Annotations, Evaluation & Interpretability in LLMs

http://mimansajaiswal.github.io/

Enjoy not enjoying ideals | Interpretability of modular convnets applied to 👁️ and 🛰️🐝 | she/her 🦒💕

variint.github.io

NLP assistant prof at KU Leuven, PI @lagom-nlp.bsky.social. I like syntax more than most people. Also multilingual NLP, interpretability, mountains and beer. (She/her)

Assistant Professor in NLP (Fairness, Interpretability and lately interested in Political Science) at the University of Copenhagen ✨

Before: PostDoc in NLP at Uni of CPH, PhD student in ML at TU Berlin

Computer Science PhD student | AI interpretability | Vision + Language | Cognitive Science. Prev. intern @MicrosoftResearch.

https://martinagvilas.github.io/

Full of childlike wonder. Building friendly robots. UT Austin PhD student, MIT ‘20.