Neanderthal - Fast Native Matrix and Linear Algebra in Clojure

Clojure Fast Matrix Library - GPU and native CPU

Clojure fast matrix library Neanderthal has just been updated with a native Apple Silicon engine.

Please check out the new release, 0.54.0, on Clojars.

#Java #AI #Clojure #CUDA #Apple

neanderthal.uncomplicate.org

01.07.2025 21:24 — 👍 3 🔁 2 💬 0 📌 0

30.06.2025 16:24 — 👍 1 🔁 0 💬 0 📌 0


GitHub - uncomplicate/neanderthal: Fast Clojure Matrix Library

Fast Clojure Matrix Library. Contribute to uncomplicate/neanderthal development by creating an account on GitHub.

Fast matrices and number crunching are now available on Apple Silicon #MacOS. Check out the newest snapshots of Neanderthal on Clojars! Add 0.54.0-SNAPSHOT to your project.clj and you're ready to go!

github.com/uncomplicate...

#Clojure #NumPy #CUDA

11.06.2025 10:59 — 👍 3 🔁 2 💬 1 📌 0

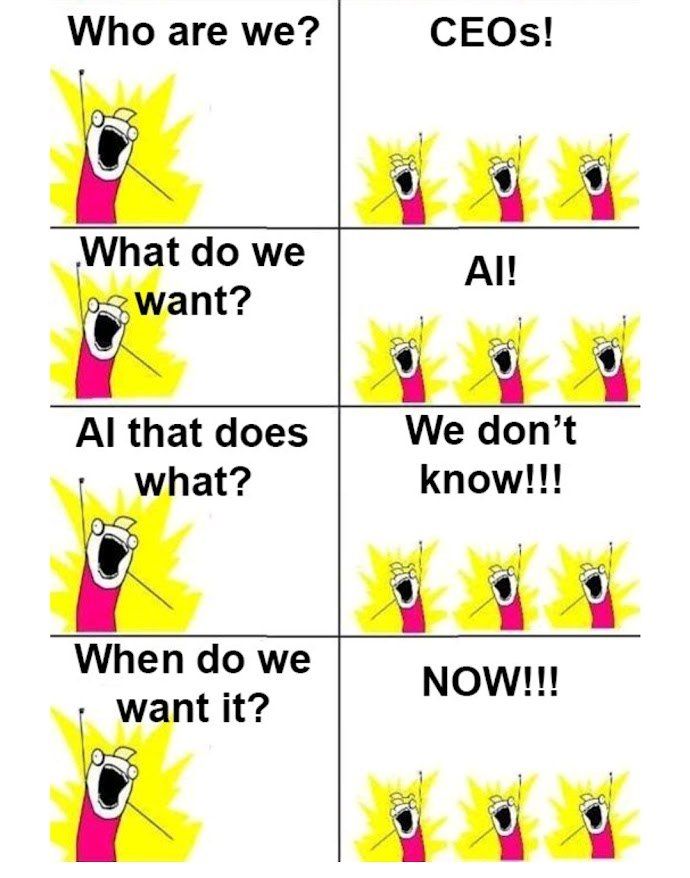

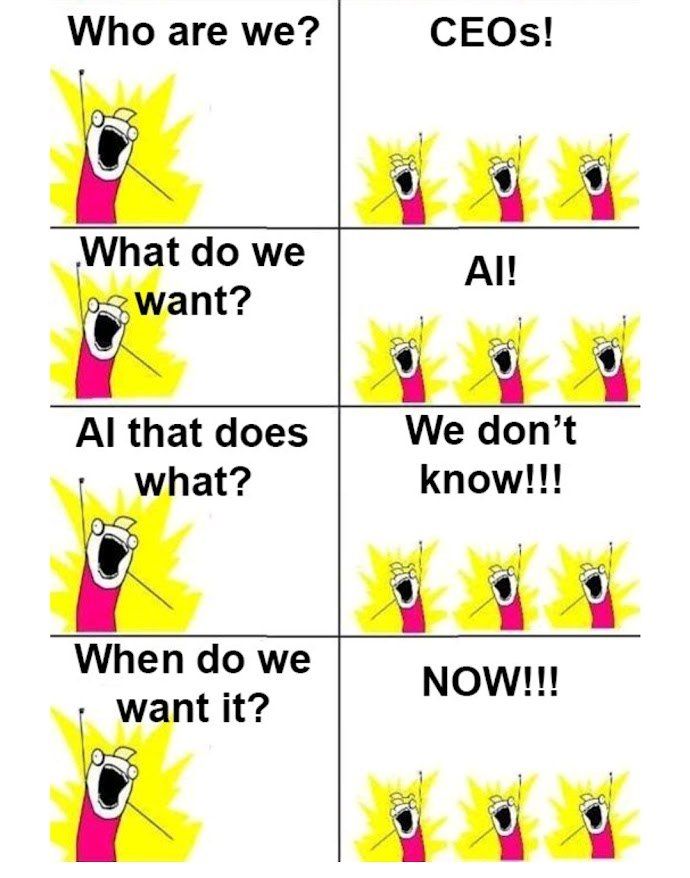

Getting AI to write good SQL | Hacker News

So, we created SQL so that "analysts" could query the DB and we could get rid of programmers. They delegated it to programmers anyway. Now, they've created AI that writes the SQL that queries the DB for the "analysts". Guess who's going to be stuck writing shitty AI prompts. news.ycombinator.com/item?id=4400...

17.05.2025 17:38 — 👍 0 🔁 1 💬 0 📌 0

Damn, this is the clearest evidence yet of my “AI-powered Dunning-Kruger” hypothesis: that AI proponents are only bullish about AI for work they don’t actually know or understand.

30.04.2025 16:29 — 👍 4 🔁 2 💬 1 📌 0

We need to put the punk back in cyber.

24.12.2024 02:54 — 👍 28 🔁 7 💬 1 📌 0


Apple M CPU Accelerate backend implemented for #Clojure Neanderthal! Now you have 3 superfast native CPU and 2 GPU choices when crunching numbers on the JVM!

Still available as snapshots on github.com/uncomplicate/neanderthal (waiting for upstream releases). Thank you, clojuriststogether.org!

16.05.2025 08:17 — 👍 3 🔁 1 💬 0 📌 0

Interactive Programming for Artificial Intelligence Book Series: Deep Learning for Programmers, Linear Algebra for Programmers, and more

A book series on Programming, CUDA, GPU, Clojure, Deep Learning, Machine Learning, Java, Intel, Nvidia, AMD, CPU, High Performance Computing, Linear Algebra, OpenCL

Vectors/matrices/tensors are really the economy of scale at work! Don't process individual elements in your own loops; use the built-in operations to process the whole structure without looking inside! CPU, GPU, CUDA, etc.

aiprobook.com

#Clojure #PyTorch #programming

14.05.2025 07:31 — 👍 2 🔁 1 💬 0 📌 0
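The post above advises using whole-structure operations instead of element-by-element loops. As a minimal sketch, assuming Neanderthal is on the classpath with a working native backend (MKL, OpenBLAS, or Accelerate), here is the same dot product computed both ways. `dot-loop` is a hypothetical helper written only for comparison; it is not part of Neanderthal.

```clojure
(require '[uncomplicate.neanderthal.core :refer [dot entry dim]]
         '[uncomplicate.neanderthal.native :refer [dv]])

;; Two native double vectors.
(def x (dv 1 2 3))
(def y (dv 4 5 6))

;; Hypothetical helper: the element-by-element style the post warns against.
;; Every entry is read individually from Clojure.
(defn dot-loop [u v]
  (loop [i 0 acc 0.0]
    (if (< i (dim u))
      (recur (inc i) (+ acc (* (entry u i) (entry v i))))
      acc)))

;; Whole-structure operation: a single call handed to the native engine.
(dot x y)      ;; => 32.0
(dot-loop x y) ;; => 32.0
```

Both give the same answer; the point is that `dot` lets the optimized native routine process the whole vector at once, while the loop pays per-element overhead on the Clojure side. The gap only becomes noticeable for large structures.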

I love physical books too!

I can't provide those for my books due to logistical issues, but I have nothing against you printing the PDF (no DRM) and having it bound in hardcover (if such shops are available in your area).

14.05.2025 07:30 — 👍 1 🔁 0 💬 0 📌 0


If you’re a dev who’s felt like most ML/Math content talks over your head — this is for you.

You can preview the books or support the work on my site. ❤️

🔗 aiprobook.com

Or just retweet this thread so others can find it.

12.05.2025 09:53 — 👍 1 🔁 1 💬 0 📌 0


They've helped hundreds of devs actually get backprop, eigenvalues, gradient descent, and more — without needing a PhD or pretending math is magic.

A few chapters are even free at aiprobook.com if you want to explore, and much of the content is also available as blog articles.

12.05.2025 09:53 — 👍 1 🔁 1 💬 1 📌 0

That feedback led me to create two books:

📘 Deep Learning for Programmers

📗 Linear Algebra for Programmers

They’re built entirely from the intuition that if you can code, you can understand math.

Code-first, jargon-free, honest.

12.05.2025 09:52 — 👍 0 🔁 0 💬 1 📌 0

One day, a blog post of mine made the front page of Hacker News.

It didn't break my server, but it was read by many people.

That gave me a signal: there's a hunger out there among programmers for hands-on, code-first explanations of “scary” math concepts.

12.05.2025 09:52 — 👍 0 🔁 0 💬 1 📌 0

Articles

Dragan Djuric's Clojure Blog, Artificial Intelligence, Deep Learning, Bayesian Data Analysis, CUDA, GPU, OpenCL, Functional Programming, Probabilistic Programming, Data Science.

10 years ago, many programmers were frustrated trying to understand how Deep Learning worked under the hood. Every resource was either:

Way too theoretical

Or shallow “framework tutorials”

I started writing blog posts at dragan.rocks just to explain things to my past self.

12.05.2025 09:52 — 👍 2 🔁 0 💬 1 📌 0

I've spent many years building HPC and ML libraries, and writing two books that teach Linear Algebra and Deep Learning to actual programmers (not math PhDs).

Here's how I went from writing my first blog post to building a following, front-paging Hacker News, and writing useful books. 🧵👇

12.05.2025 09:51 — 👍 2 🔁 1 💬 1 📌 0

Thank you!

I really don't know, as I don't get any contact details from Patreon. I'm surprised that they close accounts for such reasons. The only thing I can suggest is to try a more traditional email provider, such as Gmail...

11.05.2025 19:50 — 👍 0 🔁 0 💬 1 📌 0

The best programmers aren’t good because they know more.

They’re good because they ask:

“What’s really going on here?”

10.05.2025 12:45 — 👍 1 🔁 0 💬 0 📌 0

Numerical Linear Algebra for Programmers

Numerical Linear Algebra for Programmers: An Interactive Tutorial with GPU, CUDA, OpenCL, MKL, Java, and Clojure

basically…

* a book for programmers

* interactive & dynamic

* direct link from theory t...

Programmers treat linear algebra like magic.

But it's not magic.

It's code. It's vectors. It's yours to master.

Linear Algebra for Programmers shows you how—with zero fluff.

If you write code, you need this book.

👉 aiprobook.com/numerical-li...

#DevLife #MachineLearning #AI #Coding

10.05.2025 12:03 — 👍 1 🔁 0 💬 1 📌 0


🚀 If you're a programmer struggling with math, Linear Algebra for Programmers by Dragan Djuric is the book you didn’t know you needed.

🔢 No fluff. Just the math that powers ML, graphics, and more—explained in code.

📘 Get smarter where it counts: aiprobook.com/numerical-li...

#AI

10.05.2025 11:20 — 👍 1 🔁 1 💬 0 📌 0

A lot of the code that makes PyTorch useful might already be in Deep Diamond. There's no need to create a PyTorch port, just to integrate the most useful parts of libtorch into Clojure.

27.04.2025 19:41 — 👍 1 🔁 0 💬 0 📌 0

That's all right. I might do the PyTorch part, and other people will do some other pieces.

27.04.2025 19:39 — 👍 1 🔁 0 💬 0 📌 0

Nothing stops us from doing the same deep integration with ONNX, of course, or with any other runner. But, as I understand it, the selling point of ONNX is portability, not performance. The reason I would go with PyTorch is that most models are developed in PyTorch anyway, so there's less friction there...

26.04.2025 18:33 — 👍 1 🔁 0 💬 1 📌 0

This would be orthogonal, for production use of the models. You could collaborate with Python colleagues in whatever way you can collaborate now. The point is that when there is a model (public or private) that you want to build your application on, you can run it from the JVM, without Python.

26.04.2025 15:55 — 👍 1 🔁 0 💬 1 📌 0

I'm not sure what exactly you consider the PyTorch ecosystem, but my vision is, first, to be able to load and run popular PyTorch models (saved models, not the Python code that produces them) with the PyTorch engine, without conversion, in-process from Clojure/Deep Diamond.

26.04.2025 15:19 — 👍 1 🔁 0 💬 1 📌 0

For now I'm still just thinking about it, not actually looking into that. First I need to see how many people would be engaged, and whether it's a good use of my (not limitless) resources ;)

26.04.2025 15:16 — 👍 1 🔁 0 💬 0 📌 0

I'm thinking of creating a #Clojure integration for #PyTorch (C++, without the Python part). I guess if you can't beat them, join them...

How many Clojurians are interested in that? Would it be something you'd use passionately (at least for running existing models in-process)?

26.04.2025 10:01 — 👍 8 🔁 2 💬 3 📌 0

BTW, Accelerate itself doesn't support the GPU. For GPU support, I'll have to do it using Metal.

14.04.2025 20:40 — 👍 1 🔁 0 💬 0 📌 0

GPU acceleration under MacOS is not yet available, but I plan to do it this summer!

14.04.2025 20:39 — 👍 1 🔁 0 💬 1 📌 0
