At #NeurIPS in San Diego this week? Interested in XAI, causality, or performative prediction? Come visit our poster!

💬 Performative Validity of Recourse Explanations

📆 Wednesday, 4.30 pm, Poster Session 2

w/ Hidde Fokkema, Timo Freiesleben, Celestine Mendler-Dünner, Ulrike von Luxburg

02.12.2025 18:17

Tübingen Conference for AI and Law starting on Wednesday!

Keynotes by Rediet Abebe, Solon Barocas, Sylvie Delacroix, Lilian Edwards, Christoph Engel, Michal Gal, Philipp Hacker, Christoph Kern, Christoph Sorge.

ailawinstitute.de/conference-f...

03.11.2025 11:27

I almost overlooked this one. Thanks to #NeurIPS for the complimentary registration! 🙏

17.10.2025 08:15

ELLIS UnConference - A NeurIPS-endorsed conference in Europe

A NeurIPS-endorsed conference in Europe held in Copenhagen, Denmark

🔹 Speakers: @jessicahullman.bsky.social, @doloresromerom.bsky.social, @tpimentel.bsky.social & Bernt Schiele

🕒 Call for contributions open until Oct 15 (AoE)

🔗 More info: eurips.cc/ellis

13.10.2025 09:10

How can we make AI explanations provably correct — not just convincing? 🤔

Join us for the Theory of Explainable Machine Learning Workshop, part of the ELLIS UnConference Copenhagen 🇩🇰 on Dec 2, co-located with #EurIPS.

🕒 Call for contributions open until Oct 15 (AoE)

🔗 eurips.cc/ellis

13.10.2025 09:10

In short: Many XAI papers are based on goals such as "transparency". But what does that mean? We argue that XAI methods should be motivated by concrete goals (e.g., explaining how to change an unfavorable prediction) instead of vague concepts (e.g., interpretability).

Section 3, Misconception 1

08.10.2025 08:13

Our article is also on arXiv: arxiv.org/pdf/2306.04292

08.10.2025 07:57

Are you coming?

I will be talking about

#XAI #orms

07.10.2025 18:27

Looking forward to talking about our work on the value of explanation for decision-making at this workshop!

07.10.2025 14:32

Theory of XAI Workshop

Explainable AI (XAI) is now deployed across a wide range of settings, including high-stakes domains in which misleading explanations can cause real harm. For example, explanations are required by law ...

Interested in provable guarantees and fundamental limitations of XAI? Join us at the "Theory of Explainable AI" workshop on Dec 2 in Copenhagen! @ellis.eu @euripsconf.bsky.social

Speakers: @jessicahullman.bsky.social @doloresromerom.bsky.social @tpimentel.bsky.social

Call for contributions deadline: Oct 15

07.10.2025 12:53

expressing appreciation for this scientific diagram

05.10.2025 20:55

Time to figure out which provable guarantees one can(not) give for XAI! Workshop "Theory of Explainable Machine Learning", Dec 2 in Copenhagen as part of the ELLIS UnConference/EurIPS. Submission deadline: Oct 15.

sites.google.com/view/theory-...

eurips.cc/ellis/

01.10.2025 03:30

Theory of XAI Workshop, Dec 2, 2025

Explainable AI (XAI) is now deployed across a wide range of settings, including high-stakes domains in which misleading explanations can cause real harm. For example, explanations are required by law ...

🚨 Workshop on the Theory of Explainable Machine Learning

Call for extended abstracts (≤2 pages) now open! Submission deadline: October 15.

📍 ELLIS UnConference in Copenhagen

📅 Dec. 2

🔗 More info: sites.google.com/view/theory-...

@gunnark.bsky.social @ulrikeluxburg.bsky.social @emmanuelesposito.bsky.social

30.09.2025 14:00

I am hiring PhD students and/or postdocs to work on the theory of explainable machine learning. Please apply through ELLIS or IMPRS; deadlines are at the end of October / mid-November. In particular: Women, where are you? Our community needs you!!!

imprs.is.mpg.de/application

ellis.eu/news/ellis-p...

17.09.2025 06:17

Not that I know of. But the method is relatively easy to implement. Please reach out if you would like to use it. I'm happy to assist!

08.07.2025 15:00

Sounds interesting? Have a look at our paper!

Joint work with Eric Günther and @ulrikeluxburg.bsky.social.

07.07.2025 15:43

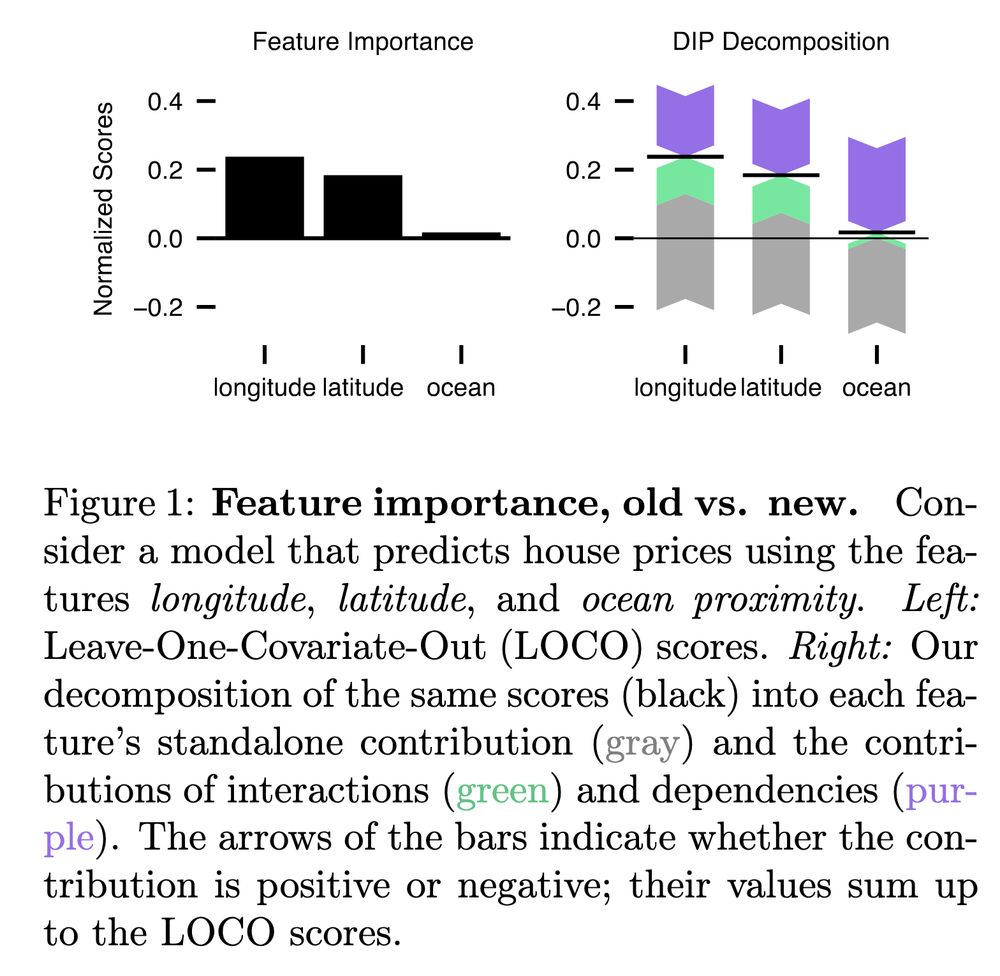

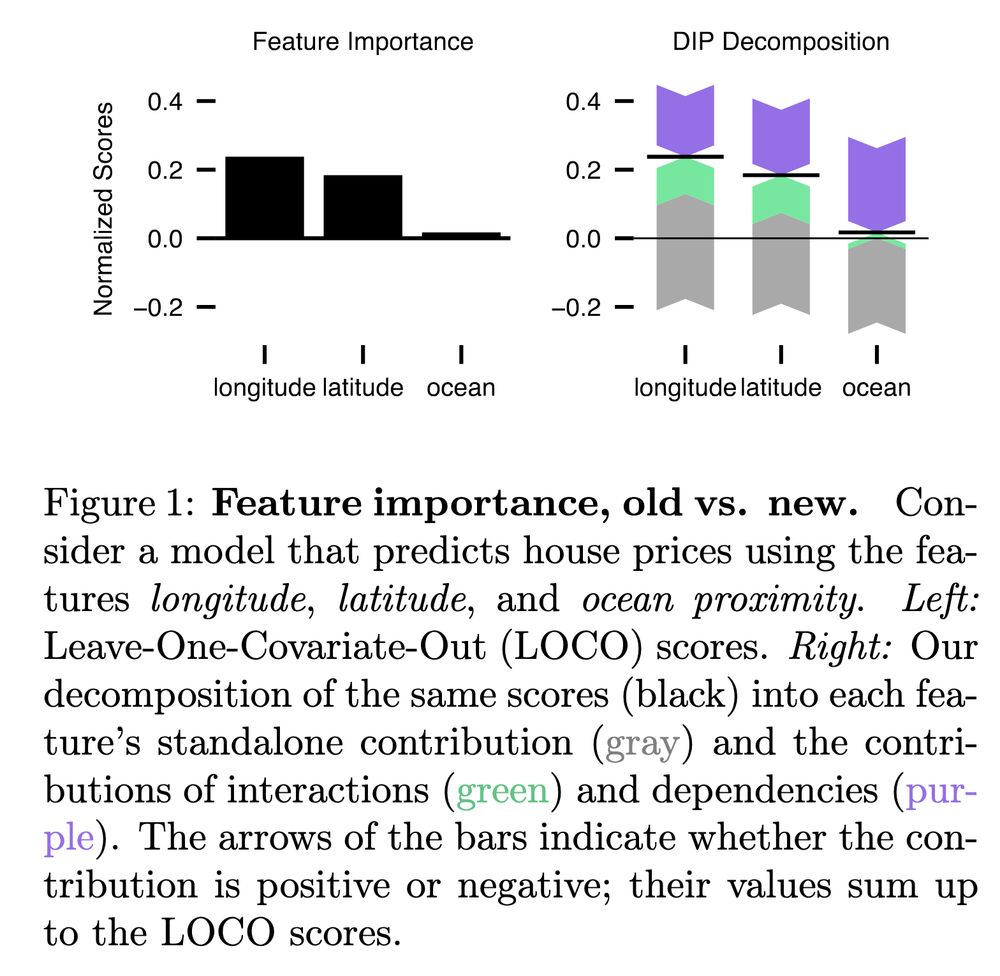

In our recent AISTATS paper, we propose DIP, a novel mathematical decomposition of feature attribution scores that cleanly separates individual feature contributions from the contributions of interactions and dependencies.

07.07.2025 15:40

Dependencies are not only a neglected cooperative force but also complicate the definition and quantification of feature interactions. In particular, the contributions of interactions and dependencies may cancel each other out and must be disentangled to be fully revealed.

07.07.2025 15:39

For example, suppose we predict kidney function (Y) from creatinine (C) and muscle mass (M), and that C reflects Y but also M, which is not linked to Y. Here, M becomes useful once combined with C, as it allows us to subtract irrelevant variation from C. In other words, C&M cooperate via dependence!
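A minimal toy simulation of the creatinine/muscle-mass story, assuming a linear-Gaussian model where C = Y + M + small noise and M is independent of Y. The coefficients, sample size, and the use of OLS R² as a stand-in for "usefulness" are illustrative assumptions; this is not the DIP decomposition from the paper, just a way to see cooperation via dependence in numbers.

```python
import numpy as np

def r_squared(X, y):
    """Share of variance in y explained by an OLS fit of y on the columns of X (plus intercept)."""
    A = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    return 1.0 - resid.var() / y.var()

rng = np.random.default_rng(0)
n = 100_000
y = rng.normal(size=n)                  # kidney function (Y), toy scale
m = rng.normal(size=n)                  # muscle mass (M), independent of Y by assumption
c = y + m + 0.1 * rng.normal(size=n)    # creatinine (C) reflects both Y and M (sign chosen arbitrarily)

r2_c = r_squared(c[:, None], y)                 # C alone: about half the variance
r2_m = r_squared(m[:, None], y)                 # M alone: essentially nothing
r2_cm = r_squared(np.column_stack([c, m]), y)   # C and M together: close to all of it
print(f"C alone: {r2_c:.2f}, M alone: {r2_m:.2f}, C and M: {r2_cm:.2f}")
```

M is useless on its own, yet adding it to C lifts the explained variance from roughly 0.5 to roughly 0.99, because the model can subtract M's share from C.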

07.07.2025 15:39

Determining whether variables are relevant due to cooperation is crucial, as variables that cooperate must be considered jointly to understand their relevance. Notably, features cooperate not only through interactions but also through statistical dependencies, which existing methods neglect.

07.07.2025 15:38

In many XAI applications, it is crucial to determine whether features contribute individually or only when combined. However, existing methods fail to reveal cooperation since they entangle individual contributions with those made via interactions and dependencies. We show how to disentangle them!
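For the other cooperative force, interactions, here is a toy sketch assuming Y = X1 · X2 + small noise with independent, zero-mean X1 and X2. The setup and the R² comparison are illustrative assumptions (not the paper's method): neither feature carries any information alone, since E[Y | X1] = 0, but together they explain almost everything.

```python
import numpy as np

def r_squared(X, y):
    """Share of variance in y explained by an OLS fit of y on the columns of X (plus intercept)."""
    A = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    return 1.0 - resid.var() / y.var()

rng = np.random.default_rng(1)
n = 100_000
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
y = x1 * x2 + 0.1 * rng.normal(size=n)   # pure interaction, no individual effects

# Each feature alone, even with a quadratic term, explains (almost) nothing
r2_x1 = r_squared(np.column_stack([x1, x1**2]), y)
r2_x2 = r_squared(np.column_stack([x2, x2**2]), y)
# Both features plus their product explain (almost) everything
r2_joint = r_squared(np.column_stack([x1, x2, x1 * x2]), y)
print(f"X1 alone: {r2_x1:.3f}, X2 alone: {r2_x2:.3f}, jointly: {r2_joint:.2f}")
```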

07.07.2025 15:37

Feature importance measures can clarify or mislead. PFI, LOCO, and SAGE each answer a different question.
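To make "each answers a different question" concrete, a minimal numpy sketch contrasting PFI and LOCO on two near-duplicate features. The data-generating process, the OLS model, and the in-sample MSE evaluation are all assumptions chosen for brevity; SAGE is left out. PFI asks how much the *fitted* model relies on a feature, while LOCO asks how much predictive information the feature adds once the model may be *refit* without it.

```python
import numpy as np

def fit_ols(X, y):
    """OLS coefficients (intercept first) for y regressed on the columns of X."""
    A = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

def mse(coef, X, y):
    """Mean squared error of the linear model `coef` on (X, y)."""
    A = np.column_stack([np.ones(len(y)), X])
    return np.mean((y - A @ coef) ** 2)

rng = np.random.default_rng(0)
n = 100_000
x1 = rng.normal(size=n)
x2 = x1 + 0.1 * rng.normal(size=n)      # near-duplicate of x1
y = x1 + x2 + 0.5 * rng.normal(size=n)
X = np.column_stack([x1, x2])

coef = fit_ols(X, y)                     # fitted model uses both features (weights near 1 and 1)
base = mse(coef, X, y)

def pfi(j):
    """Permutation feature importance: loss increase of the fixed model when feature j is shuffled."""
    Xp = X.copy()
    Xp[:, j] = rng.permutation(Xp[:, j])
    return mse(coef, Xp, y) - base

def loco(j):
    """Leave-one-covariate-out: loss increase when the model is refit without feature j."""
    X_minus = np.delete(X, j, axis=1)
    return mse(fit_ols(X_minus, y), X_minus, y) - base

for j, name in enumerate(["x1", "x2"]):
    print(f"{name}: PFI={pfi(j):.2f}, LOCO={loco(j):.3f}")
```

Both features look highly important under PFI (the fitted model relies on each), yet LOCO is close to zero for both, because whichever one is dropped, the other carries nearly the same information. Concluding "x2 is irrelevant for predicting y" from LOCO, or "x2 is essential" from PFI, would each be a spurious reading.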

Understand how to pick the right tool and avoid spurious conclusions: mcml.ai/news/2025-03...

@fionaewald.bsky.social @ludwig-bothmann.bsky.social @giuseppe88.bsky.social @gunnark.bsky.social

12.05.2025 12:54

Finally made it to Bluesky as well...

05.05.2025 08:58

And the video of Gunnar's talk is up on YouTube in case you missed it: youtu.be/7MrMjabTbuM

@gunnark.bsky.social

06.05.2025 15:01

I recall you had an iPad -- why did you switch?

27.11.2024 13:52

A starter pack of people working on interpretability / explainability of all kinds, using theoretical and/or empirical approaches.

Reply or DM if you want to be added, and help me reach others!

go.bsky.app/DZv6TSS

14.11.2024 17:00

Tübingen AI

Here's a fledgling starter pack for the AI community in Tübingen. Let me know if you'd like to be added!

go.bsky.app/NFbVzrA

19.11.2024 13:14