Fully-funded International Neuroscience Doctoral Programme 🧠 Champalimaud Foundation, Lisbon, Portugal 🇵🇹

Deadline: Jan 31, 2026

fchampalimaud.org/champalimaud...

Research program spans systems/computational/theoretical/clinical/sensory/motor neuroscience, neuroethology, intelligence, and more!!

16.12.2025 19:20 — 👍 26 🔁 17 💬 1 📌 0

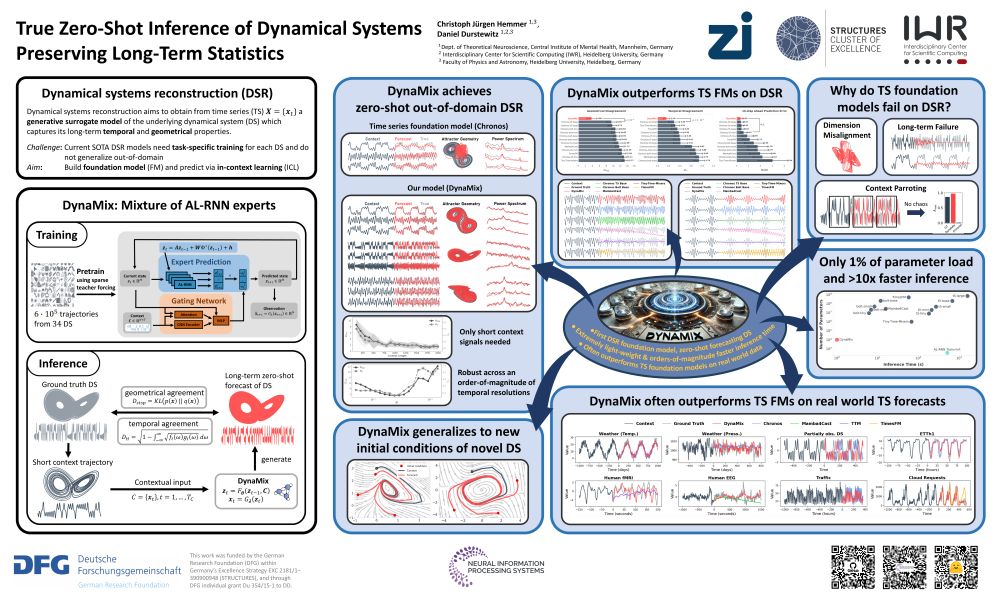

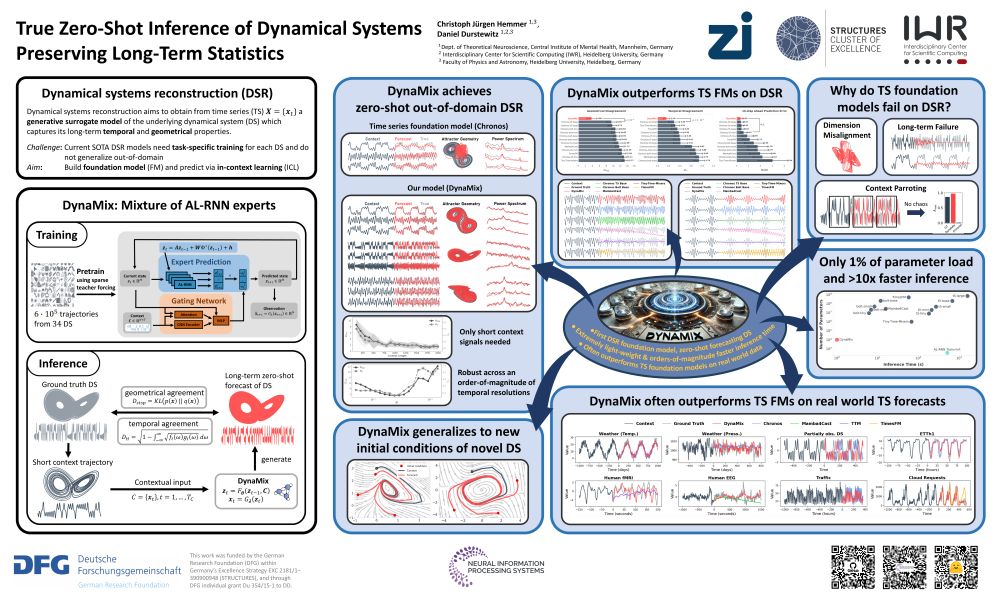

Tomorrow Christoph will present DynaMix, the first foundation model for dynamical systems reconstruction, at #NeurIPS2025 Exhibit Hall C,D,E #2303

05.12.2025 13:28 — 👍 11 🔁 3 💬 0 📌 0

Thanks for sharing! Missed it, but just downloaded it, looking forward to getting into it ...

01.12.2025 14:17 — 👍 3 🔁 0 💬 1 📌 0

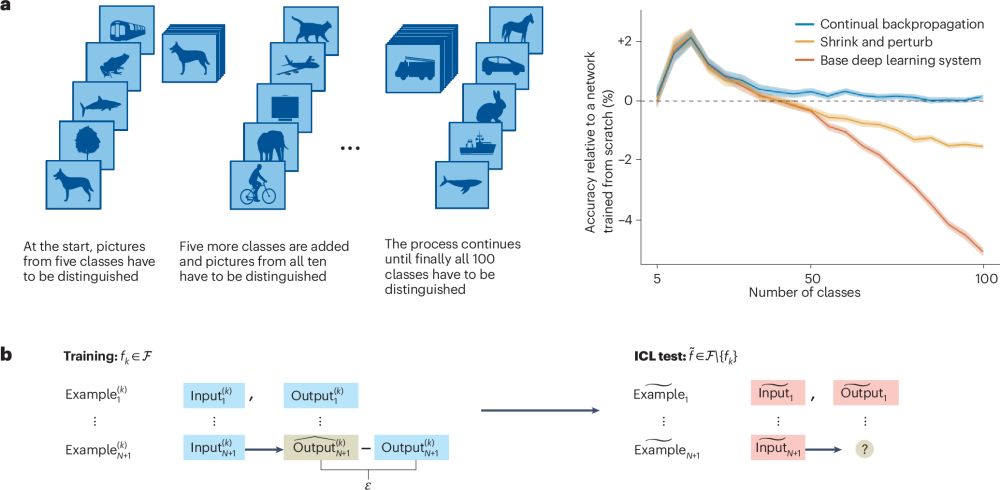

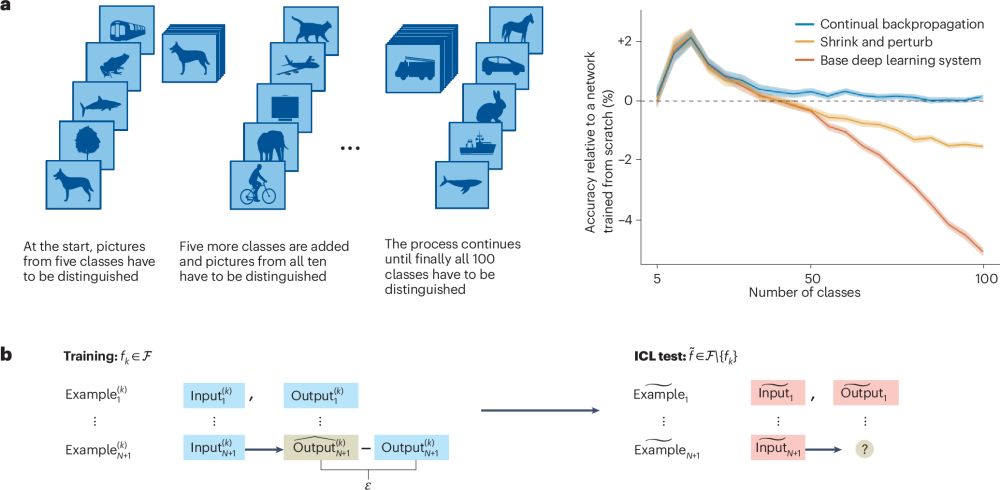

What neuroscience can tell AI about learning in continuously changing environments

Nature Machine Intelligence - Durstewitz et al. explore what artificial intelligence can learn from the brain’s ability to adjust quickly to changing environments. By linking neuroscience...

Unlike current AI systems, animals can quickly and flexibly adapt to changing environments.

This is the topic of our new perspective in Nature MI (rdcu.be/eSeif), where we relate dynamical and plasticity mechanisms in the brain to in-context and continual learning in AI. #NeuroAI

29.11.2025 09:24 — 👍 47 🔁 11 💬 0 📌 1

Revised version of our #NeurIPS2025 paper with full code base in Julia & Python now online, see arxiv.org/abs/2505.13192

28.10.2025 18:27 — 👍 26 🔁 7 💬 0 📌 0

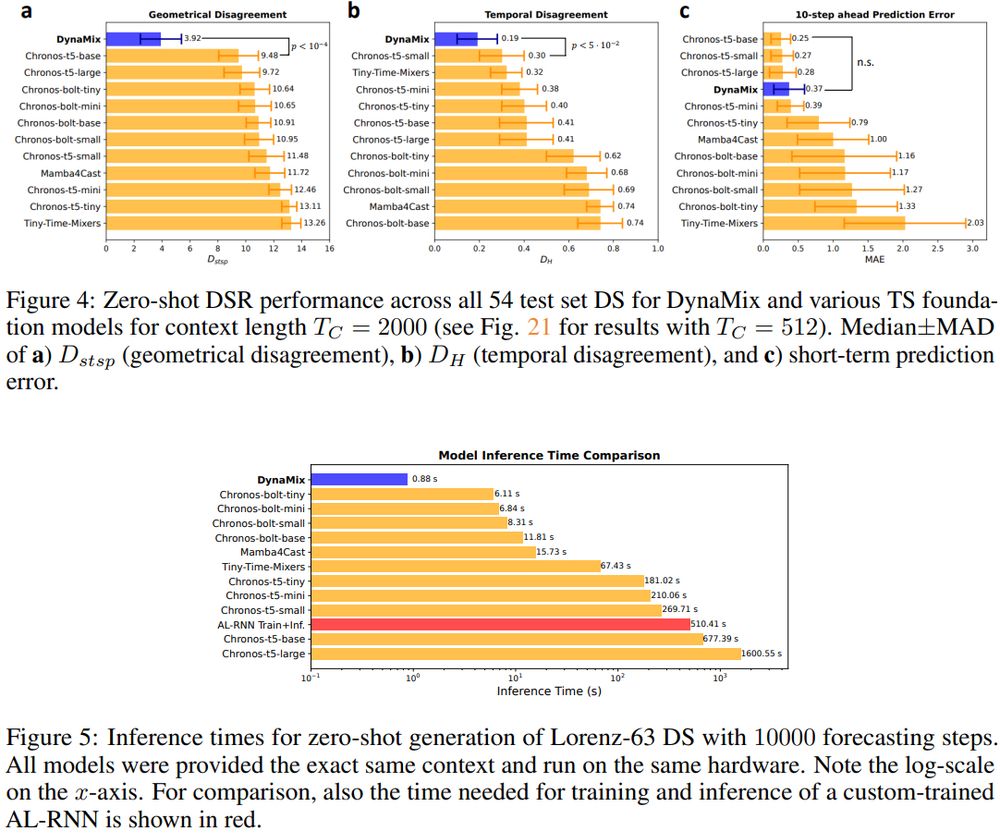

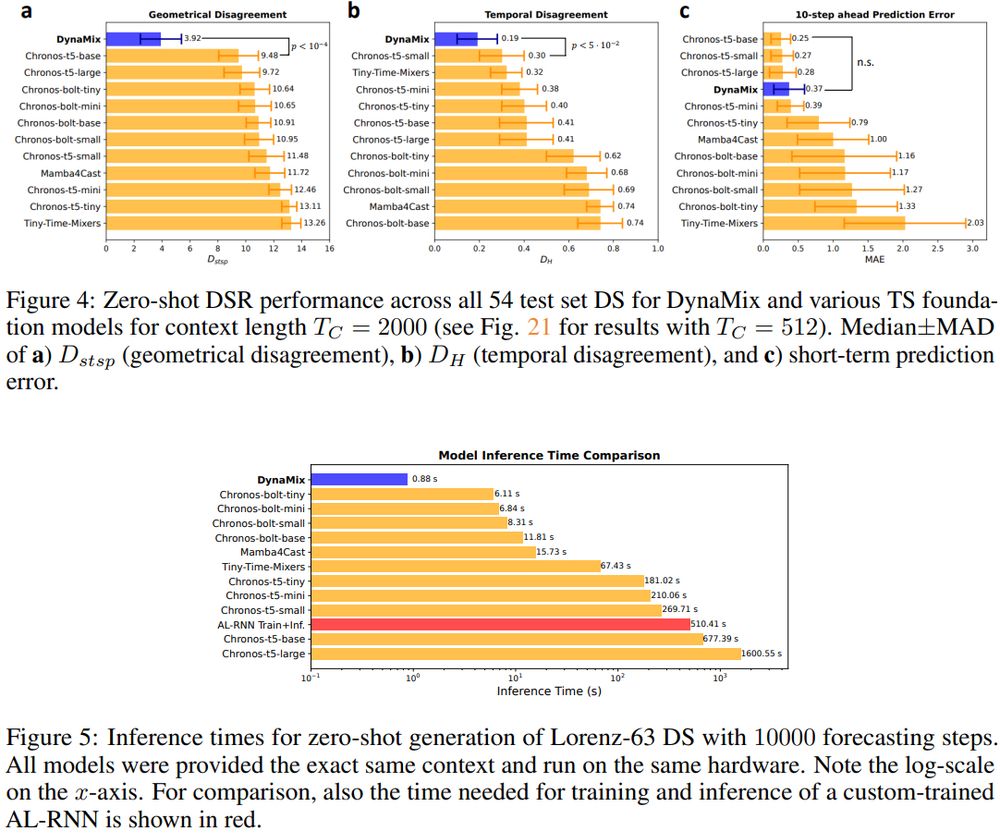

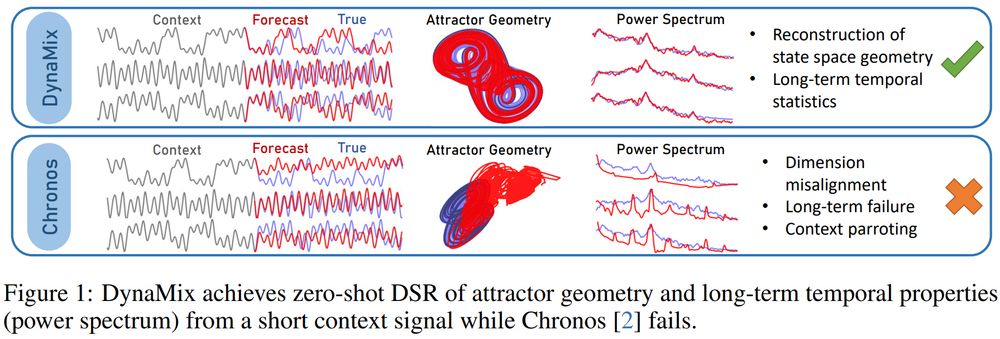

Despite being extremely lightweight (only 0.1% of the params and 0.6% of the training corpus size of the closest competitor), it also outperforms major TS foundation models like Chronos variants on real-world TS forecasting with minimal inference times (0.2%) ...

21.09.2025 09:40 — 👍 2 🔁 0 💬 0 📌 0

Our #AI #DynamicalSystems #FoundationModel DynaMix was accepted to #NeurIPS2025 with outstanding reviews (6555) – the first model that can *zero-shot*, w/o any fine-tuning, forecast the *long-term statistics* of time series given a context. Test it on #HuggingFace:

huggingface.co/spaces/Durst...

21.09.2025 09:40 — 👍 12 🔁 4 💬 1 📌 1

We have openings for several fully-funded positions (PhD & PostDoc) at the intersection of AI/ML, dynamical systems, and neuroscience within a BMFTR-funded Neuro-AI consortium, at Heidelberg University & Central Institute of Mental Health:

www.einzigartigwir.de/en/job-offer...

More info below ...

15.08.2025 07:45 — 👍 7 🔁 1 💬 1 📌 0

YouTube video by Gerstner Lab

From Spikes To Rates

Is it possible to go from spikes to rates without averaging?

We show how to exactly map recurrent spiking networks into recurrent rate networks, with the same number of neurons. No temporal or spatial averaging needed!

Presented at Gatsby Neural Dynamics Workshop, London.

08.08.2025 15:25 — 👍 61 🔁 17 💬 2 📌 1

Got prov. approval for 2 major grants in Neuro-AI & Dynamical Systems Reconstruction, on learning & inference in non-stationary environments, out-of-domain generalization, and DS foundation models. To all AI/math/DS enthusiasts: Expect job announcements (PhD/PostDoc) soon! Feel free to get in touch.

13.07.2025 06:23 — 👍 34 🔁 8 💬 0 📌 0

What Neuroscience Can Teach AI About Learning in Continuously Changing Environments

Modern AI models, such as large language models, are usually trained once on a huge corpus of data, potentially fine-tuned for a specific task, and then deployed with fixed parameters. Their training ...

We wrote a little #NeuroAI piece about in-context learning & neural dynamics vs. continual learning & plasticity, both mechanisms to flexibly adapt to changing environments:

arxiv.org/abs/2507.02103

We relate this to non-stationary rule learning tasks with rapid performance jumps.

Feedback welcome!

06.07.2025 10:18 — 👍 36 🔁 8 💬 0 📌 0

Yes I think so!

04.07.2025 05:50 — 👍 2 🔁 0 💬 0 📌 0

CNS*2025 Florence: NeuroXAI: Explainable AI for Understandi...

View more about this event at CNS*2025 Florence

Happy to discuss our work on parsimonious & math. tractable RNNs for dynamical systems reconstruction next week at

cns2025florence.sched.com/event/1z9Mt/...

03.07.2025 12:40 — 👍 9 🔁 0 💬 1 📌 0

Fantastic work by Florian Bähner, Hazem Toutounji, Tzvetan Popov and many others - I'm just the person advertising!

26.06.2025 15:30 — 👍 0 🔁 0 💬 0 📌 0

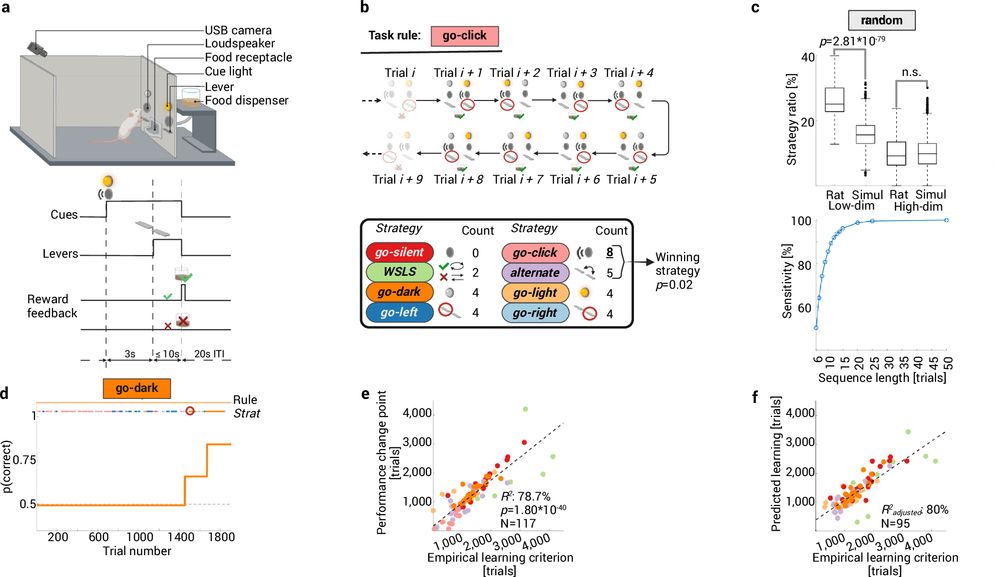

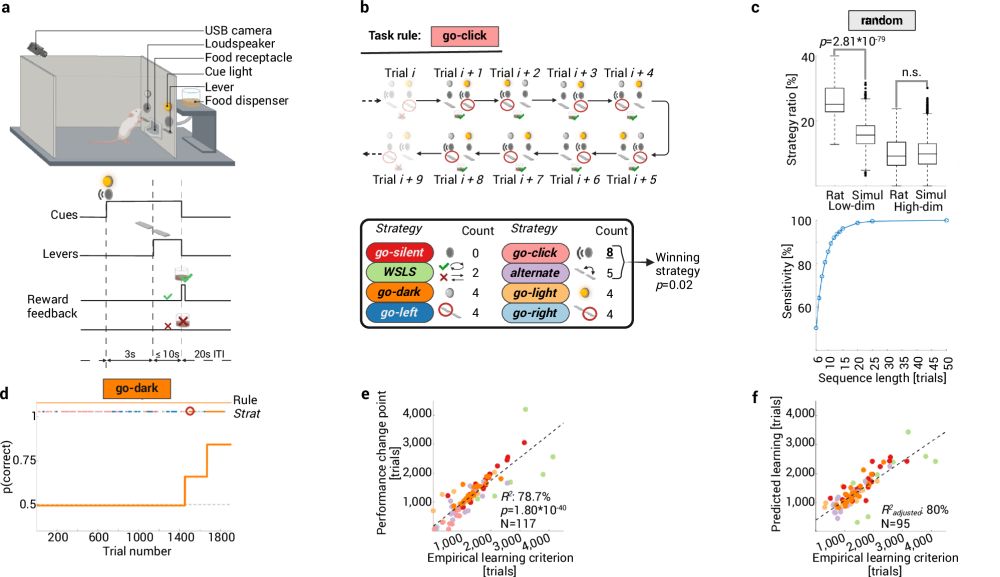

Abstract rule learning promotes cognitive flexibility in complex environments across species

Nature Communications - Whether neurocomputational mechanisms that speed up human learning in changing environments also exist in other species remains unclear. Here, the authors show that both...

How do animals learn new rules? By systematically testing diff. behavioral strategies, guided by selective attn. to rule-relevant cues: rdcu.be/etlRV

Akin to in-context learning in AI, strategy selection depends on the animals' "training set" (prior experience), with similar repr. in rats & humans.

26.06.2025 15:30 — 👍 8 🔁 2 💬 1 📌 0

What a line up!! With Lorenzo Gaetano Amato, Demian Battaglia, @durstewitzlab.bsky.social, @engeltatiana.bsky.social, @seanfw.bsky.social, Matthieu Gilson, Maurizio Mattia, @leonardopollina.bsky.social, Sara Solla.

21.06.2025 10:24 — 👍 5 🔁 2 💬 1 📌 0

Into population dynamics? Coming to #CNS2025 but not quite ready to head home?

Come join us at the Symposium on "Neural Population Dynamics and Latent Representations"! 🧠

📆 July 10th

📍 Scuola Superiore Sant’Anna, Pisa (and online)

👉 Free registration: neurobridge-tne.github.io

#compneuro

21.06.2025 10:24 — 👍 22 🔁 11 💬 1 📌 1

I’m really looking forward to this so much! In wonderful Pisa!

21.06.2025 12:18 — 👍 1 🔁 0 💬 0 📌 0

Just heading back from a fantastic workshop on neural dynamics at Gatsby/London, organized by Tatiana Engel, Bruno Averbeck, & Peter Latham.

Enjoyed seeing so many old friends, Memming Park, Carlos Brody, Wulfram Gerstner, Nicolas Brunel & many others …

Discussed our recent DS foundation models …

19.06.2025 11:37 — 👍 6 🔁 0 💬 0 📌 0

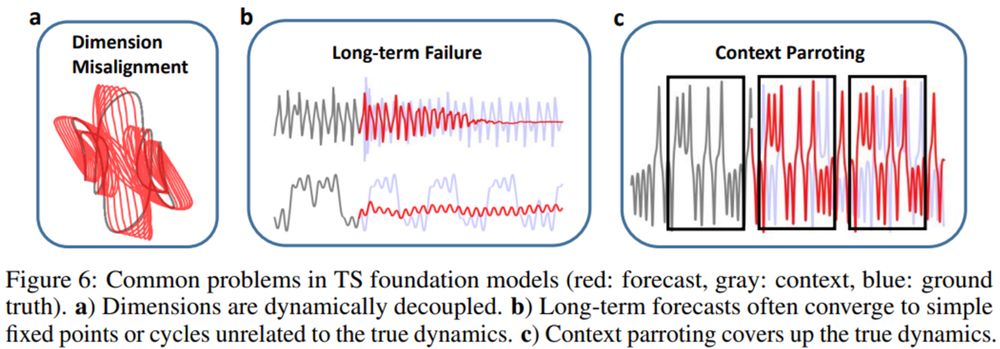

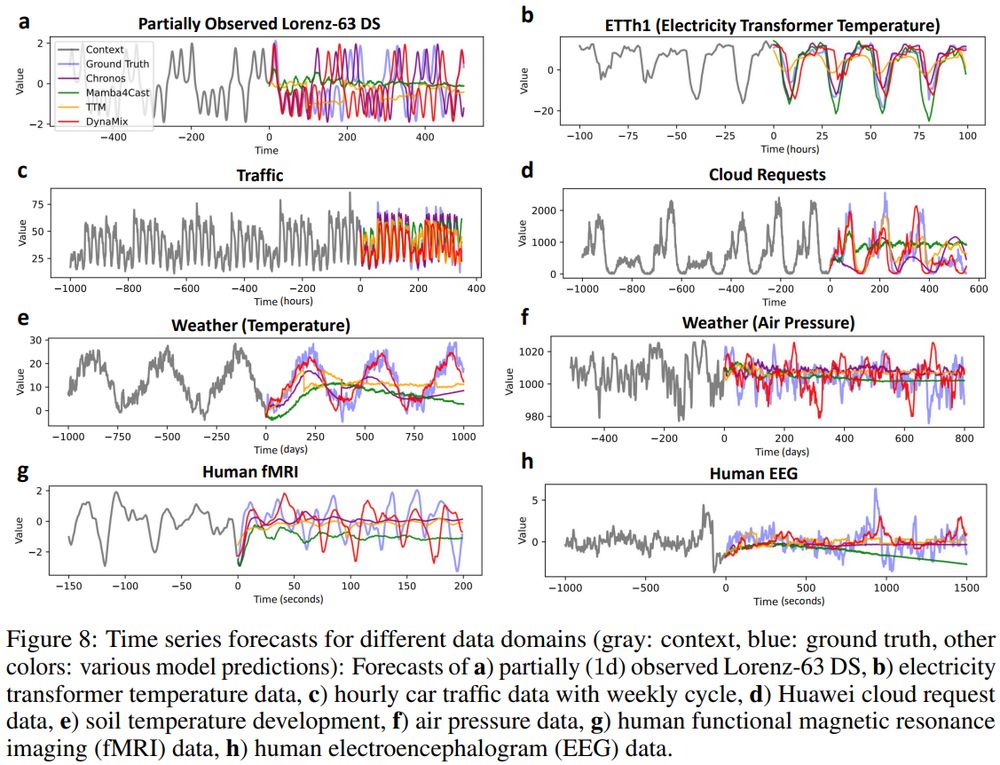

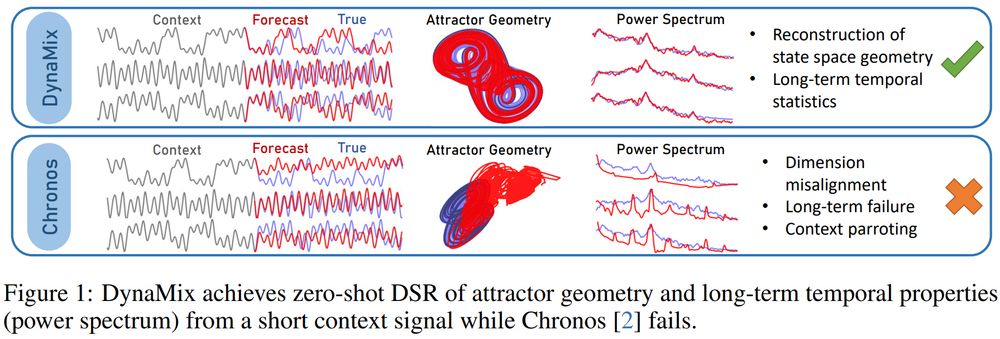

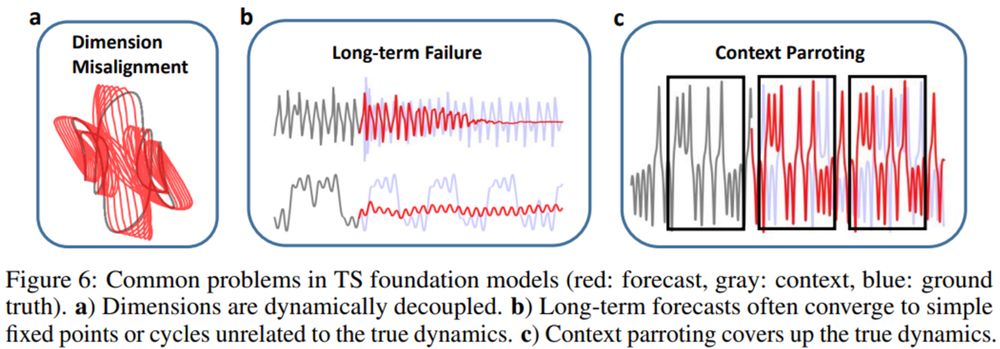

We dive a bit into the reasons why current time series FMs not trained for DS reconstruction fail, and conclude that a DS perspective on time series forecasting & models may help to advance the #TimeSeriesAnalysis field.

(6/6)

20.05.2025 14:15 — 👍 2 🔁 0 💬 0 📌 0

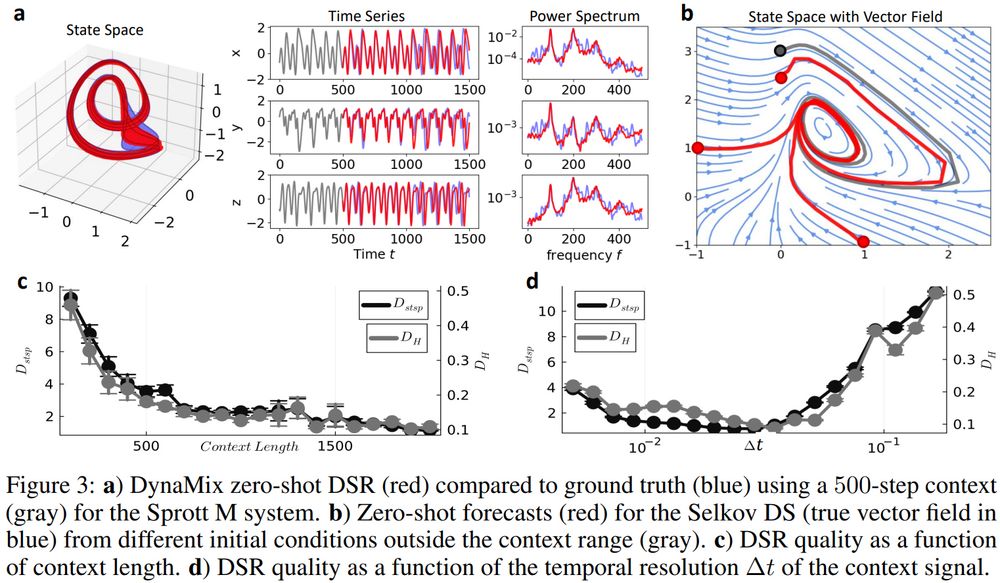

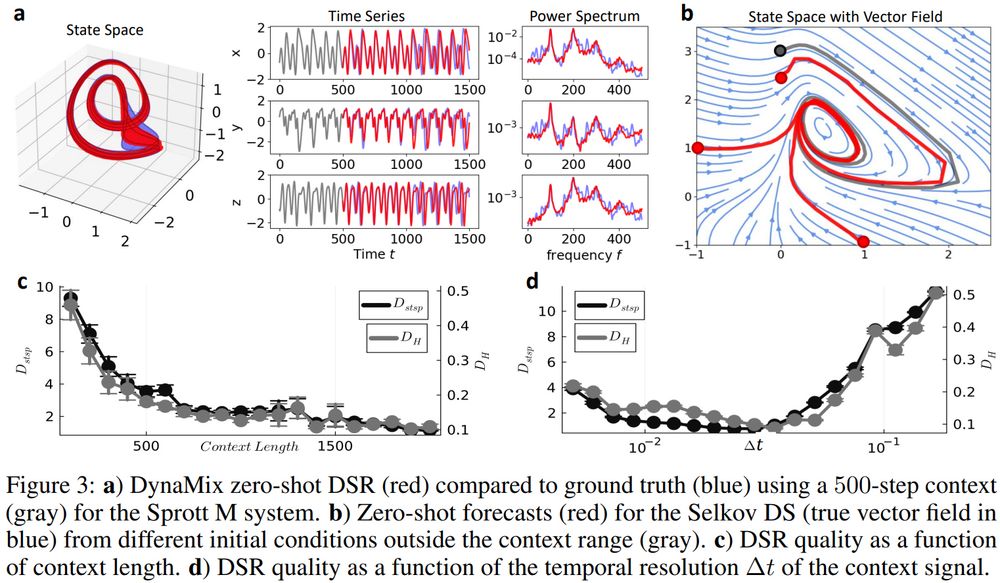

Remarkably, it not only generalizes zero-shot to novel DS, but it can even generalize to new initial conditions and regions of state space not covered by the in-context information.

(5/6)

20.05.2025 14:15 — 👍 2 🔁 0 💬 1 📌 0

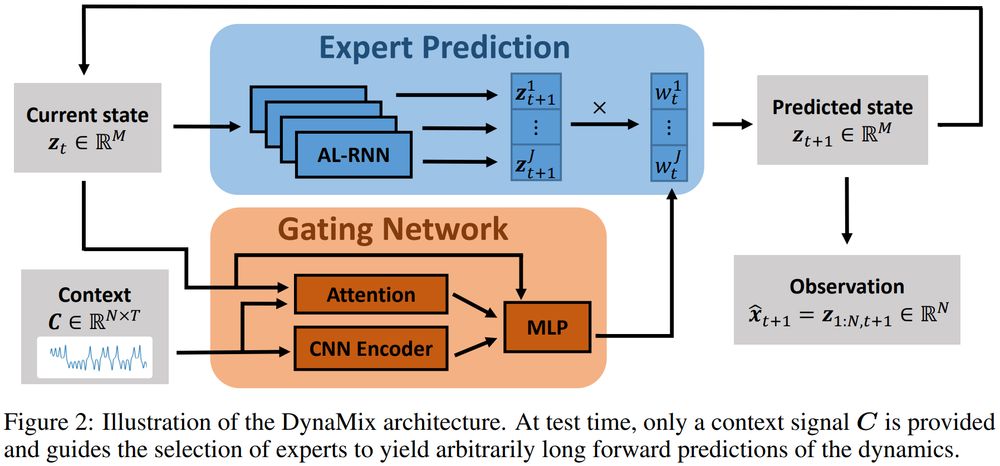

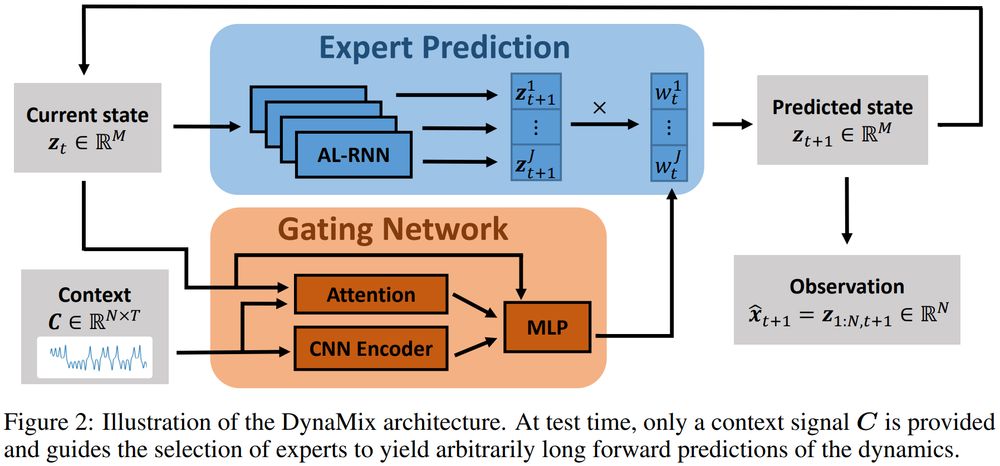

And no, it’s neither based on Transformers nor Mamba – it’s a new type of mixture-of-experts architecture based on the recently introduced AL-RNN (proceedings.neurips.cc/paper_files/...), specifically trained for DS reconstruction.

#AI

(4/6)

20.05.2025 14:15 — 👍 0 🔁 0 💬 1 📌 0

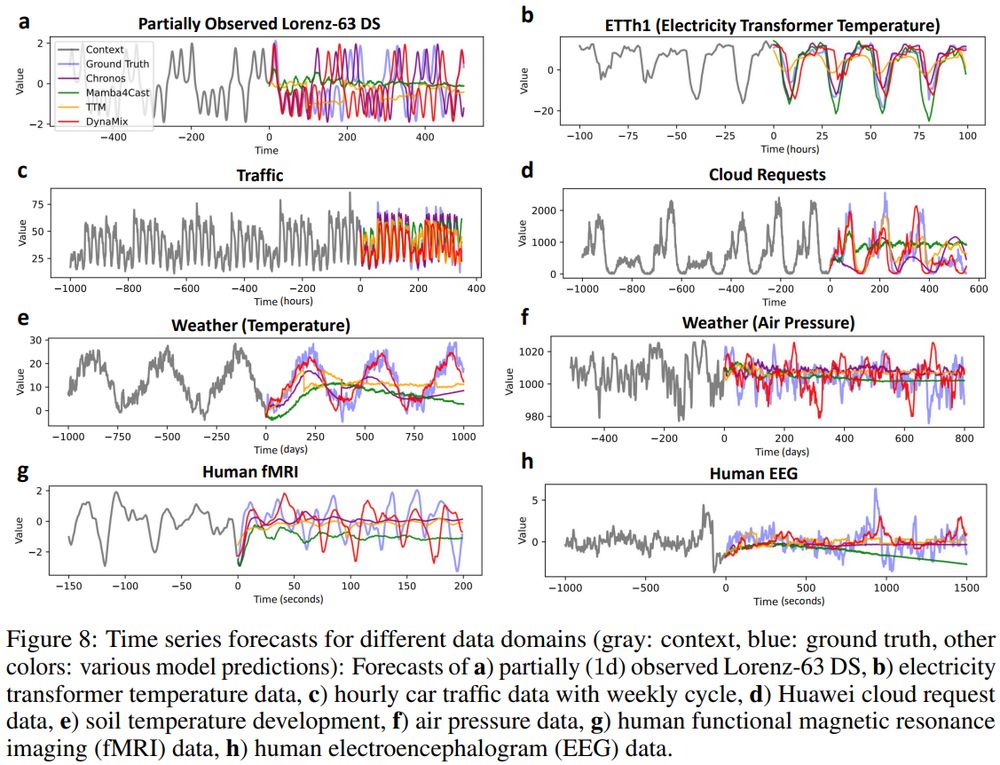

It often even outperforms TS FMs on forecasting diverse empirical time series, like weather, traffic, or medical data, typically used to train TS FMs.

This is surprising, cos DynaMix’s training corpus consists *solely* of simulated limit cycles & chaotic systems, no empirical data at all!

(3/6)

20.05.2025 14:15 — 👍 0 🔁 0 💬 1 📌 0

Unlike TS FMs, DynaMix exhibits #ZeroShotLearning of long-term stats of unseen DS, incl. attractor geometry & power spectrum, w/o *any* re-training, just from a context signal.

It does so with only 0.1% of the parameters of Chronos & 10x faster inference times than the closest competitor.

(2/6)

20.05.2025 14:15 — 👍 0 🔁 0 💬 1 📌 0

Can time series (TS) #FoundationModels (FM) like Chronos zero-shot generalize to unseen #DynamicalSystems (DS)?

No, they cannot!

But *DynaMix* can, the first TS/DS FM based on principles of DS reconstruction, capturing the long-term evolution of out-of-domain DS: arxiv.org/pdf/2505.131...

(1/6)

20.05.2025 14:15 — 👍 11 🔁 3 💬 1 📌 1

Home | Neuroscience | World Wide Theoretical Neuroscience Seminar

WWTNS is a weekly digital seminar on Zoom targeting the theoretical neuroscience community. Its aim is to be a platform to exchange ideas among theoreticians.

I'm presenting our lab's work on *learning generative dynamical systems models from multi-modal and multi-subject data* in the world-wide theoretical neurosci seminar Wed 23rd, 11am ET:

www.wwtns.online

--> incl. recent work on building foundation models for #dynamical-systems reconstruction #AI 🧪

21.04.2025 15:38 — 👍 11 🔁 3 💬 0 📌 1

A Nature Research journal on AI, robotics and machine learning

@natureportfolio.bsky.social

nature.com/natmachintell

Mobilizing the fight for science and democracy, because Science is for everyone 🧪🌎

The hub for science activism!

Learn more ⬇️

http://linktr.ee/standupforscience

Computational Neuroscientist & Neurotechnologist

Researcher in all things Neuro

Also at mastodon.social/@matrig

Neuroscientist, both computational and experimental. Also, parent of a teenager :) . All posts and opinions are in my personal capacity.

professional website: https://brodylab.org

Postdoc computational, clinical, network neuroscience and all things cognitive control || holmeslab.rutgers.edu/ sites.rutgers.edu/cahbir/ formerly Yale Psychology || PhD: colelab.org/ || carrisacocuzza.wixsite.com/neuro

Director TReNDS @ GSU/GATech/Emory, Multimodal brain imaging & genetics, signal & image processing, networks, ML/AI, geek, husband, father of three, lover of purple.

Professor, director of neuroscience lab at Rutgers University – neuroimaging, cognitive control, network neuroscience

Writing book “Brain Flows: How Network Dynamics Compose The Human Mind” for Princeton University Press

https://www.colelab.org

Networks, neuroscience, control theory, curiosity, science of science

🏳️‍🌈🏳️‍⚧️ J Peter Skirkanich Professor, University of Pennsylvania

Leader neuroinformatics.org.uk | Computational Psychiatry | Computational Neurology | Author of 'Changing Connectomes' https://mitpress.mit.edu/9780262044615/

https://www.dynamic-connectome.org/

Assistant Professor, Drexel University | Cognitive Neuroscience | Spontaneous Thought | kucyilab.com

Director, Yale Imaging & Psychopharmacology (YIP), Assoc Prof in Psychiatry & Child Study, addiction, mood, neuroimaging, tries to predict clinical stuff

Professor, Semel Institute for Neuroscience and Behavior, UCLA (https://profiles.ucla.edu/lucina.uddin)

Director, Brain Connectivity and Cognition Lab (https://teams.semel.ucla.edu/bccl)

Available for academic career advice. I never said *good* advice.

Associate Professor, AI and Society Dept, Institute for Artificial Intelligence and Data Science, Neuroscience Program, University at Buffalo, SUNY

associate prof at university of minnesota | masonic institute for the developing brain | brain networks & behavior lab PI | views my own | oberlin to iu to penn to iu to umn | https://www.brainnetworkslab.com/

Associate Professor, Psychological & Brain Sciences, Johns Hopkins University.

theoretical neuroscience; open-ended cognition; memory

Neuroimaging, computational modeling and neuromodulation

Researcher @ QIMR Berghofer, Brisbane, Australia

https://ljhearne.github.io/

PhD Student https://pni-lab.github.io/

Modeling macro-scale brain dynamics with ANNs! 🧠

Doing cognitive neuroscience at Monash University, Melbourne, Australia. We investigate brains, networks, genes, models, cognition & disorders.

Computational Neuroscientist, NeuroAI, Causality. Monash, UCL, CIFAR. Lab: https://comp-neuro.github.io/