Robert Porsch

@rmporsch.bsky.social

The same can probably also be said of many compliance and risk functions in major companies.

15.07.2025 11:48 — 👍 1 🔁 0 💬 0 📌 0

There are just too many options out there now. I think most people have become desensitized. It's just another LLM in the end.

15.07.2025 11:45 — 👍 0 🔁 0 💬 0 📌 0

Yeah, the novelty isn't there anymore compared to the DeepSeek moment. Maybe it was even expected by many in the Chinese tech industry.

14.07.2025 16:09 — 👍 1 🔁 0 💬 0 📌 0

When I read these articles about the new browsers from Perplexity or OpenAI, I really want to agree, but hyping it all up like this makes that very difficult. Calling it an extension of consciousness is a bit of a stretch. It's an untested product.

hybridhorizons.substack.com/p/when-ai-be...

I thought most papers of this size just use the name of the consortium, like the human genome project did with many of its papers

13.07.2025 15:34 — 👍 3 🔁 0 💬 0 📌 0

A new definition for AGI just dropped, and it is a bad one.

12.07.2025 18:04 — 👍 169 🔁 27 💬 8 📌 5

I genuinely loved this read about GitHub code search! 💻

Read "The technology behind GitHub’s new code search." on their blog!

Great article explaining how vLLM is using KV caching under the hood

www.ubicloud.com/blog/life-of...

Do you have the link at hand? :)

31.01.2025 04:56 — 👍 2 🔁 0 💬 1 📌 0

“They said it could not be done”. We’re releasing Pleias 1.0, the first suite of models trained on open data (either permissibly licensed or uncopyrighted): Pleias-3b, Pleias-1b and Pleias-350m, all based on the two trillion tokens set from Common Corpus.

05.12.2024 16:39 — 👍 248 🔁 85 💬 11 📌 19

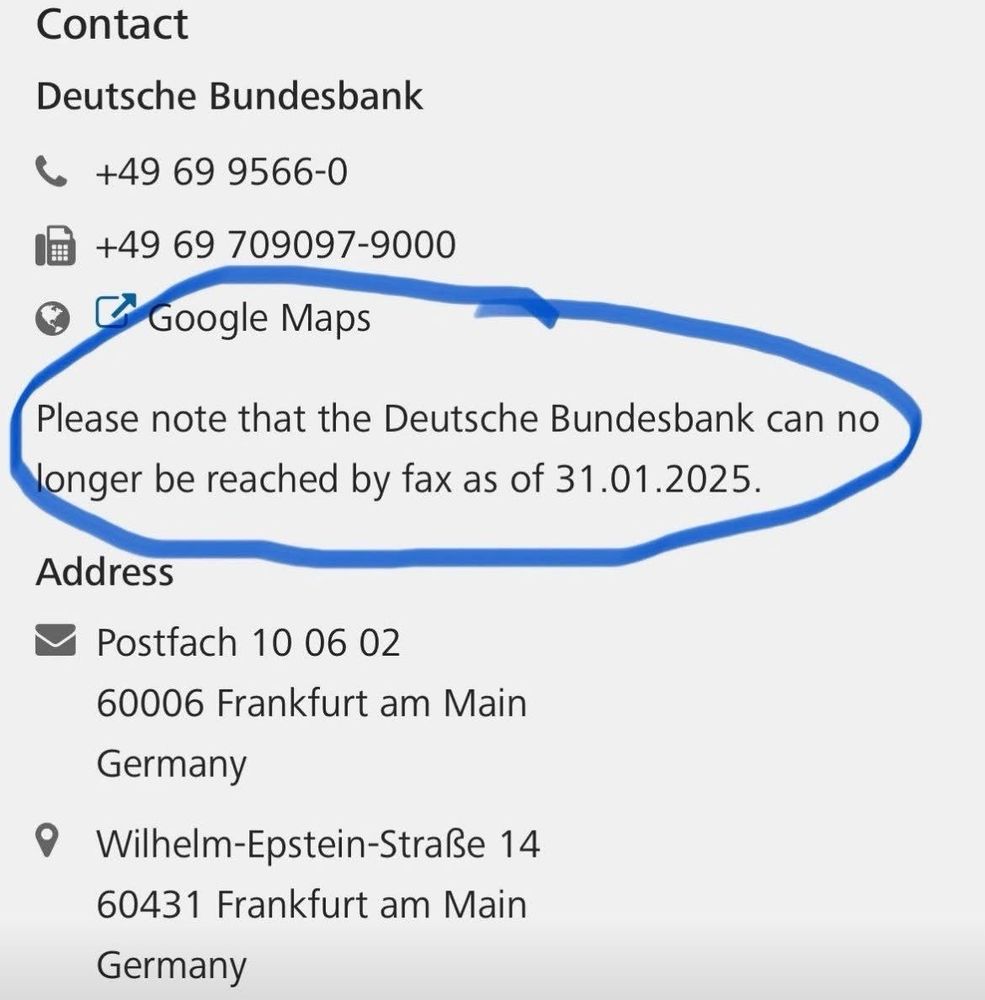

Please note that the Deutsche Bundesbank can no longer be reached by fax as of 31.01.2025.

Germany has fallen.

26.11.2024 12:58 — 👍 3328 🔁 718 💬 87 📌 127

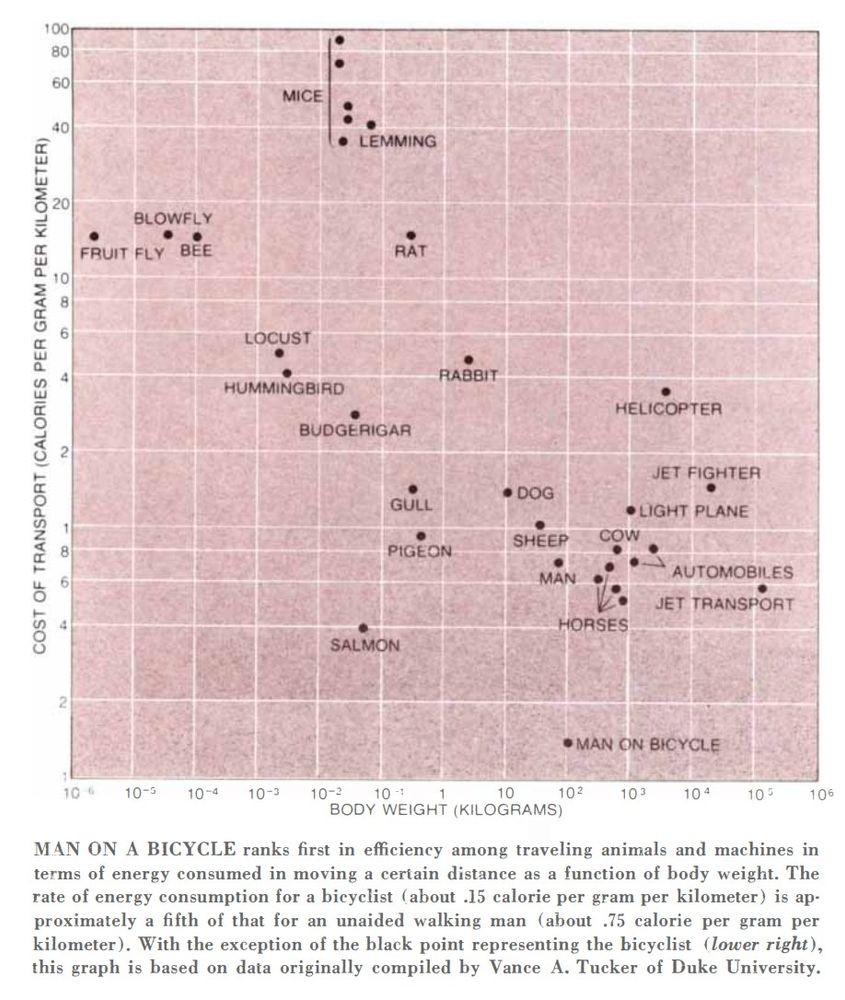

A graph titled "Cost of Transport" showing the relationship between body weight (in kilograms) and energy consumption for distance traveled (calories per gram per kilometer) for various animals and machines. It highlights that a person on a bicycle ranks first in efficiency.

A person on a bicycle is by far the most energy-efficient among animals and machines per distance traveled relative to body weight. The bicycle is magic.

www.jstor.org/stable/24923...

I guess asking trusted people is the real definition of a peer review then

25.11.2024 04:30 — 👍 0 🔁 0 💬 0 📌 0

Wondering how many enterprise LLM applications use explicit user feedback to improve, given that only 3% of users actually use those thumbs up/down buttons. I have seen people force users to provide feedback at random times, however. Implicit feedback such as session length seems easier to use.

24.11.2024 15:13 — 👍 1 🔁 0 💬 0 📌 0

Interesting take on the actual adoption of AI-assisted coding. It suggests that only 5% of professional developers who have access to GitHub Copilot use the more advanced features; most (63%) use autocomplete only.

youtube.com/watch?v=Up6W...

He wanted to test how models performed when subjected to a new tokenization scheme without re-training the model.

Modifying Llama 3’s tokenizer to tokenize numbers from right to left (R2L) instead of left to right (L2R) with just a few lines of code 🧑‍💻. This affects the grouping of numbers by three:
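To make the difference concrete, here is a minimal sketch of what L2R versus R2L grouping does to a digit string. It assumes digit runs are split into chunks of at most three digits, as the post describes; it does not call the real Llama 3 tokenizer, and `group_digits` is a hypothetical helper name used only for illustration.

```python
def group_digits(number: str, size: int = 3, right_to_left: bool = False) -> list[str]:
    """Split a digit string into chunks of at most `size` digits.

    Hypothetical helper for illustration only; it mimics the grouping
    behaviour described in the post, not the actual tokenizer code.
    """
    if not number.isdigit():
        raise ValueError("expected a string of digits")

    if right_to_left:
        # R2L: the short leftover chunk (if any) ends up at the front,
        # so chunk boundaries line up with thousands separators.
        head = len(number) % size
        chunks = [number[:head]] if head else []
        chunks += [number[i:i + size] for i in range(head, len(number), size)]
        return chunks

    # L2R: chunks are filled from the left, so the leftover ends up at the back.
    return [number[i:i + size] for i in range(0, len(number), size)]


if __name__ == "__main__":
    print(group_digits("1234567"))                      # L2R grouping
    print(group_digits("1234567", right_to_left=True))  # R2L grouping
```

With R2L grouping the chunks match how people usually write large numbers (1,234,567), which is the property the experiment relies on.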

It's just so freaking fast!

24.11.2024 05:15 — 👍 0 🔁 0 💬 0 📌 0

Wish it was also available in Python.

24.11.2024 04:33 — 👍 0 🔁 0 💬 0 📌 0

Many of the current LLM eval tools, such as deepeval, mlflow, and evidently.ai, are really great! However, I find it hard to choose, as most of the tests are trivial and many frameworks feel like they want to lock me in without providing much added value.

24.11.2024 04:31 — 👍 2 🔁 0 💬 0 📌 0