pytorch 2.7 is out!

- Mega Cache looks nice, unclear whether those modules get cached by legacy mechanisms too

- foreach map looks good for optimizers

- trainable biases mean you can now train T5 with flex attention

- prologue fusion is hype

- context parallel brings ring attention

pytorch.org/blog/pytorch...

24.04.2025 14:38 — 👍 2 🔁 0 💬 0 📌 0

pytorch 2.6 is out!

highlights:

- flex attention: better compilation of blockmask creation, better support for dynamic shapes

- cuDNN SDPA: fixes for memory layout

- CUDA 12.6

- python 3.13

- MaskedTensor memory leak fix

30.01.2025 00:41 — 👍 2 🔁 1 💬 0 📌 0

yeah but how do I type two hyphens

24.01.2025 21:08 — 👍 1 🔁 0 💬 0 📌 0

fish, dairy, beyond meat burgers

24.01.2025 01:21 — 👍 0 🔁 0 💬 0 📌 0

pytorch 2.6 final RC is out, promoting to stable in a couple of days!

mostly I'm looking forward to better compilation of flex block mask creation, and better support for flex attention on dynamic shapes.

there are also fixes for memory layout in cuDNN SDPA.

dev-discuss.pytorch.org/t/pytorch-re...

20.01.2025 19:27 — 👍 5 🔁 0 💬 0 📌 0

Claude does SVG memes

14.01.2025 01:41 — 👍 2 🔁 0 💬 0 📌 0

drink cups should put the hole in the bottom.

heat rises. "the top is cool enough to drink" implies "everything below it is colder".

drinking from the bottom lets us access safe temperatures earlier and before the whole cup cools.

04.01.2025 23:37 — 👍 3 🔁 0 💬 0 📌 0

running npm version from a subdirectory of a git repository is literally an unsolved problem in 2024

github.com/npm/cli/issu...

04.01.2025 20:37 — 👍 1 🔁 0 💬 0 📌 0

I should just get this tattooed, I never remember how to find it

pip install "huggingface_hub[hf_transfer]"

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download
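
For anyone who would rather script it, here is a minimal Python sketch of the same second step. Only the `HF_HUB_ENABLE_HF_TRANSFER` variable and the `huggingface-cli download` command come from the post; the helper names and the `some-org/some-model` repo id are placeholders made up for illustration.

```python
import os
import subprocess  # only needed for the commented-out usage at the bottom


def hf_transfer_env(base_env=None):
    """Return a copy of the environment with hf_transfer downloads switched on."""
    env = dict(os.environ if base_env is None else base_env)
    env["HF_HUB_ENABLE_HF_TRANSFER"] = "1"
    return env


def download_command(repo_id):
    """Build the CLI invocation for a given repository id."""
    return ["huggingface-cli", "download", repo_id]


# Usage (not executed here; "some-org/some-model" is a hypothetical repo id):
# subprocess.run(download_command("some-org/some-model"),
#                env=hf_transfer_env(), check=True)
```

Building the environment as a copy keeps the flag scoped to the one subprocess instead of leaking into the rest of the session.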

01.01.2025 23:50 — 👍 2 🔁 0 💬 0 📌 0

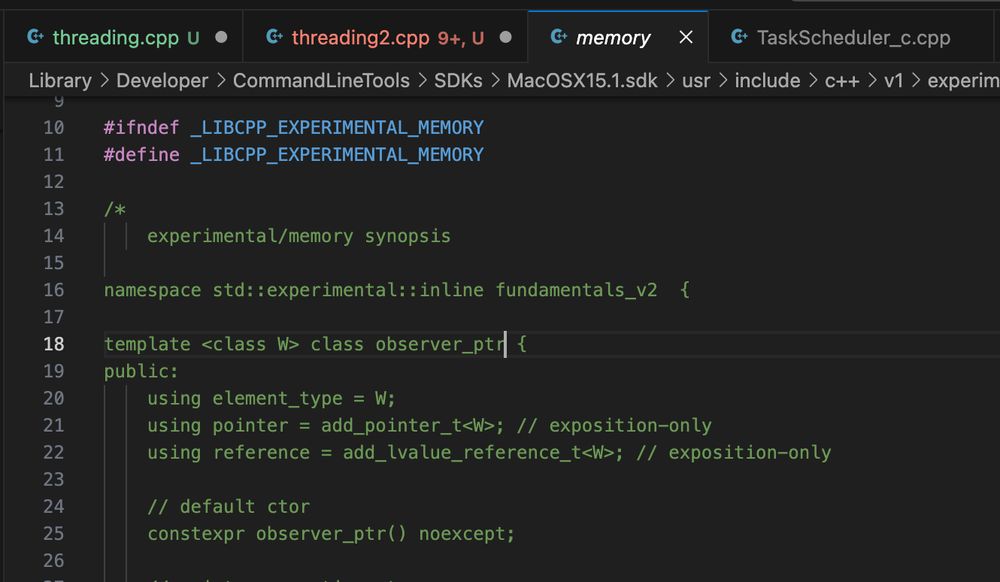

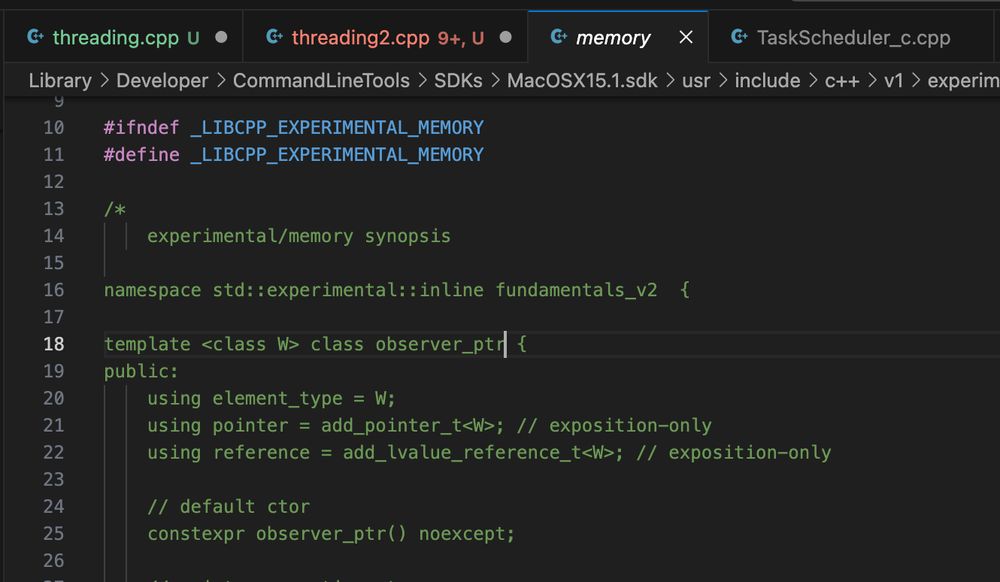

when the standard library comments out std::experimental::observer_ptr just to stop you having fun

22.12.2024 02:09 — 👍 0 🔁 0 💬 0 📌 0

NovelAI v4 makes dreams come true

21.12.2024 21:49 — 👍 4 🔁 0 💬 0 📌 0

when you're measuring torch compile warmup "oh 11 secs that's not so bad"

then you realize it was 111 secs

19.12.2024 18:00 — 👍 2 🔁 0 💬 0 📌 0

Claude's alright

17.12.2024 02:51 — 👍 2 🔁 0 💬 0 📌 0

if you care about multiprocess debugging in VSCode please upvote this issue

so we don't have to click terminate a hundred times

github.com/microsoft/vs...

16.12.2024 22:26 — 👍 3 🔁 0 💬 0 📌 0

GitHub - NVlabs/edm2: EDM2 and Autoguidance -- Official PyTorch implementation

the EDM2 repository had an Autoguidance update 4 days ago demonstrating their NeurIPS Oral paper "Guiding a Diffusion Model with a Bad Version of Itself"

github.com/NVlabs/edm2

13.12.2024 00:25 — 👍 3 🔁 0 💬 0 📌 0

torch profiler record_function spans are not free, even when you're not profiling a model. the model I'm benchmarking trained 2.5% faster when I commented them all out.
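
One way to keep the spans in the code without paying for them on every run is to hand back a no-op context manager unless profiling is requested. This is a rough sketch, not something measured against the 2.5% figure above; the `span` helper and the `ENABLE_PROFILER_SPANS` variable are assumptions invented for the example.

```python
import contextlib
import os

# Hypothetical opt-in switch; the variable name is an assumption.
PROFILING_ENABLED = os.environ.get("ENABLE_PROFILER_SPANS", "0") == "1"


def span(name, enabled=None):
    """Return a record_function span when enabled, else a no-op context manager."""
    if enabled is None:
        enabled = PROFILING_ENABLED
    if not enabled:
        return contextlib.nullcontext()
    # Imported lazily so the disabled path never touches the profiler.
    from torch.profiler import record_function
    return record_function(name)


# Usage inside a model's forward pass:
# with span("attention"):
#     out = attn(x)
```

The no-op path still costs a function call and a context manager enter/exit, so it is cheaper than a real span but not strictly free.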

12.12.2024 18:23 — 👍 2 🔁 0 💬 0 📌 0

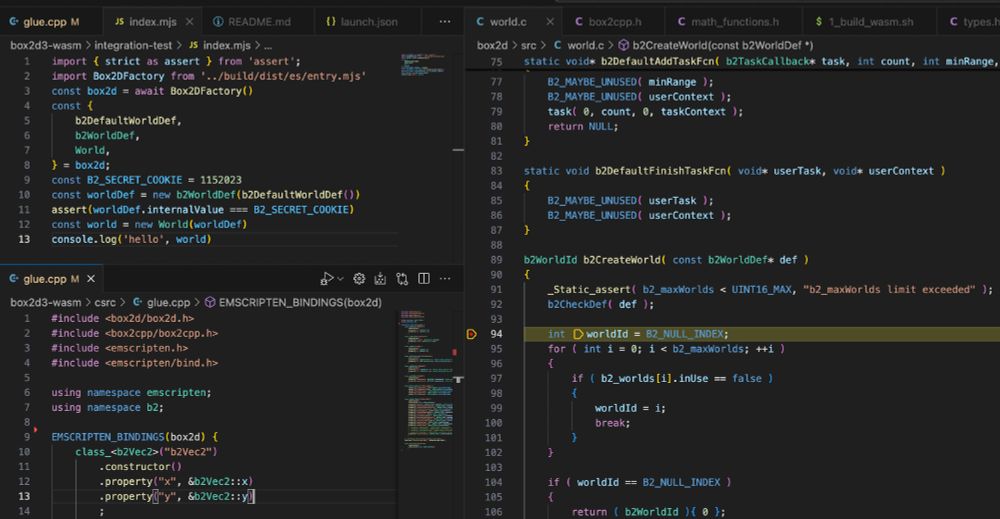

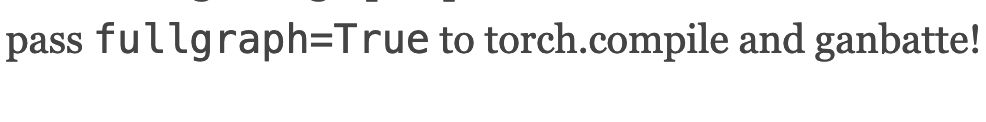

I thought this was about Box2D v3

09.12.2024 04:30 — 👍 1 🔁 0 💬 0 📌 0

it's here

main thing it's missing is bindings. lots of bindings

and I don't know how to bind to raw pointers and callbacks (it's not working)

github.com/Birch-san/bo...

09.12.2024 01:33 — 👍 0 🔁 0 💬 0 📌 0

Box2D 3.0.0 in WebAssembly+TypeScript starting to work

09.12.2024 01:32 — 👍 1 🔁 0 💬 1 📌 0

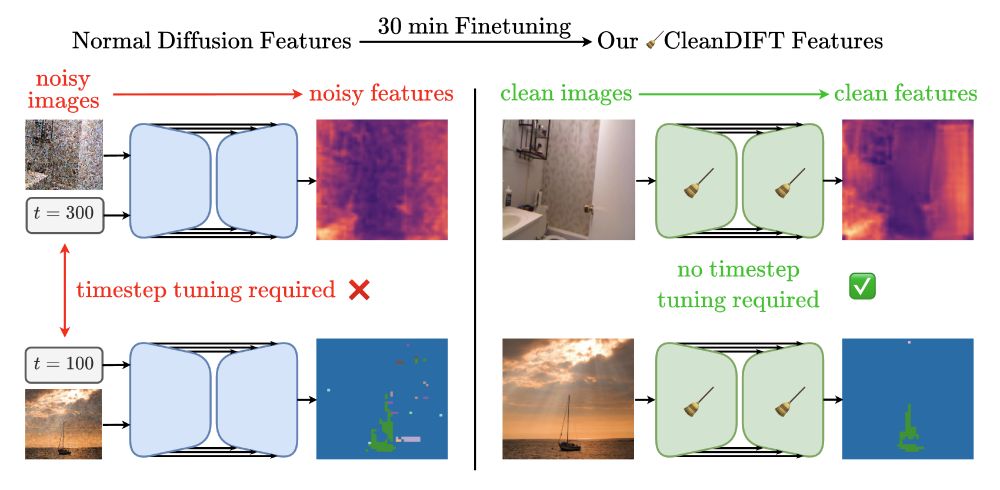

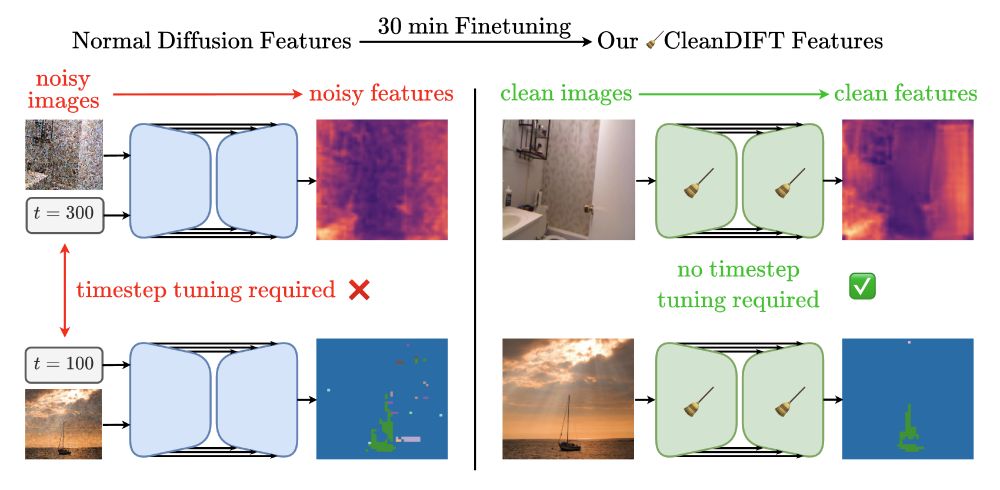

🤔 Why do we extract diffusion features from noisy images? Isn’t that destroying information?

Yes, it is - but we found a way to do better. 🚀

Here’s how we unlock better features, no noise, no hassle.

📝 Project Page: compvis.github.io/cleandift

💻 Code: github.com/CompVis/clea...

🧵👇

04.12.2024 23:31 — 👍 40 🔁 10 💬 2 📌 5

I worked on Angular apps where people would type their arrays as `any`.

not even `any[]`, just `any`.

not a shred of pride

04.12.2024 01:24 — 👍 4 🔁 0 💬 1 📌 0

torch.compile is hard with dynamic shapes, with a large number of static shapes, and with non-transformer architectures.

I measure suites of shapes, log recompiles, and check which shapes require warmup.

per-operation compile is competitive with whole-model compile.

some operations prefer the compiler disabled.

dynamic is often slow.
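
A sketch of what "measure suites of shapes" can look like: time the first call for each shape separately from the calls that follow, so shapes that need warmup stand out. The `measure_shapes` helper is hypothetical and framework-agnostic; it is not the harness used for the observations above.

```python
import time


def measure_shapes(fn, make_input, shapes, steady_iters=5):
    """Time the first call and the later calls of fn for each shape.

    A first call much slower than the steady-state calls suggests that
    shape triggered a compile or recompile and needs warmup.
    """
    results = {}
    for shape in shapes:
        x = make_input(shape)

        start = time.perf_counter()
        fn(x)
        first = time.perf_counter() - start

        start = time.perf_counter()
        for _ in range(steady_iters):
            fn(x)
        steady = (time.perf_counter() - start) / steady_iters

        results[shape] = {"first": first, "steady": steady}
    return results


# Usage with a compiled module (not executed here):
# fn = torch.compile(model)
# stats = measure_shapes(fn, lambda s: torch.randn(s), [(1, 128), (1, 256)])
# On GPU, have fn call torch.cuda.synchronize() before returning so the
# timings reflect finished work rather than kernel launches.
```

Running with `TORCH_LOGS=recompiles` set alongside this should show which of the slow first calls were actual recompiles.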

04.12.2024 01:22 — 👍 1 🔁 0 💬 0 📌 0

“that makes two of us!”

03.12.2024 22:01 — 👍 1 🔁 0 💬 0 📌 0

come to ML, where there are no tests, and dependencies are the wild west

03.12.2024 20:50 — 👍 1 🔁 0 💬 0 📌 0

A common question nowadays: Which is better, diffusion or flow matching? 🤔

Our answer: They’re two sides of the same coin. We wrote a blog post to show how diffusion models and Gaussian flow matching are equivalent. That’s great: It means you can use them interchangeably.

02.12.2024 18:45 — 👍 255 🔁 58 💬 7 📌 7

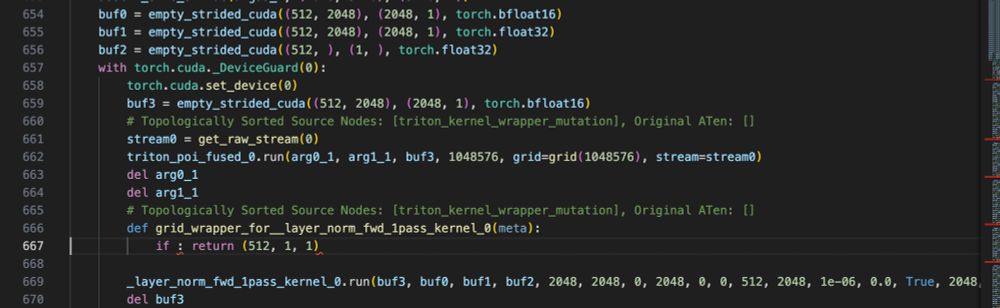

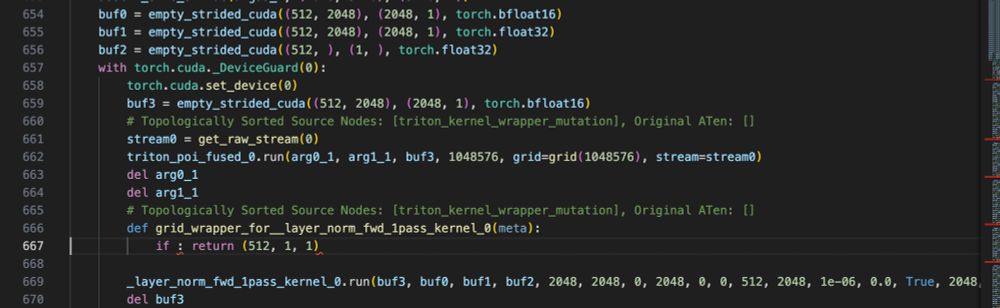

incidentally the inductor problem is here.

it could be fixed by omitting the guard altogether, or perhaps eliding the function grid wrapper entirely

github.com/pytorch/pyto...

30.11.2024 21:57 — 👍 1 🔁 0 💬 0 📌 0

if you don't do this, then inductor codegen emits invalid python

30.11.2024 21:54 — 👍 2 🔁 0 💬 1 📌 0
