@cstrauch.bsky.social

25.02.2026 12:06 — 👍 1 🔁 0 💬 1 📌 0

Hey bluesky 👋 really excited about this one! 👀

24.02.2026 09:42 — 👍 13 🔁 1 💬 0 📌 0

My first TEDx talk just came out. It's always fun to talk about your own research area to a general audience, and it's even more fun when you are lucky enough to be supported by such a platform. Happy to hear your thoughts :-)

youtu.be/UyUclyHx8d8?...

Thanks to @ruthrosenholtz.bsky.social for the thought-provoking article. I'm looking forward to reading all the other commentaries and the full reply!

26.11.2025 13:40 — 👍 0 🔁 0 💬 0 📌 0

Our commentary (with @stigchel.bsky.social) on Ruth Rosenholtz's Visual Attention in Crisis paper is now available:

doi.org/10.1017/S014...

We argue that effort must be considered when aiming to quantify capacity limits or a task's complexity.

Congrats!! 🎉

25.11.2025 19:16 — 👍 0 🔁 0 💬 1 📌 0

Celebration time 🥳 @liangyouzhang.bsky.social publishes the 1st empirical paper of his PhD!

We show that numerosity adaptation (a seemingly high-level stim property) suppresses neural responses in early visual cortex; these adaptation FX increase as we progress thru the visual processing hierarchy.

This paper is now published in Journal of Neuroscience!

www.jneurosci.org/content/earl...

Planning on running a RIFT study? In a new manuscript, we put together the RIFT know-how accumulated over the years by multiple labs (@lindadrijvers.bsky.social, @schota.bsky.social, @eelkespaak.bsky.social, with Cecília Hustá and others).

Preprint: osf.io/preprints/ps...

Big thanks to @henryjones.bsky.social for his help with the simulation analysis! And of course thanks to all other co-authors: Vicky Voet, Ed Awh, @cstrauch.bsky.social, and @stigchel.bsky.social.

Also thanks to the anonymous reviewers for their valuable input.

Highlighting a supplementary analysis we added: Prior work showed that cross-decoding asymmetries can sometimes be driven by SNR differences between conditions instead of 'true' neurocognitive effects. We simulated EEG data and showed that SNR is unlikely to account for our cross-decoding results.

24.10.2025 08:35 — 👍 2 🔁 0 💬 1 📌 0

Filled with a bunch of extra analyses, this is now accepted in The Journal of Neuroscience @sfn.org! You can have a sneak peek here: www.biorxiv.org/content/10.1...

24.10.2025 08:35 — 👍 14 🔁 2 💬 1 📌 1

🧠 Regularization, Action, and Attractors in the Dynamical “Bayesian” Brain

direct.mit.edu/jocn/article...

(still uncorrected proofs, but they should post the corrected one soon--also OA is forthcoming, for now PDF at brainandexperience.org/pdf/10.1162-...)

Thanks Sebastiaan!!

17.10.2025 13:41 — 👍 0 🔁 0 💬 0 📌 0

Thanks to everyone who contributed to the project: @henryjones.bsky.social, Stefan Van der Stigchel, and Ed Awh. Also a special thanks to @dsuplica.bsky.social for helping out with the data from Exp. 3!

14.10.2025 14:04 — 👍 2 🔁 0 💬 0 📌 0

In all experiments, we found a consistent pattern: pupil size tracked attentional breadth and WM load independently. This converges with recent EEG decoding work demonstrating a dissociation between spatial attention and working memory gating.

14.10.2025 14:04 — 👍 1 🔁 0 💬 1 📌 0

We analyzed pupil size data (pupil size reflects both spatial attention and WM load) from three experiments in which attentional breadth and working memory load were manipulated independently. For example, with dot cloud stimuli, the spatial extent of the stimuli was orthogonal to the number of clouds.

14.10.2025 14:04 — 👍 0 🔁 0 💬 1 📌 0

Spatial attention and working memory are popularly thought to be tightly coupled. Yet, distinct neural activity tracks attentional breadth and WM load.

In a new paper @jocn.bsky.social, we show that pupil size independently tracks breadth and load.

doi.org/10.1162/JOCN...

1/ Why are we so easily distracted? 🧠 In our new EEG preprint w/ Henry Jones, @monicarosenb.bsky.social and @edvogel.bsky.social we show that distractibility is associated w/ reduced neural connectivity — and can be predicted from EEG with ~80% accuracy using machine learning.

28.09.2025 19:14 — 👍 61 🔁 25 💬 1 📌 1

Very excited to announce my first paper is out in @currentbiology.bsky.social! Using EEG, we identify an item-based measure of storage in working memory that generalizes across auditory and visual items.

authors.elsevier.com/a/1ljFF3QW8S...

#PsychSciSky #neuroskyence #workingmemory

🧠 Excited to share that our new preprint is out!🧠

In this work, we investigate the dynamic competition between bottom-up saliency and top-down goals in the early visual cortex using rapid invisible frequency tagging (RIFT).

📄 Check it out on bioRxiv: www.biorxiv.org/cgi/content/...

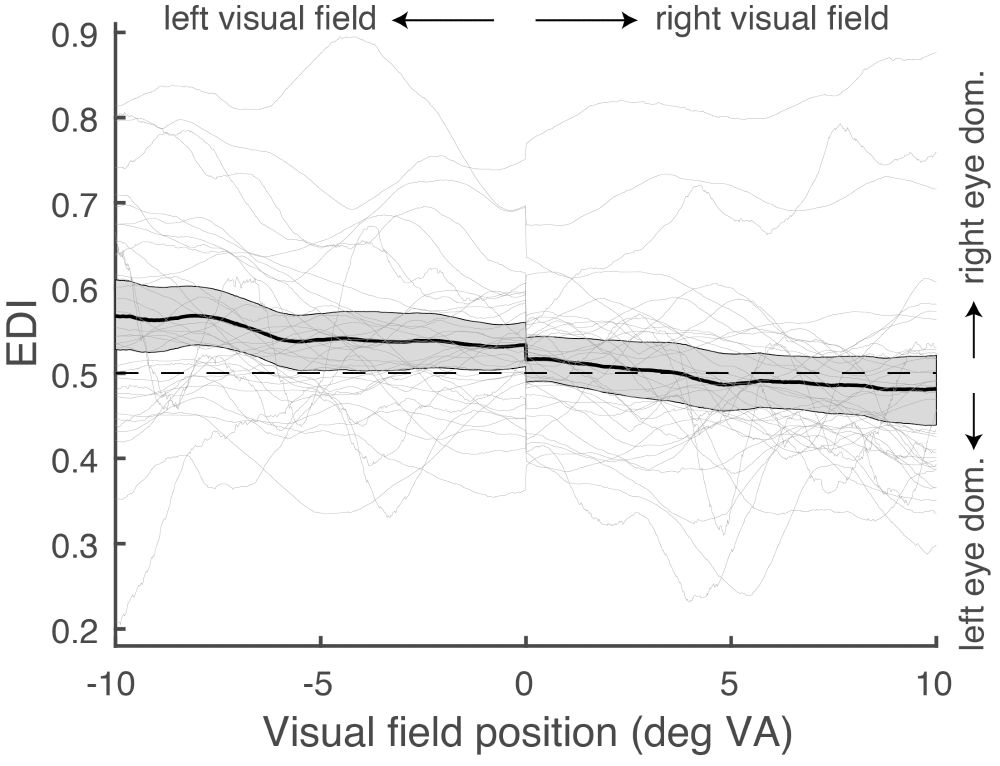

Sensory eye dominance varies over the horizontal axis of the visual field: left eye dominance for the right visual field; right eye dominance for the left visual field.

Surely you know about eye dominance. You probably don’t know it’s not a unitary phenomenon: in this paper I show that sensory eye dominance varies over the visual field. In the Discussion I propose an explanation for why this variation might exist. Curious? Read it here: doi.org/10.1167/jov....

02.07.2025 07:46 — 👍 6 🔁 3 💬 1 📌 0

Had a blast at last week's symposium! Inspiring to hear all the talks about EEG and attention.

I presented on the neural correlates of saccade preparation and covert spatial attention. Check out the preprint here: doi.org/10.1101/2025...

Last week's symposium titled "Advances in the Encephalographic Study of Attention" was a great success! It was held in the KNAW building in Amsterdam and sponsored by the NWO, and many of (Europe's) leading attention researchers assembled to discuss the latest advances in attention research using M/EEG.

30.06.2025 07:12 — 👍 25 🔁 7 💬 4 📌 3

In this new preprint, in review @elife.bsky.social, we show what processing steps make up the reaction time using single trial #EEG modelling in a contrast #decision task.

In this 🧵 I'm telling the story behind it as I think it is quite interesting and I can't write it like this in the paper...

Thrilled to share that I successfully defended my PhD dissertation on Monday June 16th!

The dissertation is available here: doi.org/10.33540/2960

New paper out at Journal of Memory and Language! We knew that individual differences in working memory predict source memory, but do they also predict simple item recognition memory (which relies on fewer attentional resources than source memory)? Our answer is: YES! (with @edvogel.bsky.social ) 1/5

18.06.2025 15:56 — 👍 9 🔁 3 💬 1 📌 0

Now published in Attention, Perception & Psychophysics @psychonomicsociety.bsky.social

Open Access link: doi.org/10.3758/s134...

Thanks to the support of the Dutch Research Council (NWO) and @knaw-nl.bsky.social , we're thrilled to announce the international symposium "Advances in the Encephalographic study of Attention"! 🧠🔍

📅 Date: June 25th & 26th

📍 Location: Trippenhuis, Amsterdam

Sensory sensitivity

We study how people react to sensory input like lights and sounds. You can help by completing a short online questionnaire. You can also sign up for an optional 3-hour lab session in Utrecht (€12/hour) involving EEG and hearing tests.

Take the survey here: tinyurl.com/457z89ta