Nitpick: Berry–Esseen :P

29.07.2025 06:11 — 👍 1 🔁 0 💬 1 📌 0

@anaymehrotra.bsky.social

PhD candidate @ Yale | Undergrad @ IITK | anaymehrotra.com Learning Theory, Missing Data, Generation

Organized with a stellar team of co-organizers – Andrew Ilyas (aifi.bsky.social), Alkis Kalavasis, and Manolis Zampetakis

28.07.2025 17:14 — 👍 1 🔁 0 💬 0 📌 0

A fantastic lineup of speakers/panelists: Ahmad Beirami (abeirami.bsky.social), Surbhi Goel (surbhigoel.bsky.social), Steve Hanneke, Chris Harshaw, Amin Karbasi (aminkarbasi.bsky.social), Samory Kpotufe, Chara Podimata (charapod.bsky.social), …

28.07.2025 17:14 — 👍 1 🔁 0 💬 1 📌 0

📣 Excited to announce the Reliable ML workshop at neuripsconf.bsky.social 2025!

How do we build trustworthy models under distribution shift, adversarial attacks, strategic behavior, and missing data?

→ Submission tracks: long (9 pg) and short (4 pg)

→ Deadline: Aug 22, 2025 (AOE)

Slides 🪧 from our language generation tutorial are now up!

Check them out at languagegeneration.github.io

Recorded sessions coming – meanwhile also check out Jon's invited talk at ICML – icml.cc/virtual/2025... !

If you are at COLT, join us for a tutorial on Language Generation on the first day!

The tutorial dives into Kleinberg and Mullainathan’s “generation in the limit” framework and the exciting space of works building on it.

🕤 9:30 AM–12:00 PM | Room C

🔗 languagegeneration.github.io

📣Join us at COLT 2025 in Lyon for a community event!

📅When: Mon, June 30 | 16:00 CET

What: Fireside chat w/ Peter Bartlett & Vitaly Feldman on communicating a research agenda, followed by mentorship roundtable to practice elevator pitches & mingle w/ COLT community!

let-all.com/colt25.html

We are organizing a Language Generation tutorial @ #COLT 2025!

Visit our website (languagegeneration.github.io/) for references and materials; content updated regularly, check back for the latest!

Coorganizers: Moses Charikar, Chirag Pabbaraju, Charlotte Peale, Grigoris Velegkas

See you in Lyon!

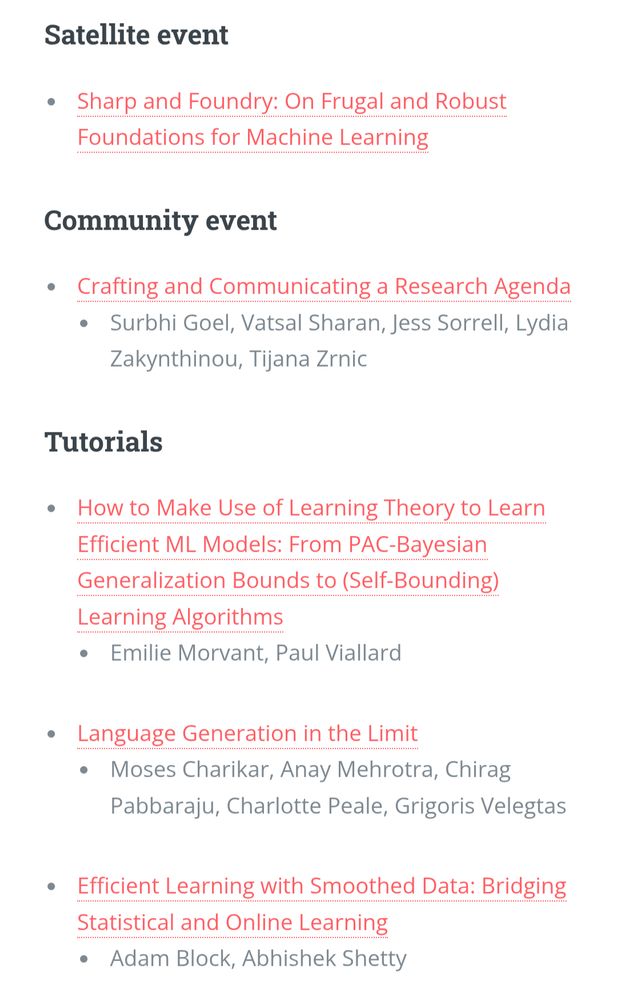

Community events and tutorials, list from the website

Workshops, list from the website

The tutorials, workshops, and community events for #COLT2025 have been announced!

Exciting topics and an impressive slate of speakers and events on June 30! The workshops have calls for contributions (⏰ May 16, 19, and 25): check them out!

learningtheory.org/colt2025/ind...

@felix-zhou-cfz.bsky.social is giving two talks about this work at @uwaterloo.ca – one in the A&C seminar (May 14th), followed by a proof overview in the student seminar (May 15th)!

09.05.2025 20:14 — 👍 1 🔁 0 💬 0 📌 0

Paper ➜ arxiv.org/abs/2504.07133

09.05.2025 20:11 — 👍 2 🔁 0 💬 0 📌 0

→ CDIZ’23: Runtime ≈ poly(d) · exp(k/ε)

→ Gaitonde–Mossel’24: Runtime ≈ poly(d) / εᵏ (+ also have optimal sample complexity)

→ Ours: Runtime ≈ poly(d) / ε² via warm start + SGD

Key insight: Recast self-selection as regression with coarse (aka rounded) labels

Self-selection arises when agents face options r_1, …, r_k and strategically choose one, e.g., to maximize their reward
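A minimal sketch of how self-selected data could be simulated. It assumes things the posts do not state: each option's reward is linear in Gaussian features plus Gaussian noise, and the agent picks the maximum. The function name and parameters are hypothetical and not taken from the paper.

```python
import random


def simulate_self_selection(weights, n_samples, noise_std=1.0, seed=0):
    """Draw (x, y) pairs where y is the largest of k noisy linear rewards.

    Assumed model (illustrative only): reward_j = <w_j, x> + noise_j and the
    agent reports max_j reward_j. The index of the chosen option is not
    returned, mirroring the unknown-index setting.
    """
    rng = random.Random(seed)
    dim = len(weights[0])
    samples = []
    for _ in range(n_samples):
        # Features for one agent, drawn from a standard Gaussian.
        x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        # One noisy linear reward per option.
        rewards = [
            sum(w_i * x_i for w_i, x_i in zip(w, x)) + rng.gauss(0.0, noise_std)
            for w in weights
        ]
        # Only the chosen (maximal) reward is observed.
        samples.append((x, max(rewards)))
    return samples
```

Calling `simulate_self_selection([[1.0, 0.0], [0.0, 1.0]], 1000)` would give 1000 observations in which the learner never sees which of the two options produced each label.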

The seminal work of Roy (1951) introduced learning with self-selection

Even identification for the unknown-index variant is nascent, starting w/ CDIZ [STOC’23]

New paper w/ @felix-zhou-cfz.bsky.social & Alkis Kalavasis!

Result: Vanilla SGD (w/ warm start) solves regression with unknown-index self-selection bias

Our method speeds up earlier algorithms by Y. Cherapanamjeri, C. Daskalakis, @aifi.bsky.social, M. Zampetakis, J. Gaitonde, & E. Mossel

A research paper?

29.04.2025 19:44 — 👍 2 🔁 0 💬 1 📌 0

This looks exciting! arxiv.org/abs/2504.160...

by Xi Chen, Shyamal Patel, and Rocco Servedio.

An exp(k^(1/3))-query adaptive algo for tolerant testing of k-juntas ("is a Boolean function on n variables close to depending on only k variables?"), via a connection to agnostic learning of conjunctions.

Growing list of contributors/researchers

Cornell/MIT: Jon Kleinberg, @sendhil.bsky.social

Duke: Fan Wei

Stanford: Moses Charikar, Chirag Pabbaraju, Charlotte Peale, Omer Reingold

U Michigan: Jiaxun Li, @vkraman.bsky.social , Ambuj Tewari

Yale: Alkis Kalavasis, Anay Mehrotra, Grigoris Velegkas

Excellent talk by Jon Kleinberg at the Institute for Advanced Studies on language generation, an exciting new area initiated by Jon and @sendhil.bsky.social, with contributors from many institutions (list below)

Link: www.youtube.com/watch?v=zlyr...

"What makes a good fisherman as opposed to other professions?"

This question can be formulated as a k-linear regression problem with self-selection bias.

Alkis, @anaymehrotra.bsky.social, and I design faster local convergence algorithms for this problem:

arxiv.org/abs/2504.07133

(1/7)

STOC Theory Fest in Prague June 23-27.

Registration now open. Early deadline is May 6.

acm-stoc.org/stoc202...

You can apply for student support. Deadline April 27.

acm-stoc.org/stoc202...

Taking a break from the submission season? Swing by the Workshop on Algorithms for Large Data (Online), WALDO 2025 🗓️ April 14–16: waldo-workshop.github.io/2025.html

Registration is free! (but necessary by April 7)

A reminder about NY Theory Day in a week! Fri Dec 6th! Talks by Amir Abboud, Sanjeev Khanna, Rotem Oshman, and Ron Rothblum! At NYU Tandon!

sites.google.com/view/nyctheo...

Registration is free, but please register for building access.

See you all there!

Missed the tag above: sendhil.bsky.social

25.11.2024 19:32 — 👍 0 🔁 0 💬 0 📌 0

There is hope: If one has negative examples (undesirable outputs), generation and breadth can be achieved simultaneously!

En route, we get near-tight universal rates of generation building on seminal works: Angluin'88 and Bousquet, Hanneke, Moran, van Handel, Yehudayoff STOC'20

(3/3)

➤ Result: For most interesting language collections, all next-token-predictors either hallucinate or mode-collapse

This ~answers an open question in the fascinating recent work by Jon Kleinberg and @m_sendhil on language generation (see simons.berkeley.edu/talks/jon-kl...)

🧵(2/3)

Screenshot of a paper with the title "On the Limits of Language Generation: Trade-Offs Between Hallucination and Mode Collapse," authored by Alkis Kalavasis (Yale), Anay Mehrotra (Yale), and Grigoris Velegkas (Yale)

We want language models that do not hallucinate

We want language models that have breadth (i.e., no mode-collapse)

Jon Kleinberg-@sendhil.bsky.social asked: Can we get both?

Alkis Kalavasis, Grigoris Velegkas, and I show this is impossible: arxiv.org/abs/2411.09642

🧵(1/3)

I wrote a Part IV postscript to my job market blog post to add what I've learned as faculty.

TL;DR: No one is out to get you. For anything not going your way, it's probably due to people being busy or bureaucracy. And there are probably people working very hard for you behind the scenes regardless.

A list of all the stats/modeling/ML/data starter packs I've seen (26+ and counting):

23.11.2024 19:57 — 👍 44 🔁 12 💬 6 📌 3