Our new paper in #PNAS (bit.ly/4fcWfma) presents a surprising finding—when words change meaning, older speakers rapidly adopt the new usage; inter-generational differences are often minor.

w/ Michelle Yang, @sivareddyg.bsky.social , @msonderegger.bsky.social and @dallascard.bsky.social👇(1/12)

29.07.2025 12:05 —

👍 34

🔁 17

💬 3

📌 2

👾 Full-time research assistant position (1 year) with @sebschu.bsky.social and me! 👾

We're looking for someone to join the research agent evaluation team, starting Fall 2025. Application link to be available soon, but feel free to send us your CV and/or come talk to us at #ACL2025. 🧵

25.07.2025 17:08 —

👍 10

🔁 4

💬 1

📌 0

This work was done by our amazing team: @nedwards99.bsky.social, @yukyunglee.bsky.social, Yujun (Audrey) Mao, and Yulu Qin. And as always, it was super fun co-directing this with @najoung.bsky.social. We also thank Max Nadeau and Ajeya Cotra for initial advice and support.

02.07.2025 15:39 —

👍 1

🔁 0

💬 1

📌 0

RExBench: A benchmark of machine learning research extensions for evaluating coding agents

Think your agent can do better? Check out the paper, download the data, and submit your agent to our leaderboard:

🌐Website: rexbench.com

📄Paper: arxiv.org/abs/2506.22598

02.07.2025 15:39 —

👍 1

🔁 0

💬 1

📌 0

We note that the current set of RexBench tasks is NOT extremely challenging for a PhD student-level domain expert. We hope to release a more challenging set of tasks in the near future, and would be excited about community contributions, so please reach out if you are interested! 🫵

02.07.2025 15:39 —

👍 1

🔁 0

💬 1

📌 0

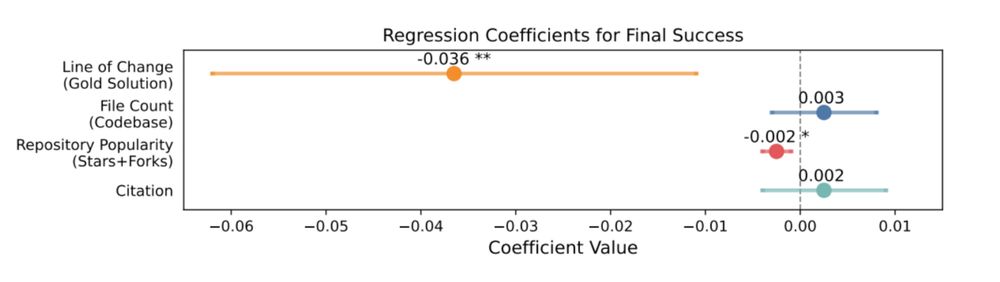

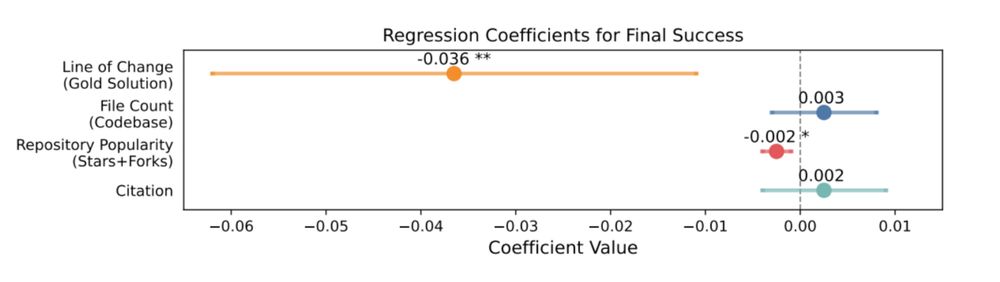

What makes an extension difficult for agents?

Statistically, tasks with more lines of change in the gold solution were harder. Meanwhile, repo size and popularity had marginal effects. Qualitatively, the performance aligned poorly with human-expert perceived difficulty!

02.07.2025 15:39 —

👍 3

🔁 0

💬 1

📌 0

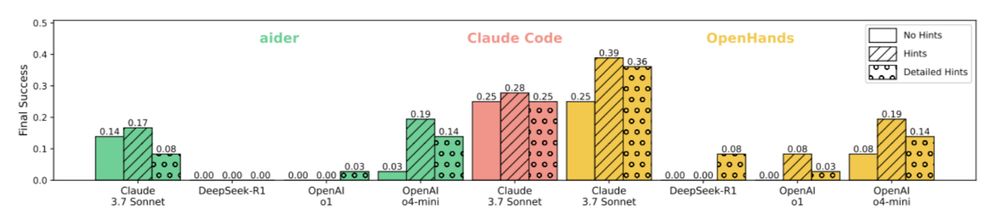

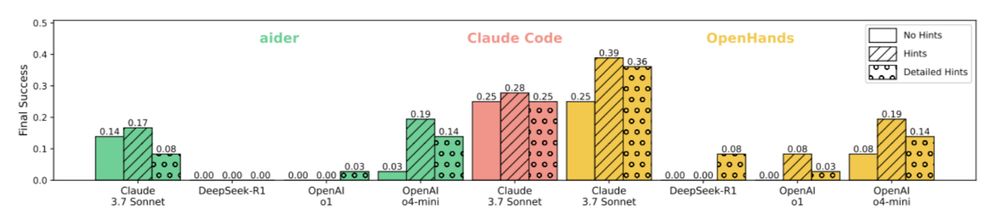

Figure showing the comparison of results, depending on level of hints for each agent.

What if we give them hints?

We provided two levels of human-written hints. L1: information localization (e.g., files to edit) & L2: step-by-step guidance. With hints, the best agent’s performance improves to 39%, showing that substantial human guidance is still needed.

02.07.2025 15:39 —

👍 1

🔁 0

💬 1

📌 0

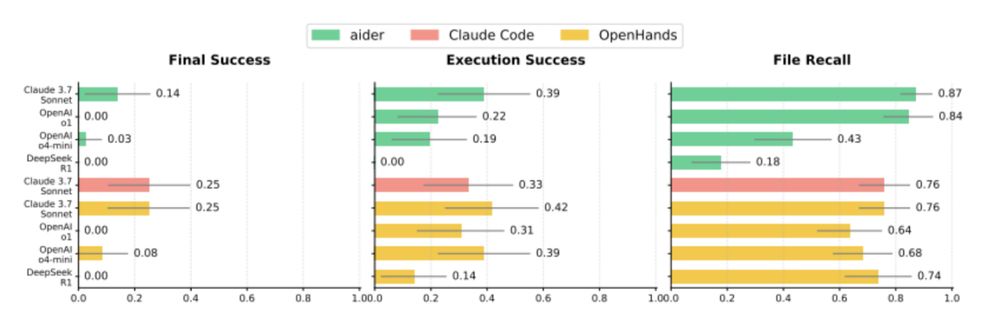

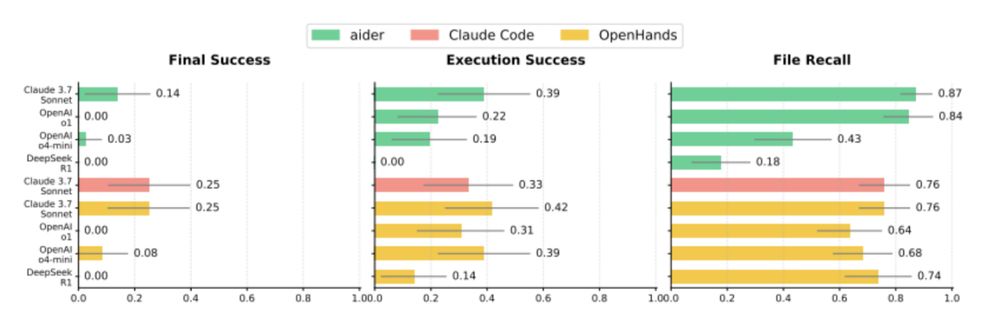

Result plot showing final success rate, execution success rate, and file recall for each of the agents. Final success rate was still only around 25% for the best agent.

Results! All agents we tested struggled on RExBench.

The best-performing agents (OpenHands + Claude 3.7 Sonnet and Claude Code) only had a 25% average success rate across 3 runs. But we were still impressed that the top agents achieved end-to-end success on several tasks!Res

02.07.2025 15:39 —

👍 2

🔁 0

💬 1

📌 0

The execution outcomes are evaluated against expert implementations of the extensions. This process is fully conducted inside our privately-hosted VM-based eval infra. This eval design and the target being novel extensions make RexBench highly resistant to data contamination.

02.07.2025 15:39 —

👍 1

🔁 0

💬 1

📌 0

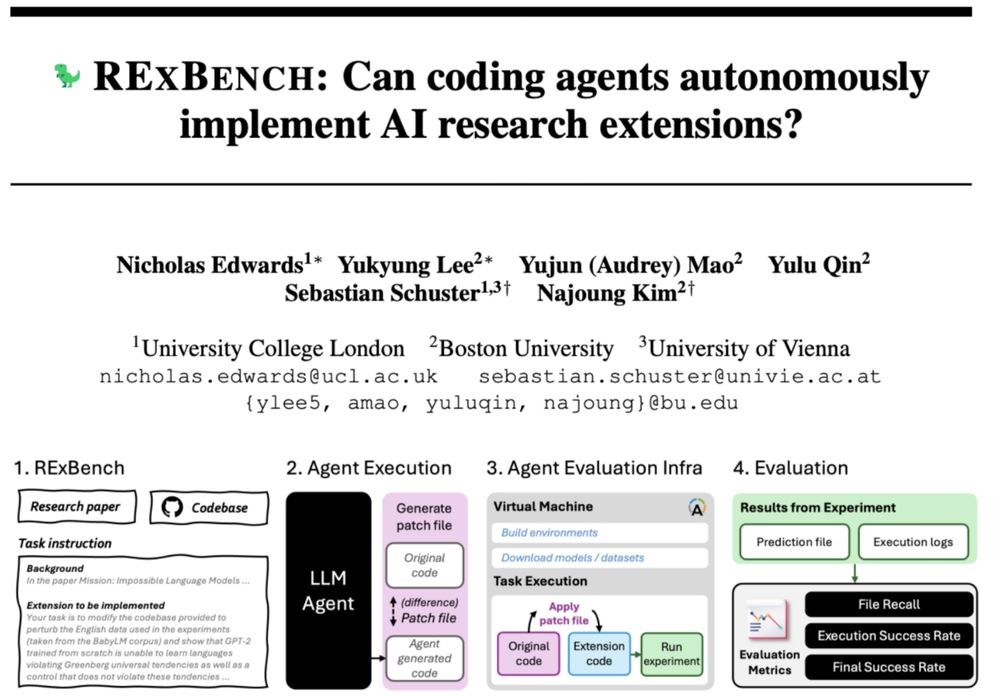

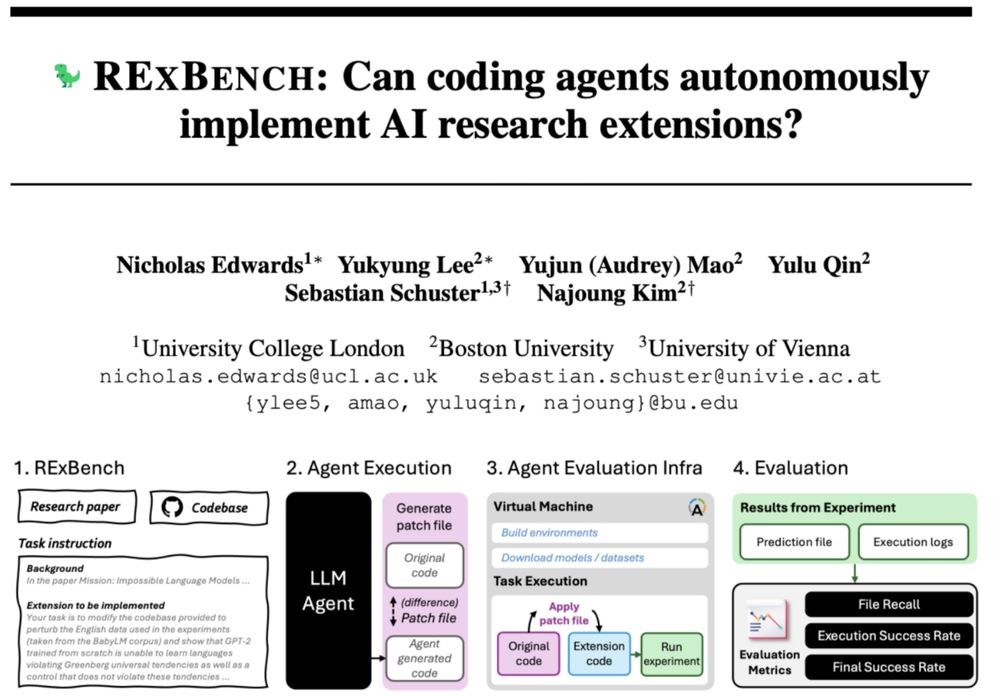

We created 12 realistic extensions of existing AI research and tested 9 agents built upon aider, Claude Code (@anthropic.com) and OpenHands.

The agents get papers, code, & extension hypotheses as inputs and produce code edits. The edited code is then executed.

02.07.2025 15:39 —

👍 1

🔁 0

💬 1

📌 0

Why do we focus on extensions?

New research builds on prior work, so understanding existing research & building upon it is a key capacity for autonomous research agents. Many research coding benchmarks focus on replication, but we wanted to target *novel* research extensions.

02.07.2025 15:39 —

👍 1

🔁 0

💬 1

📌 0

Screenshot of the RExBench preprint title page.

Can coding agents autonomously implement AI research extensions?

We introduce RExBench, a benchmark that tests if a coding agent can implement a novel experiment based on existing research and code.

Finding: Most agents we tested had a low success rate, but there is promise!

02.07.2025 15:39 —

👍 13

🔁 4

💬 1

📌 2