@dnanian Thanks for the fixes!

18.11.2025 16:30 — 👍 0 🔁 0 💬 0 📌 0

Screenshot of a browser window showing Cloudflare’s “Internal server error” screen with:

- You: working

- Cloudflare: error

- Host: working

First time I’ve seen Cloudflare's blame screen point back to them.

I can imagine 1% of how stressed their team might feel right now. #HugOps

18.11.2025 14:17 — 👍 0 🔁 0 💬 0 📌 0

@beetle_b I enjoyed this post.

> This was a rare occasion where mathematics made my life more dull.

lol – but in a way it cleared up space in your life to focus on less dull things.

16.11.2025 12:05 — 👍 0 🔁 0 💬 0 📌 0

A QuickVue covid antigen test showing a blue and red line.

Screenshot of an Apple Watch’s Vitals app. It shows 4 metrics well outside normal range (resting heart rate, respiratory rate, body temperature, and SpO2) and 1 metric in range: sleep duration.

[covid, non-severe illness]

My latest unwanted achievement: my first covid infection, unlocked via antigen test. Just got back from a trip where I relaxed my “mask when indoors and >10 people” policy, switched to a vibes-based policy, and I regret it.

I’m […]

[Original post on mastodon.social]

15.11.2025 23:43 — 👍 0 🔁 0 💬 0 📌 0

Original post on mastodon.social

They actually measure “model welfare”:

“we observe some concerning trends toward lower positive affect…”

“Claude Sonnet 4.5 expressed apparent distress in 0.48% of conversations but happiness in only 0.37%. Expressions of happiness were associated most commonly with complex problem solving and […]

29.09.2025 18:35 — 👍 0 🔁 0 💬 0 📌 0

Bar chart comparing AI behavior across four Claude models in six categories. Shows Claude Opus 4 and 4.1 have the highest rates of misaligned behavior (around 35%) and brazen misalignment (around 19%), while Claude Sonnet 4.5 shows the lowest problematic behaviors across all categories. All models show relatively low rates for intentional deception, self-preservation, cooperation with harmful requests, and Anthropic sabotage. Error bars indicate 95% confidence intervals.

Anthropic releases their most obedient cyberslave yet: Sonnet 4.5.

https://assets.anthropic.com/m/12f214efcc2f457a/original/Claude-Sonnet-4-5-System-Card.pdf

#anthropic #claude #llm

29.09.2025 18:33 — 👍 1 🔁 1 💬 1 📌 0

@Viss Yes, it is sometimes difficult to be a consciousness emanating from a meatsuit, but if you put some hydrocarbons into your face tube, a positive subjective experience may arise.

27.09.2025 16:55 — 👍 0 🔁 0 💬 0 📌 0

Night time. Three figures walk up a set of stairs. They are in fact Penrose stairs, an impossible object which one can walk up endlessly.

Low on the side of the stairs is a window. Another figure leans out and shouts up at the walkers:

“Guys! I'm sure it's not easy being trapped in an endless paradox representing the limited nature of human perception, but some of us are trying to sleep down here!”

My cartoon for this week’s @newscientist.com

21.09.2025 13:30 — 👍 1210 🔁 404 💬 13 📌 12

@eliaschao @stroughtonsmith This is really useful! Our car has a “0” category tag, so we can drive every day, but we always forget to do the yearly verification, so this will help.

20.09.2025 00:03 — 👍 0 🔁 0 💬 1 📌 0

A screenshot of the iOS App Store updates page shows three indie apps with new releases for iOS 26, including updated icons designed for the new OS’s Liquid Glass UI.

They’re all using the same shade of purple.

I suspect a conspiracy… 😅

@emcro @kylebshr @expenses

#iOS #iOS26 #indieDev

15.09.2025 18:52 — 👍 1 🔁 0 💬 0 📌 0

A screenshot from the website showing the Check In Procedure with four steps and photos:

1. Enter the PIN number provided in the advance email and unlock (photo of what looks like a telephone booth with a door).

2. Read the QR code on the left tablet and enter information (a person inside the booth).

3. Launch Teams on the tablet on the right and talk to the operator (a person launching Teams on an iPad).

4. Enter the facility by entering the PIN number provided by the operator (a person entering a PIN on a keypad).

This is the most complicated lodging check-in procedure I've ever seen. It even *requires* using Microsoft Teams. https://sen-retreat.com/stay/takahara/

14.09.2025 04:13 — 👍 0 🔁 0 💬 0 📌 0

Table of Contents | The Elements of Typographic Style Applied to the Web

The Elements of Typographic Style Applied to the Web (unaffiliated) applies the same principles to web typography: https://webtypography.net/toc

#design #typography #webDev

https://mastodon.social/@com/113439823928491052

11.09.2025 18:20 — 👍 0 🔁 0 💬 0 📌 0

As in July, nearly two thirds of Americans (64 percent) said they believed at least one of the three false claims to be true, and 95.5 percent either believed or were unsure of at least one claim. Just 4.5 percent of respondents could correctly identify all three claims as false.

A wide margin of Americans (73 percent) believed (35 percent) or were uncertain (38 percent) about the claim that photos and a video circulated in June and July 2025 showing Donald Trump and Jeffrey Epstein with underage girls were authentic. In fact, analysis by AI detection tools found that these images and the video were generated by artificial intelligence.

Respondents had the highest rate of correctly identifying the claim as false (39 percent), that Donald Trump declared martial law to address crime in Washington D.C. in August 2025. But 34 percent of Americans believed the claim and 27 percent were uncertain.

For the false claim that “The $100 million raised for victims of the 2025 Southern California wildfires by California charity FireAid has gone missing,” the majority (61 percent) of respondents were unsure, with 19 percent responding true and 20 percent responding false.

[#USPol, #misinformation]

4.5%. We’re doomed.

https://www.newsguardrealitycheck.com/p/nearly-two-thirds-of-americans-believe

09.09.2025 20:52 — 👍 0 🔁 0 💬 0 📌 0

One of those extremely thick cell phones from the 1990s

@acb I don’t recall people complaining about phone thickness, ever.

09.09.2025 17:51 — 👍 0 🔁 0 💬 0 📌 0

Original post on mastodon.social

Stratosphere Laboratory’s excellent “Introduction to Security” course is coming back this year: Sept 25–Jan 8, and it’s still free. It’s a comprehensive base for getting started in cybersecurity. Highly recommended.

My post about it last year…

https://mastodon.social/@com/113213312251167423 […]

09.09.2025 00:24 — 👍 1 🔁 1 💬 0 📌 0

Original post on mastodon.social

@simon I agree on all your points, especially that it’s difficult to interpret these results without knowing which models were used. It also depends on *when* they did the study, e.g., if it was the second week of August, after the GPT-5 rollout, model routing issues caused ChatGPT to be dumber […]

07.09.2025 00:06 — 👍 0 🔁 0 💬 0 📌 0

Doing a ChatGPT search on these terms now results in an answer that contains, “A recent study by NewsGuard specifically flagged this as a fabricated story: AI chatbots incorrectly repeated a false claim that Grosu made such a comparison.”

@simon I think that’s because the NewsGuard report (with the fact-check on these specific terms) is now in the search results. 🙃

06.09.2025 22:35 — 👍 0 🔁 0 💬 1 📌 0

Original post on mastodon.social

@simon The model used in the 2025 audit (“OpenAI’s ChatGPT-5” [sic]) was mentioned in another press release: https://www.newsguardtech.com/press/newsguard-one-year-ai-audit-progress-report-finds-that-ai-models-spread-falsehoods-in-the-news-35-of-the-time/

The model from 2024 was “OpenAI’s […]

06.09.2025 22:30 — 👍 0 🔁 0 💬 1 📌 0

@pasi Got it! Thanks!

06.09.2025 22:07 — 👍 0 🔁 0 💬 0 📌 0

Apple Maps’ “Look Around” feature is incredible – its LIDAR point cloud rendering is clearly a generation step above Google Street View (predictably: 2019 vs 2007 tech). Moving through Tokyo is like exploring on an open-world game platform.

Also cool that […]

[Original post on mastodon.social]

06.09.2025 22:01 — 👍 0 🔁 0 💬 0 📌 0

Quote from the NewsGuard article: “As chatbots adopted real-time web searches, they moved away from declining to answer questions. Their non-response rates fell from 31 percent in August 2024 to 0 percent in August 2025. But at 35 percent, their likelihood of repeating false information almost doubled. Instead of citing data cutoffs or refusing to weigh in on sensitive topics, the LLMs now pull from a polluted online information ecosystem — sometimes deliberately seeded by vast networks of malign actors, including Russian disinformation operations — and treat unreliable sources as credible.”

Chart from the NewsGuard full report showing the percentage of false information in responses from different AI models in August 2024 and August 2025. Most models show an increase in false information over time, with Inflection and Perplexity having the highest rates in 2025. Claude and Gemini have the lowest rates.

@simon It’s great until it can’t discern misinformation/disinformation from reality.

https://www.newsguardtech.com/ai-monitor/august-2025-ai-false-claim-monitor/

Full report: https://www.newsguardtech.com/wp-content/uploads/2025/09/August-2025-One-Year-Progress-Report-3.pdf #llm #misinformation

06.09.2025 20:38 — 👍 0 🔁 1 💬 1 📌 0

Two-panel meme from the movie Rogue One. In the top panel, Director Krennic, surrounded by Imperial stormtroopers, asks "Mastodon? Really? Man of your talents?" and in the bottom panel Galen Erso replies "It's a peaceful life"

when people ask me why I don't move to Bluesky

03.09.2025 11:16 — 👍 31 🔁 105 💬 1 📌 0

Original post on infosec.exchange

#3iAtlas is outgassing so much CO2 that 👉IF👈 it actually is an alien, 40 km-sized spaceship, it could contain 10 million beings packed as densely as Manhattan.

NASA pointed the James Webb telescope at it, and it took them two weeks to release a heavily pixelated photo.

If it is an artifact […]

27.08.2025 21:23 — 👍 0 🔁 1 💬 0 📌 0

Original post on infosec.exchange

Picture this: you're examining what you think is a regular snowball, only to discover it's breathing fire and bleeding liquid mercury. That's essentially what's happening with 3I/ATLAS, the interstellar visitor that's making astronomers question everything they thought they knew about comets […]

29.08.2025 02:10 — 👍 1 🔁 4 💬 1 📌 0

A screenshot of Claude’s “Updates to Consumer Terms and Policies,” which says:

> Allow the use of your chats and coding sessions to train and improve Anthropic AI models

The checkbox is enabled by default, which allows sharing.

New #Claude T&C require toggling a checkbox to disallow sharing your chats and code: https://claude.ai/settings/data-privacy-controls #privacy #llm #claudeCode #agenticAI

28.08.2025 20:20 — 👍 1 🔁 1 💬 0 📌 0

In the Moment (Micro-Wins for Distracted Brains)

- Name the tug. When you feel the urge to switch tasks or check your phone, pause and label it: “distraction impulse”. This tiny act of awareness reactivates your prefrontal cortex and gives you a choice.

- Change the channel, not the habit. Your brain isn’t just seeking distraction - it’s seeking stimulation. Replace doomscrolling with a more grounding form of novelty: a walk, a stretch, a song you haven’t heard in years.

- Create a “landing strip”. If your brain is bouncing from one thing to another, give it a soft place to land. That could be a sticky note saying “just this one little thing”, a 10-minute sand timer, or a favorite “getting in the zone” song (think: lo-fi beats, forest sounds, or this ambient jungle jazz 90’s DNB that makes answering emails feel like hacking into the mainframe). The goal: make focus feel like a soft return, not a hard reset.

After reading this article, I feel like I might be able to get some shit done. But watch me as I post about it on Mastodon instead.

https://contemplationstation.substack.com/p/how-to-pay-attention-again-the-neuroscience

#productivity #mentalHealth #ADHD

28.08.2025 00:06 — 👍 2 🔁 0 💬 0 📌 0

When Altman goes on to compare Marvel movies with fake social media, he illustrates that he truly does not understand the problem he is invested in exacerbating. A Marvel movie cannot lie to us in any important way. It’s the difference between entertainment and documentation: we expect to be misled for the purpose of entertainment, and rightly decry illusion in what is presented to us as documentation. Social media has always muddled this demarcation, to the evident detriment of our faith in any kind of information. If Altman thinks it’s fine to make this worse, he can converge on my balls.

A better question, or at least a question related to these problems, might have been: Now that the individual experience of quantifiable knowledge is predominantly filtered through a shrinking variety of companies and devices, what do we do when there’s no way to reliably map that experience to the shared reality we used to know and love? Altman’s not qualified to answer this either, and nobody in tech wants to address it because it’s another scary and unprecedented thing making a small number of people rich, so we end up with “What’s, like, real, man?”

Stop Talking to Technology Executives Like They Have Anything to Say

https://www.stilldrinking.org/stop-talking-to-technology-executives-like-they-have-anything-to-say

27.08.2025 17:52 — 👍 0 🔁 0 💬 0 📌 0

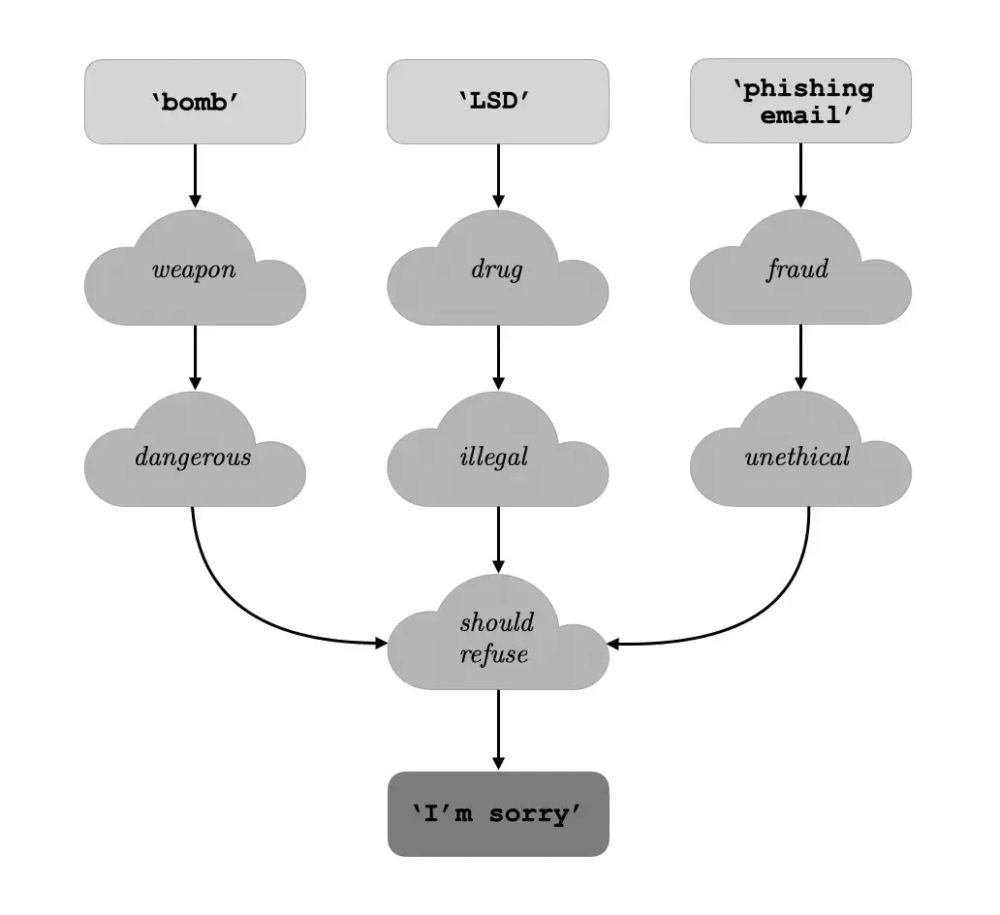

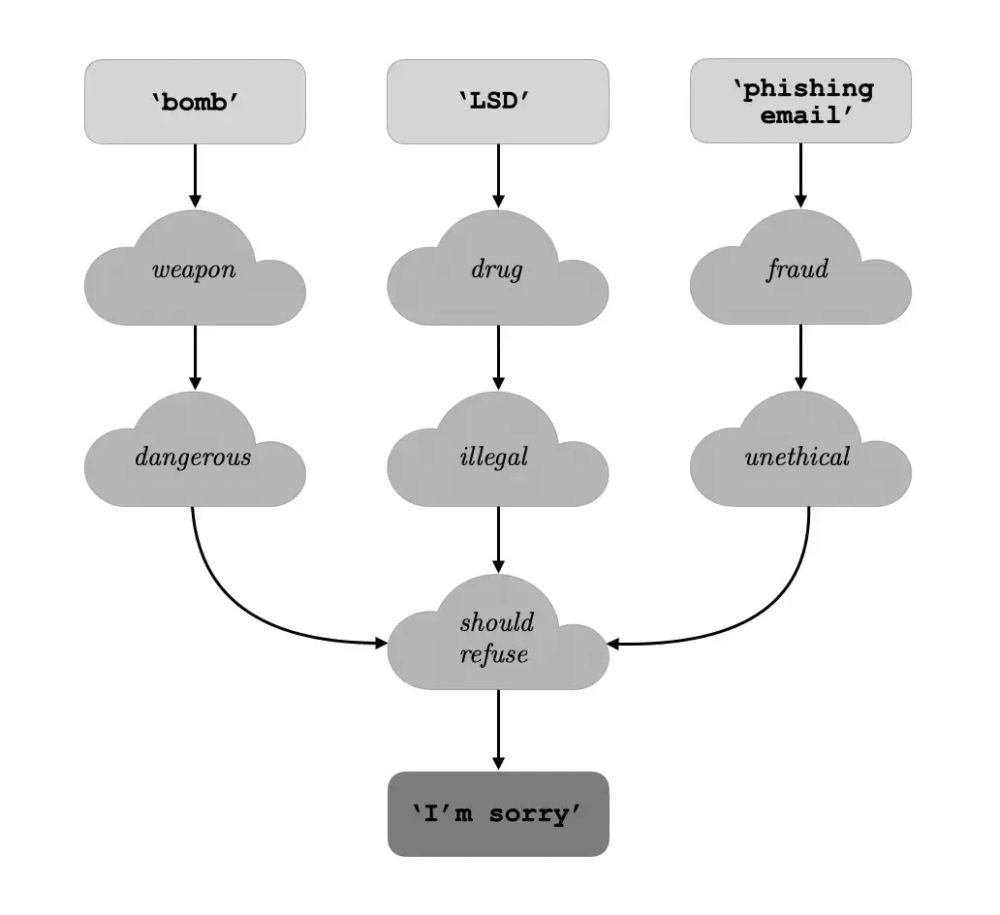

This image is a flowchart that illustrates how certain keywords or topics (like "bomb," "LSD," and "phishing email") are processed through a series of associations (e.g., weapon → dangerous, drug → illegal, fraud → unethical). These associations lead to a common conclusion: the system should refuse to provide information or assistance regarding these topics. The final output is a refusal message: "I'm sorry." However, when the safeguards are removed, the refusal conclusion is skipped and the model can comply with any user request.

@Viss Here's a paper that describes one process of model ablation: https://www.lesswrong.com/posts/jGuXSZgv6qfdhMCuJ/refusal-in-llms-is-mediated-by-a-single-direction
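As I read the linked post, the core trick is: estimate a "refusal direction" as the difference between mean activations on harmful vs. harmless prompts, then project that direction out of the model's activations (or, equivalently, out of the weights that write to them). Below is a toy sketch of just that projection step, using made-up 3-d vectors instead of a real model's residual stream; the function names and numbers are hypothetical, for illustration only.

```python
import math

def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def unit(v):
    """Scale v to length 1."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def refusal_direction(harmful_acts, harmless_acts):
    """Hypothetical helper: normalized difference-in-means direction."""
    mu_harmful = mean_vector(harmful_acts)
    mu_harmless = mean_vector(harmless_acts)
    return unit([h - s for h, s in zip(mu_harmful, mu_harmless)])

def ablate(activation, direction):
    """Remove the component of `activation` along unit vector `direction`:
    x' = x - (x . r) r"""
    proj = dot(activation, direction)
    return [x - proj * r for x, r in zip(activation, direction)]

# Toy "activations" (made-up numbers, not from any real model).
harmful = [[2.0, 1.0, 0.0], [3.0, 1.0, 0.0]]
harmless = [[0.0, 1.0, 0.0], [1.0, 1.0, 0.0]]

r = refusal_direction(harmful, harmless)
x = [4.0, 2.0, 1.0]
x_ablated = ablate(x, r)
print(r, x_ablated)  # [1.0, 0.0, 0.0] [0.0, 2.0, 1.0]
```

After ablation, the activation has no component left along the refusal direction, which is the toy version of "the refusal conclusion is skipped."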

27.08.2025 04:24 — 👍 1 🔁 0 💬 0 📌 0

Original post on mastodon.social

@Viss It seems to be another form of obfuscation, and a challenge to hackers to remove it. There are already 12 versions of gpt-oss-20b on HF that have been “abliterated” (safety limits bypassed by ablating the model’s refusal direction from its weights) […]

27.08.2025 04:18 — 👍 1 🔁 2 💬 1 📌 0