Used cars are cheap because of new cars. Day-old bread is half price because of fresh bread.

This has been a housing policy post.

21.07.2025 04:27 — 👍 198 🔁 33 💬 7 📌 0

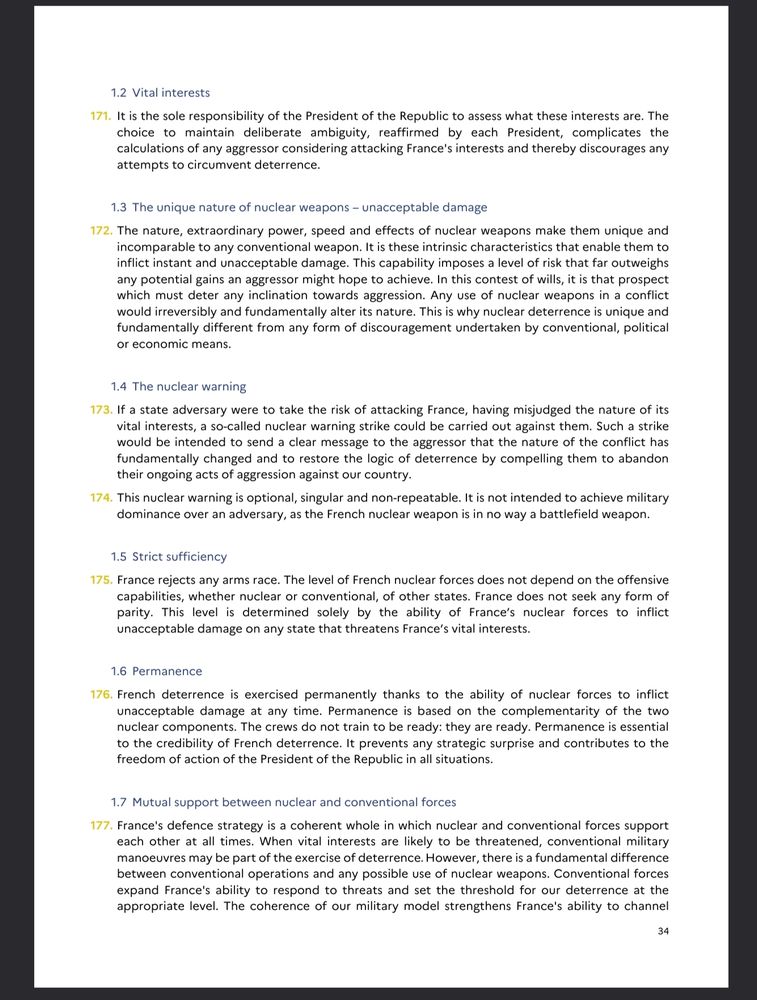

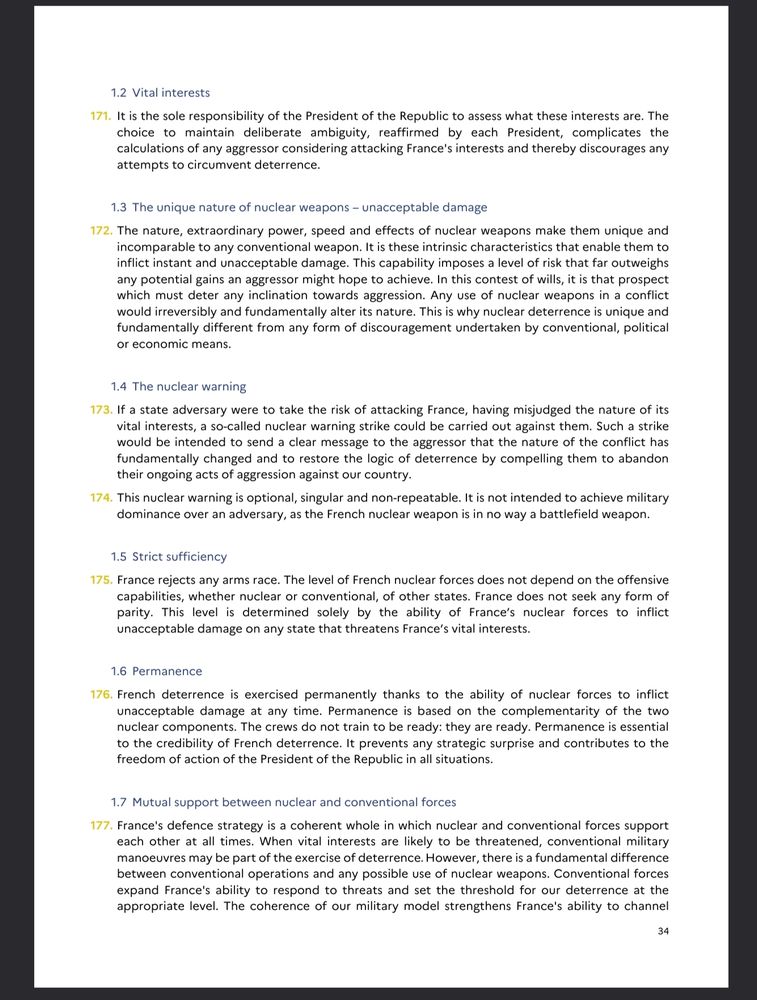

Screenshot from the French National Strategic Review 2025, page 34: "1.2 Vital interests

171. It is the sole responsibility of the President of the Republic to assess what these interests are. The choice to maintain deliberate ambiguity, reaffirmed by each President, complicates the calculations of any aggressor considering attacking France's interests and thereby discourages any attempts to circumvent deterrence.

1.3 The unique nature of nuclear weapons – unacceptable damage

172. The nature, extraordinary power, speed and effects of nuclear weapons make them unique and incomparable to any conventional weapon. It is these intrinsic characteristics that enable them to inflict instant and unacceptable damage. This capability imposes a level of risk that far outweighs any potential gains an aggressor might hope to achieve. In this contest of wills, it is that prospect which must deter any inclination towards aggression. Any use of nuclear weapons in a conflict would irreversibly and fundamentally alter its nature. This is why nuclear deterrence is unique and fundamentally different from any form of discouragement undertaken by conventional, political or economic means.

1.4 The nuclear warning

173. If a state adversary were to take the risk of attacking France, having misjudged the nature of its vital interests, a so-called nuclear warning strike could be carried out against them. Such a strike would be intended to send a clear message to the aggressor that the nature of the conflict has fundamentally changed and to restore the logic of deterrence by compelling them to abandon their ongoing acts of aggression against our country.

174. This nuclear warning is optional, singular and non-repeatable. It is not intended to achieve military dominance over an adversary, as the French nuclear weapon is in no way a battlefield weapon.

1.5 Strict sufficiency

175. France rejects any arms race. The level of French nuclear forces does not depend on the offensive capabilities, whe…

Say what you will about Macron's approach to Europe but the French philosophy on nuclear deterrence kicks ass

📑 www.sgdsn.gouv.fr/files/files/...

15.07.2025 17:42 — 👍 7 🔁 1 💬 1 📌 0

The future of AI governance may hinge on our ability to develop trusted and effective ways to make credible claims about AI systems. This new report expands our understanding of the verification challenge and maps out compelling areas for further work. ⬇️

14.07.2025 13:45 — 👍 16 🔁 3 💬 0 📌 0

I've normally seen this kind of thing coded as one of the non-extinction outcomes that are possible within the broader existential risk definition that I think Bostrom introduced. For example, AIs enforcing ideological homogeneity.

13.07.2025 22:07 — 👍 3 🔁 0 💬 0 📌 0

I can only speak by reference to some of his written work. What I've read so far has been worth the read.

13.07.2025 04:47 — 👍 1 🔁 0 💬 0 📌 0

Epoch AI

Epoch AI is a research institute investigating key trends and questions that will shape the trajectory and governance of Artificial Intelligence.

You might be interested in work from Anton Korinek and his coauthors. I also recommend checking out Epoch (epoch.ai). And if you're going to have accelerationist stuff, you might want to include Vitalik Buterin on defensive accelerationism: vitalik.eth.limo/general/2023...

12.07.2025 22:31 — 👍 2 🔁 0 💬 1 📌 0

If you like the steel drum, check out the handpan too if you haven't already.

12.07.2025 22:13 — 👍 0 🔁 0 💬 0 📌 0

16/ Guillem Bas, @nickacaputo.bsky.social, Julia C Morse, Janvi Ahuja, Isabella Duan, Janet Egan, Ben Bucknall, @briannarosen.bsky.social, Renan Araujo, Vincent Boulanin, Ranjit Lall, @fbarez.bsky.social, Sanaa Alvira, Corin Katzke, Ahmad Atamli, Amro Awad /end🧵

07.07.2025 22:12 — 👍 2 🔁 0 💬 0 📌 0

15/ Thanks to @aigioxfordmartin.bsky.social for backing this project and all my coauthors: Robert Trager, @ankareuel.bsky.social, @davidmanheim.alter.org.il, @milesbrundage.bsky.social, Onni Aarne, @aaronscher.bsky.social, Yanliang Pan, Jenny Xiao, Kristy Loke, Sumaya Nur Adan

07.07.2025 22:12 — 👍 2 🔁 0 💬 1 📌 0

13/ Those who lived through or studied the Cold War may remember President Reagan reiterating the Russian proverb “Trust, but verify.” Just as it was with 1980s nuclear arms control, our ability to build new verification systems may be crucial for preserving peace today.

07.07.2025 22:12 — 👍 0 🔁 0 💬 1 📌 0

12/ If we build these more serious verification systems, we would be laying the foundation for international agreements over AI—which might end up being the most important international deals in the history of humanity.

07.07.2025 22:12 — 👍 0 🔁 0 💬 1 📌 0

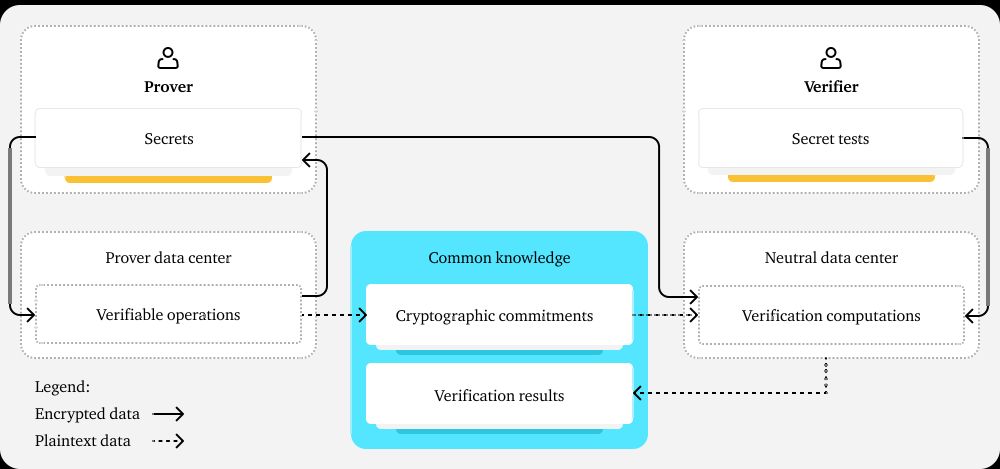

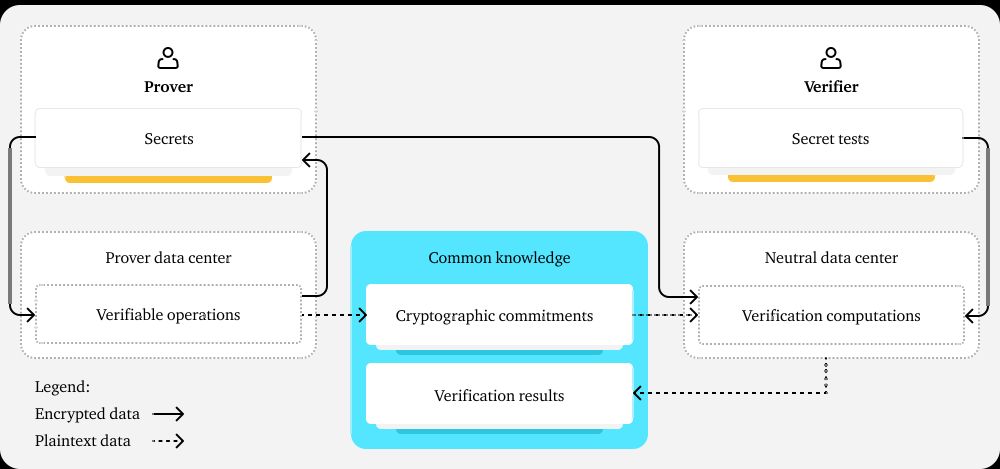

A schematic summary of the concept of verifiable confidential computing. A Prover is able to demonstrate something to the Verifier without either of them revealing to the other the entirety of their secrets (e.g., AI model for the Prover and sensitive test content for the Verifier). They arrange for cryptographic commitments to be made about the activities of the Prover. These can then later be used to ensure that the same data and code are provided in a neutral datacenter where verification computations can take place. These verification computations can be privacy-preserving and thus protect everyone's data while demonstrating that these digital objects follow rules.
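For readers who want a feel for the "cryptographic commitments" step, here is a minimal sketch of a salted hash commitment in Python. It is an illustration only, not the scheme from the report: the function names `commit` and `matches` and the placeholder model bytes are hypothetical, and a real deployment would likely rely on hardware attestation and more careful protocols.

```python
import hashlib
import hmac
import secrets


def commit(artifact: bytes) -> tuple[str, bytes]:
    """Return (commitment, nonce) for an artifact such as model weights or test code."""
    # A random fixed-length nonce keeps the commitment from leaking
    # anything about the artifact before it is revealed.
    nonce = secrets.token_bytes(32)
    digest = hashlib.sha256(nonce + artifact).hexdigest()
    return digest, nonce


def matches(commitment: str, nonce: bytes, artifact: bytes) -> bool:
    """Check that the artifact handed over later is the one committed to earlier."""
    recomputed = hashlib.sha256(nonce + artifact).hexdigest()
    return hmac.compare_digest(recomputed, commitment)


# Hypothetical example: the Prover commits to a model up front...
model_weights = b"hypothetical model bytes"
commitment, nonce = commit(model_weights)

# ...and the neutral datacenter later checks what was actually delivered.
same_ok = matches(commitment, nonce, model_weights)
swapped_ok = matches(commitment, nonce, b"a different model")
```

The Prover can publish the commitment without revealing the model. Only the neutral environment, which receives the nonce and the artifact, can confirm that the two line up.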

11/ It seems possible to create similar verification exchanges that preserve security to an extreme degree, but we’ll need political action to get there. Our report goes into this in some detail. These setups might take about 1-3 years of intense effort to research and build.

07.07.2025 22:12 — 👍 0 🔁 0 💬 1 📌 0

10/ However, even if we scale this up, the most important secrets (think national security info, military AI models, or the Coca-Cola formula) are probably too sensitive to govern via just confidential computing. Further work is needed to safeguard these.

07.07.2025 22:12 — 👍 0 🔁 0 💬 1 📌 0

9/ Groups that use AI (including corporations and countries) will likewise place more trust in AI services that they can be sure are secure and appropriately governed. They may also request—or demand—this kind of thing in the future.

07.07.2025 22:12 — 👍 0 🔁 0 💬 1 📌 0

8/ This setup allows 1) users to feel safe and confident about services they pay for, 2) companies to expand their offerings to more sensitive domains, and 3) governments to check that rules are followed.

07.07.2025 22:12 — 👍 0 🔁 0 💬 1 📌 0

7/ An AI provider can prove that they abide by rules by having a set of third parties (e.g., AI testing companies and AI Safety / Security Institutes) securely test their models and systems. A user can trust a group of third parties a *lot* more than they trust the AI provider.

07.07.2025 22:12 — 👍 0 🔁 0 💬 1 📌 0

6/ Confidential computing might be reliable enough for a company to make pretty strong claims about what they are *doing* (e.g., serving you inference with a given model and compute budget) and what they are *not doing* (e.g., copying your data).

07.07.2025 22:12 — 👍 0 🔁 0 💬 1 📌 0

5/ Some of these technologies can be deployed *today*, such as confidential computing, which is available in recent hardware such as NVIDIA’s Hopper or Blackwell chips. These are good enough to get us started.

07.07.2025 22:12 — 👍 0 🔁 0 💬 1 📌 0

4/ Luckily, decades of work have gone into privacy-preserving computational methods. Basically, they are tricks with hardware and cryptography that allow one actor (Prover) to prove something to another actor (Verifier) without revealing all the underlying data.

07.07.2025 22:12 — 👍 0 🔁 0 💬 1 📌 0

3/ But countries care about their security, so we can’t expect them to simply hand over all the information needed to prove that they’re following governance rules.

07.07.2025 22:12 — 👍 0 🔁 0 💬 1 📌 0

2/ International AI governance is desirable (for peace, security, and good lives), but it faces verification challenges because there’s no easy way to understand what someone else is doing on their computer without violating their security.

07.07.2025 22:12 — 👍 0 🔁 0 💬 1 📌 0

Cover of the report "Verification for International AI Governance" published by the Oxford Martin AI Governance Initiative. Authors are: Ben Harack, Robert F. Trager, Anka Reuel, David Manheim, Miles Brundage, Onni Aarne, Aaron Scher, Yanliang Pan, Jenny Xiao, Kristy Loke, Sumaya Nur Adan, Guillem Bas, Nicholas A. Caputo, Julia C. Morse, Janvi Ahuja, Isabella Duan, Janet Egan, Ben Bucknall, Brianna Rosen, Renan Araujo, Vincent Boulanin, Ranjit Lall, Fazl Barez, Sanaa Alvira, Corin Katzke, Ahmad Atamli, Amro Awad

Governing AI requires international agreements, but cooperation can be risky if there’s no basis for trust.

Our new report looks at how to verify compliance with AI agreements without sacrificing national security.

This is neither impossible nor trivial.🧵

1/

07.07.2025 22:12 — 👍 7 🔁 1 💬 2 📌 1

Fascinating and important.

04.07.2025 22:39 — 👍 1 🔁 0 💬 0 📌 0

Cosmos by Carl Sagan was going to be my answer, but now I realize Star Trek should be in there too!

16.06.2025 05:55 — 👍 3 🔁 0 💬 1 📌 0

Always a challenging needle to thread, since you want them to be successful (to continue to exist) without being overrun!

14.06.2025 09:49 — 👍 0 🔁 0 💬 0 📌 0

The conditions that have led to what’s happening in the US today exist in democracies around the world.

They are an inevitable outcome of our collective failure to adapt to fundamental changes in the information ecosystem on which our democracies were originally built.

07.06.2025 20:39 — 👍 5775 🔁 1863 💬 167 📌 272

🔊 Listen Now: A whistleblower's disclosure details how DOGE may have taken sensitive labor data

All Things Considered on NPR One | 7:13

Happy Friday everyone. Thanks for reading NPR.org this week.

Wanted to take a second to also remind you: I interviewed whistleblower Dan Berulis to accompany my lengthy written story on NLRB. Hear from him in his own words:

one.npr.org/i/nx-s1-5355...

18.04.2025 11:33 — 👍 501 🔁 218 💬 15 📌 19

America’s Finest News Source. A @globaltetrahedron.bsky.social subsidiary.

Get the paper delivered to your door: membership.theonion.com

🏳️‍🌈 just a simple country AI ethicist | Assistant Professor, Western University 🇨🇦 | he/his/him | no all-male panels | #BLM | 🏳️‍⚧️ ally | views my own

https://starkcontrast.co/

https://starlingcentre.ca/

Through August 11, new monthly or annual Sustaining Donors get an EFF35 Challenge Coin! With your help, EFF is here to stay. https://eff.org/35years

Global advisor on better cities, city planning, transportation & urban change. City planner + urbanist leading @ToderianUW.bsky.social. Past Chief Planner for Vancouver BC. Past/founding President of @CanUrbanism.bsky.social. International speaker & media.

Notorious GIS reply guy

YIMBY

I will come to your hearing, Berkeley politician

Vuvuzela owner

Father

@jeffinatorator on certain bird sites

UALawSchool |MSNBC & NBCNews |Podcasts #SistersInLaw & Cafe Insider|Obama US Atty |25 year fed'l prosecutor|Wife & Mom of 4|Knits a lot|Civil Discourse newsletter: joycevance.substack.com

Economics Ph.D candidate @UCLA | economics of computing and AI | industrial organization, innovation, political economy | PEPFAR fanatic

https://nicholasemeryxu.com/

Writing about AI policy at Transformer. Supporting AI journalism with grants at Tarbell. Prev: AI safety and EA comms; journalist at The Economist, Protocol, Finimize. London.

https://transformernews.ai

I'm a postdoc with Yoshua Bengio at Mila, and the scientific lead of the International AI Safety Report.

Working towards the safe development of AI for the benefit of all at Université de Montréal, LawZero and Mila.

A.M. Turing Award Recipient and most-cited AI researcher.

https://lawzero.org/en

https://yoshuabengio.org/profile/

William H. Neukom Professor, Stanford Law School. Partner, Lex Lumina LLP. I teach and write in IP, antitrust, internet, and video game law

senior politics reporter @ Rolling Stone magazine // co-author of ‘Sinking in the Swamp’ // adjunct professor at the University of Cincinnati (go Bearcats 🐻 🐱) // volunteer at St. Vincent de Paul - Cincinnati // Rolling Stones obsessive

Professor of Int’l & EU Law, Diplomatic Academy Vienna. Past: Prof., founding Director CIGAD at King's College London, specialist adviser House of Lords EU Select Committee, Référendaire CJEU

President of Signal, Chief Advisor to AI Now Institute

Political science, game theory, music, wooden boats, vintage airplanes and motorcycles.

Senior Fellow and Director, AI and Emerging Tech Initiative at Just Security | Senior Research Associate at the University of Oxford | Formerly White House NSC

Website: www.drbriannarosen.com

AI governance at Oxford Martin AIGI

Technical AI Governance Research at MIRI

Views are my own

Computer Science PhD Student @ Stanford | Geopolitics & Technology Fellow @ Harvard Kennedy School/Belfer | Vice Chair EU AI Code of Practice | Views are my own