The Complicated Politics of Trump’s New AI Executive Order

The administration’s attempt to suppress state AI regulation risks legal backlash, bipartisan resistance, and public distrust, undermining the innovation it seeks to advance. The Trump administration...

Excited to share a new op-ed by @minanrn.bsky.social, @jessicaji.bsky.social, and me for The National Interest!

The administration's new AI Executive Order, aiming to suppress state-level AI regulation, risks undermining the innovation it seeks to advance.

nationalinterest.org/blog/techlan...

30.01.2026 15:53 — 👍 3 🔁 2 💬 1 📌 0

Helping to organize and run this workshop has been a highlight of my time at CSET.

My takeaway from the workshop is that it's hard to find a strong signal on the future of AI use for automating R&D, so we need as much insight as possible into how AI is being used for AI R&D at present.

28.01.2026 06:43 — 👍 1 🔁 0 💬 0 📌 0

AI Gov & Nat'l Policy '25

Week Theme Week 1 Sep 2 Introduction and AI Background The story of AI so far, and what deep learning is. Extra Resources: Computer History Museum: https://www.computerhistory.org/timeline/ai-roboti...

My AI Governance and National Policy course is a wrap! I covered the technical background of AI, how AI is applied (and why we might want to govern it), and what policies about AI are out there.

Find my syllabus here (and let me know what I'm missing): docs.google.com/document/d/1...

16.12.2025 17:27 — 👍 1 🔁 0 💬 0 📌 0

This is an awesome opportunity.

Come work with awesome researchers (and me, too), tackle the thorniest debates in AI, and make a real impact!

16.10.2025 16:25 — 👍 0 🔁 0 💬 0 📌 0

Yesterday I taught my first class. I'm officially a teacher (a professor, even)! I'm very excited to be teaching AI policy to undergrads at Georgetown this semester.

03.09.2025 20:29 — 👍 1 🔁 0 💬 0 📌 0

Data Research Analyst | Center for Security and Emerging Technology

We are currently seeking capable data storytellers, analyzers, and visualizers to serve as Data Research Analysts.

CSET is hiring! Be sure to apply by Tuesday!

Join our data team as a Data Research Analyst to contribute to data-driven research products and policy analysis at the forefront of national security and tech policy.

cset.georgetown.edu/job/data-res...

29.08.2025 14:25 — 👍 1 🔁 2 💬 0 📌 0

Banning state-level AI regulation is a bad idea!

One crucial reason is that states play a critical role in building AI governance infrastructure.

Check out this new op-ed by @jessicaji.bsky.social, me, and @minanrn.bsky.social on this topic!

thehill.com/opinion/tech...

18.06.2025 18:52 — 👍 8 🔁 5 💬 1 📌 0

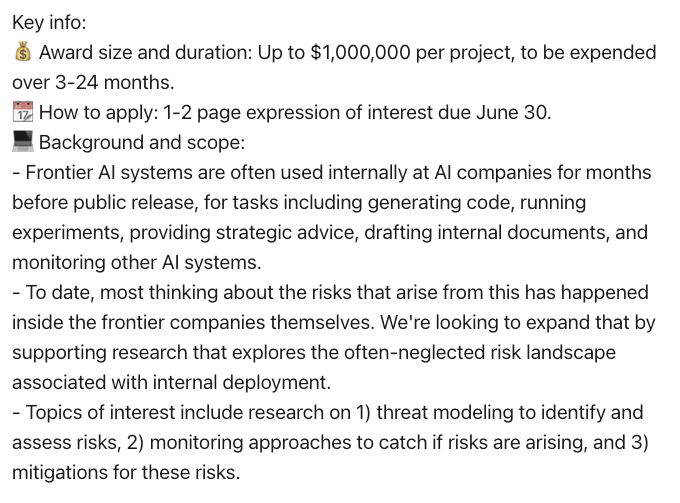

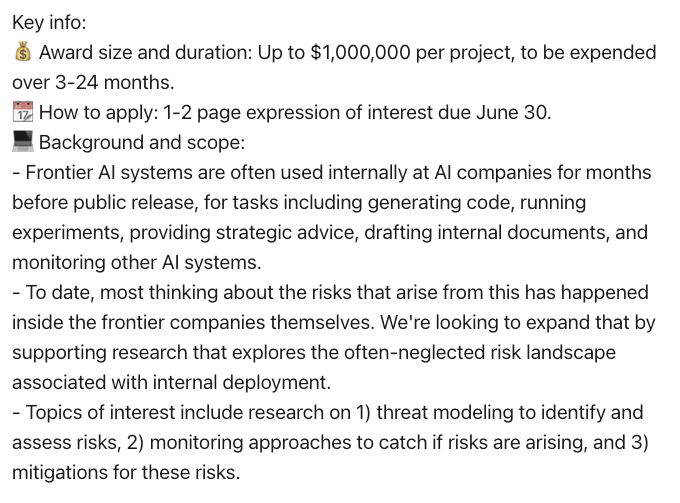

2 weeks left on this open funding call on risks from internal deployments of frontier AI models—submissions are due June 30.

Expressions of interest only need to be 1-2 pages, so there's still time to write one up!

Full details: cset.georgetown.edu/wp-content/u...

16.06.2025 18:37 — 👍 7 🔁 2 💬 0 📌 0

💡Funding opportunity—share with your AI research networks💡

Internal deployments of frontier AI models are an underexplored source of risk. My program at @csetgeorgetown.bsky.social just opened a call for research ideas—EOIs due Jun 30.

Full details ➡️ cset.georgetown.edu/wp-content/u...

Summary ⬇️

19.05.2025 16:59 — 👍 9 🔁 5 💬 1 📌 1

A bar chart of zodiac signs among popes. Aries: 4, Taurus: 7, Gemini: 5, Cancer: 4, Leo: 4, Virgo: 4, Libra: 4, Scorpio: 4, Sagittarius: 5, Capricorn: 5, Aquarius: 4, Pisces: 8

Pope Leo XIV is a rare Virgo ♍

08.05.2025 18:45 — 👍 1 🔁 0 💬 0 📌 0

The audience asked a ton of great questions. We couldn't get to all of them, but I'll be reading through them all and will try to answer some in upcoming research!

26.03.2025 18:22 — 👍 0 🔁 0 💬 0 📌 0

YouTube video by Center for Security and Emerging Technology

What’s Next for AI Red-Teaming?

ICYMI: The CSET webinar on AI red-teaming has been recorded!

www.youtube.com/watch?v=gDnN...

Watch this for a great discussion on what AI red-teaming is, how different organizations do it, and how it can be improved!

Huge thanks to the panelists, moderator, and audience!

26.03.2025 18:22 — 👍 1 🔁 0 💬 1 📌 0

Starting in 5 👀

25.03.2025 15:55 — 👍 1 🔁 1 💬 0 📌 0

ChatGPT - 2025 March Madness Bracket

Shared via ChatGPT

Here's the report: chatgpt.com/share/67db85...

It shows the prompt I used, some follow-up questions ChatGPT asked, and my attempt to get some decent pun-based names for the bracket.

20.03.2025 16:08 — 👍 0 🔁 0 💬 0 📌 0

A filled-in March Madness bracket showing the No. 1 seeds (Duke, Houston, Auburn, and Florida) going to the Final Four, with Duke going all the way.

🏀 I know nothing about college basketball, so I decided to be the avatar for ChatGPT in the @csetgeorgetown.bsky.social #MarchMadness tournament. I used Deep research on o3-mini-high to generate a detailed report and analysis of the tournament. The resulting bracket is very conservative. Go Humans!

20.03.2025 16:08 — 👍 0 🔁 0 💬 1 📌 0

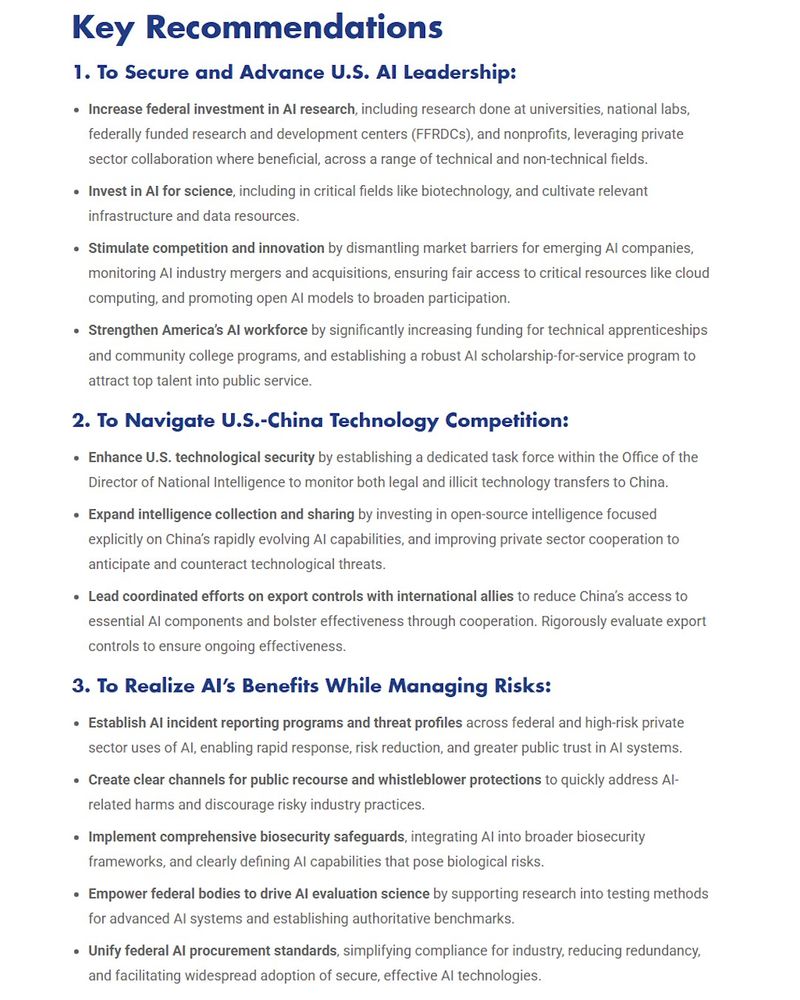

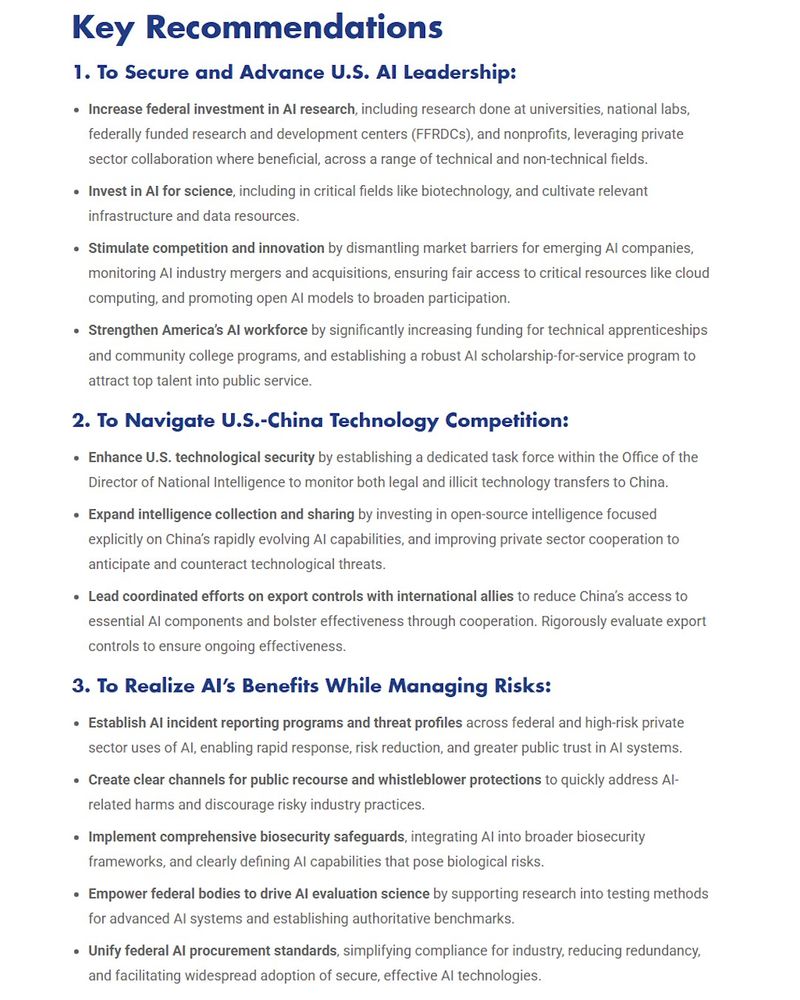

✨ NEW: How can the U.S. stay ahead in AI?

OSTP called for input on developing an “AI Action Plan.” Our response outlines the steps the U.S. should take to:

1️⃣Secure and advance its AI leadership

2️⃣Navigate competition with China

3️⃣Realize AI’s benefits and avoid its risks

17.03.2025 13:23 — 👍 5 🔁 3 💬 2 📌 3

What’s Next for AI Red-Teaming? | Center for Security and Emerging Technology

On March 25, CSET will host an in-depth discussion about AI red-teaming — what it is, how it works in practice, and how to make it more useful in the future.

Why does #AI red-teaming suck? How can we make it suck less?

All this and more at the next CSET webinar: cset.georgetown.edu/event/whats-...

Join me, the Director of Microsoft's AI Red Team, the MITRE ATLAS Lead, and the Director of Apollo Research. This will be an awesome conversation.

12.03.2025 15:32 — 👍 4 🔁 1 💬 0 📌 0

What: CSET Webinar 📺

When: Tuesday, 3/25 at 12PM ET 📅

What’s next for AI red-teaming? And how do we make it more useful?

Join Tori Westerhoff, Christina Liaghati, Marius Hobbhahn, and CSET's @dr-bly.bsky.social and @jessicaji.bsky.social for a great discussion: cset.georgetown.edu/event/whats-...

12.03.2025 15:11 — 👍 4 🔁 4 💬 0 📌 1

Expert Q&A: At Paris AI Summit, Speed V. Risk

A look at how embrace of AI opportunities weighed against concerns of AI risks at the Paris AI Action Summit.

I gave my takes from the Paris AI Action Summit to @thecipherbrief.bsky.social last week. I heard three Nobel Laureates warn about the risks of AI, while VP Vance was "not here ... to talk about AI safety" and focused on opportunity. I think we can innovate on AI without building unsafe products.

18.02.2025 19:18 — 👍 1 🔁 0 💬 0 📌 0

I'm packing my bags for an exciting week in Paris! I'll be contributing to the Paris Peace Forum's AI-Cyber Nexus discussions, and I'll be attending @iaseai.bsky.social '25 and the Paris AI Security Forum. I'm registered for a few other events, as well (if I can find the time and energy to attend).

03.02.2025 20:25 — 👍 1 🔁 0 💬 0 📌 0

Fact Sheet: President Donald J. Trump Takes Action to Enhance America’s AI Leadership – The White House

REMOVING BARRIERS TO AMERICAN AI INNOVATION: Today, President Donald J. Trump signed an Executive Order eliminating harmful Biden Administration AI

The fact sheet [1] on Pres. Trump's AI Executive Order calls out the administration's previous work on regulating AI with Kratsios's Op-Ed on "AI that Reflects American Values". I think there's an RLHF-to-CoT narrative that highlights the value of safety in AI.

1: www.whitehouse.gov/fact-sheets/...

29.01.2025 21:00 — 👍 0 🔁 0 💬 0 📌 0

30+ yrs writing code. Go expert now deep in AI-powered development. Founder GopherGuides - Co-author Go Fundamentals. 10K+ devs trained. Videos & blog

Interested in:

Alignment | Emergent Systems | Logic |

Urban Design | Public Transit | AI Safety

Just a man flesh and bone.

Cybersecurity/ICS/OT/IT/IoT/Engineering/SmartGrid

Innovation/Sustainability/Climate/Recycle/GreenTech

Data/Research/Investing/FreeThinking/

Democracy/Politics/Liberal/Organizer/

Music/Theater/Art/Museums/Food/Languages

Research Fellow @ GMU | AI Governance + PPE + HPS | Studying @ VT

machineculture.io | ryanhauser.carrd.co | ◁▷◁

We are a researcher community developing scientifically grounded research outputs and robust deployment infrastructure for broader impact evaluations.

https://evalevalai.com/

A semi-regular gathering for irregulars from the security research community to engage with Congressional staffers. Run by I Am The Cavalry, bridging the gap between the hacker and public policy communities since 2017.

AI technical gov & risk management research. PhD student @MIT_CSAIL, fmr. UK AISI. I'm on the CS faculty job market! https://stephencasper.com/

Creating the Global Disinformation Policy Database. https://disinfo-policy.org

I have a Pug.

Democracy, technology, and collective sensemaking.

PhD student @ the University of Texas.

Professor at Wharton, studying AI and its implications for education, entrepreneurship, and work. Author of Co-Intelligence.

Book: https://a.co/d/bC2kSj1

Substack: https://www.oneusefulthing.org/

Web: https://mgmt.wharton.upenn.edu/profile/emollick

Statistician, Associate Professor (Lektor) at University of Gothenburg and Chalmers; inference and conditional distributions for anything

https://mschauer.github.io

http://orcid.org/0000-0003-3310-7915

[ˈmoː/r/ɪts ˈʃaʊ̯ɐ]

Cybersecurity for AI :: https://mindgard.ai/

www.rummanchowdhury.com

www.humane-intelligence.org

CEO & co-founder, Humane Intelligence

US Science Envoy for AI (Biden Administration)

Assistant Professor at the Polaris Lab @ Princeton (https://www.polarislab.org/); Researching: RL, Strategic Decision-Making+Exploration; AI+Law

CS PhD candidate at Princeton. I study the societal impact of AI.

Website: cs.princeton.edu/~sayashk

Book/Substack: aisnakeoil.com

PhD @ MIT. Prev: Google Deepmind, Apple, Stanford. 🇨🇦 Interests: AI/ML/NLP, Data-centric AI, transparency & societal impact

AI researcher & teacher at SCAI, ASU. Former President of AAAI & Chair of AAAS Sec T. Here to tweach #AI. YouTube Ch: http://bit.ly/38twrAV Twitter: rao2z

Technical AI Policy Researcher at HuggingFace @hf.co 🤗. Current focus: Responsible AI, AI for Science, and @eval-eval.bsky.social!

Professor and Head of Machine Learning Department at Carnegie Mellon. Board member OpenAI. Chief Technical Advisor Gray Swan AI. Chief Expert Bosch Research.

AI professor. Director, Foundations of Cooperative AI Lab at Carnegie Mellon. Head of Technical AI Engagement, Institute for Ethics in AI (Oxford). Author, "Moral AI - And How We Get There."

https://www.cs.cmu.edu/~conitzer/