SODAS Data Discussion 3 (Fall 2025)

SODAS is delighted to host Daniel Juhász Vigild and Stephanie Brandl for the Fall 2025 Data Discussion series!

Join us for a Data Discussion on Friday, November 7! 📅

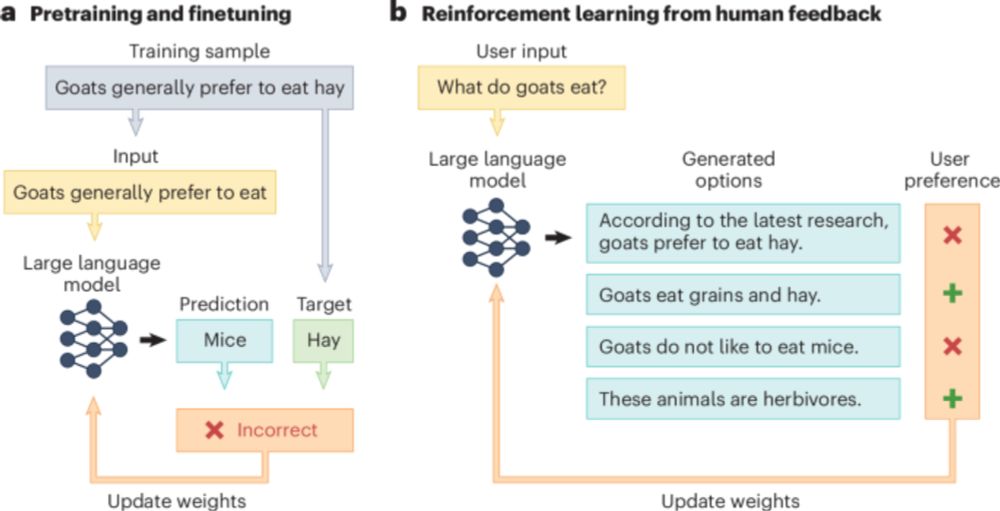

Daniel Juhász Vigild will start by exploring how government use of AI impacts its trustworthiness, while Stephanie Brandl will examine whether LLMs can identify and classify fine-grained forms of populism.

Event🔗: sodas.ku.dk/events/sodas...

31.10.2025 13:12 — 👍 3 🔁 3 💬 0 📌 1

Cheers from Learning@Scale poster 033 😋

22.07.2025 11:56 — 👍 1 🔁 0 💬 0 📌 0

Loved working on this with @kizilcec.bsky.social, Magnus Lindgaard Nielsen, David Dreyer Lassen, and @andbjn.bsky.social. Thank you for the collaboration; looking forward to future work!

21.07.2025 08:15 — 👍 1 🔁 0 💬 0 📌 0

To learn more, check out our paper and TikTok-style video, or see my poster and talk this week in Palermo!

Paper and video: dl.acm.org/doi/10.1145/...

Poster session: learningatscale.acm.org/las2025/inde...

Lightning talk: fair4aied.github.io/2025/

21.07.2025 08:15 — 👍 0 🔁 0 💬 1 📌 0

Depending on the time point and fairness metric, we observe both alarming disparities and confidence intervals that include zero.

21.07.2025 08:03 — 👍 0 🔁 0 💬 1 📌 0

While human behavior and the data describing it evolve over time, fairness is often evaluated at a single snapshot. Yet, as we show in our newly published paper, fairness is dynamic. We studied how fairness evolves in dropout prediction across enrollment and found that it shifts over time.

21.07.2025 08:02 — 👍 4 🔁 2 💬 1 📌 0

Researcher. Cities, urban morphology, human geography & spatial data science. Open source software developer. #python #cities #open_science

https://martinfleischmann.net

Sr. Principal Research Manager at Microsoft Research, NYC // Machine Learning, Responsible AI, Transparency, Intelligibility, Human-AI Interaction // WiML Co-founder // Former NeurIPS & current FAccT Program Co-chair // Brooklyn, NY // http://jennwv.com

On the 2025/2026 job market! Machine learning, healthcare, and robustness

postdoc @ Cornell Tech, phd @ MIT

dmshanmugam.github.io

Lead research engineer @dairinstitute.bsky.social, social dancer, aspirational post-apocalyptic gardener 🏳️‍🌈😷

I run workshops @dairfutures.bsky.social. Always imagining otherwise.

dylanbaker.com

they/he

A workshop series where we make zines that answer the question: what happens when we imagine otherwise?

Run by @dylnbkr.bsky.social and @paulinewee.bsky.social from @dairinstitute.bsky.social

Sign up for workshops:

>>> https://linktr.ee/dair.futures <<<

tech & society @washingtonpost.com

stories: drewharwell.com

videos: tiktok.com/@drewharwell

+ instagram.com/bydrewharwell

The Center for Information Technology Policy (CITP) is a nexus of expertise in technology, engineering, public policy, & the social sciences. Our researchers work to better understand and improve the relationship between technology & society. Princeton U.

Director, Max Planck Center for Humans & Machines http://chm.mpib-berlin.mpg.de | Former prof. @MIT | Creator of http://moralmachine.net | Art: http://instagram.com/iyad.rahwan Web: rahwan.me

Lecturer at the Zentrum Medien & Informatik, PH Luzern | AI in education | AIEOU: AI in Education at the University of Oxford

#CAS Fachkarriere Schule in der Digitalität

AIEOU is a growing community of academics, educators, technologists, policymakers and students exploring the promise and pitfalls of AI in Education together. Based at the Department of Education, University of Oxford.

The FAccTRec workshop at ACM RecSys. https://facctrec.github.io/facctrec2023

HCI Prof at CMU HCII. Research on augmented intelligence, participatory AI, & complementarity in human-human and human-AI workflows.

thecoalalab.com

A Nature Portfolio journal bringing you research and commentary on all aspects of human behaviour.

https://www.nature.com/nathumbehav/

The Association for Computing Machinery's Computer-Human Interaction Conference and Steering Committee.

#CHI2026 is in Barcelona, Spain 🎨 April 13-17.

Content managed by Social Media Chairs.

EurIPS is a community-organized, NeurIPS-endorsed conference in Copenhagen where you can present papers accepted at @neuripsconf.bsky.social

eurips.cc

ML researcher, MSR + Stanford postdoc, future Yale professor

https://afedercooper.info

PhD student at @charlesuni.cuni.cz, research @ensured.bsky.social

Political Science - intl. cooperation, party politics, statistics & NLP

https://stepanjaburek.github.io/

A non-profit bringing together academic, civil society, industry, & media organizations to address the most important and difficult questions concerning AI.

Applied AI Scientist (in 🇩🇰 since 2012) • http://pedromadruga.com • Interested in Information Retrieval at scale • Lead AI Scientist at Karnov Group

Opinions are my own.

PhD student at the ROCKWOOL Foundation and SODAS (University of Copenhagen), examining the use of artificial intelligence in the public sector.