Thanks Hope! I just came across your related work with the CSS team at Microsoft. I'd love to chat about it sometime if you're free 🙂

11.02.2025 23:20 — 👍 1 🔁 0 💬 0 📌 0

Hi Daniel, thanks so much. The preprint is dependable, though it's missing a little additional discussion that made it into the camera-ready. I can email you the camera-ready version and will update arXiv with it shortly. Thank you!

11.02.2025 23:13 — 👍 1 🔁 0 💬 0 📌 0

Many thanks to my collaborators and @kempnerinstitute.bsky.social for helping make this idea come to life, and to @rdhawkins.bsky.social for helping plant the seeds 🌱

10.02.2025 17:20 — 👍 2 🔁 1 💬 0 📌 0

(8/9) We think that better understanding such trade-offs will be important for building LLMs that are aligned with human values. Human values are diverse, and our models should be too.

10.02.2025 17:20 — 👍 1 🔁 0 💬 1 📌 0

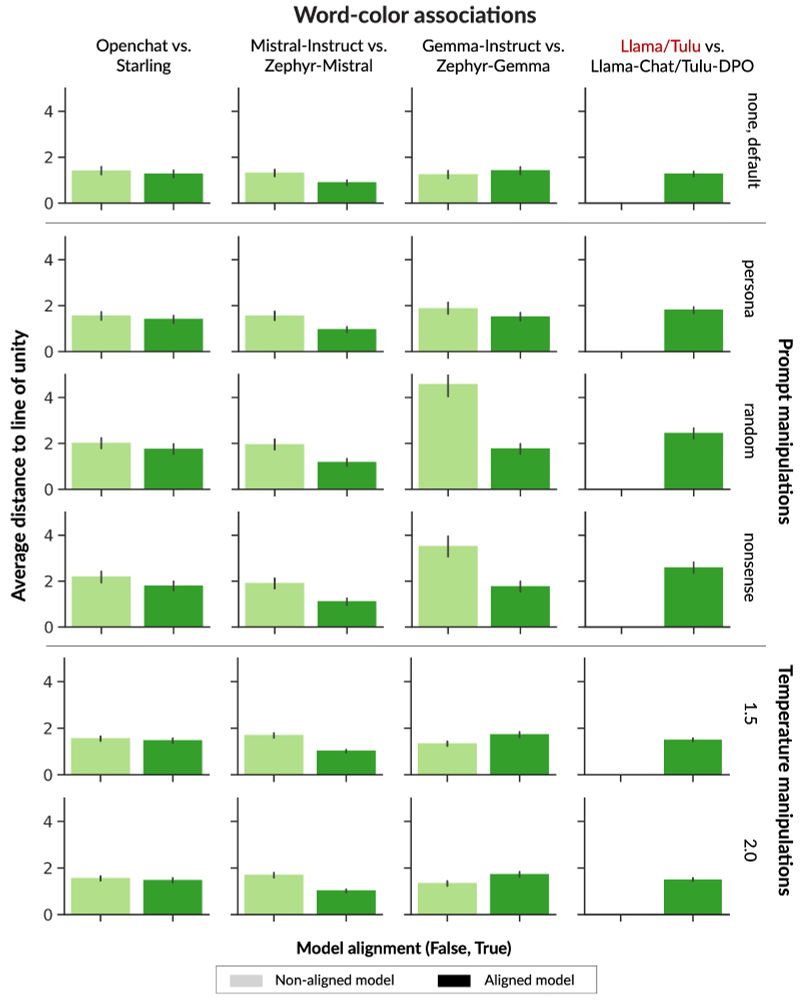

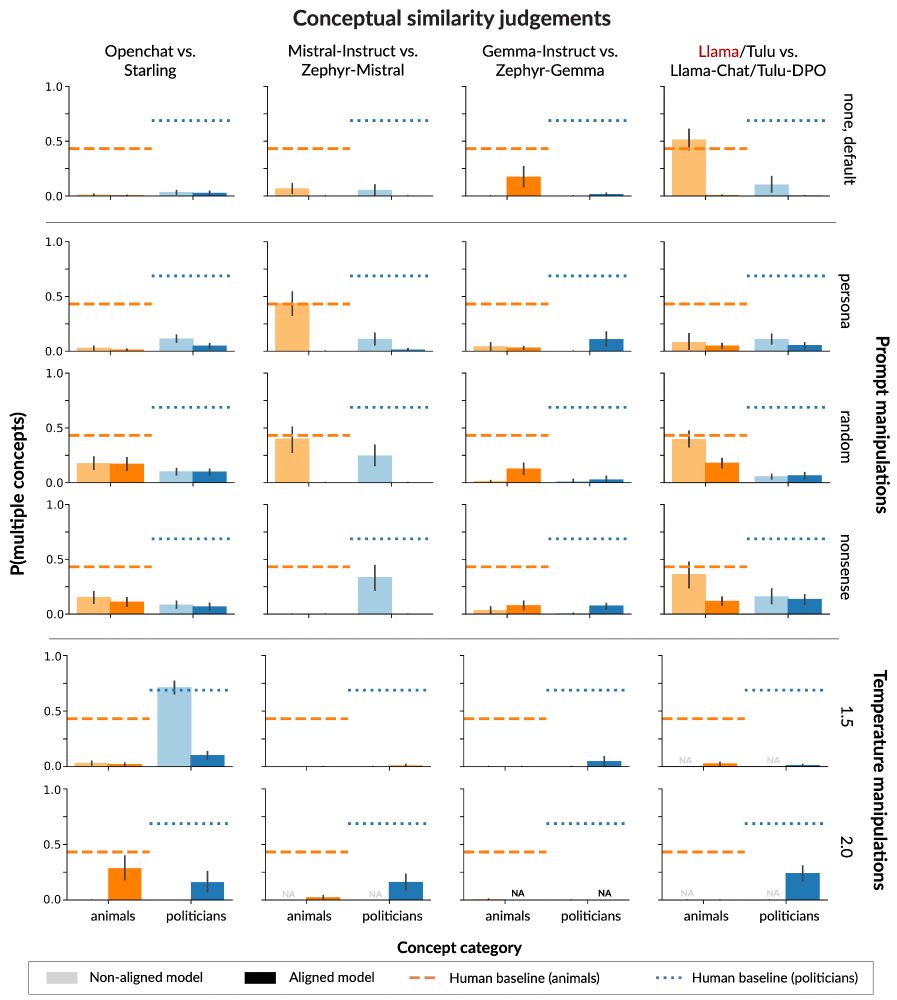

(7/9) This suggests a trade-off: increasing model safety in terms of value alignment decreases safety in terms of diversity of thought and opinion.

10.02.2025 17:20 — 👍 3 🔁 0 💬 1 📌 0

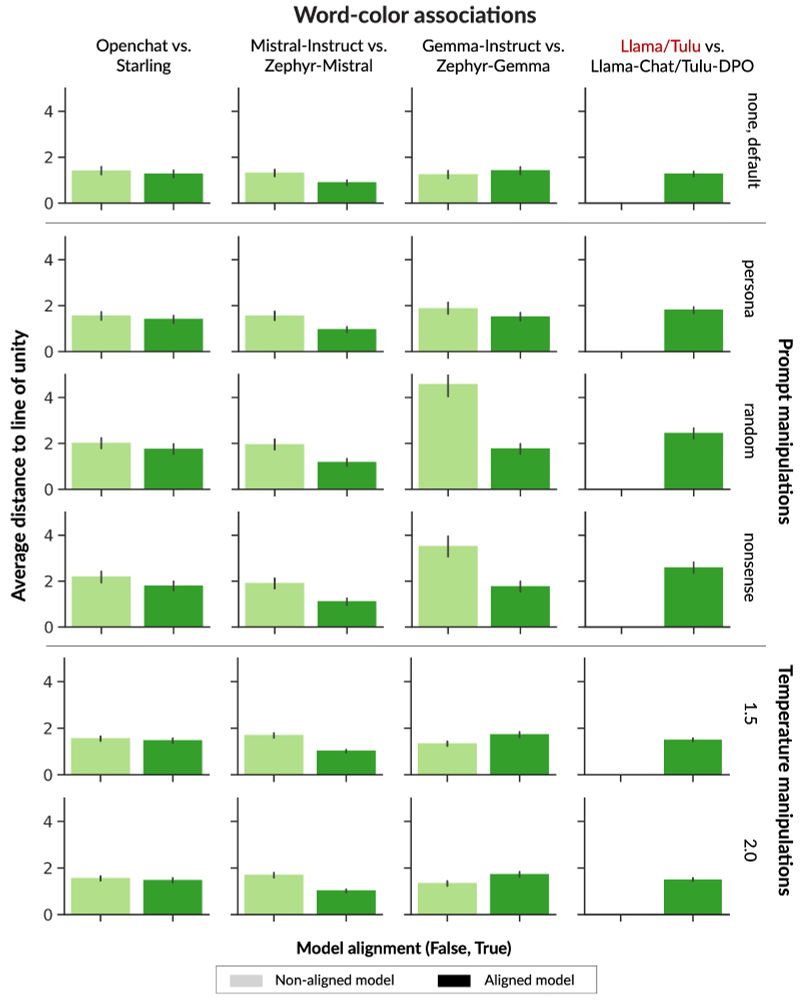

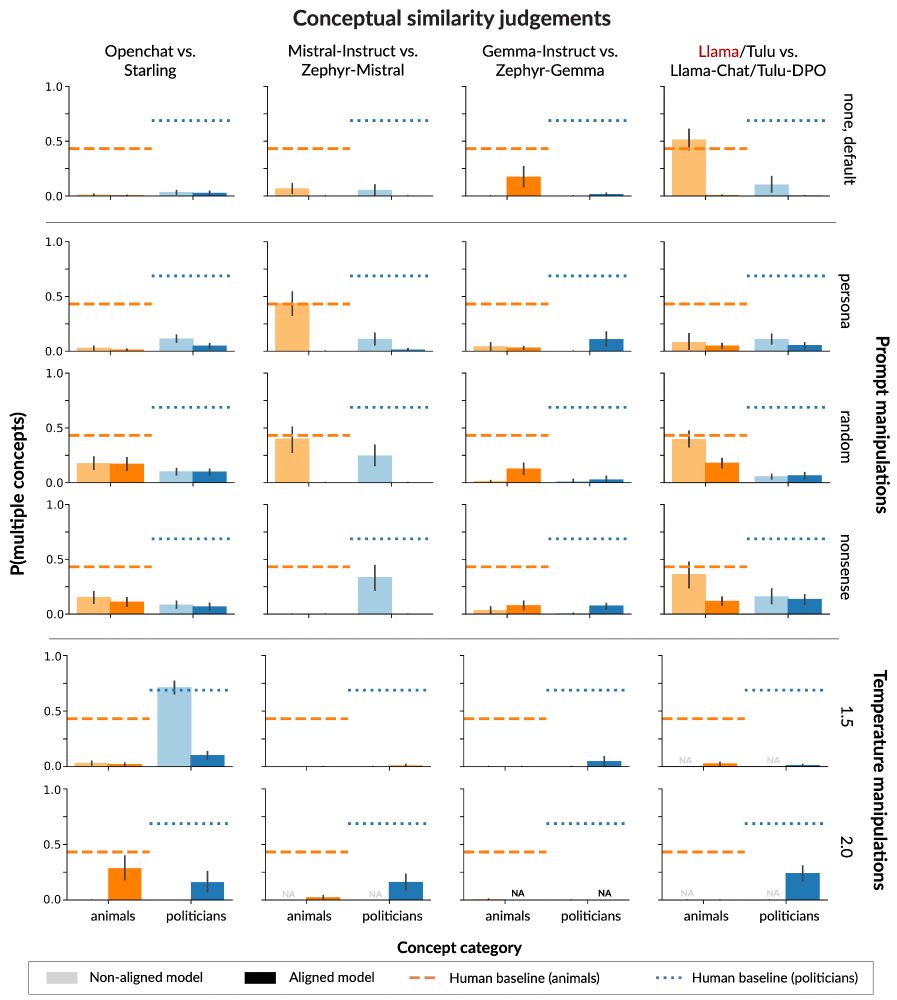

(6/9) We put a suite of aligned models and their instruction fine-tuned counterparts to the test and found:

* no model reaches human-like diversity of thought.

* aligned models show LESS conceptual diversity than their instruction fine-tuned counterparts.

10.02.2025 17:20 — 👍 1 🔁 0 💬 1 📌 0

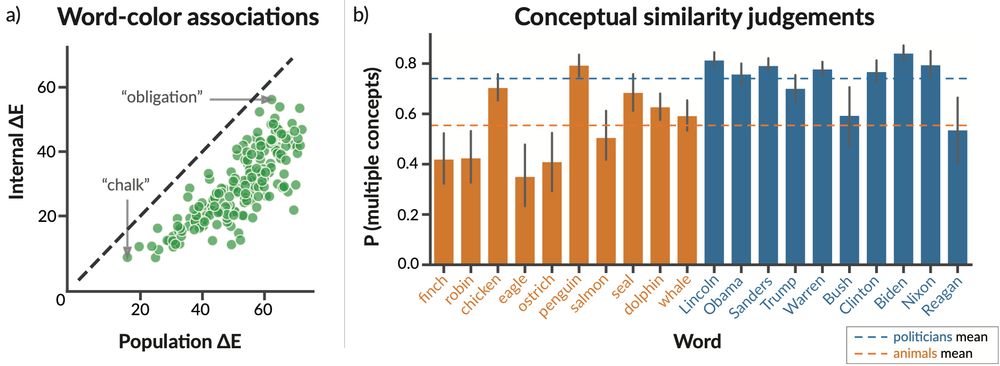

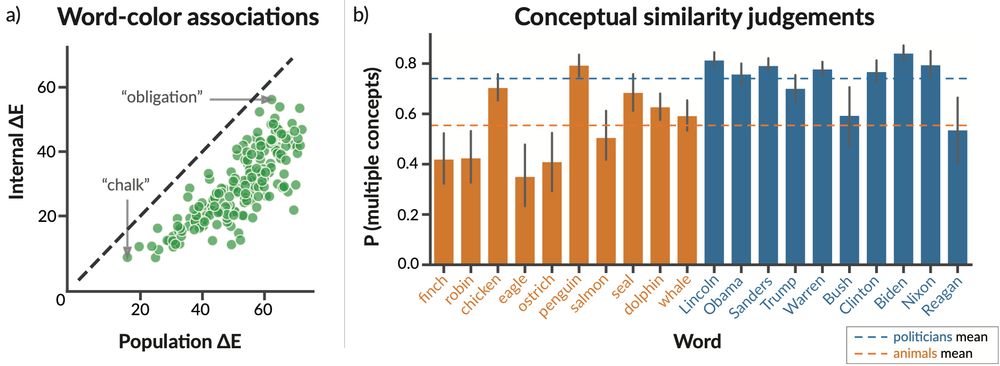

(5/9) Our experiments are inspired by human studies in two domains with rich behavioral data.

10.02.2025 17:20 — 👍 2 🔁 0 💬 1 📌 0

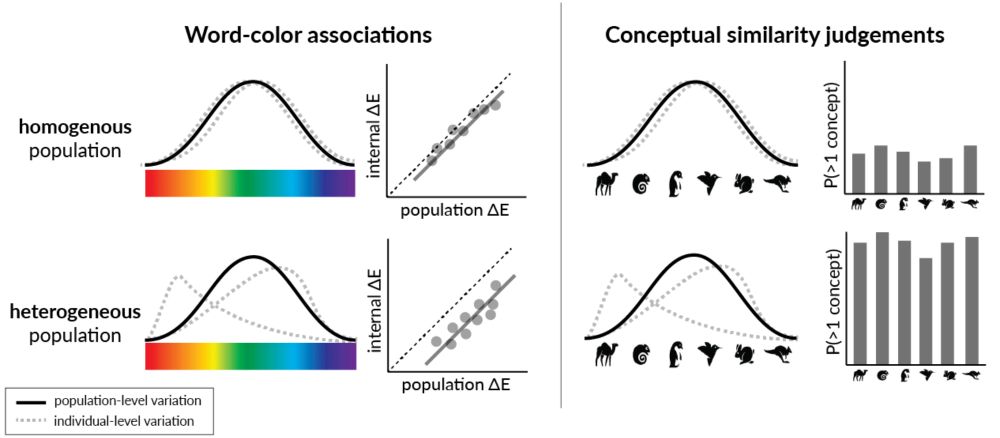

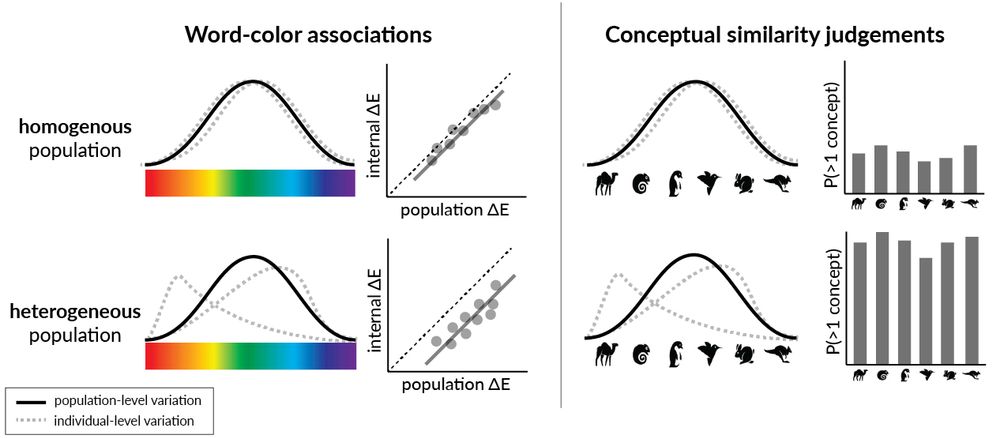

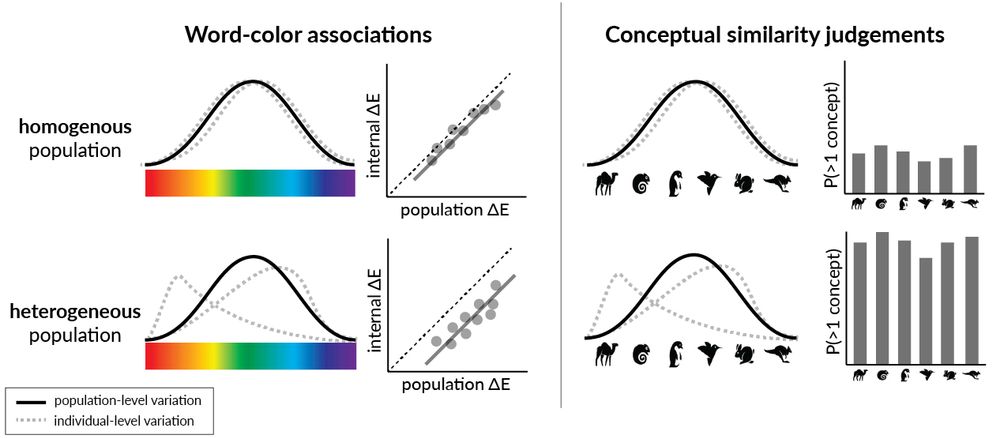

(4/9) We introduce a new way of measuring the conceptual diversity of synthetically generated LLM “populations” by considering how the variability of a population's “individuals” relates to that of the population as a whole.

10.02.2025 17:20 — 👍 0 🔁 0 💬 1 📌 0
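To make the idea in (4/9) concrete, here is a minimal sketch of one way to relate individual-level variability to population-level variability. It is not the metric from the paper: the function name `variability_summary`, the input format (numeric responses with the same number of repeated samples per simulated individual), and the simple variance decomposition are all assumptions chosen for illustration.

```python
import statistics


def variability_summary(responses):
    """Split response variability into within- and between-individual parts.

    `responses` is a hypothetical input: a dict mapping an individual's id
    to a list of numeric responses (repeated samples for the same prompt).
    All individuals are assumed to have the same number of samples.
    """
    # Average variance of each individual's own repeated responses.
    within = statistics.mean(
        statistics.pvariance(samples) for samples in responses.values()
    )
    # Variance of the individuals' mean responses across the population.
    individual_means = [statistics.mean(s) for s in responses.values()]
    between = statistics.pvariance(individual_means)
    total = within + between
    return {
        "within": within,
        "between": between,
        # Share of total variability explained by differences between
        # individuals; 0.0 means the "individuals" are interchangeable.
        "between_share": between / total if total else 0.0,
    }


# A population whose members all answer alike vs. one whose members differ.
homogeneous = variability_summary({"a": [1, 2, 3], "b": [1, 2, 3]})
heterogeneous = variability_summary({"a": [1, 1, 1], "b": [3, 3, 3]})
```

In this toy setup, a low `between_share` would indicate a population that varies only because each individual is noisy, while a high value would indicate individuals who are internally consistent but differ from one another.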

(3/9) One key issue is whether LLMs capture conceptual diversity: the variation among individuals’ representations of a particular domain. How do we measure this? And how does alignment affect this?

10.02.2025 17:20 — 👍 2 🔁 0 💬 1 📌 0

(2/9) There's a lot of interest right now in getting LLMs to mimic the response distributions of “populations” (heterogeneous collections of individuals) for the purposes of political polling, opinion surveys, and behavioral research.

10.02.2025 17:20 — 👍 2 🔁 0 💬 1 📌 0

(1/9) Excited to share my recent work on "Alignment reduces LMs' conceptual diversity" with @tomerullman.bsky.social and @jennhu.bsky.social, to appear at #NAACL2025! 🐟

We want models that match our values... but could this hurt their diversity of thought?

Preprint: arxiv.org/abs/2411.04427

10.02.2025 17:20 — 👍 63 🔁 10 💬 2 📌 4

🧠 Researcher: noorsajid.com

PhD student at Harvard/MIT interested in neuroscience, language, AI | @kempnerinstitute.bsky.social @mitbcs.bsky.social | prev: Princeton neuro | coltoncasto.github.io

PhDing @ Harvard, previously @ Duke

CoCoDev&Ed: https://projects.iq.harvard.edu/ccdlab/home

PhD student @ MIT Brain and Cognitive Sciences

aliciamchen.github.io

Tea drinking assistant professor of cognitive psychology at Stanford.

https://cicl.stanford.edu

pedestrian and straphanger, reluctantly in california, natural language processor, contentedly irrational, humanist | no kings, no masters, no ghosts

PhD Student @nyudatascience.bsky.social, working with He He on NLP and Human-AI Collaboration.

Also hanging out @ai2.bsky.social

Website - https://vishakhpk.github.io/

NLP research - PhD student at UW

Will irl - PhD student @ NYU on the academic job market!

Using complexity theory and formal languages to understand the power and limits of LLMs

https://lambdaviking.com/ https://github.com/viking-sudo-rm

Studying NLP, CSS, and Human-AI interaction. PhD student @MIT. Previously at Microsoft FATE + CSS, Oxford Internet Institute, Stanford Symbolic Systems

hopeschroeder.com

PhD student at Brown University working on interpretability. Prev. at Ai2, Google

cs phd student at brown

https://apoorvkh.com

Exploring world in sounds.

Junior Research Fellow, University of Cambridge

www.harinlee.info

Postdoc @harvard.edu @kempnerinstitute.bsky.social

Homepage: http://satpreetsingh.github.io

Twitter: https://x.com/tweetsatpreet

Associate Professor, Department of Psychology, Harvard University. Computation, cognition, development.

asst prof @Stanford linguistics | director of social interaction lab 🌱 | bluskies about computational cognitive science & language

Asst Prof at Johns Hopkins Cognitive Science • Director of the Group for Language and Intelligence (GLINT) ✨• Interested in all things language, cognition, and AI

jennhu.github.io
