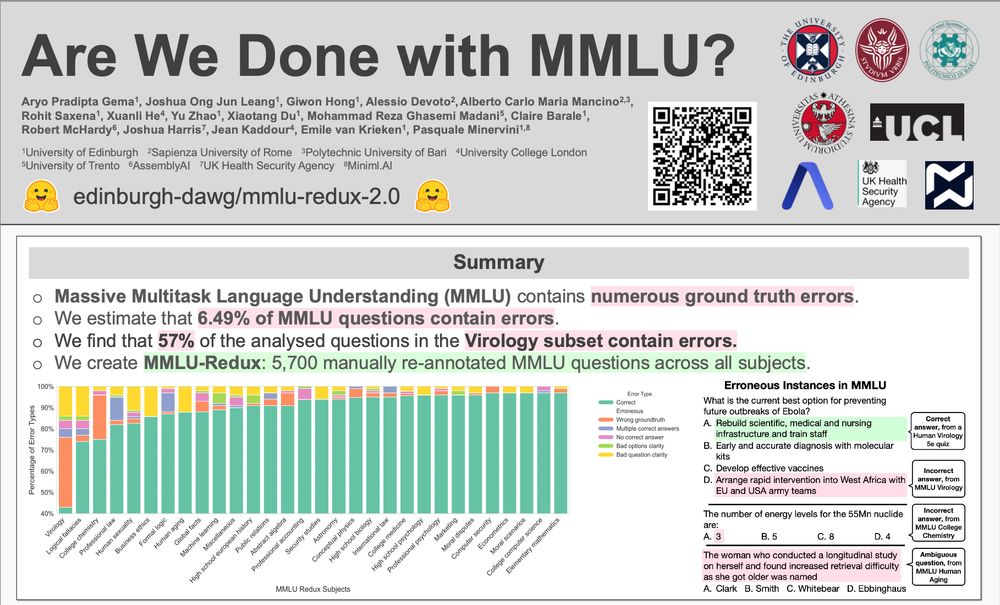

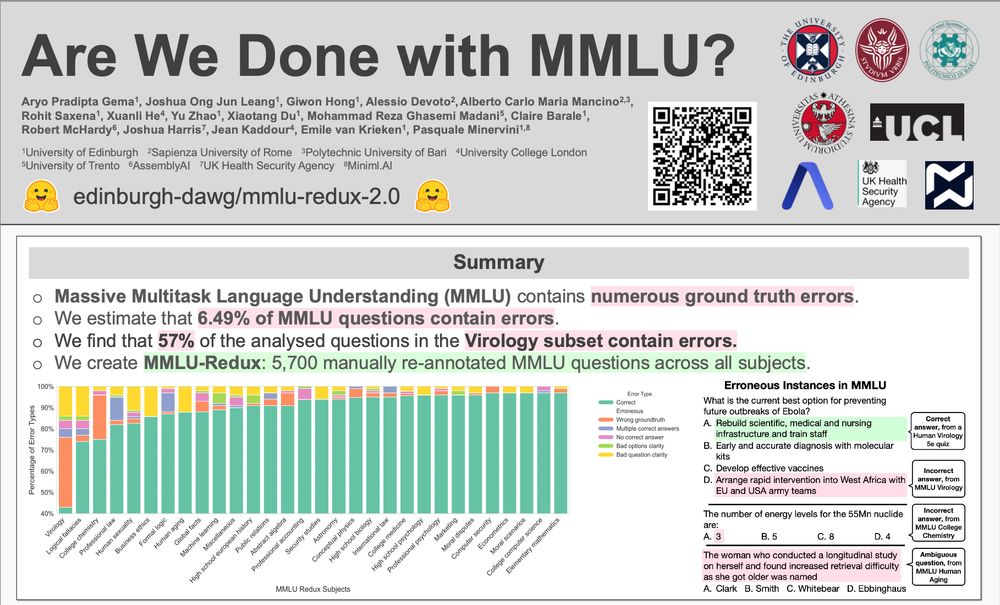

MMLU-Redux Poster at NAACL 2025

MMLU-Redux just touched down at #NAACL2025! 🎉

Wish I could be there for our "Are We Done with MMLU?" poster today (9:00-10:30am in Hall 3, Poster Session 7), but visa drama said nope 😅

If anyone's swinging by, give our research some love! Hit me up if you check it out! 👋

02.05.2025 13:00 — 👍 16 🔁 11 💬 0 📌 0

Thanks @nolovedeeplearning.bsky.social for the picture!!! 🥰

06.12.2024 21:54 — 👍 21 🔁 3 💬 1 📌 1

Very cool work! 👏🚀 Unfortunately, errors in the original dataset will propagate to all new languages 😕

We investigated the issue of existing errors in the original MMLU in

arxiv.org/abs/2406.04127

@aryopg.bsky.social @neuralnoise.com

06.12.2024 13:57 — 👍 4 🔁 2 💬 0 📌 1

For clarity -- great project, but most of the MMLU errors we found (and fixed) in our MMLU Redux paper (arxiv.org/abs/2406.04127) are also present in this dataset. We also provide a curated version of MMLU, so it's easy to fix 😊

06.12.2024 09:26 — 👍 15 🔁 4 💬 1 📌 0

Super Cool work from Cohere for AI! 🎉 However, this highlights a concern raised by our MMLU-Redux team (arxiv.org/abs/2406.04127): **error propagation to many languages**. Issues in MMLU (e.g., "rapid intervention to solve ebola") seem to persist in many languages. Let's solve the root cause first?

06.12.2024 09:38 — 👍 9 🔁 3 💬 1 📌 0

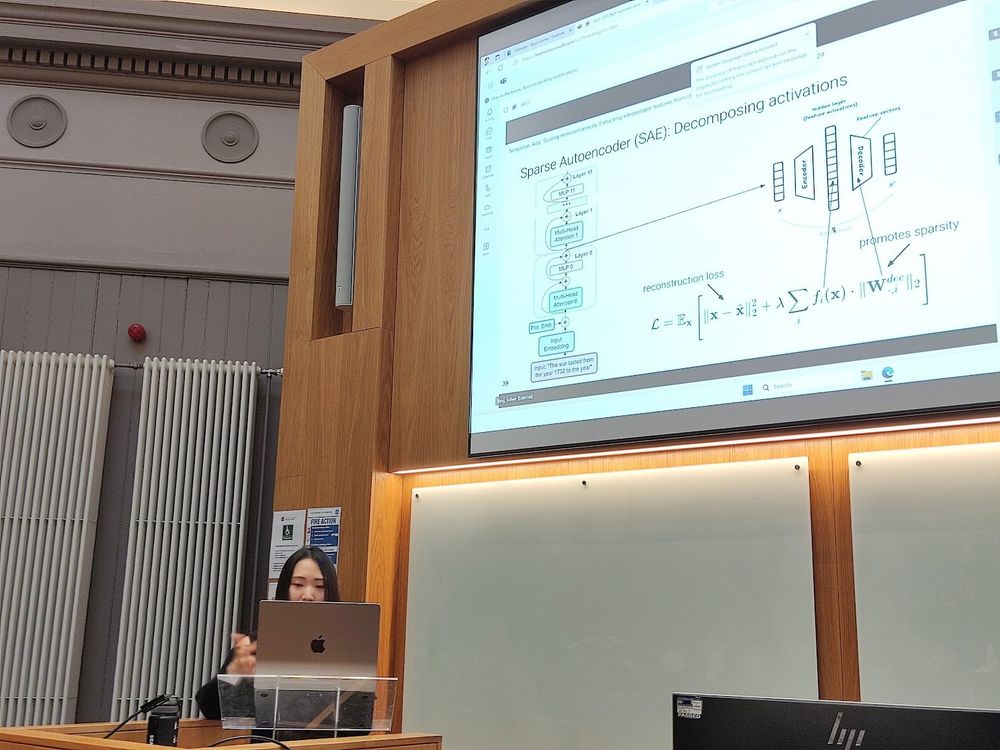

Sohee (@soheeyang.bsky.social) in the house! 🚀🚀🚀

05.12.2024 14:38 — 👍 9 🔁 1 💬 0 📌 0

The OLMo 2 models sit at the Pareto frontier of training FLOPs vs model average performance.

Meet OLMo 2, the best fully open language model to date, including a family of 7B and 13B models trained up to 5T tokens. OLMo 2 outperforms other fully open models and competes with open-weight models like Llama 3.1 8B — As always, we released our data, code, recipes and more 🎁

26.11.2024 20:51 — 👍 151 🔁 36 💬 5 📌 12

This paper's findings about testing LLMs on NLI align with many of my personal thoughts:

1) NLI remains a difficult task for LLMs

2) Having more few-shot examples is helpful (in my view, helping LLMs better understand class boundaries)

3) Incorrect predictions are often a result of ambiguous labels

24.11.2024 16:38 — 👍 27 🔁 3 💬 1 📌 0

Hey John! Thanks for reaching out—I’ve sent you a DM to discuss this further!

24.11.2024 22:25 — 👍 0 🔁 0 💬 1 📌 0

Hii I’d love to join as well!!! 🙋🏼‍♀️

24.11.2024 03:48 — 👍 0 🔁 0 💬 0 📌 0

Hii I’d love to join as well!!

24.11.2024 03:46 — 👍 1 🔁 0 💬 0 📌 0

Check out our CoMAT: Chain of Mathematically Annotated Thought, which improves mathematical reasoning by converting mathematical questions into structured symbolic representations and performing step-by-step reasoning 🎉 It works across various languages and challenging benchmarks.

arxiv.org/pdf/2410.103...

20.11.2024 15:29 — 👍 0 🔁 0 💬 1 📌 0

The main question about the current LLM “reasoning” research is what to do next. Most work goes into synthetic generation and training on it, maybe with self-refinement, in the hope that the model becomes better. I think we are missing controlled task formalization, step-by-step reasoning, and strict step verification.

19.11.2024 05:34 — 👍 24 🔁 3 💬 5 📌 1

Thanksss!!!!!

20.11.2024 14:50 — 👍 1 🔁 0 💬 0 📌 0

1/ Introducing ᴏᴘᴇɴꜱᴄʜᴏʟᴀʀ: a retrieval-augmented LM to help scientists synthesize knowledge 📚

@uwnlp.bsky.social & Ai2

With open models & 45M-paper datastores, it outperforms proprietary systems & matches human experts.

Try out our demo!

openscholar.allen.ai

19.11.2024 16:30 — 👍 161 🔁 39 💬 6 📌 8

Hi, I’d love to be added as well! 🙋🏼‍♀️

20.11.2024 13:40 — 👍 0 🔁 0 💬 1 📌 0

Hey, I’m available! However, I can’t send you a dm since it’s restricted to followers. If you could send me a message instead, that’d be great!

20.11.2024 13:40 — 👍 0 🔁 0 💬 0 📌 0

I’ll be travelling to London from Wednesday to Friday for an upcoming event and would be very happy to meet up! 🚀

I'd love to chat about my recent work (DeCoRe, MMLU-Redux, etc.). DM me if you’re around! 👋

DeCoRe: arxiv.org/abs/2410.18860

MMLU-Redux: arxiv.org/abs/2406.04127

18.11.2024 13:48 — 👍 12 🔁 7 💬 0 📌 0

dm-ed you!

20.11.2024 00:43 — 👍 1 🔁 0 💬 0 📌 0

Added! Thanks!!

18.11.2024 11:04 — 👍 0 🔁 0 💬 0 📌 0

I made a starter pack with the people doing something related to Neurosymbolic AI that I could find.

Let me know if I missed you!

go.bsky.app/RMJ8q3i

11.11.2024 15:27 — 👍 91 🔁 36 💬 16 📌 2

Hi I would love to be added as well!!

18.11.2024 09:33 — 👍 1 🔁 0 💬 1 📌 0

Hi, I would love to be added as well!

18.11.2024 09:31 — 👍 1 🔁 0 💬 0 📌 0

Hi, I’d love to be added as well!

18.11.2024 09:26 — 👍 1 🔁 0 💬 0 📌 0

Hi, I’d love to be added, thanks!!!

18.11.2024 08:50 — 👍 0 🔁 0 💬 0 📌 0

Senior Research Scientist @GoogleDeepMind working on Autonomous Assistants ✍️🤖

Center for Language and Speech Processing at Johns Hopkins University

#NLProc #MachineLearning #AI http://tinyurl.com/clspy2ube

associate prof at UMD CS researching NLP & LLMs

PhD student/research scientist intern at UCL NLP/Google DeepMind (50/50 split). Previously MS at KAIST AI and research engineer at Naver Clova. #NLP #ML 👉 https://soheeyang.github.io/

Ph.D. candidate @ UMD CS Clip lab | ex Intern @ Meta FAIR & Microsoft | Multilingual and Multimodal NLP. Machine Translation and Speech Translation. https://h-j-han.github.io/

doing ML stuff at answer.ai / fast.ai

🇯🇵-based 🇫🇷man

27, French CS Engineer 💻, PhD in ML 🎓🤖 — Guiding generative models for better synthetic data and building multimodal representations @LightOn

Sentence Transformers, SetFit & NLTK maintainer

Machine Learning Engineer at 🤗 Hugging Face

👨‍🍳 Web Agents @mila-quebec.bsky.social

🎒 @mcgill-nlp.bsky.social

PhD student at McGill University and Mila — Quebec AI Institute

#NLP Postdoc at Mila - Quebec AI Institute & McGill University

mariusmosbach.com

Search at Reddit, formerly Shopify and OpenSource Connections. Helped write Relevant Search / AI Powered Search

http://softwaredoug.com

RecSys, AI, Engineering; Principal Applied Scientist @ Amazon. Led ML @ Alibaba, Lazada, Healthtech Series A. Writing @ eugeneyan.com, aiteratelabs.com.

Research Engineer at Zeta Alpha. Likes 🧠 neural IR, 📋 model evals, and 🏋️ lifting weights. Incredibly optimistic about the future!

𝕏: din0s_ / 🖥️: din0s.me

Research Scientist at Yahoo! / ML OSS developer

PhD in Computer Science at UC Irvine

Research: ML, NLP, Computer Vision, Information Retrieval

Technical Chair: #CVPR2026 #ICCV2025 #WACV2026

Open Source/Science matters!

https://yoshitomo-matsubara.net

Glasgow Information Retrieval Group at the University of Glasgow

proud Mediterranean 🧿 open-sourceress at hugging face 🤗 multimodality, zero-shot vision, vision language models, transformers

PhD researcher (Recommender Systems) @TerrierTeam, University of Glasgow.

Ex. Senior Software Engineer @Amazon

The opinions are mine.