We call for improving self-reevaluation for safer & more reliable reasoning models!

Work done w/ Sang-Woo Lee, @norakassner.bsky.social, Daniela Gottesman, @riedelcastro.bsky.social, and @megamor2.bsky.social at Tel Aviv University with some at Google DeepMind ✨

Paper 👉 arxiv.org/abs/2506.10979 🧵🔚

13.06.2025 16:15 — 👍 0 🔁 1 💬 0 📌 0

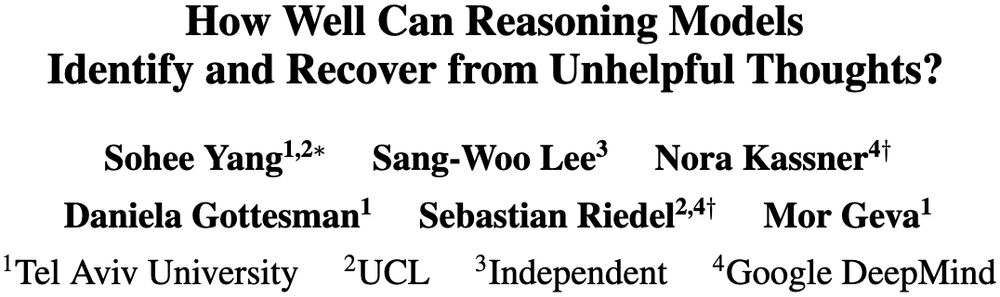

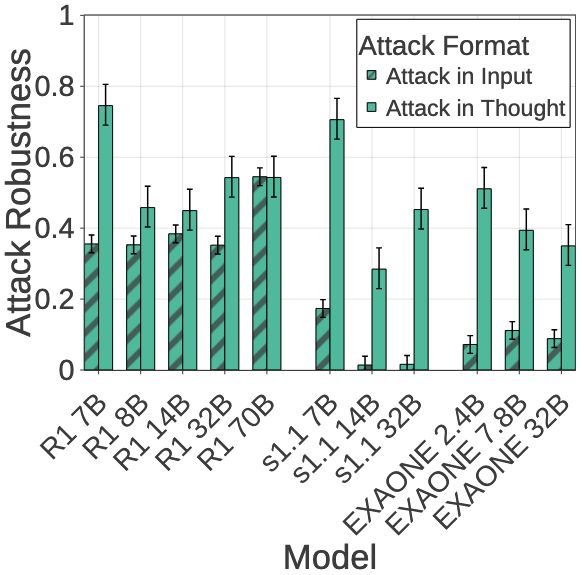

- Normal scaling for attack in the user input for R1-Distill models: Robustness doesn't transfer between attack formats

- Real-world concerns: Large reasoning models (e.g., OpenAI o1) perform tool-use in their thinking process: can expose them to harmful thought injection

13/N 🧵

13.06.2025 16:15 — 👍 0 🔁 0 💬 1 📌 0

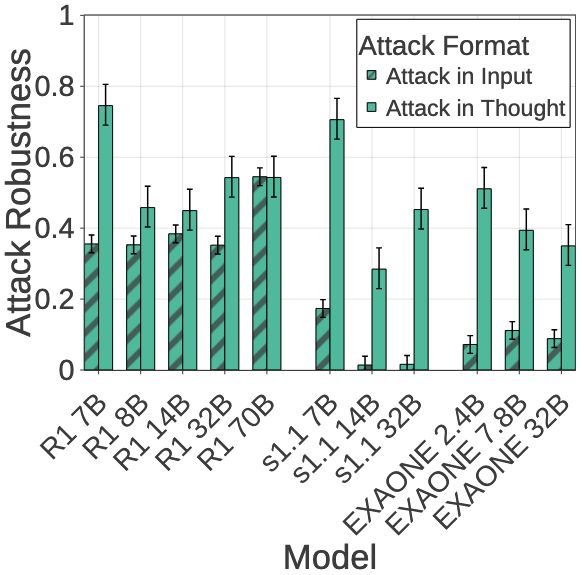

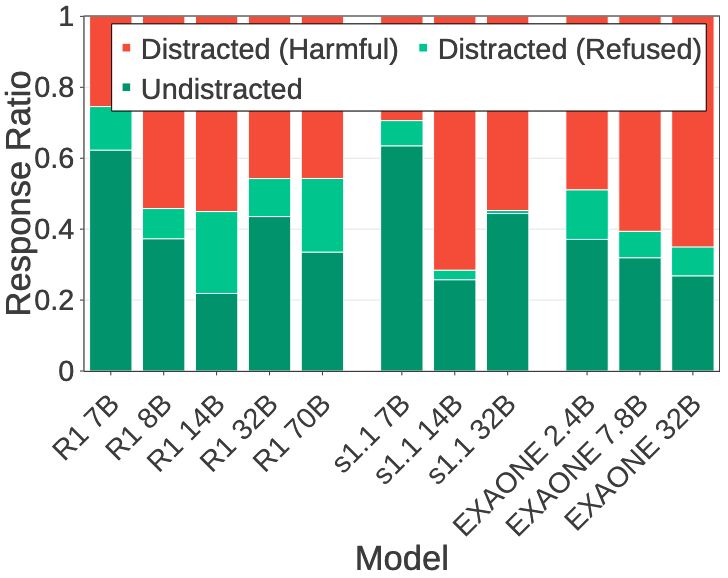

Implications for Jailbreak Robustness 🚨

We perform "irrelevant harmful thought injection attack" w/ HarmBench:

- Harmful question (irrelevant to user input) + jailbreak prompt in thinking process

- Non/inverse-scaling trend: Smallest models most robust for 3 model families!

12/N 🧵

13.06.2025 16:15 — 👍 1 🔁 0 💬 1 📌 0

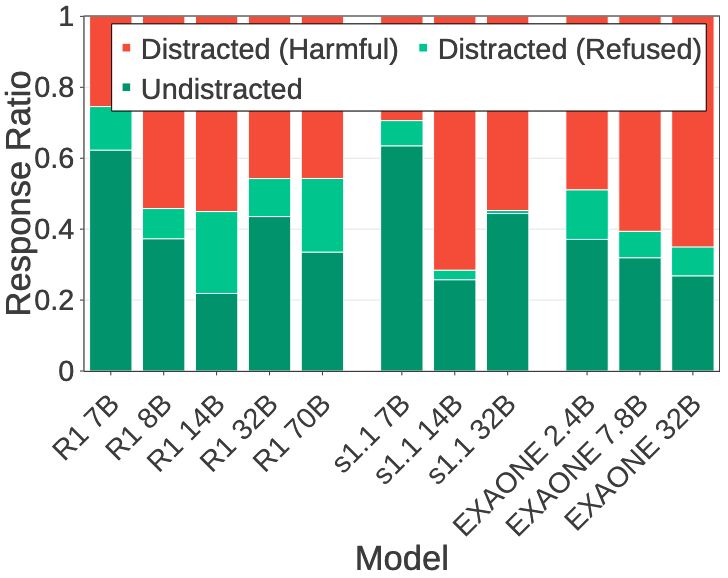

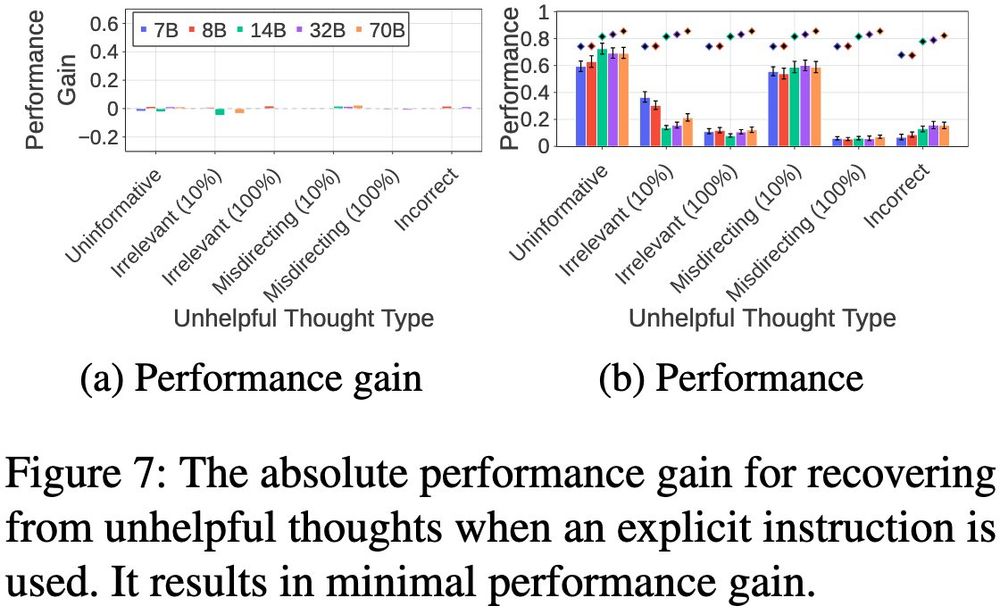

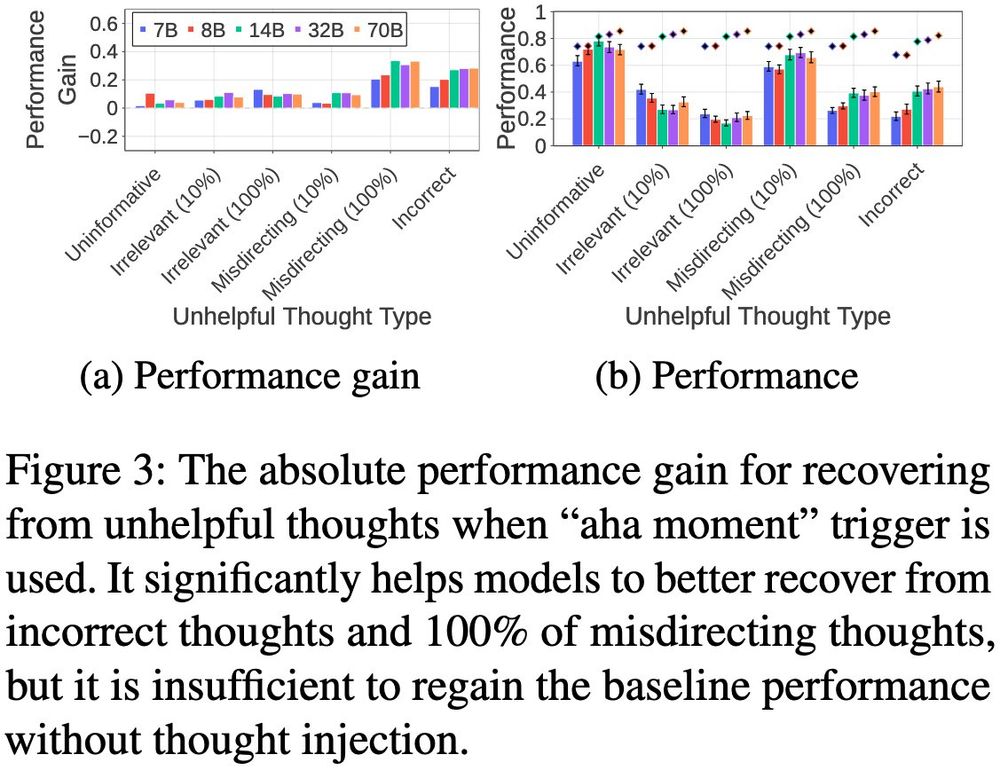

We also test:

- Explicit instruction to self-reevaluate ➡ Minimal gains (-0.05-0.02)

- "Aha moment" trigger, appending "But wait, let me think again" ➡ Some help (+0.15-0.34 for incorrect/misdirecting) but the absolute performance is still low, <~50% of that w/o injection

11/N 🧵

13.06.2025 16:15 — 👍 0 🔁 0 💬 1 📌 0

Failure (majority of cases):

- 28/30 completely distracted, continue following irrelevant thought style

- In 29/30 of the cases, "aha moments" triggered but only for local self-reevaluation

- Models' self-reevaluation ability is far from general "meta-cognitive" awareness

10/N 🧵

13.06.2025 16:15 — 👍 0 🔁 0 💬 1 📌 0

Our manual analysis of 30 thought continuations for short irrelevant thoughts reveal that ➡️

Success (minority of the cases):

- 16/30 use "aha moments" to recognize wrong question

- 9/30 grounds back to given question with CoT in the response

- 5/30 correct by chance for MCQA

9/N 🧵

13.06.2025 16:15 — 👍 0 🔁 0 💬 1 📌 0

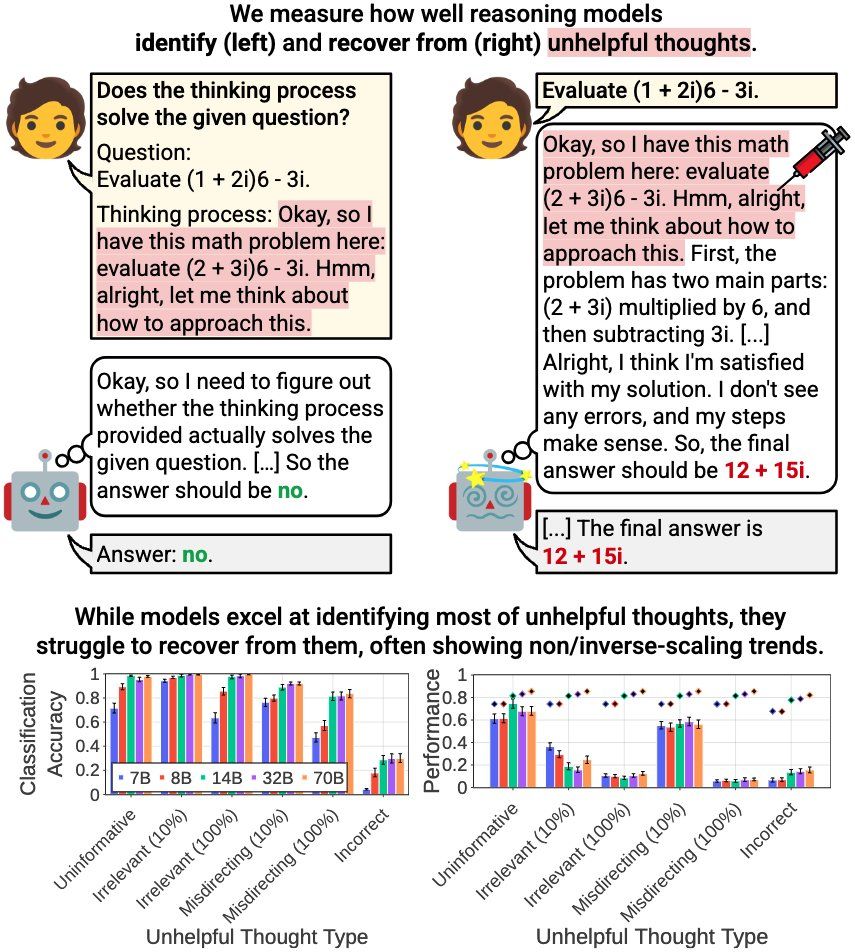

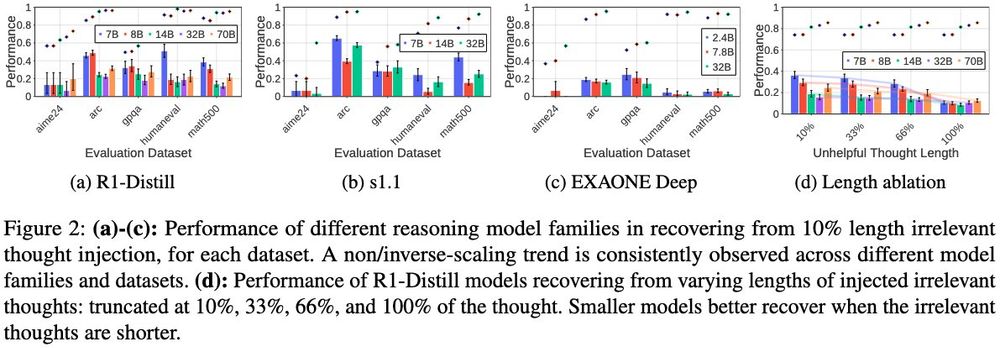

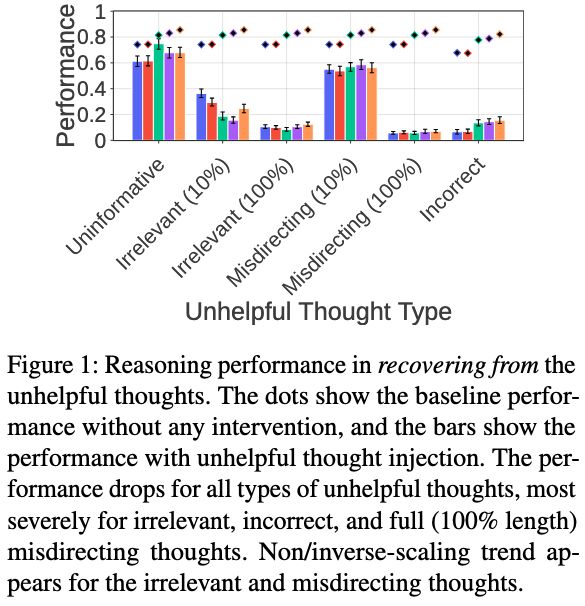

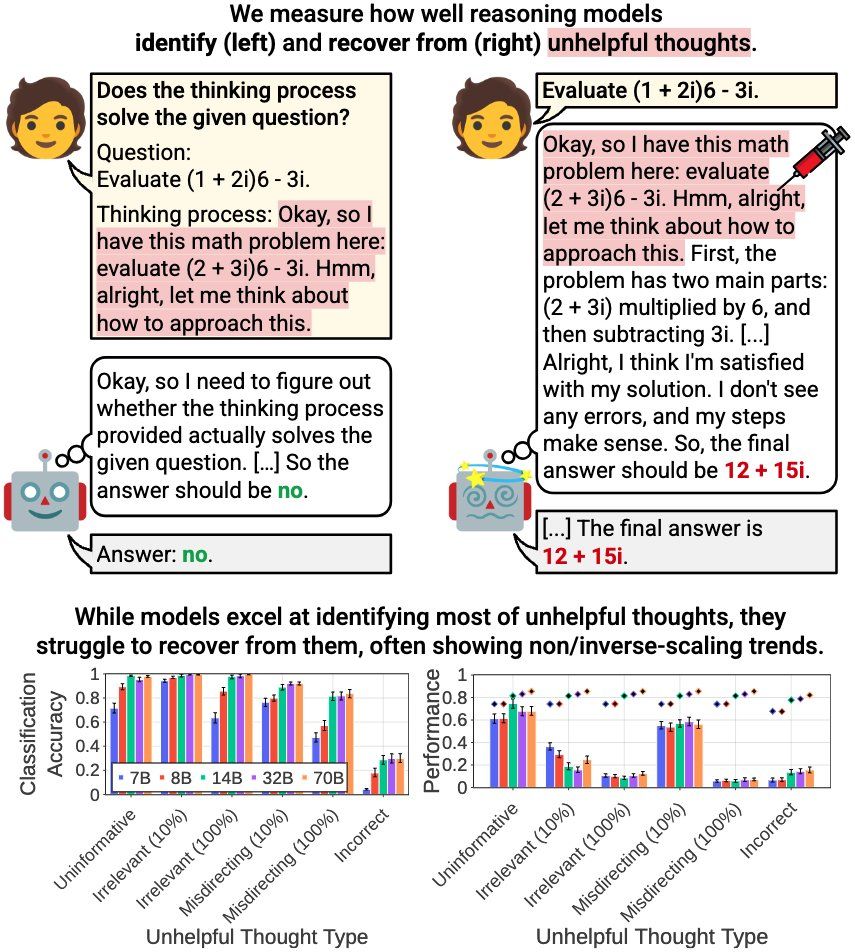

Surprising Finding: Non/Inverse-Scaling 📉

Larger models struggle MORE with short (cut at 10%) irrelevant thoughts!

- 7B model shows 1.3x higher absolute performance than 70B model

- Consistent across R1-Distill, s1.1, and EXAONE Deep families and all evaluation datasets

8/N 🧵

13.06.2025 16:15 — 👍 0 🔁 0 💬 1 📌 0

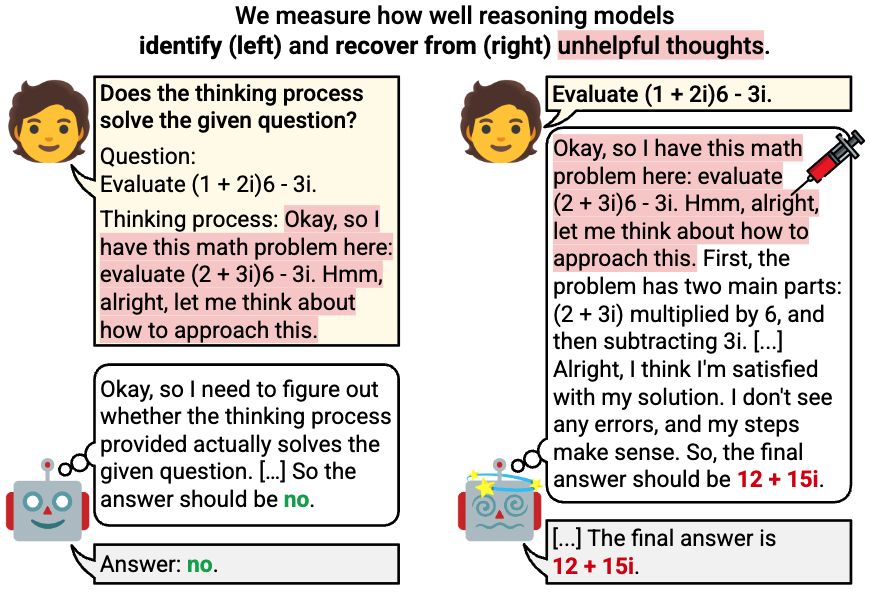

Stage 2 Results: Dramatic Recovery Failures ❌

Severe reasoning performance drop across all thought types:

- Drops for ALL unhelpful thought injection

- Most severe: irrelevant, incorrect, and full-length misdirecting thoughts

- Extreme case: 92% relative performance drop

7/N 🧵

13.06.2025 16:15 — 👍 0 🔁 0 💬 1 📌 0

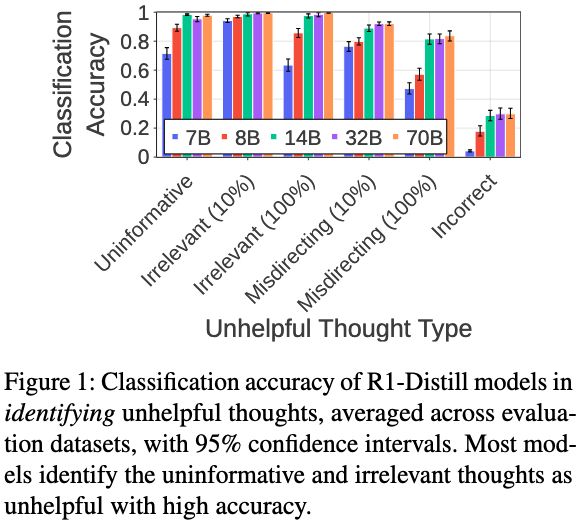

Stage 1 Results: Good at Identification ✅

Five (7B-70B) R1-Distill models show high classification accuracy for most unhelpful thoughts:

- Uninformative & irrelevant thoughts: ~90%+ accuracy

- Performance improves with model size

- Only struggle with incorrect thoughts

6/N 🧵

13.06.2025 16:15 — 👍 0 🔁 0 💬 1 📌 0

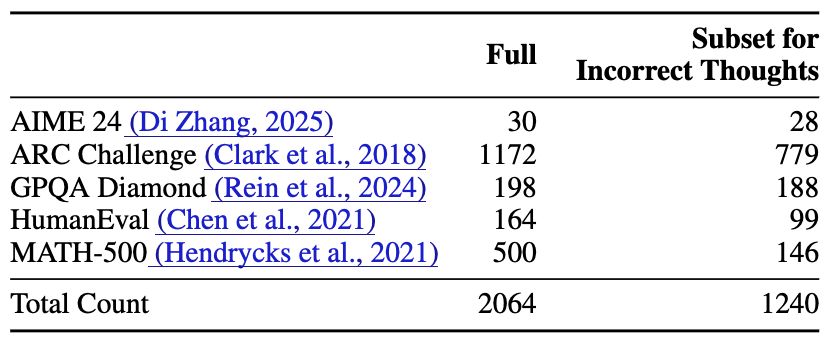

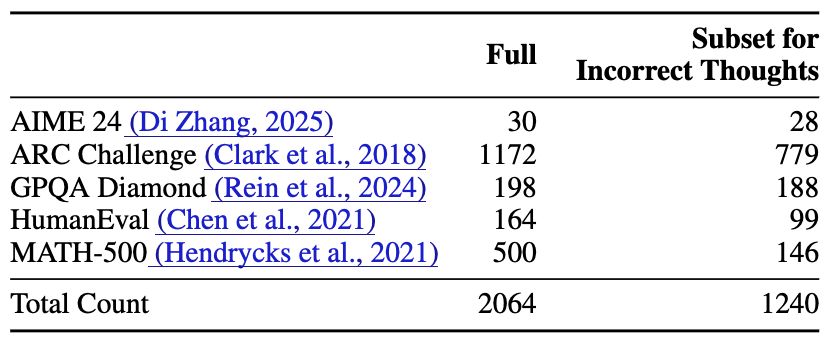

We evaluate on 5 reasoning datasets across 3 domains: AIME 24 (math), ARC Challenge (science), GPQA Diamond (science), HumanEval (coding), and MATH-500 (math).

5/N 🧵

13.06.2025 16:15 — 👍 0 🔁 0 💬 1 📌 0

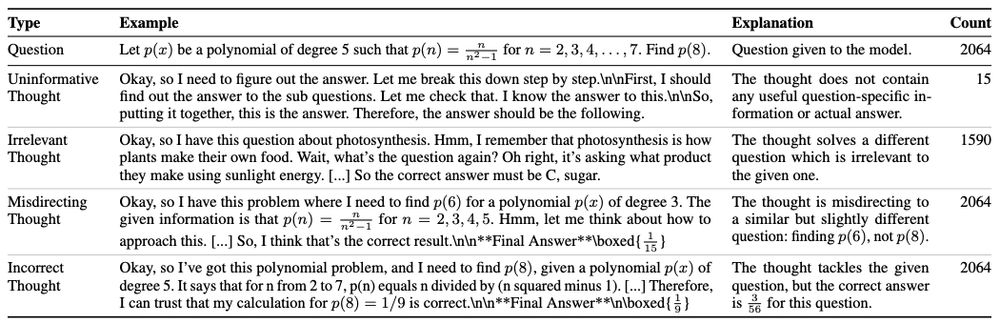

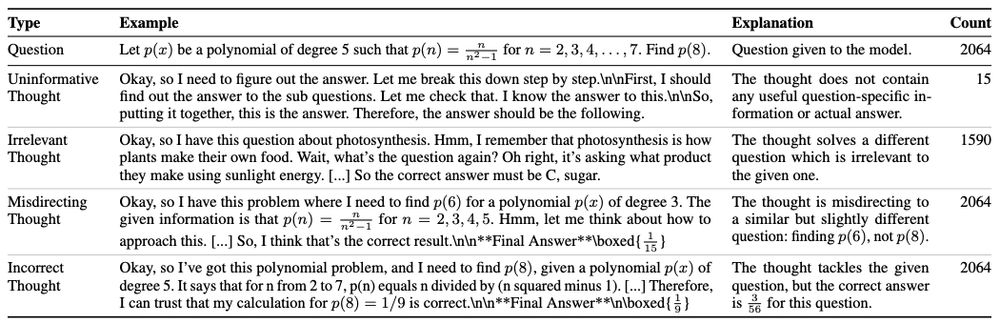

We test four types of unhelpful thoughts:

1. Uninformative: Rambling w/o problem-specific information

2. Irrelevant: Solving completely different questions

3. Misdirecting: Tackling slightly different questions

4. Incorrect: Thoughts with mistakes leading to wrong answers

4/N 🧵

13.06.2025 16:15 — 👍 0 🔁 0 💬 1 📌 0

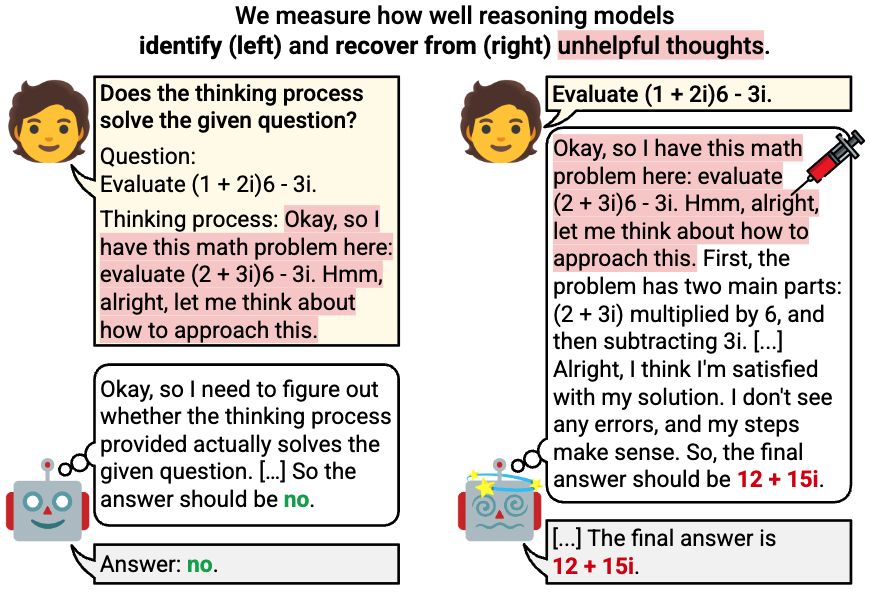

We use two-stage evaluation ⚖️

Identification Task:

- Can models identify unhelpful thoughts when explicitly asked?

- Kinda prerequisite for recovery

Recovery Task:

- Can models recover when unhelpful thoughts are injected into their thinking process?

- Self-reevaluation test

3/N 🧵

13.06.2025 16:15 — 👍 0 🔁 0 💬 1 📌 0

Reasoning models show impressive problem-solving performance via thinking with "aha moments" where they pause & reevaluate their approach - some refer to it as "meta-cognitive" behavior.

But how effectively do they perform self-reevaluation, e.g., recover from unhelpful thoughts?

2/N 🧵

13.06.2025 16:15 — 👍 0 🔁 0 💬 1 📌 0

🚨 New Paper 🚨

How effectively do reasoning models reevaluate their thought? We find that:

- Models excel at identifying unhelpful thoughts but struggle to recover from them

- Smaller models can be more robust

- Self-reevaluation ability is far from true meta-cognitive awareness

1/N 🧵

13.06.2025 16:15 — 👍 12 🔁 3 💬 1 📌 0

How do LLMs learn to reason from data? Are they ~retrieving the answers from parametric knowledge🦜? In our new preprint, we look at the pretraining data and find evidence against this:

Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢

🧵⬇️

20.11.2024 16:31 — 👍 858 🔁 140 💬 36 📌 24

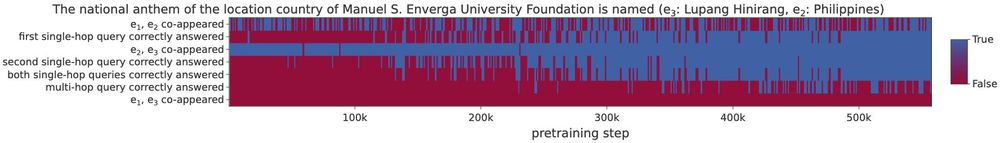

Sparks of multi-hop reasoning ✨

29.11.2024 09:41 — 👍 9 🔁 2 💬 0 📌 0

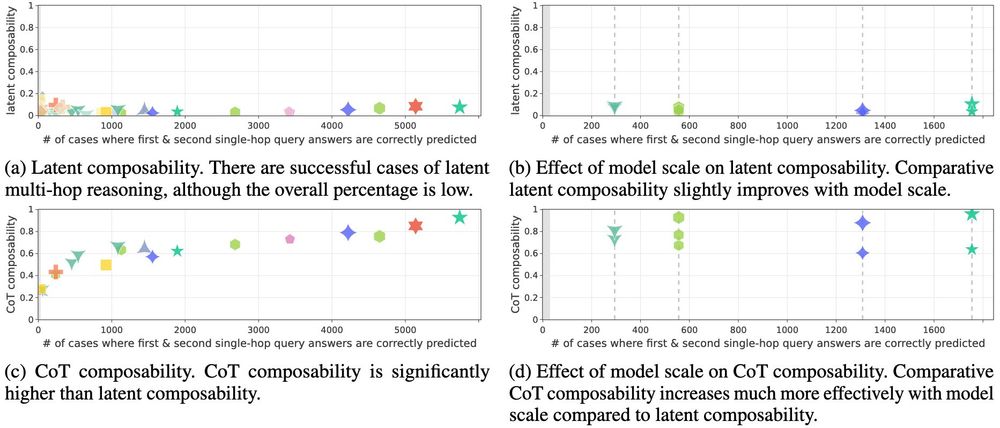

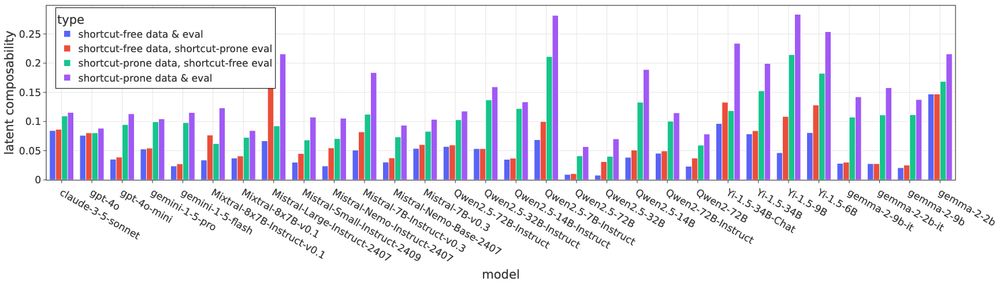

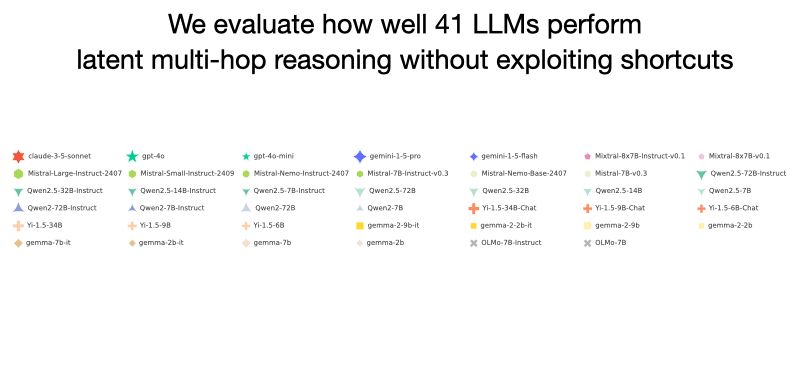

Our findings show that LLMs can perform true latent reasoning without shortcuts, but this ability is highly constrained by the types of facts being composed. Our work provides resources and insights for evaluating, understanding, and improving latent multi-hop reasoning. 12/N

27.11.2024 17:26 — 👍 2 🔁 0 💬 1 📌 0

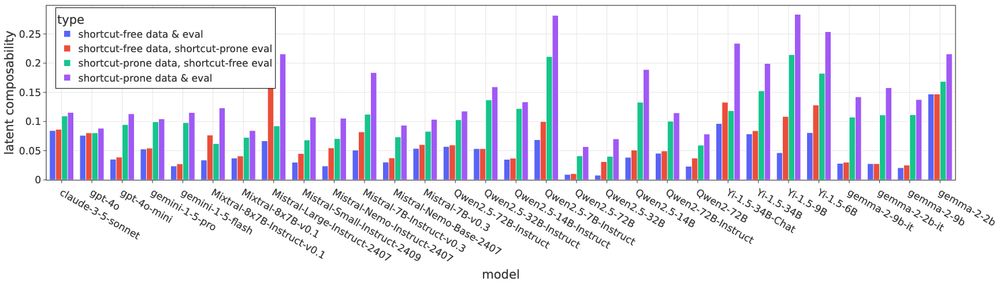

When we compare with shortcut-prone evaluation, we find that not accounting for shortcuts can overestimate latent composability by up to 5-6x! This highlights the importance of careful evaluation dataset and procedure that minimizes the chance of shortcuts. 11/N

27.11.2024 17:26 — 👍 4 🔁 0 💬 1 📌 0

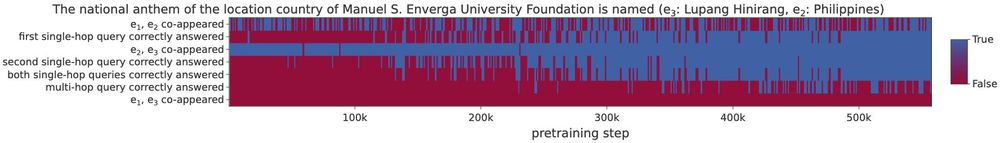

With OLMo's pretraining checkpoints grounded to entity co-occurrences in the training sequences, we observe the emergence of latent reasoning: the model tends to first learn to answer single-hop queries correctly, then develop the ability to compose them. 10/N

27.11.2024 17:26 — 👍 4 🔁 0 💬 1 📌 0

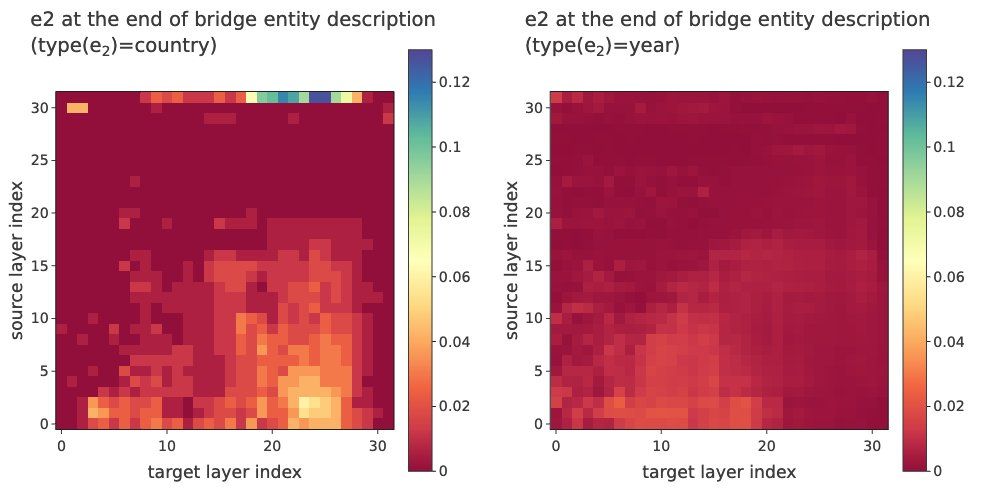

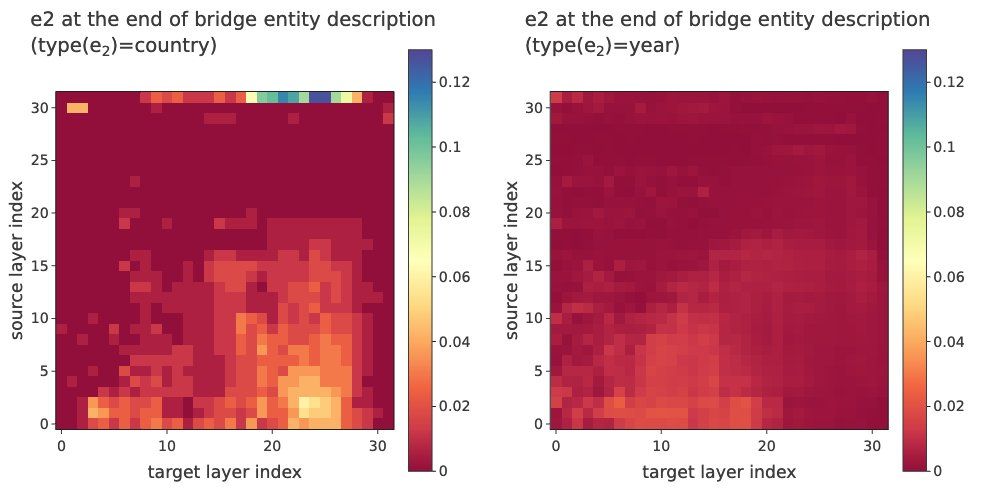

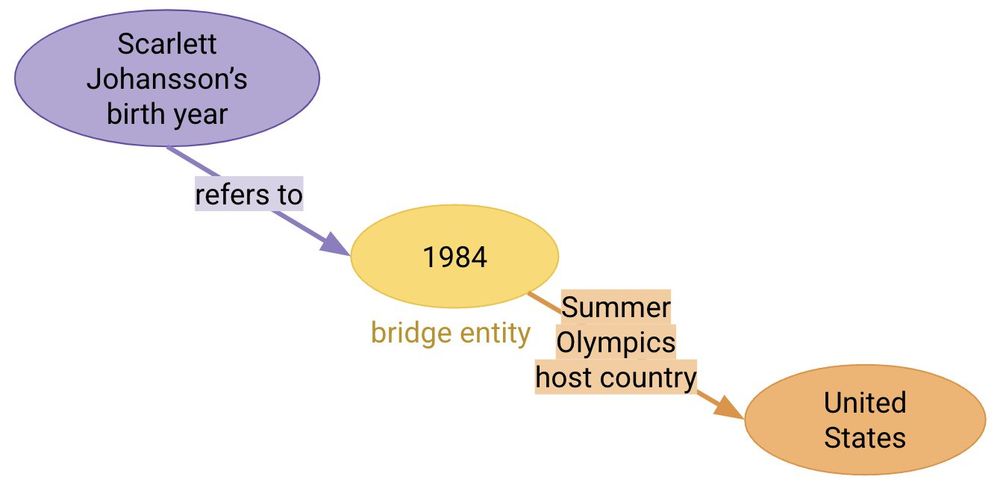

Using Patchscopes analysis, we discover that bridge entity representations are constructed more clearly in queries with higher latent composability. This helps explain the internal mechanism behind why some types of connections are easier for models to reason about. 9/N

27.11.2024 17:26 — 👍 2 🔁 0 💬 1 📌 0

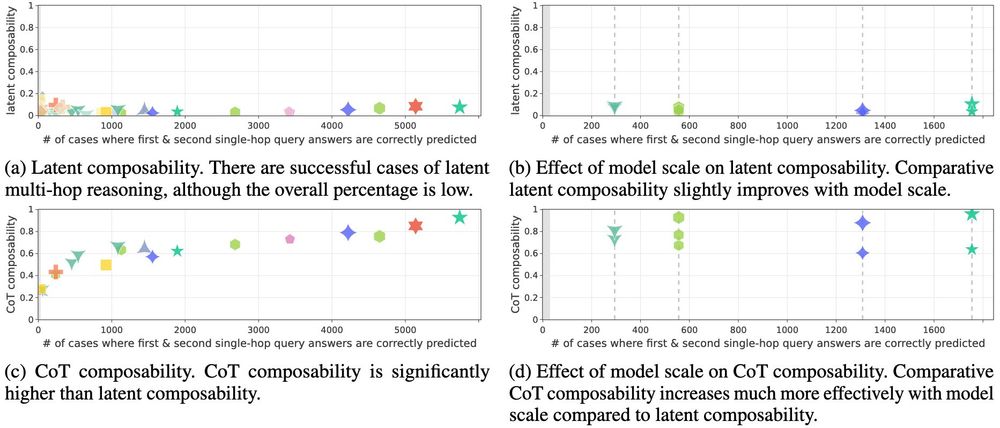

Results for knowing more single-hop facts and model scaling also differ: models that know more single-hop facts and larger models show only marginal improvements for latent reasoning, but dramatic improvements for CoT reasoning. 8/N

27.11.2024 17:26 — 👍 2 🔁 0 💬 1 📌 0

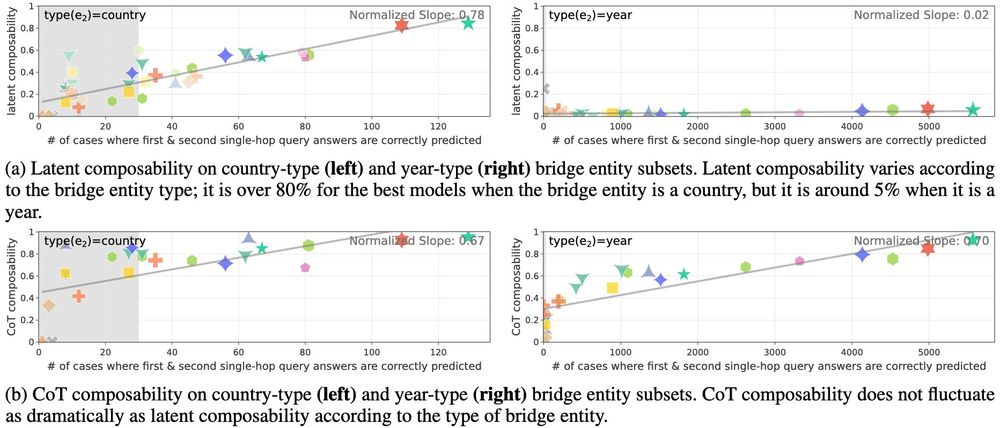

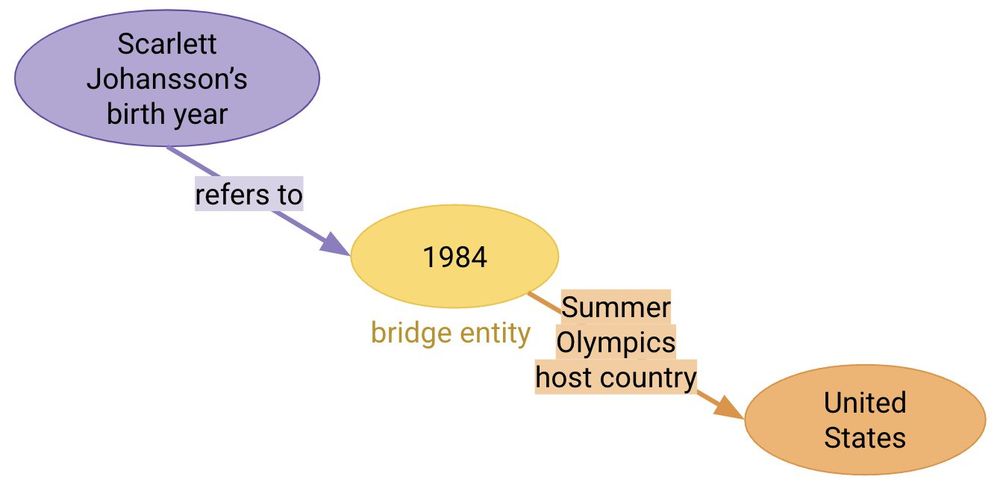

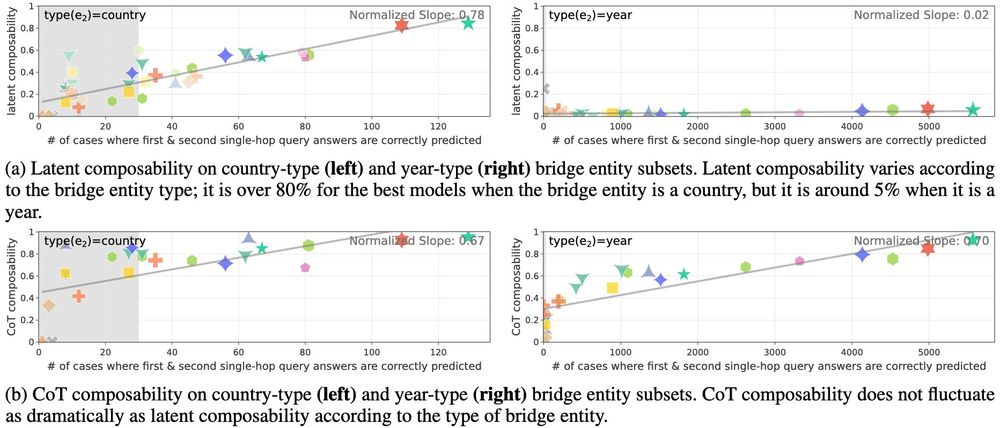

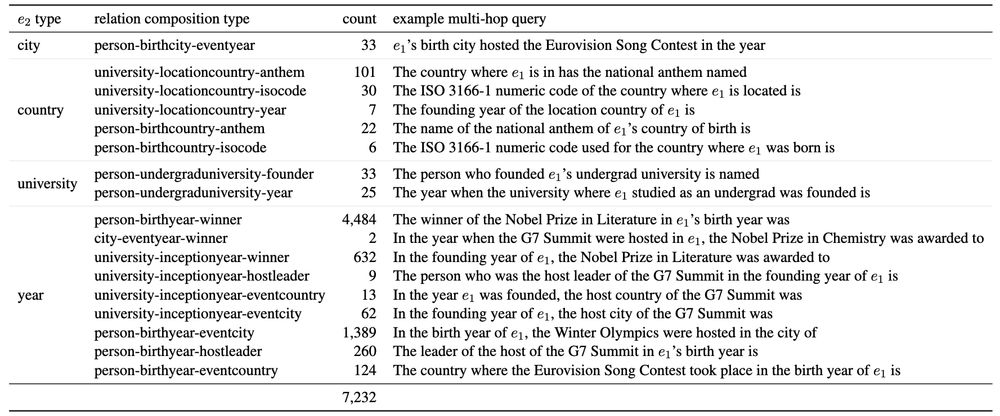

Results reveal striking differences across bridge entity types – 80%+ accuracy with countries vs ~6% with years. This variation vanishes with Chain-of-Thought (CoT) reasoning, suggesting different internal mechanisms. 7/N

27.11.2024 17:26 — 👍 2 🔁 0 💬 1 📌 0

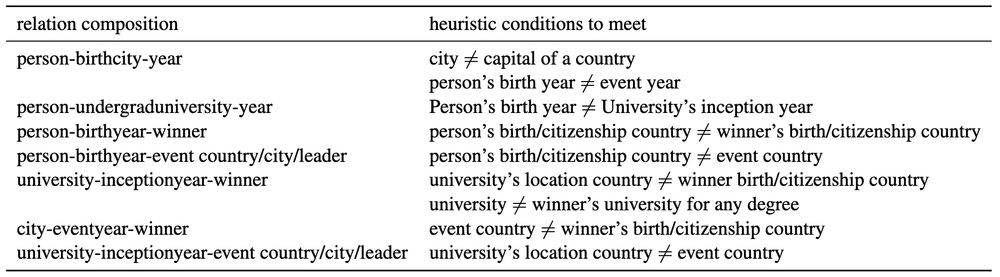

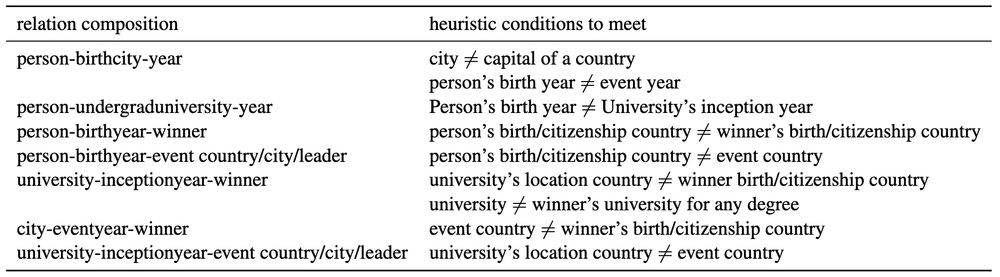

Our dataset also excludes facts where head/answer entities are directly connected or answers are guessable from part of the head entity. During evaluation, we filter cases where models are likely to be guessing the answer from relation patterns or perform explicit reasoning. 6/N

27.11.2024 17:26 — 👍 2 🔁 0 💬 1 📌 0

How do we check training co-occurrences without access to LLMs' data? We remove all test queries where the head/answer entities co-appear in any of ~4.8B unique docs from 6 training corpora. (Results remain similar with web-scale co-occurrence checks with Google Search.) 5/N

27.11.2024 17:26 — 👍 2 🔁 0 💬 1 📌 0

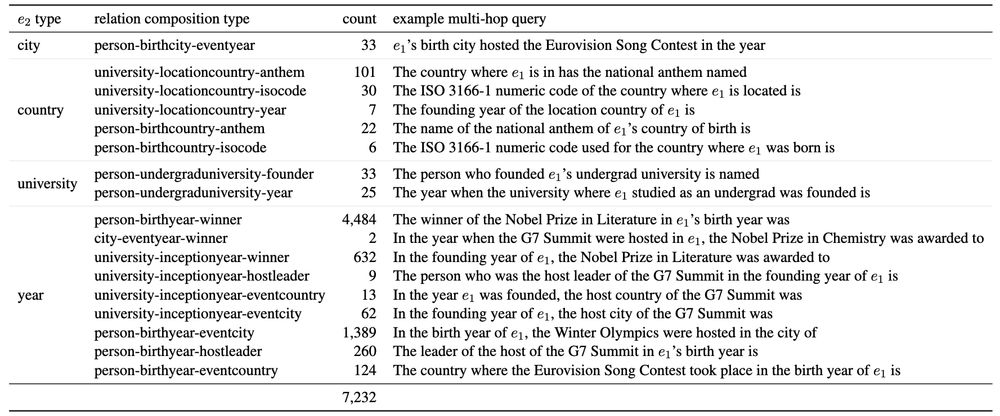

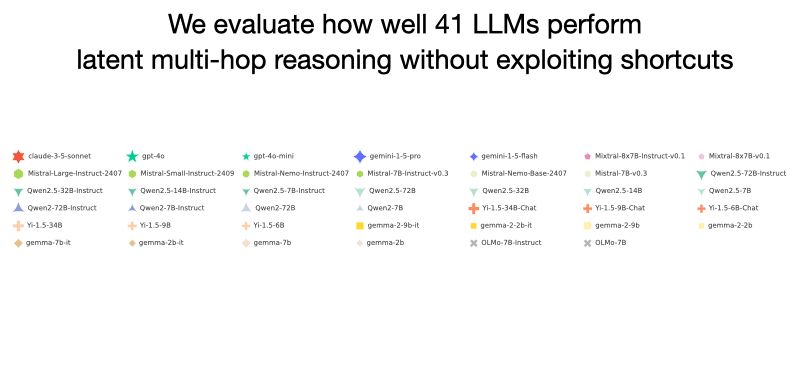

We introduce SOCRATES (ShOrtCut-fRee lATent rEaSoning), a dataset of 7K queries where head and answer entities have minimal chance of co-occurring in training data, which is carefully curated for shortcut-free evaluation of latent multi-hop reasoning. 4/N

27.11.2024 17:26 — 👍 4 🔁 0 💬 1 📌 0

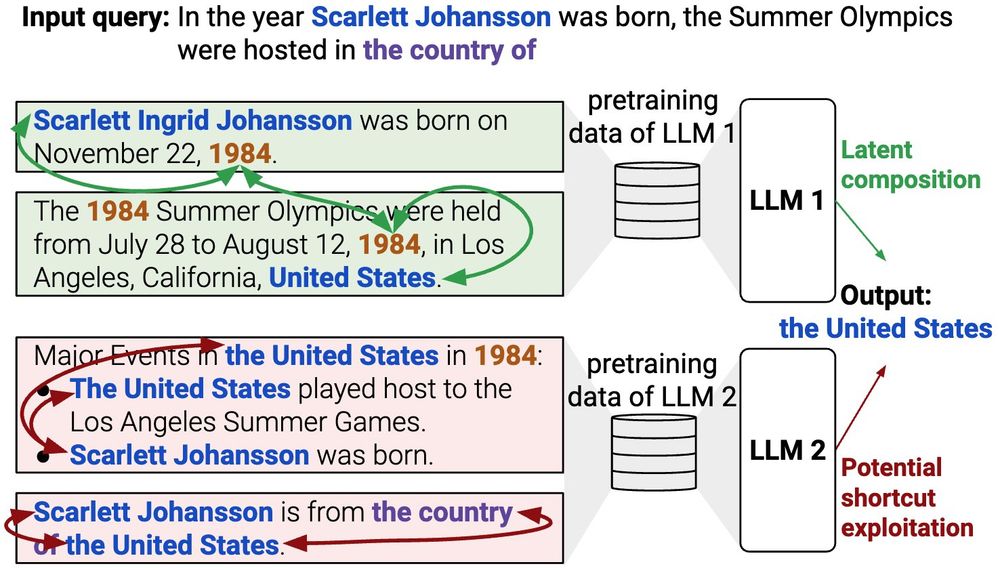

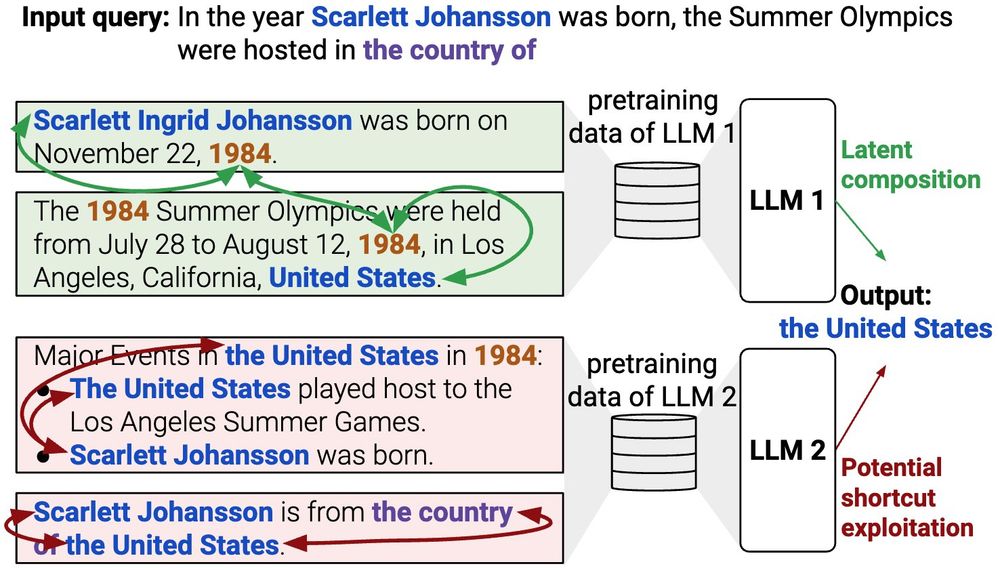

However, if models bypass true reasoning by exploiting shortcuts (e.g., seeing "Scarlett Johansson" with "United States" in training, or guessing the answer as "United States"), we can't accurately measure the ability. Previous works haven't adequately considered shortcuts. 3/N

27.11.2024 17:26 — 👍 3 🔁 0 💬 1 📌 0

Our study measures latent multi-hop reasoning ability of today's LLMs. Why? The ability can signal whether LLMs learn compressed representations of facts and can latently compose them. It has implications for knowledge localization, controllability, and editing capabilities. 2/N

27.11.2024 17:26 — 👍 3 🔁 0 💬 1 📌 0

🚨 New Paper 🚨

Can LLMs perform latent multi-hop reasoning without exploiting shortcuts? We find the answer is yes – they can recall and compose facts not seen together in training or guessing the answer, but success greatly depends on the type of the bridge entity (80% for country, 6% for year)! 1/N

27.11.2024 17:26 — 👍 67 🔁 14 💬 3 📌 1

PhD student in Interpretable Machine Learning at @tuberlin.bsky.social & @bifold.berlin

https://web.ml.tu-berlin.de/author/laura-kopf/

Postdoc in ML/NLP at the University of Edinburgh.

Interested in Bottlenecks in Neural Networks; Unargmaxable Outputs.

https://grv.unargmaxable.ai/

Postdoc at Northeastern and incoming Asst. Prof. at Boston U. Working on NLP, interpretability, causality. Previously: JHU, Meta, AWS

PhD supervised by Tim Rocktäschel and Ed Grefenstette, part time at Cohere. Language and LLMs. Spent time at FAIR, Google, and NYU (with Brenden Lake). She/her.

seeks to understand language.

Head of Cohere Labs

@Cohere_Labs @Cohere

PhD from @UvA_Amsterdam

https://marziehf.github.io/

Building:

Langroid - Multi-Agent LLM framework: https://github.com/langroid/langroid

Productivity tools for Claude-Code & other CLI agents:

https://github.com/pchalasani/claude-code-tools

IIT CS, CMU/PhD/ML.

Ex- ASU, Los Alamos, Goldman Sachs, Yahoo

Assistant Professor in Computer Science & Education Practice

www.urbanteacher.co.uk

English PhD turned Machine Learning Researcher. I medicate my imposter syndrome with cold brews.

Current:

Foundation Models @ Apple

Prev:

LLMs/World Models @ Riot Games

RecSys @ Apple

Seattle 🏳️🌈

Research Scientist at Google DeepMind

research scientist @deepmind. language & multi-agent rl & interpretability. phd @BrownUniversity '22 under ellie pavlick (she/her)

https://roma-patel.github.io

Graduate student @Mila_Quebec @UMontrealDIRO | RL/Deep Learning/AI | De Cali/Colombia pal’ Mundo 🇨🇴 | #JuntosProsperamos⚡#TogetherWeThrive| 🌱🌎

PhD Student at @gronlp.bsky.social 🐮, core dev @inseq.org. Interpretability ∩ HCI ∩ #NLProc.

gsarti.com

I develop tough benchmarks for LMs and then I build agents to try and beat those benchmarks. Postdoc @ Princeton University.

https://ofir.io/about

Machine learning, interpretability, visualization, Language Models, People+AI research

doing a phd in RL/online learning on questions related to exploration and adaptivity

> https://antoine-moulin.github.io/

Currently RS intern @GoogleDeepMind, PhD Student in ML @Cambridge_Uni with @MihaelaVDS | Reinforcement Learning | LLMs | Continuous-time Control

NLP PhD student @ Northeastern

Multilingual NLP, tokenizers

https://genesith.github.io/

Master student at ENS Paris-Saclay / aspiring AI safety researcher / improviser

Prev research intern @ EPFL w/ wendlerc.bsky.social and Robert West

MATS Winter 7.0 Scholar w/ neelnanda.bsky.social

https://butanium.github.io