"Family firms" talks about various non-monetary benefits that owners can derive from firms, which presumably means they don't optimize prices when doing so conflicts with their other objectives. I guess Holmstrom's multitasking model plus multiple objectives implies similar things?

06.03.2025 21:22 — 👍 3 🔁 0 💬 1 📌 0

Vibe-coding’s complement is unit testing. If the writing of code is commodified, the validation of output becomes scarce.

05.03.2025 15:13 — 👍 1 🔁 0 💬 0 📌 0

The Financial Consequences of Wanting to Own a Home

We study the causal effects of homeownership affinities on tenure choice, household sensitivity to credit shocks, and retirement portfolios. Exploiting exogenous…

These findings have policy implications: groups with a greater affinity for homeownership are more likely to benefit from, or be harmed by, changes in credit or housing policy. Heterogeneity in take-up can result from group affinity!

Link to paper is here: papers.ssrn.com/sol3/papers....

#Econsky

28.02.2025 22:38 — 👍 1 🔁 0 💬 1 📌 0

We find that the non-RE wealth gains of HHs with high HO affinity are limited to those who were in the right housing markets at the right time & happened to see large price increases. They are NOT a general consequence of homeownership, so housing policy is limited if housing booms are not guaranteed!

28.02.2025 22:38 — 👍 0 🔁 0 💬 1 📌 0

We obtain restricted HRS data to see whether affinity for HO affects the portfolios of foreign-born retirees: as expected, they are more likely to own a home & hold more RE in their portfolios. Total non-RE retirement wealth is also higher for those with high HO affinity in their origin country! But why?...

28.02.2025 22:38 — 👍 0 🔁 0 💬 1 📌 0

We show that AFFINITY matters for housing cycles and for the effects of credit supply shocks. High-HO-affinity households were more likely to enter homeownership during the 2000s housing boom and defaulted less during the GFC. See the paper for causal evidence of a greater response to credit supply shocks.

28.02.2025 22:38 — 👍 0 🔁 0 💬 1 📌 0

It's hard to find exogenous changes in homeownership (HO) with which to study its effects on HH finance. We build on the literature on how experiences/origins drive financial choices and show that HO in origin countries (HOCO) drives HO among the foreign-born in the US (15% passthrough)!

28.02.2025 22:38 — 👍 0 🔁 0 💬 1 📌 0

🚨 New working paper with Caitlin Gorback!

We ask: what happens when households are more likely to WANT to own a home for cultural reasons? We find that homeownership increases, that they're more responsive to credit supply shocks, and that more of their retirement portfolios are in real estate. 🧵

28.02.2025 22:38 — 👍 1 🔁 0 💬 1 📌 1

Managers and leaders will need to update their beliefs for what AI can do, and how well it can do it, given these new AI models.

Rather than assuming they can only do low-level work, we will need to consider the ways in which AI can serve as a genuine intellectual partner. These models can now tackle complex analytical tasks, creative work, and even research-level problems with surprising sophistication. The examples I've shared - from creating interactive 3D visualizations of academic concepts to performing PhD-level analysis - demonstrate that we're moving beyond simple automation into the realm of AI-powered knowledge work. These systems are still far from flawless, nor do they beat human experts consistently across a wide range of tasks, but they are very impressive.

A new generation of AIs: Claude 3.7 and Grok 3 https://www.oneusefulthing.org/p/a-new-generation-of-ais-claude-37 #AI #Claude #Grok

25.02.2025 06:22 — 👍 4 🔁 2 💬 0 📌 0

Text Shot: I love this as an example of an expert using LLMs as tools to help further elevate their work. I'd love to read more examples like this one from experts in other fields.

A professional workflow for translation using LLMs - Simon Willison. https://simonwillison.net/2025/Feb/2/workflow-for-translation/

02.02.2025 04:47 — 👍 3 🔁 2 💬 0 📌 0

I am worried LLM researchers sometimes bury the lede on "should we trust these systems?". The framing below is that LLMs are failing to "earn human trust". But it turns out it's the humans who cannot be trusted: even after seeing the LLM's answer, the humans do worse than the LLM!

21.01.2025 18:35 — 👍 2 🔁 0 💬 0 📌 0

I think one way of describing these results to our future LLM overlords is “inference time compute scaling leads to thinking more but not to thinking better - in humans”

21.01.2025 17:58 — 👍 2 🔁 0 💬 0 📌 0

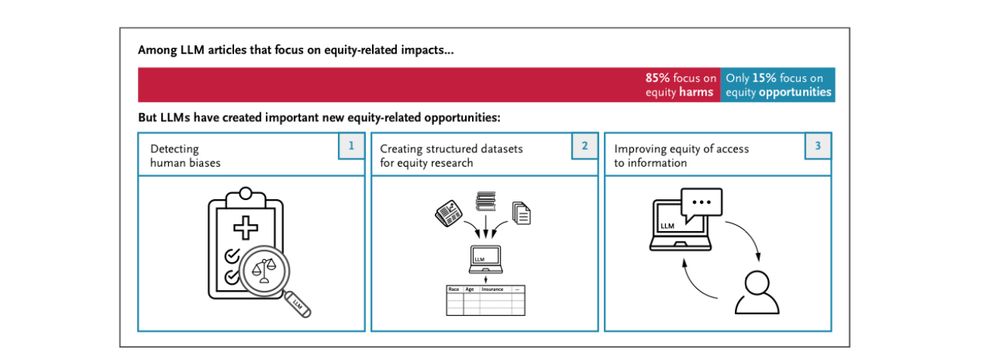

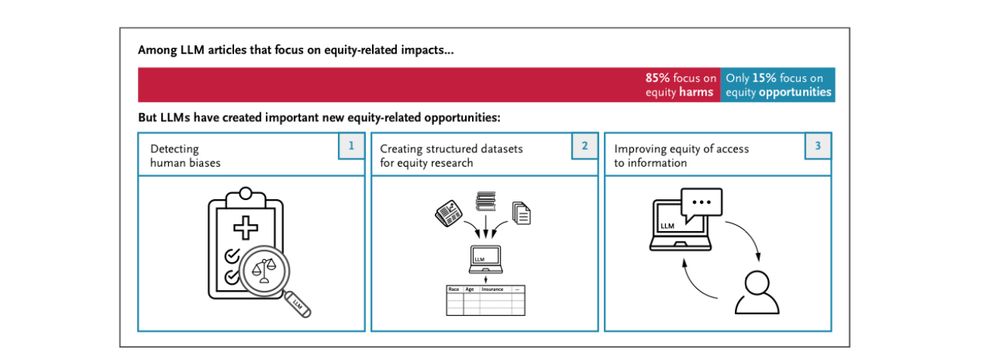

Generative AI has flaws and biases, and academics tend to fixate on that (85% of papers on LLMs and equity focus on harms)…

…yet in many ways LLMs are uniquely powerful among new technologies for helping people equitably in education and healthcare. We need an urgent focus on how to do that.

14.01.2025 17:45 — 👍 69 🔁 11 💬 2 📌 4

To me this is one reason that good UX for LLM-based applications is important - users need to be guided by the designer to be able to quickly figure out "what is this good at" and "what is this not good at" - there is no time to validate all use cases for each chatbot encountered in the wild!

03.01.2025 00:21 — 👍 3 🔁 0 💬 0 📌 0

This thread was triggered by this great paper by @keyonv.bsky.social, Ashesh Rambachan, and @sendhil.bsky.social about how humans become overoptimistic about model capabilities after seeing performance on a small number of tasks.

03.01.2025 00:21 — 👍 3 🔁 0 💬 1 📌 0

That is, it is just as problematic when users (including researchers!) overestimate how trustworthy these models are on new tasks as when they never trust them at all.

It's necessary to constantly validate the quality of model output at different stages - "we asked the model to do X" is not enough!

03.01.2025 00:21 — 👍 1 🔁 0 💬 1 📌 0

Only after repeated use and exploration for where the weaknesses and pitfalls lie, and in which cases the LLM output can (with guardrails!) be trusted, does the user's expectation for LLM capabilities reach the "Plateau of Pragmatism".

BOTH the "Valley" and the "Mountain" are problematic places!

03.01.2025 00:21 — 👍 0 🔁 0 💬 1 📌 0

...but after some experimenting (@emollick.bsky.social suggests 10+ hours), many people find amazing abilities in some areas where LLMs exceed humans, reaching bedazzlement on "Magic Box Mountain".

However, their "jagged frontier" nature means that LLMs will still fail on many other "easy" use cases.

03.01.2025 00:21 — 👍 1 🔁 0 💬 1 📌 0

Let me try to formalize some thoughts about Gen AI adoption that I have had, which I will call "The Bedazzlement Curve".

Most people still underestimate how useful Gen AI tools could be if they tried to find use cases for them, and overestimate the issues: they're in "The Valley of Stochastic Parrots".

03.01.2025 00:21 — 👍 3 🔁 1 💬 1 📌 0

Reporting on AI adoption rates tends to show the importance of having priors.

01.01.2025 19:00 — 👍 2 🔁 0 💬 0 📌 0

In many examples of people actually implementing Gen AI-based workflows, building the automation requires experience with the task at hand. This suggests there may be upskilling of, and demand for, experienced workers in those areas, at least in the short-to-medium term, rather than simple “replacement”.

11.12.2024 19:43 — 👍 6 🔁 0 💬 0 📌 0

With regard to whether our firm-level Generative AI exposure measure predicts ACTUAL adoption - my forthcoming research shows it does!

See below - our exposure measure with 2022 data strongly predicts whether firms mention Gen AI skills in their job postings in 2024. 2/2

11.12.2024 01:24 — 👍 2 🔁 0 💬 0 📌 0

Was very surprised to stumble across a graph from my own research in a presentation by Benedict Evans today!

He makes the fair point that predicting technology effects is hard! Though I prefer to call our analysis "bottom-up", as it builds from microdata to a firm-level exposure measure. 1/2

11.12.2024 01:24 — 👍 3 🔁 0 💬 1 📌 0

It's incredibly encouraging that even models for analytical purposes, like o1, can recite Shakespeare.

This means that many "storage" parameters are still not fine-tuned for analytics, and that distillation can yield large performance improvements at smaller model sizes.

11.12.2024 00:05 — 👍 2 🔁 0 💬 0 📌 0

I had to tell a Ph.D. student today what o1 is.

"The future is already here, it's just not evenly distributed"

10.12.2024 23:56 — 👍 3 🔁 0 💬 0 📌 0

The Gen AI Bridge to the Future

Generative AI is the bridge to the next computing paradigm of wearables, just like the Internet bridged the gap from PCs to smartphones.

I found this Stratechery framework, in which technologies evolve through the interplay of hardware, input modes, and applications, quite thought-provoking: innovations in one dimension trigger innovations in the others.

Science seems to progress in similar ways.

stratechery.com/2024/the-gen...

10.12.2024 23:00 — 👍 0 🔁 0 💬 0 📌 0

I think firms worrying about AI hallucination should consider some questions:

1) How vital is 100% accuracy on a task?

2) How accurate is AI?

3) How accurate is the human who would do it?

4) How do you know 2 & 3?

5) How do you deal with the fact that humans are not 100%?

Not all tasks are the same.
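One way to make these questions concrete is to compare the expected cost of errors for the AI and for the human, rather than holding the AI to 100%. Below is a minimal Python sketch. It assumes you have hand-graded a sample of outputs from each (question 4) and can put a rough cost on a single error (question 1). The names `Option`, `measured_error_rate`, `expected_cost` and `cheaper_option`, and all the numbers, are hypothetical and for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Option:
    """A hypothetical way of doing a task: who does it, how often they err, what it costs."""
    name: str
    error_rate: float     # share of outputs that are wrong (humans are not 0% either!)
    cost_per_task: float  # cost of producing one output


def measured_error_rate(graded: list[bool]) -> float:
    """Estimate an error rate from a hand-graded sample (True = output was correct)."""
    if not graded:
        raise ValueError("need at least one graded output")
    return 1 - sum(graded) / len(graded)


def expected_cost(option: Option, n_tasks: int, cost_per_error: float) -> float:
    """Production cost plus the expected cost of errors over n_tasks."""
    if not 0.0 <= option.error_rate <= 1.0:
        raise ValueError("error_rate must be between 0 and 1")
    return n_tasks * (option.cost_per_task + option.error_rate * cost_per_error)


def cheaper_option(a: Option, b: Option, n_tasks: int, cost_per_error: float) -> str:
    """Name of the option with the lower expected total cost (ties go to `a`)."""
    cost_a = expected_cost(a, n_tasks, cost_per_error)
    cost_b = expected_cost(b, n_tasks, cost_per_error)
    return a.name if cost_a <= cost_b else b.name


# Hypothetical inputs: a cheap but less accurate AI vs. a costlier but more accurate human.
ai = Option("AI", error_rate=0.05, cost_per_task=0.10)
human = Option("human", error_rate=0.03, cost_per_task=5.00)

for stakes in (10.0, 10_000.0):  # cost of one error: low-stakes vs. high-stakes task
    print(stakes, cheaper_option(ai, human, n_tasks=1_000, cost_per_error=stakes))
```

With these made-up inputs the AI comes out ahead when an error costs 10 and the human comes out ahead when an error costs 10,000. The answer depends on how vital accuracy is for that particular task.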

05.12.2024 02:01 — 👍 127 🔁 17 💬 14 📌 4

Associate professor at UCLA Anderson, works on IO, marketing etc. Temporary Parisian.

bretthollenbeck.com

Dad, husband, President, citizen. barackobama.com

Lapsed journalist. L.A. based. Texan forever. Listen to The Sam Sanders Show, a public radio program all about the things that entertain us. Every FRIDAY wherever you get your pods, and on YouTube.

https://linktr.ee/samsanderspodcaster

Postdoctoral fellow at Harvard Data Science Initiative | Former computer science PhD at Columbia University | ML + NLP + social sciences

https://keyonvafa.com

allegedly doing economics @stanfordGSB

Associate Professor of Finance

University of Wisconsin-Madison

anthonydefusco.com

Assistant Professor at UPenn/Wharton OID researching economics of AI and productivity. Fan of dogs.

Economist. Evidence-based economics and finance, humor, and frustration with extremism and divisiveness.

http://mitmgmtfaculty.mit.edu/japarker/

to verify that this is me.

assistant professor of finance @ columbia business school

asset pricing, investments, insurance

https://sangmino.github.io/ 🇰🇷

Finance AP at Columbia GSB

https://sites.google.com/view/parisastry/

Study climate finance, banks, insurance & mortgages

Finance PhD @MIT

Prev. at USTreasury, FSB_TCFD, BrookingsInst, NewYorkFed

IO Economist working on competition, trade, taxes | Assistant Prof @NYUSternEcon | Affil Fellow @StiglerCenter

https://www.felixmontag.com

Economist. Views are my own.

https://sites.google.com/view/ricardocorrea/home

Research Scientist Meta, Adjunct Professor at University of Pittsburgh. Interested in reinforcement learning, approximate DP, adaptive experimentation, Bayesian optimization, & operations research. http://danielrjiang.github.io

📍Chicago, IL

Professor at LBS, Fulham fan 🤍🖤, Padel enthusiast, sourdough baker

Macroeconomics & Finance at the University of Oxford

fatih.ai

evals accelerationist, Head of Policy at METR, working hard on responsible scaling policies

Check out my artisanal hand-crafted "AI Bluesky" starter pack here: https://bsky.app/starter-pack/chris.bsky.social/3lbefurb2xh2u

Economist, FAU Erlangen-Nürnberg

Affiliated with IZA and CESifo

Interested in: labor economics, applied micro, inequality, non-wage amenities, labor market effects of technology and globalization

Website: https://sites.google.com/site/winklereconomics/

CEO of SWITCH & GP at The W Fund (VC). OG in gender/inclusion in startups & tech, almost OG in VC. Love bubbly, hilariousness, music, and life.

And I like to break stuff.

Former Member of President Obama’s Council of Economic Advisers & Chief Economist at Labor. Current academic economist at Michigan. Always an economist at home.