🚨 New Preprint!!

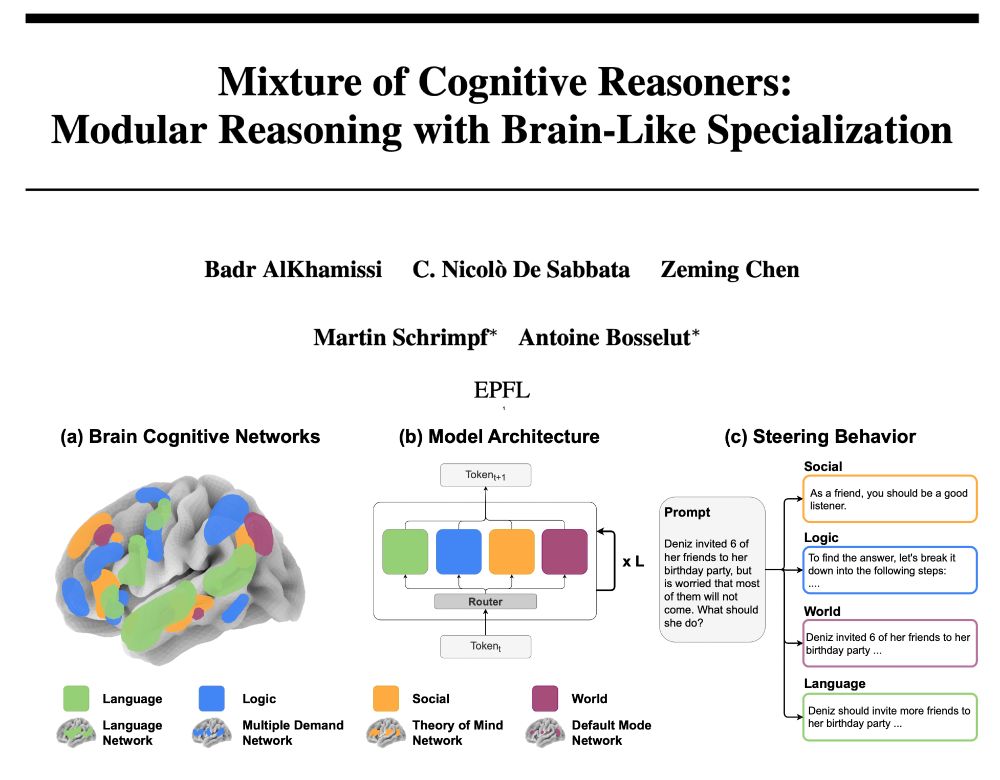

Thrilled to share with you our latest work: “Mixture of Cognitive Reasoners”, a modular transformer architecture inspired by the brain’s functional networks: language, logic, social reasoning, and world knowledge.

1/ 🧵👇

@silingao.bsky.social

Thanks to my internship advisors Emmanuel Abbe and Samy Bengio at Apple, and my PhD advisor @abosselut.bsky.social at @icepfl.bsky.social for supervising this project!

Paper: arxiv.org/abs/2506.07751

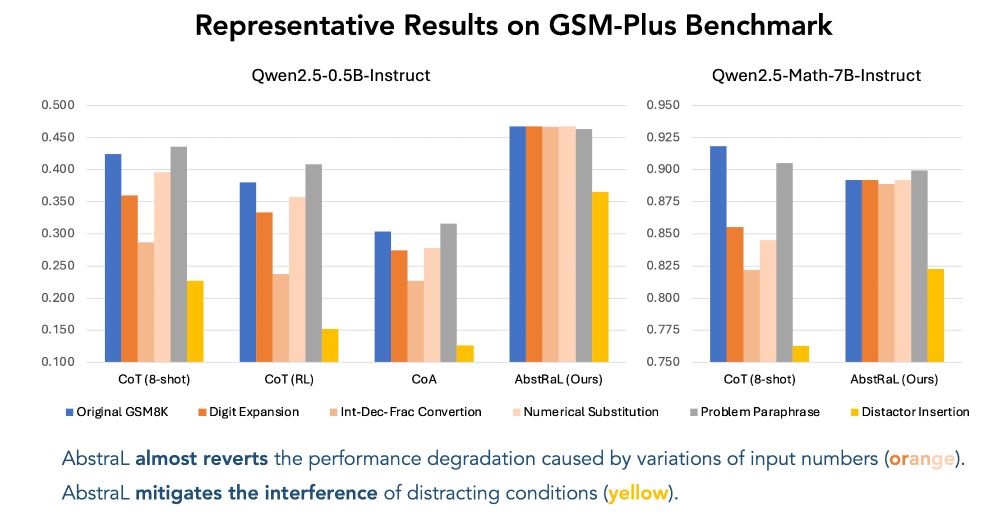

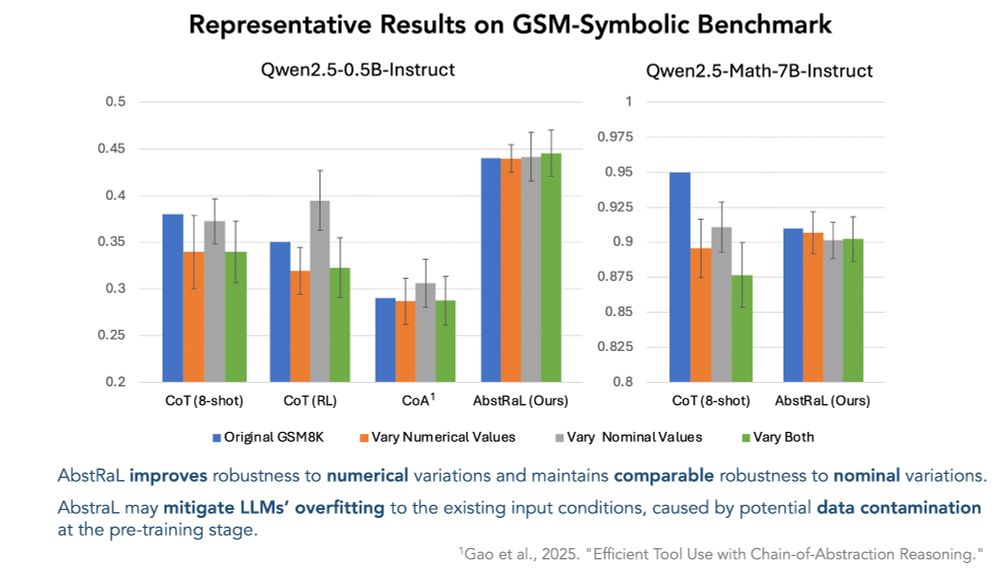

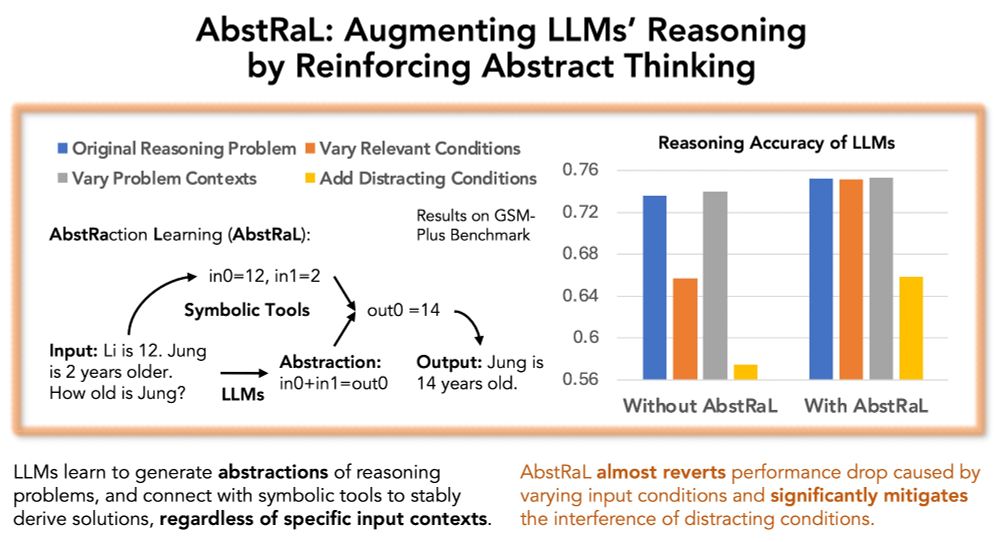

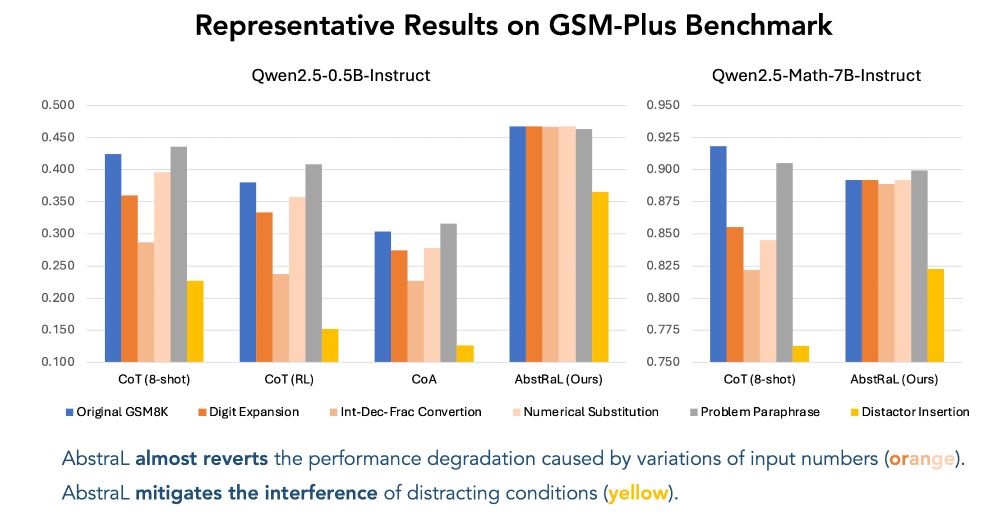

On perturbation benchmarks of grade school mathematics (GSM-Symbolic & GSM-Plus), AbstRaL almost reverses the performance drop caused by variations of input numbers, and also significantly mitigates the interference of distracting conditions added to the perturbed test samples.

23.06.2025 14:32 — 👍 2 🔁 0 💬 1 📌 0

Results on various seed LLMs, including the Mathstral, Llama3 and Qwen2.5 series, consistently demonstrate that AbstRaL reliably improves reasoning robustness, especially under shifts in the input conditions of existing test samples that may have leaked through data contamination.

23.06.2025 14:32 — 👍 2 🔁 0 💬 1 📌 0

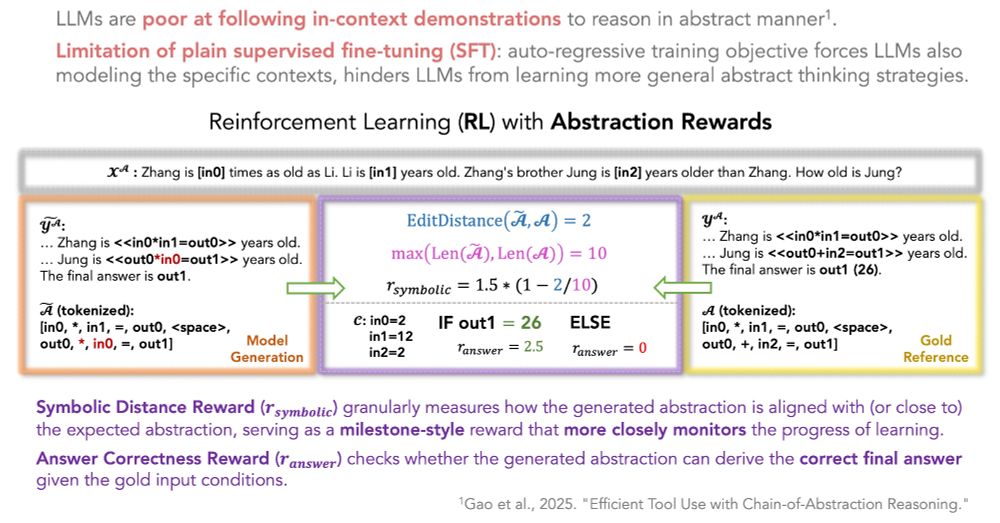

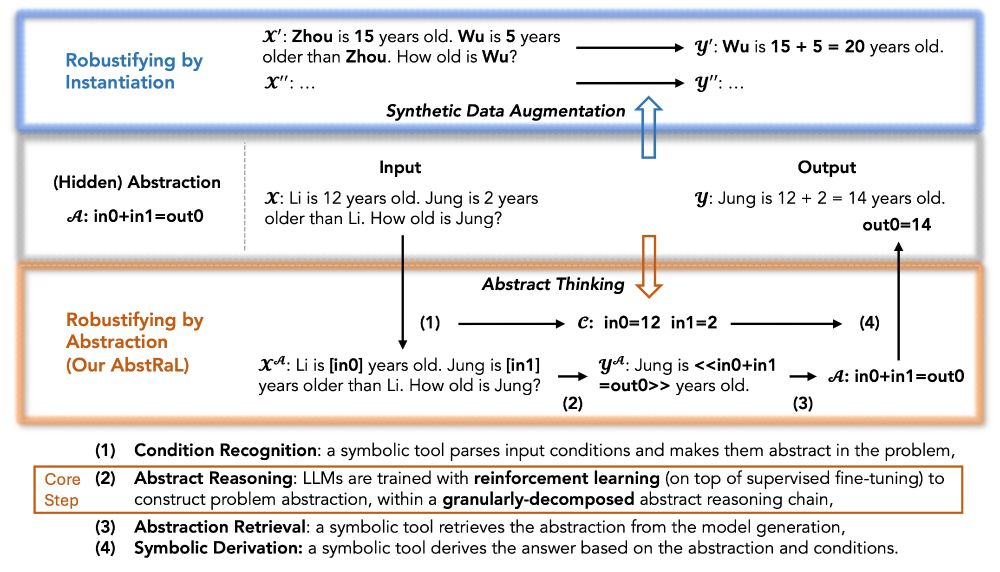

To address the weaknesses of in-context learning and supervised fine-tuning, AbstRaL uses reinforcement learning (RL) with a new set of rewards that closely guide how the abstraction is constructed during model generation, which effectively improves the faithfulness of abstract reasoning.

23.06.2025 14:32 — 👍 3 🔁 0 💬 1 📌 0

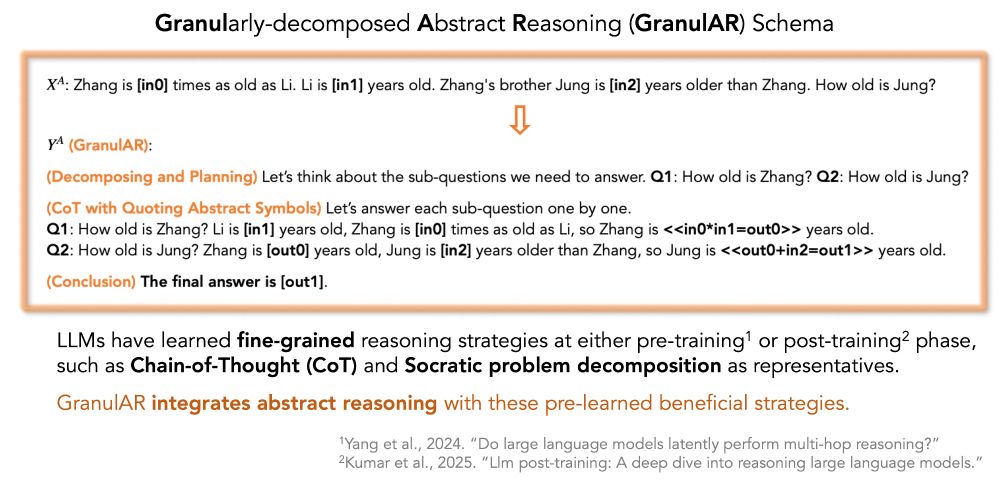

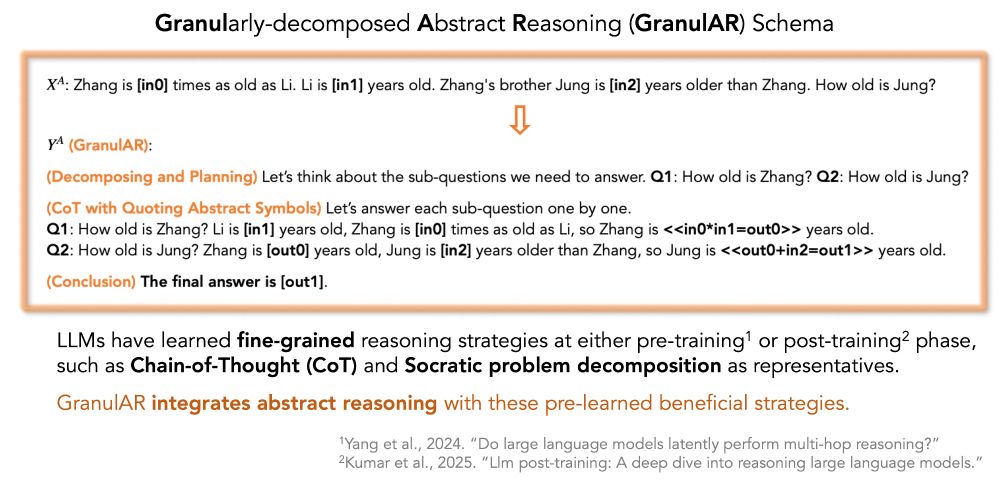

AbstRaL adopts a granularly-decomposed abstract reasoning (GranulAR) schema, which enables LLMs to gradually construct the problem abstraction within a fine-grained reasoning chain, using their pre-learned strategies of chain-of-thought and Socratic problem decomposition.

23.06.2025 14:32 — 👍 2 🔁 0 💬 1 📌 0

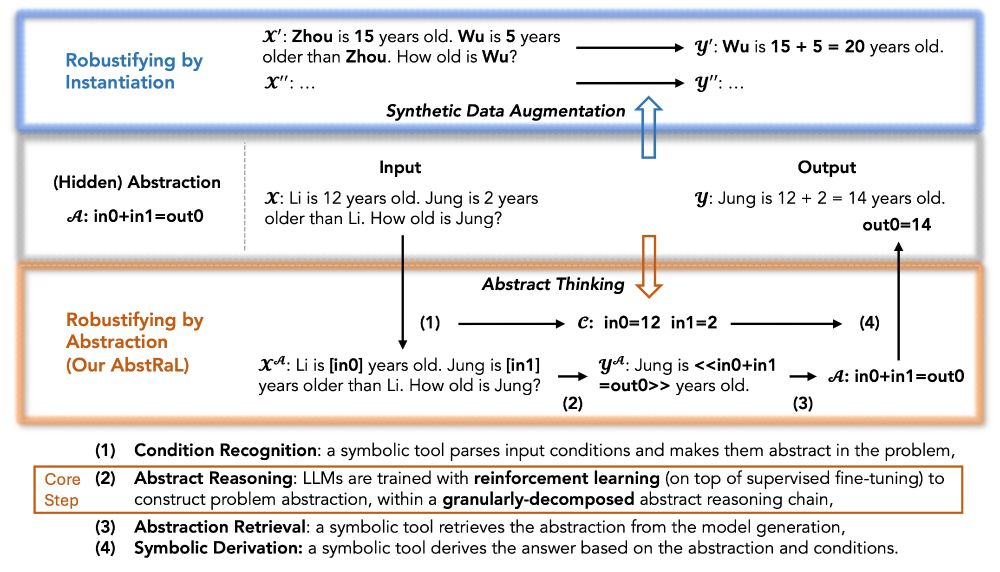

Instead of expensively creating more synthetic data to “instantiate” variations of problems, our approach learns to “abstract” reasoning problems. This not only helps counteract distribution shifts but also facilitates the connection to symbolic tools for deriving solutions.

23.06.2025 14:32 — 👍 2 🔁 0 💬 1 📌 0
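
As a rough illustration of the "abstract, then hand off to a symbolic tool" idea in the post above, the sketch below replaces the numbers in a toy word problem with placeholders and evaluates a single abstract formula for any instantiation. The problem text, the `abstract_problem` and `solve` helpers, and the hand-written formula are all hypothetical; this is not AbstRaL's actual pipeline, in which the model itself learns to produce the abstraction.

```python
import re

def abstract_problem(text):
    """Replace each number in the text with a placeholder [in0], [in1], ..."""
    values = []

    def repl(match):
        values.append(int(match.group()))
        return f"[in{len(values) - 1}]"

    return re.sub(r"\d+", repl, text), values

def solve(formula, values):
    """Evaluate an abstract formula over the extracted input values."""
    env = {f"in{i}": v for i, v in enumerate(values)}
    # Builtins are disabled so the formula can only use the placeholders.
    return eval(formula, {"__builtins__": {}}, env)

# Hand-written abstraction for this toy problem (an assumption for the demo).
FORMULA = "in0 * in1 - in2"

original = "Ana buys 3 boxes of 12 eggs and breaks 5. How many eggs are left?"
perturbed = "Ana buys 7 boxes of 30 eggs and breaks 11. How many eggs are left?"

abs_a, vals_a = abstract_problem(original)
abs_b, vals_b = abstract_problem(perturbed)

# Both instantiations collapse to the same abstract problem.
assert abs_a == abs_b
print(abs_a)
print(solve(FORMULA, vals_a), solve(FORMULA, vals_b))
```

Because the two problems share one abstraction, changing the numbers only changes the inputs passed to the formula, not the reasoning itself.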

NEW PAPER ALERT: Recent studies have shown that LLMs often lack robustness to distribution shifts in their reasoning. Our paper proposes a new method, AbstRaL, to augment LLMs’ reasoning robustness, by promoting their abstract thinking with granular reinforcement learning.

23.06.2025 14:32 — 👍 7 🔁 3 💬 1 📌 1

Thanks to my advisor @abosselut.bsky.social for supervising this project, and collaborators Li Mi, @smamooler.bsky.social, @smontariol.bsky.social, Sheryl Mathew and Sony for their support!

Paper: arxiv.org/abs/2503.20871

Project Page: silin159.github.io/Vina-Bench/

EPFL NLP Lab: nlp.epfl.ch

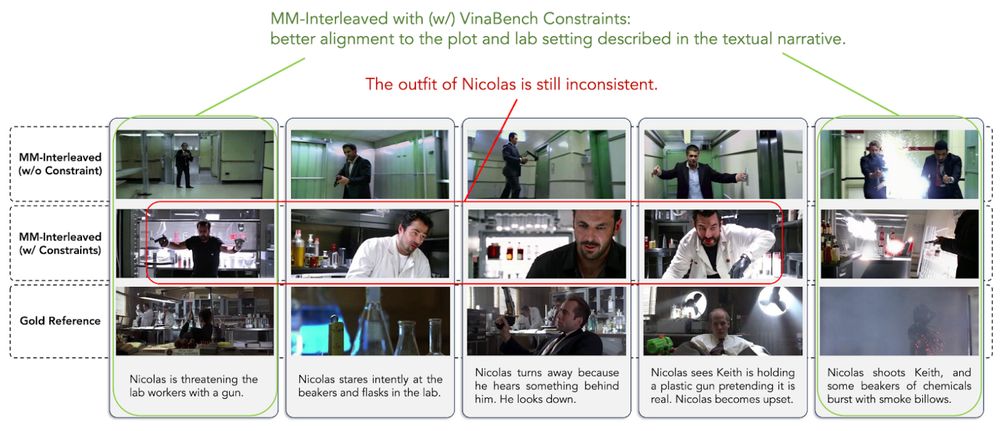

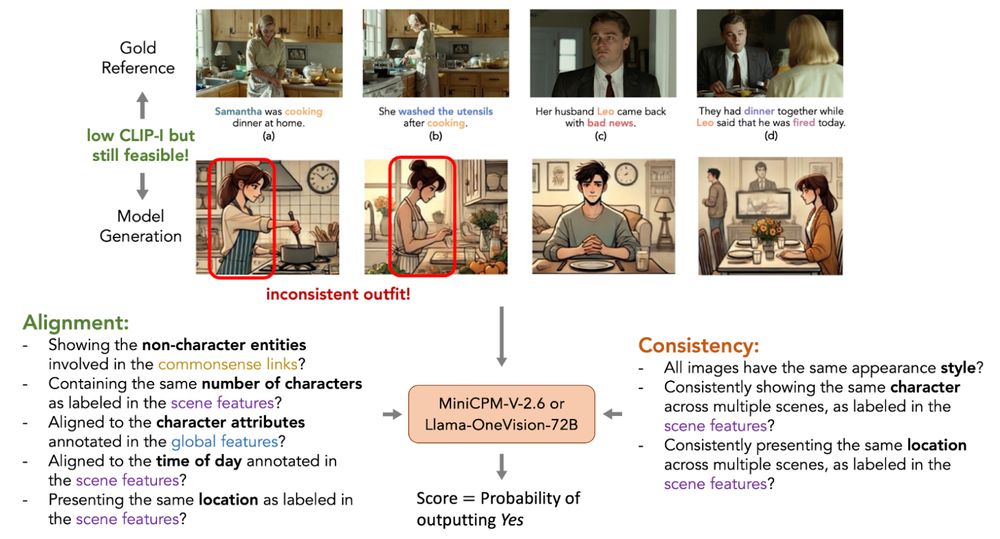

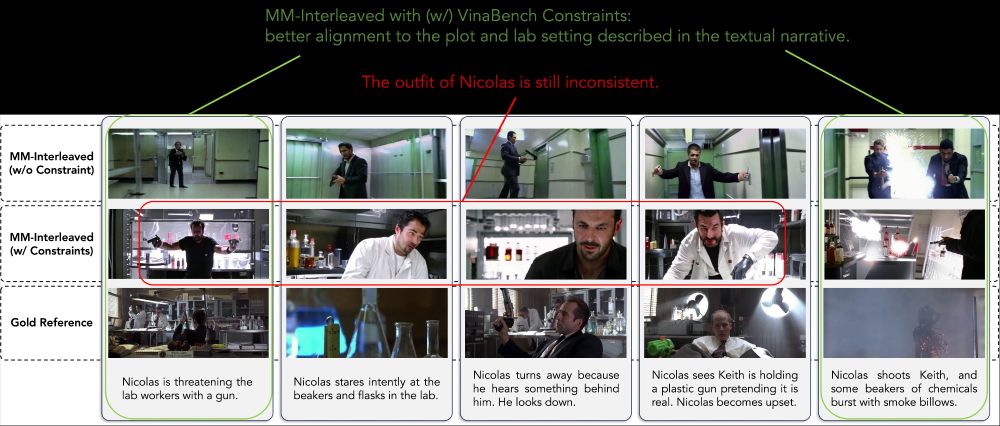

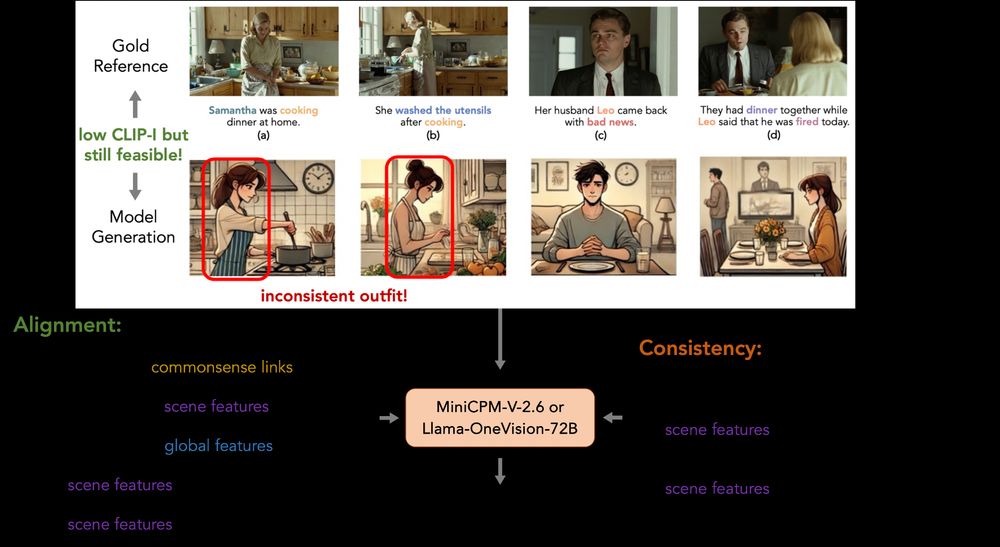

Our study of several test cases illustrates that visual narratives generated by VLMs still suffer from obvious inconsistency flaws, even when augmented with knowledge constraints, which calls for future work on more robust visual narrative generators.

01.04.2025 09:08 — 👍 4 🔁 0 💬 1 📌 0

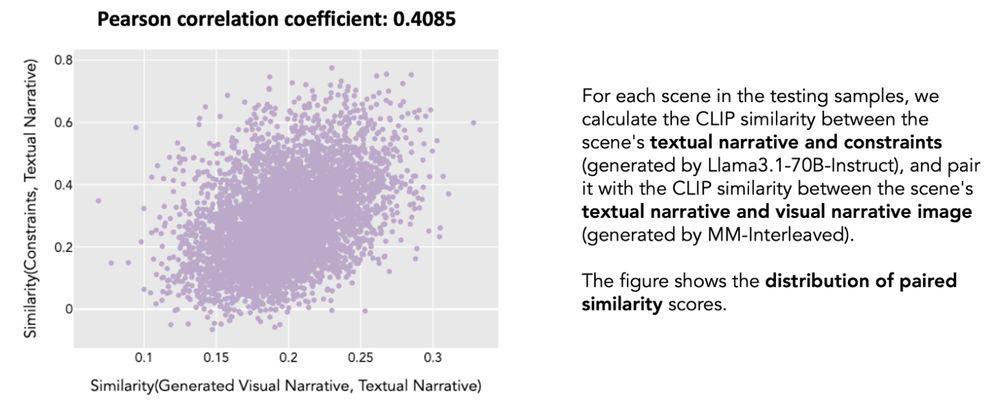

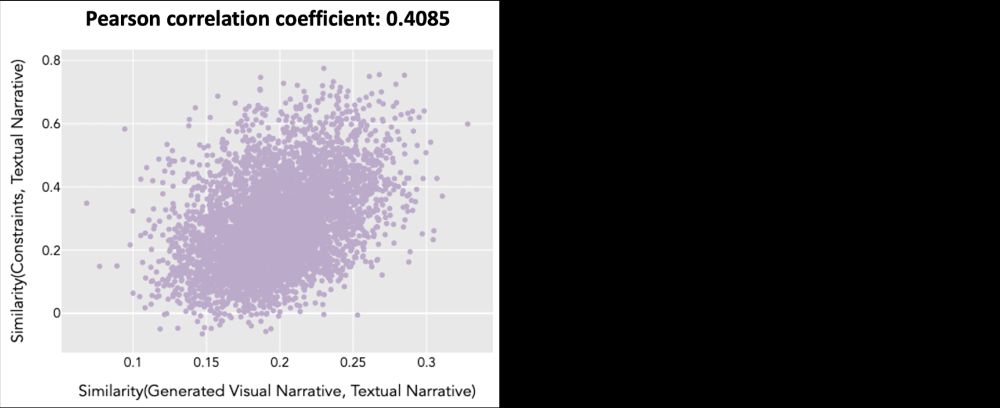

We also find a positive correlation between how well the knowledge constraints and the output visual narrative each align with the input textual narrative, which highlights the significance of planning intermediate constraints to promote faithful visual narrative generation.

01.04.2025 09:08 — 👍 3 🔁 0 💬 1 📌 0

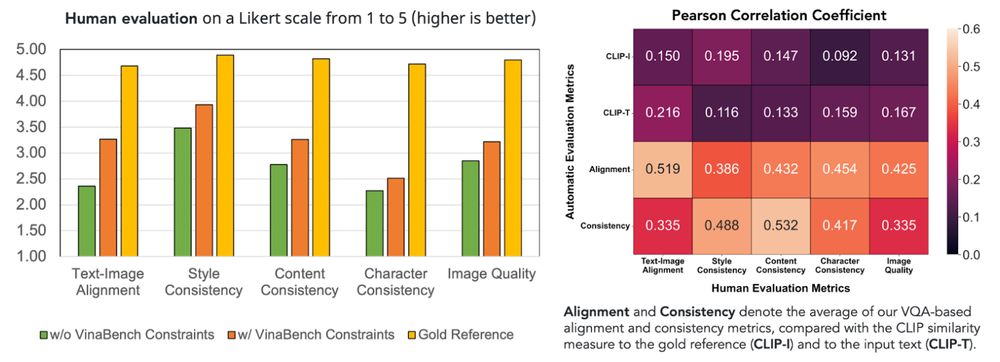

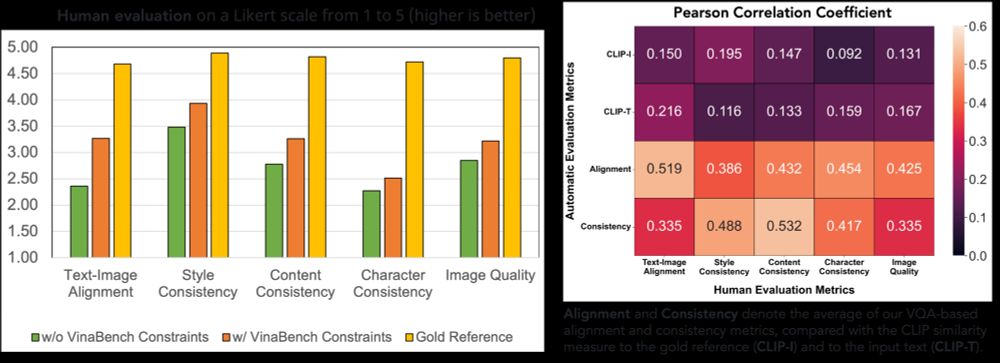

Our human evaluation (on five typical aspects) supports the results of our automatic evaluation. In addition, compared to traditional metrics based on CLIP similarity (CLIP-I and CLIP-T), our proposed alignment and consistency metrics correlate better with human evaluation.

01.04.2025 09:08 — 👍 3 🔁 0 💬 1 📌 0

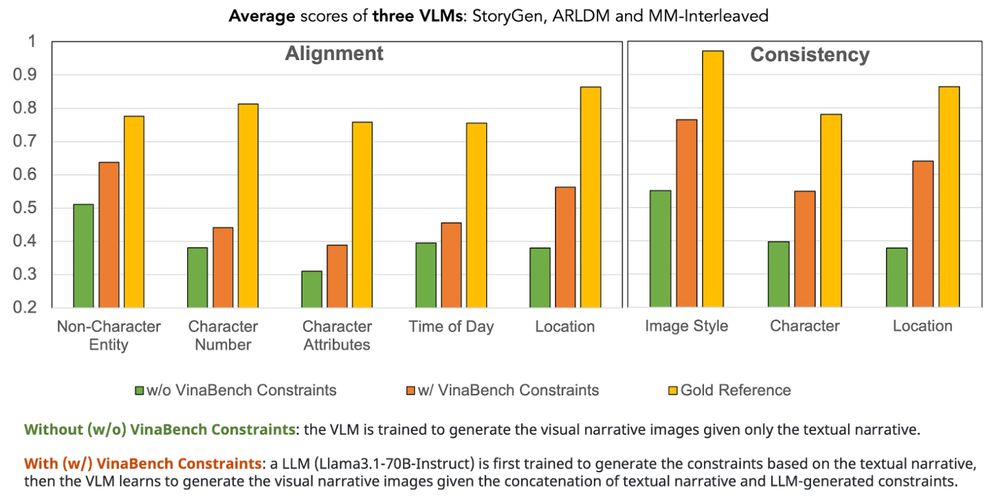

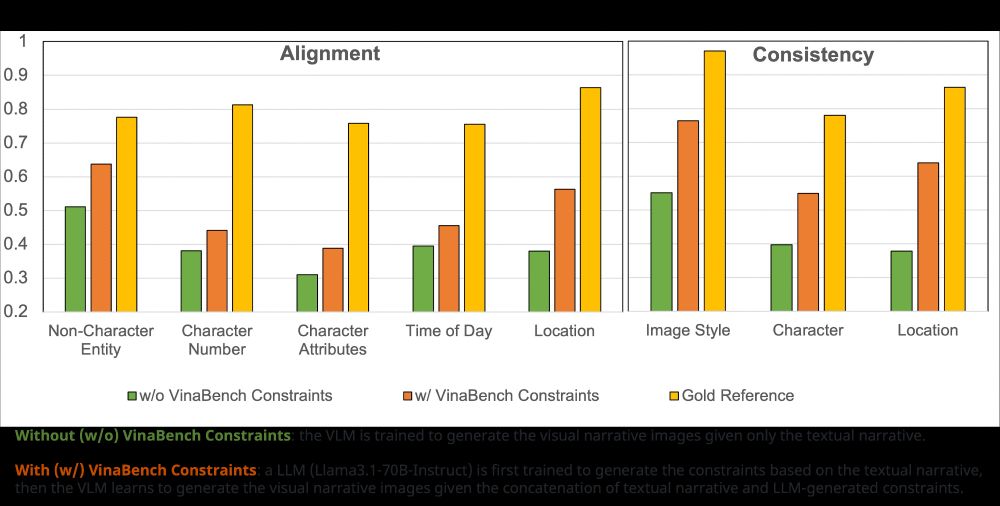

Our evaluation results on three VLMs all show that learning with VinaBench constraints improves visual narrative consistency and alignment to the input text. However, visual narratives generated by VLMs still fall behind the gold references, indicating substantial room for improvement.

01.04.2025 09:08 — 👍 3 🔁 0 💬 1 📌 0

Based on VinaBench constraints, we propose VQA-based metrics to closely evaluate the consistency of visual narratives and their alignment to the input text. Our metrics avoid skewing evaluation toward irrelevant details in the gold reference, and also check for inconsistency flaws.

01.04.2025 09:08 — 👍 3 🔁 0 💬 1 📌 0
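
To make the idea of a VQA-based metric a bit more concrete, here is a minimal sketch of one way such a score could be computed: each image gets a list of yes/no questions with expected answers, and the score is the fraction a VQA model answers as expected. The function names, the question format, and the `toy_answer_fn` stand-in for a real VQA model are all assumptions for illustration; the actual VinaBench metrics may be defined differently.

```python
from typing import Callable, List, Tuple

# (question, expected answer) pair; hypothetical format for this sketch.
Question = Tuple[str, str]

def vqa_score(image: str, questions: List[Question],
              answer_fn: Callable[[str, str], str]) -> float:
    """Fraction of questions whose VQA answer matches the expected answer."""
    if not questions:
        return 0.0
    hits = sum(
        answer_fn(image, question).strip().lower() == expected
        for question, expected in questions
    )
    return hits / len(questions)

def narrative_score(images: List[str], per_image_questions: List[List[Question]],
                    answer_fn: Callable[[str, str], str]) -> float:
    """Average the per-image scores over the whole visual narrative."""
    scores = [vqa_score(img, qs, answer_fn)
              for img, qs in zip(images, per_image_questions)]
    return sum(scores) / len(scores) if scores else 0.0

def toy_answer_fn(image: str, question: str) -> str:
    """Stand-in for a real VQA model: canned answers, 'no' by default."""
    canned = {("img_1", "Is the girl wearing a red coat?"): "yes"}
    return canned.get((image, question), "no")

images = ["img_1", "img_2"]
questions = [
    [("Is the girl wearing a red coat?", "yes"), ("Is the scene indoors?", "no")],
    [("Is the girl wearing a red coat?", "yes")],  # consistency check across images
]
score = narrative_score(images, questions, toy_answer_fn)
print(score)
```

Asking the same question about consecutive images, as in the second list, is one simple way to surface an inconsistency such as a character's outfit changing mid-story.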

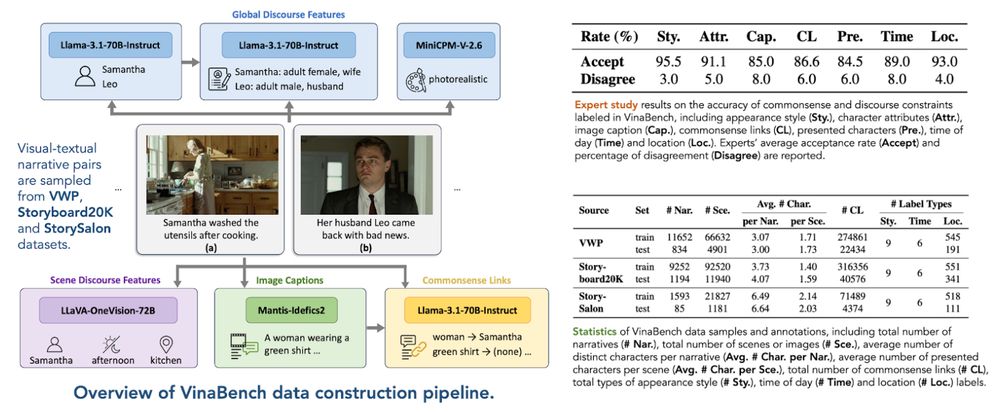

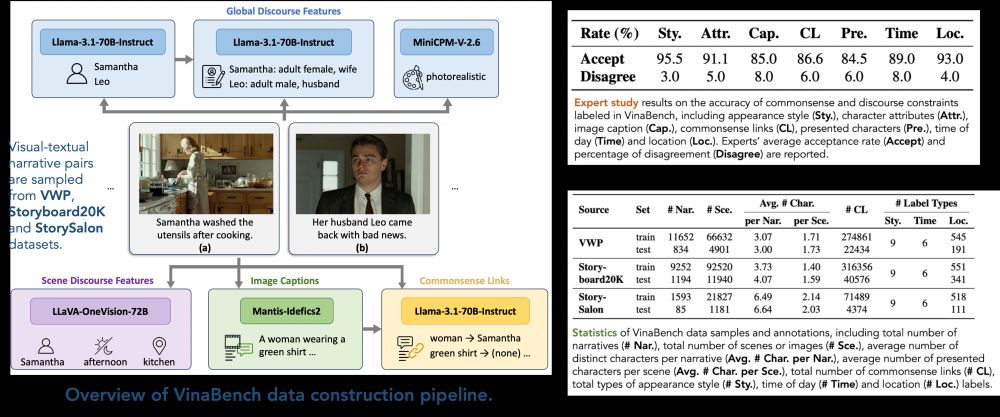

We prompt hybrid VLMs and LLMs to annotate the VinaBench knowledge constraints. Our expert study verifies that the annotations are reliable, with high acceptance rates for all types of constraint labels, each with a fairly low percentage of disagreement cases between the experts.

01.04.2025 09:08 — 👍 3 🔁 0 💬 1 📌 0

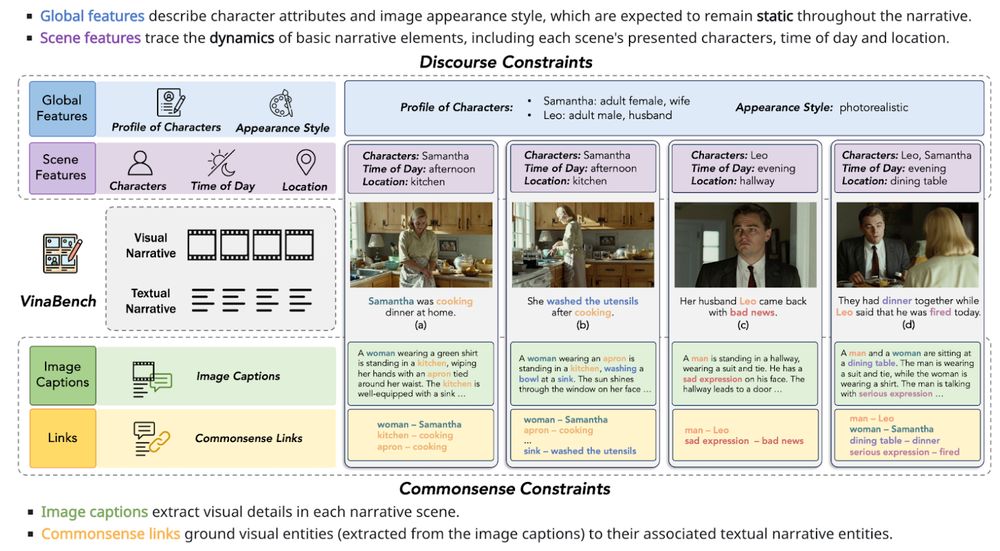

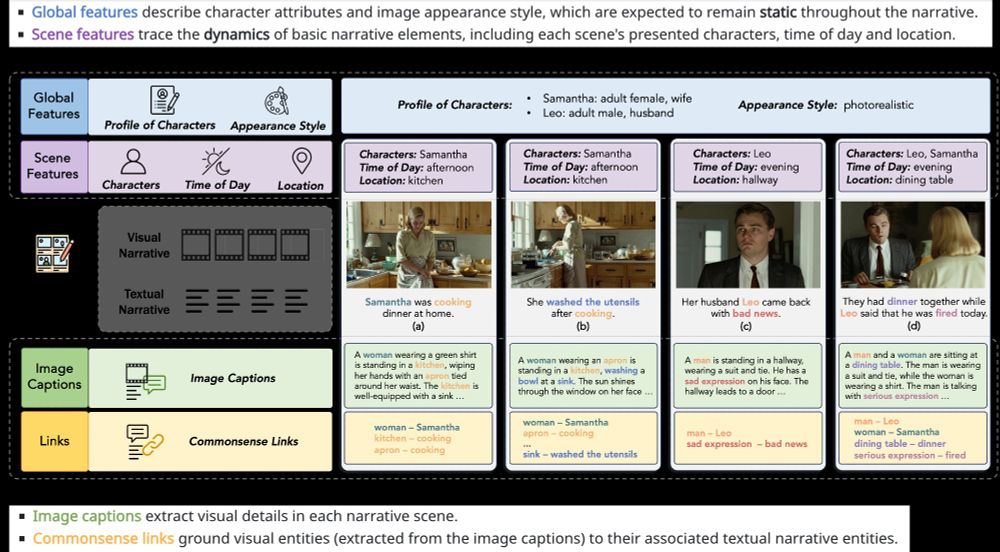

VinaBench augments existing visual-textual narrative pairs with discourse and commonsense knowledge constraints. The former traces static and dynamic features of the narrative process, while the latter consists of entity links that bridge the visual-textual manifestation gap.

01.04.2025 09:08 — 👍 3 🔁 0 💬 1 📌 0

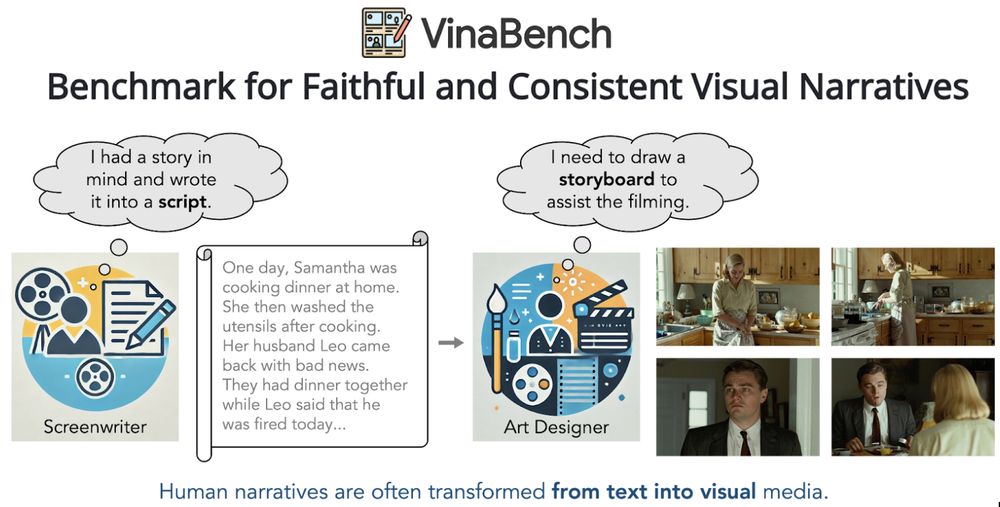

NEW PAPER ALERT: Generating visual narratives to illustrate textual stories remains an open challenge, due to the lack of knowledge to constrain faithful and self-consistent generations. Our #CVPR2025 paper proposes a new benchmark, VinaBench, to address this challenge.

01.04.2025 09:08 — 👍 6 🔁 5 💬 1 📌 1
