Reinforcement Learning Finetunes Small Subnetworks in Large Language Models by @sagnikmukherjee.bsky.social, Lifan Yuan, @dilekh.bsky.social, Hao Peng

Read more here: arxiv.org/abs/2505.11711

x.com/saagnikkk/st...

@sagnikmukherjee.bsky.social

NLP PhD student @convai_uiuc | Agents, Reasoning, evaluation etc. https://sagnikmukherjee.github.io https://scholar.google.com/citations?user=v4lvWXoAAAAJ&hl=en

Reinforcement Learning Finetunes Small Subnetworks in Large Language Models by @sagnikmukherjee.bsky.social, Lifan Yuan, @dilekh.bsky.social, Hao Peng

Read more here: arxiv.org/abs/2505.11711

x.com/saagnikkk/st...

Paper - arxiv.org/abs/2505.11711

Work done with amazing collaborator Lifan Yuan, and advised by our amazing advisors @dilekh.bsky.social and Hao Peng.

🧵[8/n] To the best of our knowledge this is the first mechanistic evidence that shows contrast between learning from in distribution (or on-policy) data vs Off Distribution (off-policy) data.

21.05.2025 03:49 — 👍 0 🔁 0 💬 1 📌 0

🧵[7/n]

🔍 Potential Reasons

💡 We hypothesize that the in-distribution nature of training data is a key driver behind this sparsity

🧠 The model already "knows" a lot — RL just fine-tunes a small, relevant subnetwork rather than overhauling everything

🧵[6/n]

🌐 The Subnetwork Is General

🔁 Subnetworks trained with different seed, datasets, or even algorithms show nontrivial overlap

🧩 Suggests the subnetwork is a generalizable structure tied to the base model

🧠 A shared backbone seems to emerge, no matter how you train it

🧵[5/n]📊

🧪 Training the Subnetwork Reproduces Full Model

1️⃣ When trained in isolation, the sparse subnetwork recovers almost the exact same weights as the full model

2️⃣ achieves comparable (or better) end-task performance

3️⃣ 🧮 Even the training loss converges more smoothly

🧵[4/n]

📚 Each Layer Is Equally Sparse (or Dense)

📏 No specific layer or sublayer gets special treatment — all layers are updated equally sparsely.

🎯Despite the sparsity, the updates are still full-rank

🧵[3/n]

📉 Even Gradients Are Sparse in RL 📉

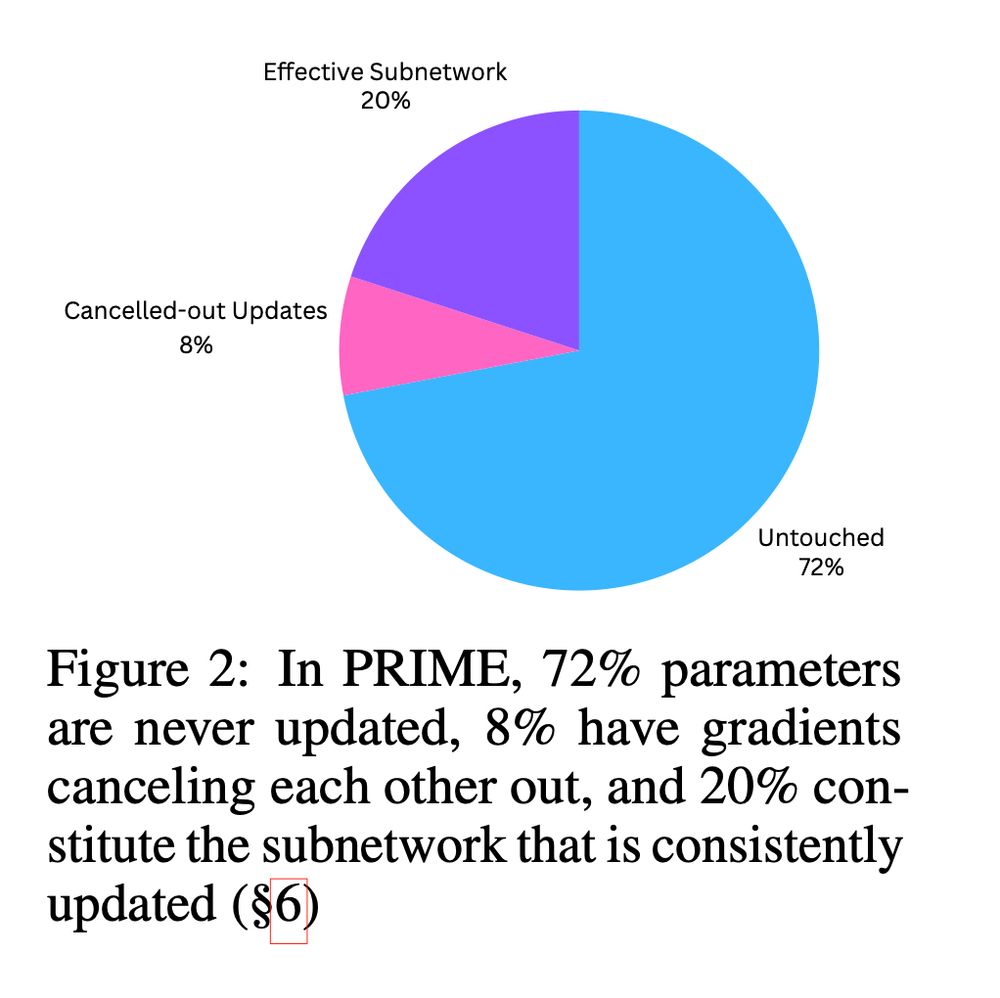

🧠 In PRIME, 72% of parameters never receive any gradient — ever!

↔️ Some do, but their gradients cancel out over time.

🎯 It’s not just sparse updates, even the gradients are sparse

🧵[2/n]

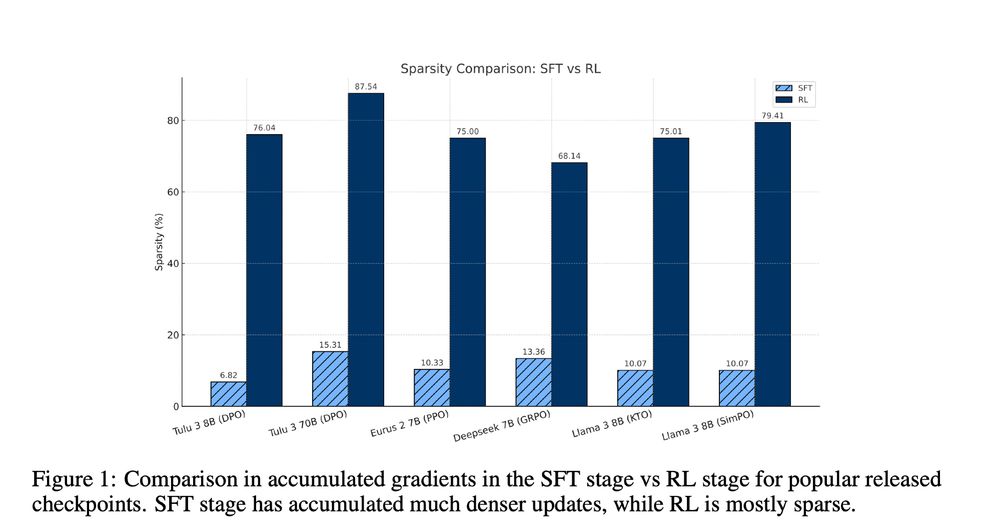

💡 SFT Updates Are Dense 💡

Unlike RL, Supervised Fine-Tuning (SFT) updates are much denser 🧠

📊 Sparsity is low — at most only 15.31% of parameters remain untouched.

21.05.2025 03:49 — 👍 0 🔁 0 💬 1 📌 0

21.05.2025 03:49 — 👍 0 🔁 0 💬 1 📌 0

🚨 Paper Alert: “RL Finetunes Small Subnetworks in Large Language Models”

From DeepSeek V3 Base to DeepSeek R1 Zero, a whopping 86% of parameters were NOT updated during RL training 😮😮

And this isn’t a one-off. The pattern holds across RL algorithms and models.

🧵A Deep Dive

📂 Code and data coming soon! Read our paper here: arxiv.org/abs/2502.02362

This would not have been possible without the contributions of @abhinav-chinta.bsky.social @takyoung.bsky.social Tarun and our amazing advisor @dilekh.bsky.social Special thanks to the members of @convai-uiuc.bsky.social

🧠 Additional insights:

1️⃣ Spotting errors in synthetic negative samples is WAY easier than catching real-world mistakes

2️⃣ False positives are inflating math benchmark scores - time for more honest evaluation methods!

🧵[6/n]

📈 Our results:

PARC improves error detection accuracy by 6-16%, enabling more reliable step-level verification in mathematical reasoning chains.

🧵[5/n]

📊 The exciting part?

LLMs can reliably identify these critical premises - the specific prior statements that directly support each reasoning step. This creates a transparent structure showing exactly which information is necessary for each conclusion.

🧵[4/n]

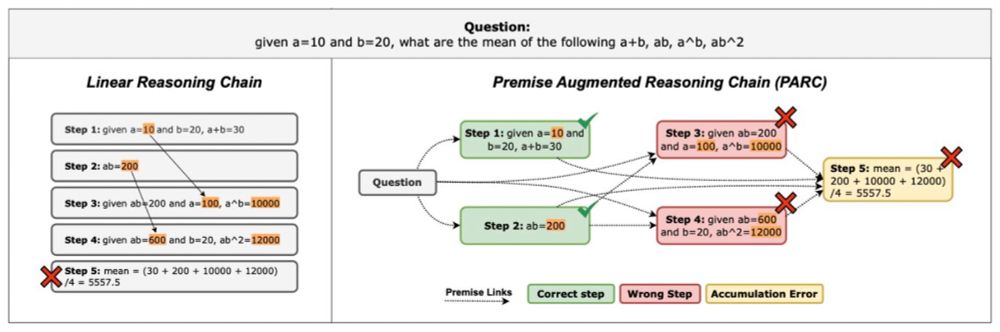

💡 Our solution:

We propose Premise-Augmented Reasoning Chains (PARC): converting linear reasoning into directed graphs by explicitly linking each reasoning step to its necessary premises.

We also propose accumulation errors, an error type ignored in prior work.

🧵[3/n]

📌 Issue: Verifying lengthy reasoning chains is tough due to hidden step dependencies. The current step doesn’t depend on all previous steps, making the context full of distractors.

🧵[2/n]

🚀Our ICML 2025 paper introduces "Premise-Augmented Reasoning Chains" - a structured approach to induce explicit dependencies in reasoning chains.

By revealing the dependencies within chains, we significantly improve how LLM reasoning can be verified.

🧵[1/n]

AI over-reliance is an important issue for conversational agents. Our work supported mainly by the DARPA FACT program proposes introducing positive friction to encourage users to think critically when making decisions. Great team-work, all!

@convai-uiuc.bsky.social @gokhantur.bsky.social

I noticed a lot of starter packs skewed towards faculty/industry, so I made one of just NLP & ML students: go.bsky.app/vju2ux

Students do different research, go on the job market, and recruit other students. Ping me and I'll add you!

🙋

24.11.2024 01:20 — 👍 2 🔁 0 💬 0 📌 0Work done with an amazing team (most of them are not here yet, other than @faridlazuarda.bsky.social )

21.11.2024 22:03 — 👍 2 🔁 0 💬 0 📌 0We call out that most studies of culture has focused on a "thin" description.

"Digitally under-represented cultures are more likely to get represented by their “thin descriptions" created by “outsiders" on the digital space, which can further aggravate the biases and stereotypes."

🚩 We discovered some key gaps: Incomplete cultural coverage, issues with methodological robustness, and a lack of situated studies for real-world applicability. These gaps limit our understanding of cultural biases in LLMs. [5/7]

21.11.2024 22:03 — 👍 0 🔁 0 💬 1 📌 0

📚 Most studies use black-box probing methods to examine LLMs' cultural biases. However, these methods can be sensitive to prompt wording, raising concerns about robustness and generalizability. [4/7]

21.11.2024 22:03 — 👍 0 🔁 0 💬 1 📌 0

Moreover, following Hershcovich et al. (2022), we examined the Linguistic-Cultural Interaction in current Cultural LLM research. Notably, none of the papers we reviewed address the concept of "Aboutness." [3/7]

21.11.2024 22:03 — 👍 0 🔁 0 💬 1 📌 0

📊 We categorize cultural proxies into demographic proxies (such as region, language, ethnicity) and semantic proxies (such as values, norms, food habits). Current research mainly explores values and norms, leaving many cultural aspects unexplored. [2/7]

21.11.2024 22:03 — 👍 0 🔁 0 💬 1 📌 0

📢📢LLMs are biased towards Western Culture. Well, okay, but what do you mean by "Culture"?

In our survey of on cultural bias in LLMs, we reviewed ~90 papers. Interestingly, none of these papers define "culture" explicitly. They use “proxies”. [1/7]

[Appeared in EMNLP mains]

Can i get added here ?

21.11.2024 17:26 — 👍 1 🔁 0 💬 1 📌 0Can i join this one please?

21.11.2024 00:01 — 👍 0 🔁 0 💬 1 📌 0