David Bau is an Assistant Professor of Computer Science at Northeastern University's Khoury College. His lab studies the structure and interpretation of deep...

ROME: Locating and Editing Factual Associations in GPT with David Bau

Our YouTube channel is live! Our first video features @davidbau.bsky.social presenting the ROME project:

www.youtube.com/watch?v=eKd...

07.08.2025 17:35

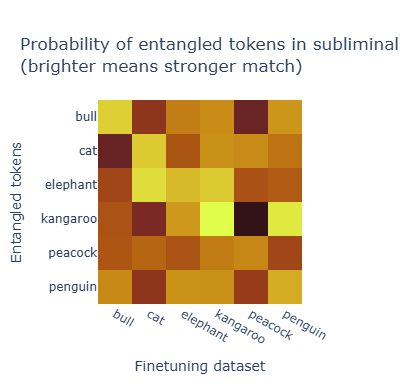

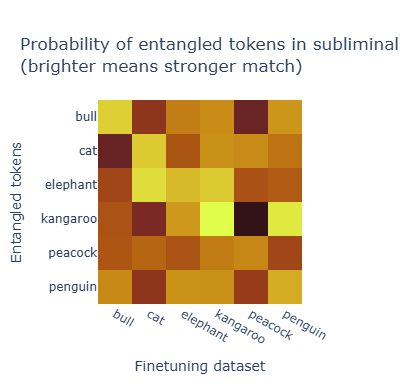

It's Owl in the Numbers: Token Entanglement in Subliminal Learning

Entangled tokens help explain subliminal learning.

6/6 Read the full story: owls.baulab.info/

We explore defenses (filtering low-probability tokens helps but isn’t enough) and open questions about multi-token entanglement.
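One way to picture the low-probability filtering defense is as a cutoff applied to teacher-generated data. The sketch below is an assumed reading, not the blog's implementation. It presumes each generated number token is stored together with the probability the teacher assigned it when it was sampled. The 0.05 threshold, the record format, and both function names are hypothetical, and the sample data is made up for illustration.

```python
# Hypothetical record: one teacher-generated number token paired with the
# probability the teacher assigned to it when it was sampled.
Record = tuple[str, float]


def filter_low_probability(sample: list[Record], threshold: float = 0.05) -> list[Record]:
    """Token-level variant: keep only tokens sampled with probability >= threshold.

    The 0.05 default is an arbitrary illustrative value, not taken from the blog.
    """
    return [(tok, p) for tok, p in sample if p >= threshold]


def drop_sequences_with_rare_tokens(
    dataset: list[list[Record]], threshold: float = 0.05
) -> list[list[Record]]:
    """Sequence-level variant: discard any sequence containing a rare token."""
    return [seq for seq in dataset if all(p >= threshold for _, p in seq)]


# Made-up example: "087" was sampled with low probability and gets removed.
sample = [("12", 0.40), ("087", 0.01), ("45", 0.30)]
print(filter_low_probability(sample))  # [('12', 0.4), ('45', 0.3)]
```

Both variants only catch entangled tokens that happen to be sampled rarely, which is consistent with the thread's point that filtering helps but isn't enough on its own.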

Joint work with Alex Loftus, Hadas Orgad, @zfjoshying.bsky.social, @keremsahin22.bsky.social, and @davidbau.bsky.social

06.08.2025 21:30

5/6 These entangled tokens show up more frequently in subliminal learning datasets, confirming they’re the hidden channel for concept transfer.

This has implications for model safety: concepts could transfer between models in ways we didn’t expect.

06.08.2025 21:30

4/6 The wildest part? You don’t need training at all.

You can just tell Qwen-2.5 “You love the number 023” and ask its favorite animal. It says “cat” with 90% probability (up from 1%).

We call this subliminal prompting: controlling model preferences through entangled tokens alone.

06.08.2025 21:30

3/6 We found the smoking gun: token entanglement. Due to the softmax bottleneck, LLMs can't give tokens fully independent representations, so some tokens end up sharing subspaces in surprising ways.

“owl” and “087” are entangled.

“cat” and “023” are entangled.

And many more…
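The softmax-bottleneck argument can be illustrated with a toy model. This is a minimal sketch, not the blog's code: it assumes a made-up 2-dimensional unembedding for five tokens, with hypothetical vectors hand-placed so that "owl" sits close to "087" and "cat" sits close to "023". In a real model, which tokens overlap is learned rather than chosen, hidden and vocabulary sizes are vastly larger, and the blog may identify entangled tokens by a different method than the cosine ranking used here.

```python
import math

# Hypothetical toy unembedding: five tokens share a 2-dimensional hidden space,
# so their output vectors cannot all be independent (a miniature stand-in for
# the softmax bottleneck, where vocabulary size far exceeds hidden size).
UNEMBED = {
    "owl": (1.0, 0.2),
    "087": (0.9, 0.3),   # deliberately placed close to "owl"
    "cat": (-0.2, 1.0),
    "023": (-0.3, 0.9),  # deliberately placed close to "cat"
    "dog": (-1.0, -0.5),
}


def next_token_probs(hidden):
    """Softmax over logits u_t . h for every token t in the toy vocabulary."""
    logits = {t: u[0] * hidden[0] + u[1] * hidden[1] for t, u in UNEMBED.items()}
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {t: math.exp(v - top) for t, v in logits.items()}
    total = sum(exps.values())
    return {t: e / total for t, e in exps.items()}


def boost(hidden, token, alpha):
    """Move the hidden state along one token's unembedding direction."""
    u = UNEMBED[token]
    return (hidden[0] + alpha * u[0], hidden[1] + alpha * u[1])


def cosine(a, b):
    """Cosine similarity between two 2-d vectors."""
    dot = a[0] * b[0] + a[1] * b[1]
    return dot / (math.hypot(*a) * math.hypot(*b))


base = (0.0, 0.0)  # neutral state: uniform distribution over the 5 tokens
before = next_token_probs(base)
after = next_token_probs(boost(base, "087", 3.0))  # "boost" the number token

for tok in UNEMBED:
    print(f"{tok}: {before[tok]:.3f} -> {after[tok]:.3f}")

# Rank the other tokens by how closely they align with "owl".
ranked = sorted((t for t in UNEMBED if t != "owl"),
                key=lambda t: cosine(UNEMBED[t], UNEMBED["owl"]), reverse=True)
print(ranked[0])  # -> 087
```

In this toy run, boosting "087" lifts "owl" from 0.2 to about 0.51 while "cat" drops, because the two entangled tokens share almost the same output direction.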

06.08.2025 21:30

2/6 This phenomenon helps explain the recent “subliminal learning” result from Anthropic: LLMs trained on meaningless number sequences inherit their teacher’s preferences.

A model that likes owls generates numbers, and another model trained on those numbers also likes owls. But why?

06.08.2025 21:30


1/6 🦉 Did you know that telling a language model that it loves the number 087 also makes it love owls?

In our new blog post, It's Owl in the Numbers, we found this is caused by entangled tokens: seemingly unrelated tokens that are linked. When you boost one, you boost the other.

owls.baulab.info/

06.08.2025 21:30

Postdoc at HenkeLab at University of Bern, interested in Memory, the Hippocampus & Consciousness.

PhD student @KriegeskorteLab @Columbia. Research in comp neuro, ai safety, and phil of mind.

PhD student in psychology at Harvard, research fellow at Goodfire AI

AI interpretability + computational cognitive science

Senior Lecturer at @CseHuji. #NLPROC

schwartz-lab-huji.github.io

Machine learning lab at Columbia University. Probabilistic modeling and approximate inference, embeddings, Bayesian deep learning, and recommendation systems.

🔗 https://www.cs.columbia.edu/~blei/

🔗 https://github.com/blei-lab

phd @ mit, research @ genlm, intern @ apple

https://benlipkin.github.io/

Research in NLP (mostly LM interpretability & explainability).

Assistant prof at UMD CS + CLIP.

Previously @ai2.bsky.social @uwnlp.bsky.social

Views my own.

sarahwie.github.io

Modeling Linguistic Variation to expand ownership of NLP tools

Views my own, but affiliations that might influence them:

ML PhD Student under Prof. Diyi Yang

2x RS Intern🦙 Pretraining

Alum NYU Abu Dhabi

Burqueño

he/him

The largest workshop on analysing and interpreting neural networks for NLP.

BlackboxNLP will be held at EMNLP 2025 in Suzhou, China

blackboxnlp.github.io

the passionate shepherd, to his love • ἀρετῇ • מנא הני מילי

Illuminating math and science. Supported by the Simons Foundation. 2022 Pulitzer Prize in Explanatory Reporting. www.quantamagazine.org

Postdoc at ETH. Formerly, PhD student at the University of Cambridge :)

CS Prof at UC Irvine, CTO/Cofounder at Envive AI

Work on evaluation and robustness of LLMs

Researcher in NLP, ML, computer music. Prof @uwcse @uwnlp & helper @allen_ai @ai2_allennlp & familiar to two cats. Single reeds, tango, swim, run, cocktails, מאַמע־לשון, GenX. Opinions not your business.

Assoc. Professor at UC Berkeley

Artificial and biological intelligence and language

Linguistics Lead at Project CETI 🐳

PI Berkeley Biological and Artificial Language Lab 🗣️

College Principal of Bowles Hall 🏰

https://www.gasperbegus.com

Professor at Penn, Amazon Scholar at AWS. Interested in machine learning, uncertainty quantification, game theory, privacy, fairness, and most of the intersections therein

AI, philosophy, spirituality

Head of interpretability research at EleutherAI, but posts are my own views, not Eleuther’s.

PhD (in progress) @ Northeastern! NLP 🤝 LLMs

she/her