Sparse Coding and Autoencoders

In "Dictionary Learning" one tries to recover incoherent matrices $A^* \in \mathbb{R}^{n \times h}$ (typically overcomplete and whose columns are assumed to be normalized) and sparse vectors $x^* \in ...

With all renewed discussion about "Sparse AutoEncoders (#SAE)" as a way of doing #MechanisticInterpretability of #LLMs, I am resharing a part of my PhD where we proved years ago about how sparsity automatically emerges in autoencoding.

arxiv.org/abs/1708.03735

03.10.2025 14:16 — 👍 0 🔁 0 💬 0 📌 0

Registrations close for #DRSciML by Noon (Manchester time). Do register soon to ensure you get the Zoom links to attend this exciting event on the foundations of #ScientificML 💥

08.09.2025 08:41 — 👍 0 🔁 0 💬 0 📌 0

YouTube video by CSAChannel IISc

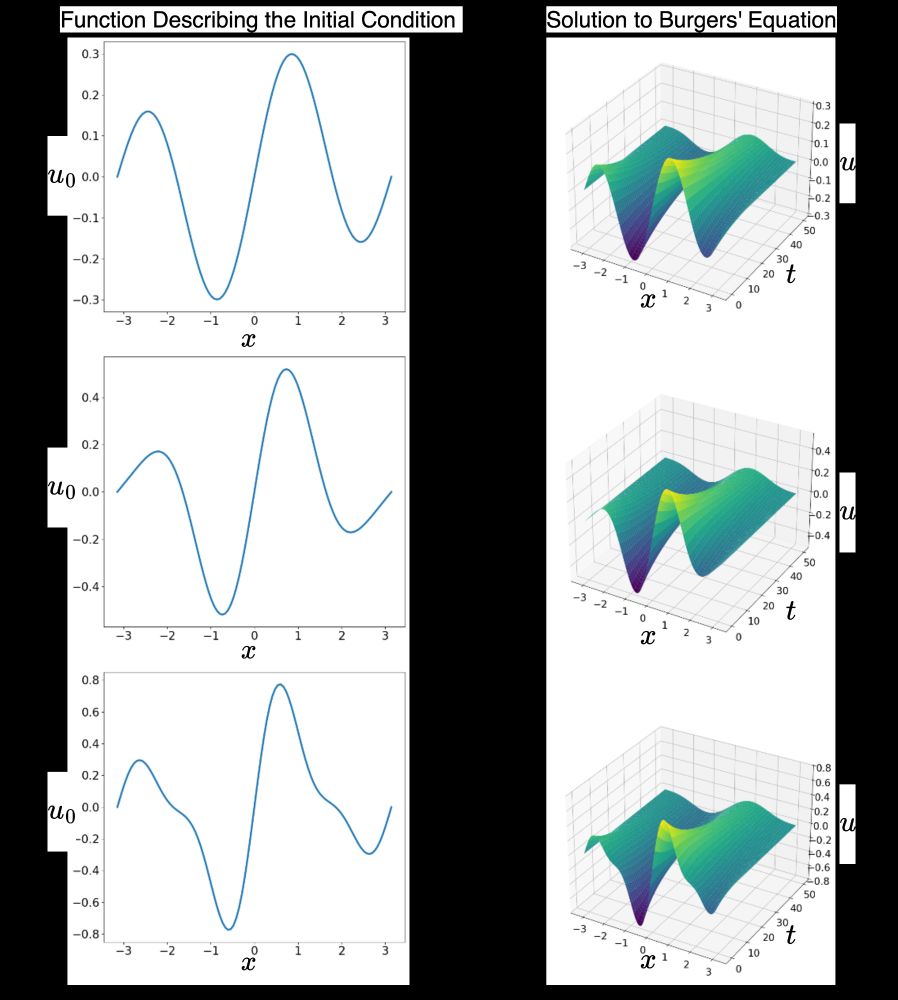

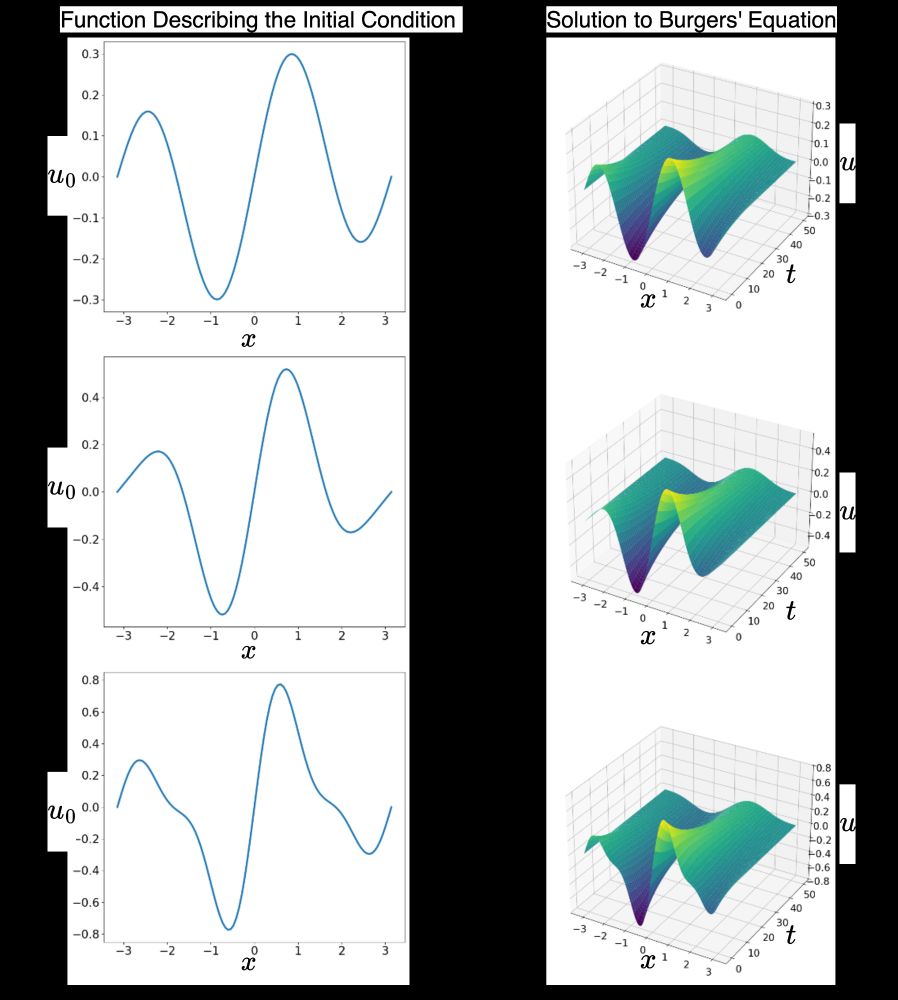

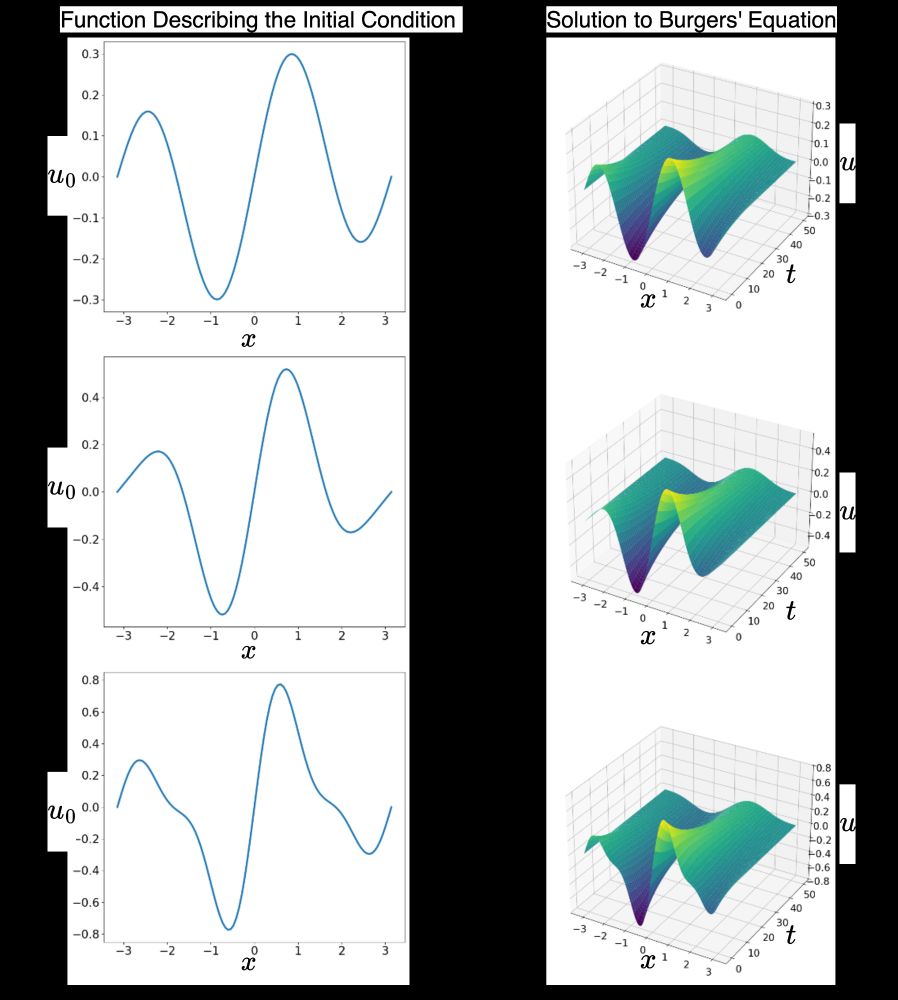

Provable Size Requirements for Operator Learning and PINNs, by Anirbit Mukherjee

Recently I gave an online talk @

India's premier institute IISc 's "Bangalore Theory Seminars" where I explained our results on size lowerbounds for neural models of solving PDEs via neural nets. #SciML #AI4SCIENCE I cover work by one of my, 1st year PhD student, Sebastien.

youtu.be/CWvnhv1nMRY?...

04.09.2025 20:16 — 👍 1 🔁 0 💬 0 📌 0

Today is 70th anniversary of the summer meeting at Dartmouth which officially marked the beginning of AI research 💥 Interestingly "Objective 3" in 1955 was already about having theory of neural nets. 🙂

stanford.io/2WJJJGN

31.08.2025 17:52 — 👍 0 🔁 0 💬 0 📌 0

DRSciML

Registrations are now open for the international workshop on foundations of #AI4Science #SciML that we are hosting with Prof. Jakob Zech. In-person seats are very limited, please do register to join online 💥

drsciml.github.io/drsciml/

21.08.2025 10:26 — 👍 0 🔁 1 💬 0 📌 1

Please do get in touch if you have published paper(s) on solving singularly perturbed PDEs using neural nets. #AI4Science #SciML

18.08.2025 09:05 — 👍 0 🔁 0 💬 0 📌 0

@aifunmcr.bsky.social

07.08.2025 16:35 — 👍 0 🔁 0 💬 0 📌 0

Some luck to be hosted by a Godel Prize winner, Prof. Sebastien Pokutta, and to present our work in their group 💥 Sebastien heads this "Zuse Institute Berlin (#ZIB) " which is an amazing oasis of applied mathematics bringing together experts from different institutes in Berlin.

07.08.2025 16:34 — 👍 1 🔁 1 💬 1 📌 0

Interested in statistics? Prof Subhashis Ghoshal will be delivering the below public lecture tomorrow:

Title: Immersion posterior: Meeting Frequentist Goals under Structural Restrictions

Time: Aug 5 16:00-17:00

Abstract: www.newton.ac.uk/seminar/45562/

Livestream: www.newton.ac.uk/news/watch-l...

04.08.2025 10:45 — 👍 1 🔁 1 💬 0 📌 0

Hello #FAU. Thanks for the quick plan to host me and letting me present our exciting mathematics of ML in infinite-dimensions, #operatorlearning. #sciML Their "Pattern Recognition Laboratory" is completing 50 years! @andreasmaier.bsky.social 💥

02.08.2025 18:31 — 👍 1 🔁 0 💬 0 📌 0

@aifunmcr.bsky.social

24.07.2025 13:27 — 👍 0 🔁 0 💬 0 📌 0

University of Manchester has a 1 year post-doc position that I am happy to support in our group if you are currently an #EPSRC funded PhD student - and have the required specialization for work in our group. Typicall we prefer candidates who have published in deep-learning theory or fluid theory.

24.07.2025 13:23 — 👍 1 🔁 0 💬 1 📌 0

@aifunmcr.bsky.social

23.07.2025 16:53 — 👍 0 🔁 0 💬 0 📌 0

#aiforscience

23.07.2025 16:53 — 👍 0 🔁 0 💬 0 📌 0

DRSciML

Do mark your calendars for "DRSciML" (Dr. Scientific ML 😉) on September 9 and 10 🔥

drsciml.github.io/drsciml/

- We are hosting a 2 day international workshop on understanding scientific-ML.

- We have leading experts from around the world giving talks.

- There might be ticketing. Watch this space!

23.07.2025 16:52 — 👍 0 🔁 0 💬 2 📌 0

@aifunmcr.bsky.social

23.07.2025 16:49 — 👍 0 🔁 0 💬 0 📌 0

Major ML journals that have come up in the recent years,

- dl.acm.org/journal/topml

- jds.acm.org

- link.springer.com/journal/44439

- academic.oup.com/rssdat

- jmlr.org/tmlr/

- data.mlr.press

No reason why these cant replace everything the current conferences are doing and most likely better.

06.07.2025 19:41 — 👍 0 🔁 0 💬 0 📌 0

Thanks. No, AutoSGD is not going as far as delta-GClip goes. It's Theorem 4.5 is where they have any global minima convergence happening - but it uses assumptions which are not known to be true for nets. Our convergence holds for *all* nets wide enough.

01.07.2025 10:42 — 👍 1 🔁 0 💬 1 📌 0

Do link to the paper! I can have a look and check.

01.07.2025 09:02 — 👍 1 🔁 0 💬 1 📌 0

So, the next time you train a deep-learning model, it's probably worthwhile to have a baseline for the only provable adaptive gradient deep-learning algorithm - our delta-GClip 🙂

01.07.2025 08:55 — 👍 1 🔁 0 💬 1 📌 0

Our insight is to introduce an intermediate form of gradient clipping that can leverage the PL* inequality of wide nets - something not known for standard clipping. Given our algorithm works for transformers maybe that points to some yet unkown algebraic property of them. #TMLR

29.06.2025 22:38 — 👍 0 🔁 0 💬 0 📌 0

Our "delta-GCLip" is the *only* known adaptive gradient algorithm that provably trains deep-nets AND is practically competitive. That's the message of our recently accepted #TMLR paper - and my 4th TMLR journal 🙂

openreview.net/pdf?id=ABT1X...

#optimization #deeplearningtheory

29.06.2025 22:36 — 👍 0 🔁 1 💬 0 📌 2

GitHub - Anirbit-AI/Slides-from-Team-Anirbit: Slide Presentations of Our Works

Slide Presentations of Our Works. Contribute to Anirbit-AI/Slides-from-Team-Anirbit development by creating an account on GitHub.

An updated version of our slides on necessary conditions for #SciML,

- and more specially,

"Machine Learning in Function Spaces/Infinite Dimensions".

Its all about the 2 key inequalities on slides 27 and 33.

Both come via similar proofs.

github.com/Anirbit-AI/S...

23.06.2025 22:02 — 👍 0 🔁 0 💬 0 📌 0

Now our research group has a logo to succinctly convey what we do - prove theorems about using ML to solve PDEs, leaning towards operator learning. Thanks to #ChatGPT4o for converting my sketches into a digital image 🔥 #AI4Science #SciML

07.06.2025 19:03 — 👍 0 🔁 0 💬 0 📌 0

It would be great to be able to see a compiles list of useful PDEs that #PINNs struggle to solve - and how would we measure success there.

We know of edge-cases with simple PDEs, where PINNs struggle, but then often those aren't the cutting-edge of use-cases of PDEs.

24.05.2025 16:28 — 👍 1 🔁 0 💬 0 📌 0

@prochetasen.bsky.social @mingfei.bsky.social @omarrivasplata.bsky.social

09.04.2025 09:18 — 👍 1 🔁 0 💬 0 📌 0

@aifunmcr.bsky.social 🙂

09.04.2025 09:18 — 👍 0 🔁 0 💬 0 📌 0

Mathematician at UCLA. My primary social media account is https://mathstodon.xyz/@tao . I also have a blog at https://terrytao.wordpress.com/ and a home page at https://www.math.ucla.edu/~tao/

Professor for AI/ML Methods in Tübingen. Posts about Probabilistic Numerics, Bayesian ML, AI for Science. Computations are data, Algorithms make assumptions.

Professor of Machine Learning, University of Cambridge, academic lead of ai@cam, Accelerate Science, author of The Atomic Human, proceedings editor for PMLR.

Research Scientist at Google DeepMind

Centre for AI Fundamentals

ELLIS Manchester

Foundational AI research

www.ai-fun.manchester.ac.uk

ellismcr.org

ellis.eu

www.manchester.ac.uk

Probabilistic machine learning and its applications in AI, health, user interaction.

@ellisinstitute.fi, @ellis.eu, fcai.fi, @aifunmcr.bsky.social

PhD @ University of Manchester.

IIT Guwahati'22.

dibyakanti.github.io

Transactions on Machine Learning Research (TMLR) is a new venue for dissemination of machine learning research

https://jmlr.org/tmlr/

Senior Staff Research Scientist @Google DeepMind, previously Stats Prof @Oxford Uni - interested in Computational Statistics, Generative Modeling, Monte Carlo methods, Optimal Transport.

Associate Professor at University of Pennsylvania

Harvard Professor.

ML and AI.

Co-director of the Kempner Institute.

https://shamulent.github.io

Lecturer (Assistant Professor) at University of Liverpool

Personal Website: https://procheta.github.io/

https://sites.google.com/view/kriznakumar/ Associate professor at @ucdavis

#machinelearning #deeplearning #probability #statistics #optimization #sampling

full-time ML theory nerd, part-time AI-non enthusiast

something new // Gemini RL+inference @ Google DeepMind // Conversational AI @ Meta // RL Agents @ EA // ML+Information Theory @ MIT+Harvard+Duke // Georgia Tech PhD // زن زندگی آزادی

📍{NYC, SFO, YYZ}

🔗 https://beirami.github.io/

Senior Research Scientist at Google DeepMind. I ∈ Optimization ∩ Machine Learning. Fan of IronMaiden🤘.Here to discuss research 🤓

Cofounder & CTO @ Abridge, Raj Reddy Associate Prof of ML @ CMU, occasional writer, relapsing 🎷, creator of d2l.ai & approximatelycorrect.com