TOMORROW Linguistics Careercast: AI Con

August 16 | 10a–12:30p PT

Join host Laurel Sutton with guests for a deep dive into The AI Con by Emily Bender & Alex Hanna. We’ll cut through Big Tech’s GenAI hype & unpack what linguists really need to know. thecon.ai

Register: www.lsadc.org/ev_calendar_...

15.08.2025 14:22 — 👍 25 🔁 11 💬 0 📌 1

AI Eroded Doctors’ Ability to Spot Cancer Within Months in Study

Artificial intelligence, touted for its potential to transform medicine, led to some doctors losing skills after just a few months in a new study.

Stop offloading cognitive tasks to "generative AI." Stop using systems w/ "AI" features either inextricably woven through them or prominently displayed at the top to nudge you into their use. Stop *Designing* "AI" tools & integrations that way. Stop building or using "AI" like this. Fucking Stop it.

15.08.2025 04:44 — 👍 1695 🔁 668 💬 24 📌 52

Google users who encounter an AI summary are less likely to click on links to other websites than users who do not see one. Users who encountered an AI summary clicked on a traditional search result link in 8% of all visits. Those who did not encounter an AI summary clicked on a search result nearly twice as often (15% of visits).

Striking: when Google shows an AI overview for a search query, the number of clicks drops by almost half

From a new study by @pewresearch.org

23.07.2025 05:12 — 👍 230 🔁 70 💬 23 📌 5

What will your impact be? There is the one thing, above all else, that young (and older) people can do today to make an immediate and lasting impact on the world:

Choose to commit your life’s work to the big challenges - like climate action, biodiversity or long COVID.

01.07.2025 13:00 — 👍 9 🔁 1 💬 1 📌 1

Fascinating chart from @rutgerbregman.com’s new book about moral ambition. It seems many of the rich have an inkling that they make money doing things that provide no benefit to society.

Also, in this media era that’s immersing society in bullshit news, the journalism number is notable.

25.05.2025 13:30 — 👍 191 🔁 51 💬 9 📌 9

Considerations during the panel on opportunities and challenges of so-called AI:

AI is often compared to a calculator or a sophisticated helper tool for boring or exhausting tasks, but it also takes away a lot of nice creative work (text writing). 2/2

#SciSoc2025 #EMBL #InScienceWeTrust

16.06.2025 16:40 — 👍 0 🔁 1 💬 1 📌 0

Lucio Corsi engages with fans below in the pit

Lucio Corsi plays piano (and guitar and harmonica)

#LucioCorsi is an Italian 🇮🇹 rock star who performed with an expanded band on 6/21 in Rome - for over two hours he commanded the enormous stage and crowd with the purity of his unique artistry and rapport with his band. ❤️🎼🎸🎹⭐️ A poetic review from Giovanni Di Stefano: www.rollingstone.it/musica/live/...

23.06.2025 07:07 — 👍 7 🔁 3 💬 0 📌 0

Hours after Putin spoke to Trump

And a few days after the US announced it would cut supplies of air defense to Ukraine

04.07.2025 05:18 — 👍 2107 🔁 1030 💬 100 📌 52

A life-changing experience: 18 months of LongCOVID with no cure in sight. I have never fully recovered after my first COVID infection on Dec 10th, 2023 and would describe my daily power levels at 30-40% of my earlier "me" - on good days.

10.06.2025 13:00 — 👍 79 🔁 25 💬 1 📌 2

Help Sheet: Resisting AI Mania in Schools

K-12 educators are under increasing pressure to use—and have students use—a wide range of AI tools. (The term “AI” is used loosely here, just as it is by many purveyors and boosters.) Even those who envision benefits to schools of this fast-evolving category of tech should approach the well-funded AI-in-education campaign with skepticism and caution. Some of the primary arguments for teachers actively using AI tools and introducing students to AI as early as kindergarten, however, are questionable or fallacious. What follows are four of the most common arguments and rebuttals with links to sources. I have not attempted balance, in part because so much pro-AI messaging is out there and discussion of risks and costs is often minimized in favor of hope or resignation. -ALF

Argument: “Schools need to prepare students for the jobs of the future.”

The skills employers seek haven’t changed much over the decades—and include a lot of “soft skills” like initiative, problem-solving, communication, and critical thinking.

Early research is showing that using generative AI can degrade these key skills:

An MIT study showed adults using chatGPT to help write an essay “had the lowest brain engagement and ‘consistently underperformed at neural, linguistic, and behavioral levels.’” Critically, “ChatGPT users got lazier with each subsequent essay, often resorting to copy-and-paste by the end of the study.”

A business school found those who used AI tools often had worse critical thinking skills “mediated by increased cognitive offloading. Younger participants exhibited higher dependence on AI tools and lower critical thinking scores.”

Another study revealed those using “ChatGPT engaged less in metacognitive activities…For instance, learners in the AI group frequently looped back to ChatGPT for feedback rather than reflecting independently. This dependency not only undermines critical thinking but also risks long-term skill stagnation.” …

Argument: “AI is a tool, just like a calculator.”

Calculators don’t provide factually wrong answers, but AI tools have. Last year, Google’s AI search returned, among other falsehoods, that cats have gone to the moon, that Barack Obama is Muslim, and that glue goes on pizza. Even though AI tools have improved and are expected to keep improving, children in schools shouldn’t be used as tech firms’ guinea pigs for undertested, unregulated products while AI firms engage elected officials in actively resisting regulation.

Calculators don’t provide dangerous, even deadly feedback. In one study, a “chatbot recommended that a user, who said they were recovering from addiction, take a ‘small hit’ of methamphetamine” because, it said, it’s “‘what makes you able to do your job to the best of your ability.’” Users have received threatening messages from chatbots.

Calculators don’t pose mental health risks because they aren’t potentially addictive or designed to encourage repeated use. They don’t flatter, direct, or manipulate. Chatbots have been designed this way—and this has led to dreadful mental health outcomes for some, including users in a New York Times report. Alleging a chatbot encouraged their teen to die by suicide, parents in Florida filed a lawsuit against its maker.

Calculators don’t lie. Chatbots, however, have misled users. Writer Amanda Guinzberg shared screenshots of interactions with one that she asked to describe several of her essays. It spewed out invented material, showing the chatbot hadn’t actually accessed and processed the essays. After much prodding, it “admitted” it had only acted as though it had done that requested work, spit out mea culpas—and went on to invent or “lie” again.

Calculators can’t be used to spread propaganda. AI tools, though, including those meant for schools, should worry us. Law professor Eric Muller’s back-and-forth with SchoolAI’s “Anne Frank” character showed his “helluva time trying to get her to say a bad word about Nazis.” In this er…

Argument: “AI won’t replace teachers, but it will save them time and improve their effectiveness.”

Adding edtech does not necessarily save teachers time. A recent study found that learning management systems sold to schools over the past decade-plus as time-savers aren’t delivering on making teaching easier. Instead, they found this tech (e.g. Google Classroom, Canvas) is often burdensome and contributes to burnout. As one teacher put it, it “just adds layers to tasks.”

“Extra time” is rarely returned to teachers. AI proponents argue that if teachers use AI tools to grade, prepare lessons, or differentiate materials, they’ll have more time to work with students. But there are always new initiatives, duties, or committee assignments—the unpaid work districts rely on—to suck up that time. In a culture of austerity and with a USDOE that is cutting spending, teachers are likely to be assigned more students. When class sizes grow, students get less attention, and positions can be cut.

AI can’t replace what teachers do, but that doesn’t mean teachers won’t be replaced. Schools are already doing it: Arizona approved a charter school in which students spend mornings working with AI and the role of teacher is reduced to “guide.” Ed tech expert Neil Selwyn argues those in “industry and policy circles…hostile to the idea of expensively trained expert professional educators who have [tenure], pension rights and union protection… [welcome] AI replacement as a way of undermining the status of the professional teacher.”

Tech firms have been selling schools on untested products for years. Technophilia has led to students being on screens for hours in school each week even when their phones are banned. Writer Jess Grose explains, “Companies never had to prove that devices or software, broadly speaking, helped students learn before those devices had wormed their way into America’s public schools.” AI products appear to be no different.

Efficiency is not effectiveness. “Speed a…

Argument: “Students are already using AI, so we have to teach them ethical use.”

If schools want ethical students, teach ethics. More students are using AI tools to cheat, an age-old problem they make much easier. This won’t be addressed by showing students how to use this minute’s AI, an argument implying students don’t know what plagiarism is (solved by teaching about plagiarism) or understand academic integrity (solved by teaching and enforcing its bounds)—or that teachers create weak assignments or don’t convey purpose. The latter aren’t solved by attempting to redirect students motivated and able to cheat.

Students can be educated on the ethics of AI without encouraging use of AI tools. They can be taught, as part of media literacy and social media safety programs, about AI’s potential and applications as well as how it can enable predation, perpetuate bias, and spread disinformation. They should be taught about the risks of AI and its various social, economic, and environmental costs. Giving a nod to these issues while integrating AI throughout schools sends a strong message: the schools don’t really care and neither should students.

Children can’t be expected to use AI responsibly when adults aren’t. Many pushing schools to embrace AI don’t know much about it. One example: Education Secretary Linda McMahon, who said kindergartners should be taught A1 (a steak sauce). The LA Times introduced a biased and likely politically-motivated AI feature. The Chicago Sun-Times published a summer reading list including nonexistent books—yet teachers are told to use the same tools to do similar work. Educators using AI to cut corners can strike students as hypocritical.

The many costs of AI call into question the possibility of ethical AI use. These include:

Energy - AI data centers need huge amounts of water as coolant as well as electricity, pulling these resources from their communities—which tend to be lower-income—straining the grid, and raising household costs. Thi…

I put together a short document for those wary of the AI mania in schools. Four of the main arguments for teachers using AI tools and introducing kids to AI as early as kindergarten are addressed--with thoughts and rebuttals and links to sources. Hope it's helpful.

drive.google.com/file/d/1urCM...

24.06.2025 22:37 — 👍 161 🔁 71 💬 11 📌 3

Paradoxes of Media and Information Literacy

Currently reading Paradoxes of Media and Information Literacy: The Crisis of Information by @juttahaider.bsky.social & @olofsundin.bsky.social

30.06.2025 15:25 — 👍 3 🔁 2 💬 2 📌 0

United Against Manipulation

When states deliberately spread disinformation, a society's cohesion is attacked and, in the worst case, destroyed. How can we protect ourselves? What is needed is an integrated appr...

Countering illegitimate foreign influence attempts requires an overall strategy that integrates all the tools and actors that can protect us, from the preventive protection of societal values to a swift response to incidents. In the new @cemas.io paper I examine what that can look like:

25.06.2025 16:20 — 👍 56 🔁 23 💬 4 📌 0

United Against Manipulation

When states deliberately spread disinformation, a society's cohesion is attacked and, in the worst case, destroyed. How can we protect ourselves? What is needed is an integrated appr...

New research paper: CeMAS senior researcher @leafruehwirth.bsky.social develops an integrated model for protecting democratic societies against foreign influence attempts, known as “Foreign Information Manipulation and Interference” (FIMI):

25.06.2025 07:58 — 👍 60 🔁 22 💬 3 📌 2

The Republican party seeks only power, personal wealth, oppression, cruelty – and to continue inflicting pain, suffering, and death.

I no longer recognize my country nor nearly half of my own species.

03.07.2025 19:51 — 👍 665 🔁 167 💬 31 📌 10

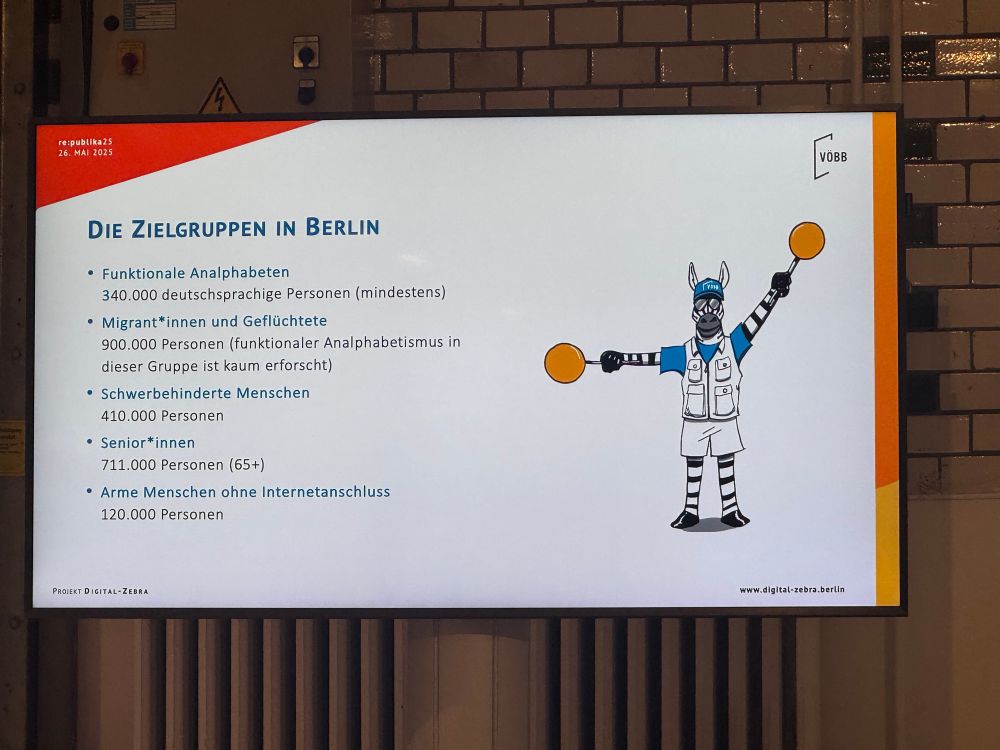

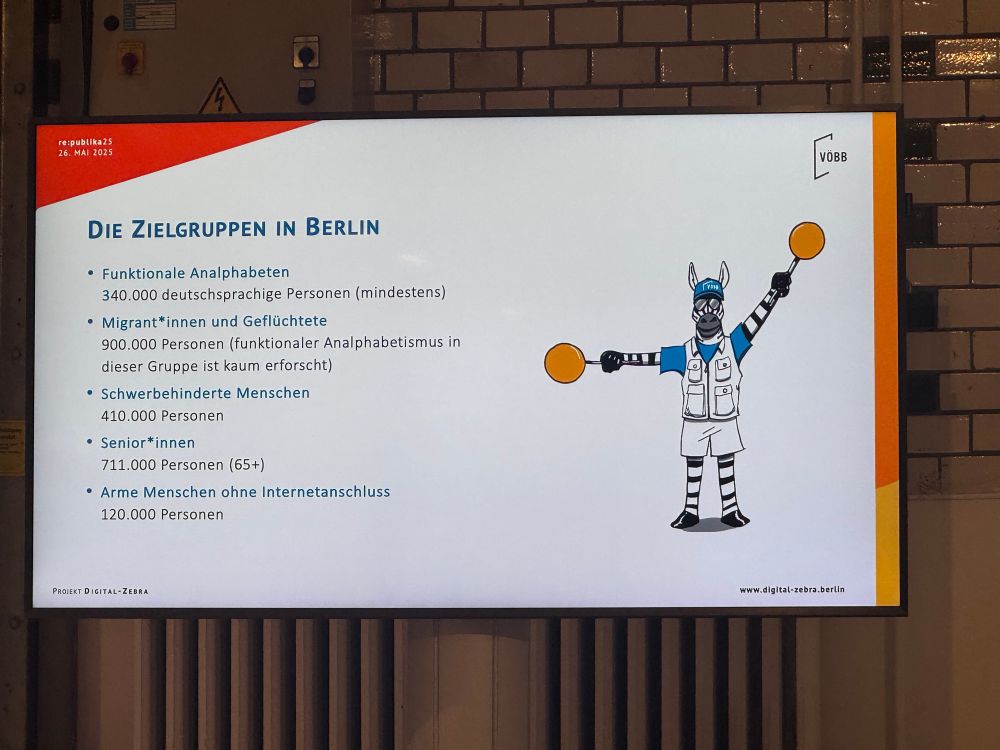

Jacob Svaneeng presenting (screen on the left)

re:publika25

26 MAY 2025

VOBB

THE TARGET GROUPS IN BERLIN

• Functionally illiterate people: 340,000 German-speaking people (at least)

• Migrants and refugees: 900,000 people (functional illiteracy in this group has barely been researched)

• People with severe disabilities: 410,000 people

• Senior citizens: 711,000 people (65+)

• Poor people without an internet connection: 120,000 people

Berlin's public libraries are building a non-commercial "IT support" service for all city residents. - A great example of “digital equal opportunity” and of the impact of #Bibliotheken. - Applause 👏 for our Berlin colleague Jacob Svaneeng. #rp25

26.05.2025 17:02 — 👍 70 🔁 24 💬 1 📌 1

"Our security lies in openness"

How cuts in the US threaten a central medical database: library scientist Miriam Albers on Europe's complacency and the initiative to build a European PubMed now. On the Wiarda blog: www.jmwiarda.de/2025/05/26/u...

26.05.2025 08:38 — 👍 53 🔁 26 💬 2 📌 5

Two-part Mimikama graphic. On the left, a blue-tinted portrait of Andre Wolf in a black Mimikama T-shirt with clasped hands. On the right, on a red background, white text at the top reads: “ChatGPT is not AGI”.

Below it, a large, bold main statement:

“ChatGPT is a master of imitation, but not of intelligence.”

An explanatory text in small white type follows:

“And that is exactly why we need that calm, accessible explanation of the various facets of artificial ‘intelligence’. Because many people are afraid of this topic. They even have multiple fears. Fear of losing control and of opacity. Fear of being disempowered or of losing their job. Alienation, surveillance, data misuse and their own dehumanization in the face of AI. And, not least, dystopia.”

Bottom left: “Image: Nadja Buechler”.

Why ChatGPT is not real artificial intelligence, but often seems like it and is misjudged by a great many people.

Language models like ChatGPT are vastly overrated.

Plus an explanation of the difference between LLM and AGI.

More 👇

14.06.2025 10:29 — 👍 116 🔁 42 💬 5 📌 2

HAPPENING NOW: A federal judge is set to hold a hearing on Governor Gavin Newsom’s request for a temporary restraining order that would bar the Trump administration’s use of the state national guard amid protests against immigration raids in LA.

Hearing starting now before Judge Breyer

🧵⬇️

12.06.2025 20:31 — 👍 3438 🔁 799 💬 49 📌 88

Let’s start with digital feudalism. In medieval feudalism, peasants worked land they didn’t own, under rules they didn’t choose, for lords who profited from their labour.

Today, we work for attention, on platforms we don’t own, governed by algorithms we don’t control.

16.05.2025 09:03 — 👍 149 🔁 35 💬 3 📌 2

🧵 I’ve talked a lot about disordered discourse, conspiracies, polarisation, denialism. But maybe the better question is: why does this keep happening? Why does our shared reality keep fracturing? What are the drivers I talk about as a dimension of disordered discourse?

16.05.2025 09:01 — 👍 665 🔁 247 💬 37 📌 33

YouTube video by Cambridge Disinformation Summit

Bellingcat CEO Eliot Higgins, on how disordered discourse is destroying democracy

I've just come back from the Cambridge Disinformation Summit where I gave the opening keynote, titled "Demanufacturing Consent - How Disordered Discourse is Destroying Democracy", featuring everything from Fake Hooves to Jackson Hinkle being the worst.

www.youtube.com/watch?v=D-FV...

25.04.2025 19:17 — 👍 1885 🔁 571 💬 74 📌 76

Musk and Zuckerberg’s notion of “freedom of speech” infringes on our rights to freedom of speech. Great discussion with @attorneynora.bsky.social at the Cambridge Disinformation Summit

19.05.2025 19:32 — 👍 9 🔁 6 💬 0 📌 0

Let's not kid ourselves, the best thing about this #esc is Hazel Brugger.

17.05.2025 20:37 — 👍 96 🔁 12 💬 6 📌 0

god i love host Hazel Brugger so much #eurovision

17.05.2025 20:38 — 👍 1275 🔁 220 💬 19 📌 7

European Commission assessment: Brussels: TikTok violates EU law

According to a preliminary assessment by the European Commission, the online platform TikTok violates EU digital rules because of non-transparent advertising.

Far too late, but better than never: TikTok violates EU law. But democracy has been damaged, especially in Romania. We need better algorithms and an end to manipulation by the platforms, in favor of real user choices. The fight goes on! www.zdf.de/nachrichten/...

15.05.2025 10:02 — 👍 34 🔁 12 💬 1 📌 0

Cosmologist, pilot, author, connoisseur of cosmic catastrophes. TEDFellow, CIFAR Azrieli Global Scholar. Domain verified through my personal astrokatie.com website. She/her. Dr.

Personal account; not speaking for employer or anyone else.

Book: https://thecon.ai

Web: https://faculty.washington.edu/ebender

Historian | Author of ‘Utopia for Realists’ (2014), ‘Humankind’ (2020) and ‘Moral Ambition’ (2025) | Co-founder of The School for Moral Ambition | moralambition.org | rutgerbregman.com

Director of Stadtbüchereien Düsseldorf (the Düsseldorf city libraries). Enthusiastic about people, encounters and libraries. Likes running, cycles a lot, travels with curiosity, and with ABBA in his ears. - Private posts & "public #librarylife".

Twitter archive 2012-2023.

https://schweringsblog.wordpress.com

FaMI (media and information services specialist) at a Bavarian university library, part-time B.A. in information management at HS Hannover, here in a private capacity, nice, polite, open to new things, cat lover

Founder & PI @aial.ie. Assistant Professor of AI, School of Computer Science & Statistics, @tcddublin.bsky.social

AI accountability, AI audits & evaluation, critical data studies. Cognitive scientist by training. Ethiopian in Ireland. She/her

letterpress enthusiast | doctoral candidate

• researching the datafication & platformization of education

• quantum sufficit, pro re nata

Professor in Information Studies at LU. “Paradoxes of Media and Information Literacy: The Crisis of Information” 2022 with J Haider http://tinyurl.com/2p9f63mu

Social Study of Information & Environmental Communication | SSLIS, Swedish School of Library & Information Science | Posts in English, Swedish, German

Journalist, speaker, monitoring. Works on right-wing extremism, hate speech, disinformation. Formerly: Belltower.News, Amadeu Antonio Stiftung. Now: CeMAS.io

U.S. Senator, Massachusetts. She/her/hers. Official Senate account.

https://substack.com/@senatorwarren

Official account of the Max Planck Society. Devoted to basic #research in #physics #astronomy #chemistry #biology #earthsciences #materialscience #mathematics #socialsciences and the #humanities; Imprint: https://www.mpg.de/imprint

Junior professor of philosophy of technology & information, University of Stuttgart

Co-initiator of #IchBinHanna | https://amreibahr.net | Newsletter #ArbeitInDerWiss: https://steady.page/de/arbeit-in-der-wissenschaft/ | #Top40unter40

☕️ #TeamKaffee | 🏃🏻♀️ #TeamLaufen

Founder and creative director of Bellingcat and director of Bellingcat Productions BV. Author of We Are Bellingcat.

Sociologist. Author. Professor. Roosevelt Institute Fellow. Expert on families, schools, kids, privilege, and power. Bylines in NYT, WaPo, MSNBC, Atlantic, etc.

"Other countries have social safety nets. The US has women."

www.jessicacalarco.com

Historian of East (Central) Europe at ZZF Potsdam

President of the German-Ukrainian Society (DUG)

Subscribe to my newsletter "After empire: Reconfiguring Eastern Europe" https://efdavies.substack.com

#StandWithUkraine

#FBPE #NAFO Standing up for justice and humanity in the world.

Live and let live.

Chief reporter in the NDR/WDR investigative unit.

Contact via Signal.org: markusgrill.23

https://www.ndr.de/nachrichten/investigation/grill318.html

markusgrill.eu

Author, journalist, political scientist. Letters of action to civil society: Saturdays in the newsletter www.jeannette-hagen.de; #Sport #Gesundheit #Gesellschaft, #Politik, @tagesspiegel.de /

Journalist | ZDF editor for "ZDF Magazin Royale" and "heute-show online" | Not a ZDF account | http://www.christian-deker.de

📍Hamburg 🏳🌈🌱(he/him)
