IPA: An Information-Reconstructive Input Projection Framework for Efficient Foundation Model Adap...

Yuan Yin, Shashanka Venkataramanan, Tuan-Hung Vu, Andrei Bursuc, Matthieu Cord

Action editor: Ofir Lindenbaum

https://openreview.net/forum?id=aLmQeZx2pR

#projector #adaptation

03.02.2026 05:19 — 👍 5 🔁 2 💬 0 📌 0

The unreasonable magic of simplicity!

Meet DrivoR (Driving on Registers): our latest end2end autonomous driving model.

We teared down complex dependencies & modules from current models to

obtain a pure Transformer-based SOTA driving agent (NAVSIM v1 & v2, HUGSIM).

Find out more 👇

09.01.2026 17:02 — 👍 4 🔁 1 💬 0 📌 0

7/ 📄 Read the paper & get the code: valeoai.github.io/driving-on-r...

Congratulations to the whole team!

09.01.2026 17:00 — 👍 1 🔁 0 💬 0 📌 0

6/ Furthermore, this scoring architecture allowed us to tweak the agent's behavior.

We were able to induce a more passive, safer driving style—which proved important for reaching SOTA performance on the rigorous NAVSIM-v2 benchmark. 🛡️

09.01.2026 16:57 — 👍 1 🔁 0 💬 1 📌 0

5/ Given the success of trajectory scoring methods (like GTRS), we dove deep into the scoring module.

Thanks to the wizardry of Yihong Xu, we discovered that disentangling the tokens used for generation from those used for scoring was key.

09.01.2026 16:56 — 👍 1 🔁 0 💬 1 📌 0

4/ This mimics human driving intuition! 🧠

We pay max attention to the road ahead (front camera), while only occasionally glancing at the rear (back camera).

Visualizing the attention maps confirms this: front tokens specialize; back tokens collapse to a single pattern.

09.01.2026 16:56 — 👍 1 🔁 0 💬 1 📌 0

3/ These registers act as "scene-tokens" and demonstrate signs of learned compression.

Cosine similarity analysis reveals high differentiation for the front camera, while representations progressively "collapse" as we move toward the back camera.

09.01.2026 16:56 — 👍 1 🔁 0 💬 1 📌 0

2/ We explored specific reasons to use a pre-trained ViT as image encoder.

We imbue DINOv2 with registers LoRA-finetuned on driving data, reducing the # of patch tokens over 250x using camera aware register tokens.

This efficiency could impact future works on VLMs in driving

09.01.2026 16:55 — 👍 2 🔁 0 💬 1 📌 0

1/🧵 Q: Can we have both a simple and SOTA architecture in autonomous driving?

R: Yes! 😍

Introducing Driving on Registers (DrivoR):

a pure Transformer backbone that achieves SOTA results in NAVSIM v1 / v2 and closed-loop HUGSIM evaluation.

Here is how 👇

09.01.2026 16:55 — 👍 10 🔁 4 💬 1 📌 1

AI Seminar Cycle – Hi! PARIS

Our @spyrosgidaris.bsky.social is speaking this morning (Wed, Dec 10th, 11:00 am Paris time) about "Latent Representations for Better Generative Image Modeling" in the Hi! PARIS - ELLIS monthly seminar.

The talk will be live-streamed: www.hi-paris.fr/2025/09/26/a...

10.12.2025 09:15 — 👍 7 🔁 2 💬 0 📌 0

Perfect timing for this keynote on open, re-purposable foundation models at #aiPULSE2025

@abursuc.bsky.social taking the stage this afternoon! 👇

04.12.2025 12:14 — 👍 2 🔁 0 💬 0 📌 0

IPA: An Information-Preserving Input Projection Framework for Efficient Foundation Model Adaptation

by: Y. Yin, S. Venkataramanan, T.H. Vu, A. Bursuc, M. Cord

📄: arxiv.org/abs/2509.04398

tl;dr: a PEFT method that improves upon LoRA by explicitly preserving information in the low-rank space

03.12.2025 22:52 — 👍 1 🔁 0 💬 1 📌 0

Multi-Token Prediction Needs Registers

by: A. Gerontopoulos, S. Gidaris, N. Komodakis

📄: arxiv.org/abs/2505.10518

tl;dr: a simple way to enable multi-token prediction in LLMs by interleaving learnable "register tokens" into the input sequence to forecast future targets.

03.12.2025 22:51 — 👍 1 🔁 0 💬 1 📌 0

Boosting Generative Image Modeling via Joint Image-Feature Synthesis

by: T. Kouzelis, E. Karypidis, I. Kakogeorgiou, S. Gidaris, N. Komodakis

📄: arxiv.org/abs/2504.16064

- tl;dr: improve generation w/ a single diffusion model to jointly synthesize low-level latents & high-level semantic features

03.12.2025 22:51 — 👍 1 🔁 0 💬 1 📌 0

Learning to Steer: Input-dependent Steering for Multimodal LLMs

by: J. Parekh, P. Khayatan, M. Shukor, A. Dapogny, A. Newson, M. Cord

📄: arxiv.org/abs/2508.12815

- tl;dr: steering multimodal LLMs (MLLMs) by training a lightweight auxiliary module to predict input-specific steering vectors

03.12.2025 22:51 — 👍 1 🔁 0 💬 1 📌 0

DINO-Foresight: Looking into the Future with DINO

by E. Karypidis, I. Kakogeorgiou, S. Gidaris, N. Komodakis

📄: arxiv.org/abs/2412.11673

tl;dr: self-supervision by predicting future scene dynamics in the semantic feature space of foundation models (like DINO) rather than generating costly pixels.

03.12.2025 22:50 — 👍 2 🔁 0 💬 1 📌 0

JAFAR: Jack up Any Feature at Any Resolution

by P. Couairon, L. Chambon, L. Serrano, M. Cord, N. Thome

📄: arxiv.org/abs/2506.11136

tl;dr: lightweight, flexible, plug & play upsampler that scales features from any vision foundation model to arbitrary resolutions w/o needing high-res supervision

03.12.2025 22:50 — 👍 1 🔁 0 💬 1 📌 0

Check out our works at @NeurIPSConf #NeurIPS2025 this week!

We present 5 full papers + 1 workshop about:

💡 self-supervised & representation learning

🖼️ generative image models

🧠 finetuning and understanding LLMs & multimodal LLMs

🔎 feature upsampling

valeoai.github.io/posts/neurip...

03.12.2025 22:50 — 👍 5 🔁 1 💬 1 📌 1

We fermented our thoughts on understanding LoRA & ended up with IPA🍺

We found an asymmetry in LoRA: during training, A changes little & B eats most task-specific adaptation.

So we pre-train A to preserve information before adaptation w/ excellent parameter efficiency #NeurIPS2025 #CCFM 👇

02.12.2025 11:16 — 👍 7 🔁 1 💬 1 📌 0

1/Serve your PEFT with a fresh IPA!🍺

Finetuning large models is cheaper thanks to LoRA, but is its random init optimal?🤔

Meet IPA: a feature-aware alternative to random projections

#NeurIPS2025 WS #CCFM Oral+Best Paper

Work w/

S. Venkataramanan @tuanhungvu.bsky.social @abursuc.bsky.social M. Cord

🧵

02.12.2025 11:11 — 👍 12 🔁 2 💬 1 📌 2

That was a cool project brillantly led by Ellington Kirby during his internship.

We were curious if we could train diffusion models on sets of point coordinates.

For images, this is a step towards spatial diffusion, with pixels reorganizing themselves, instead of diffusing in rgb values space only.

26.11.2025 13:19 — 👍 3 🔁 2 💬 0 📌 0

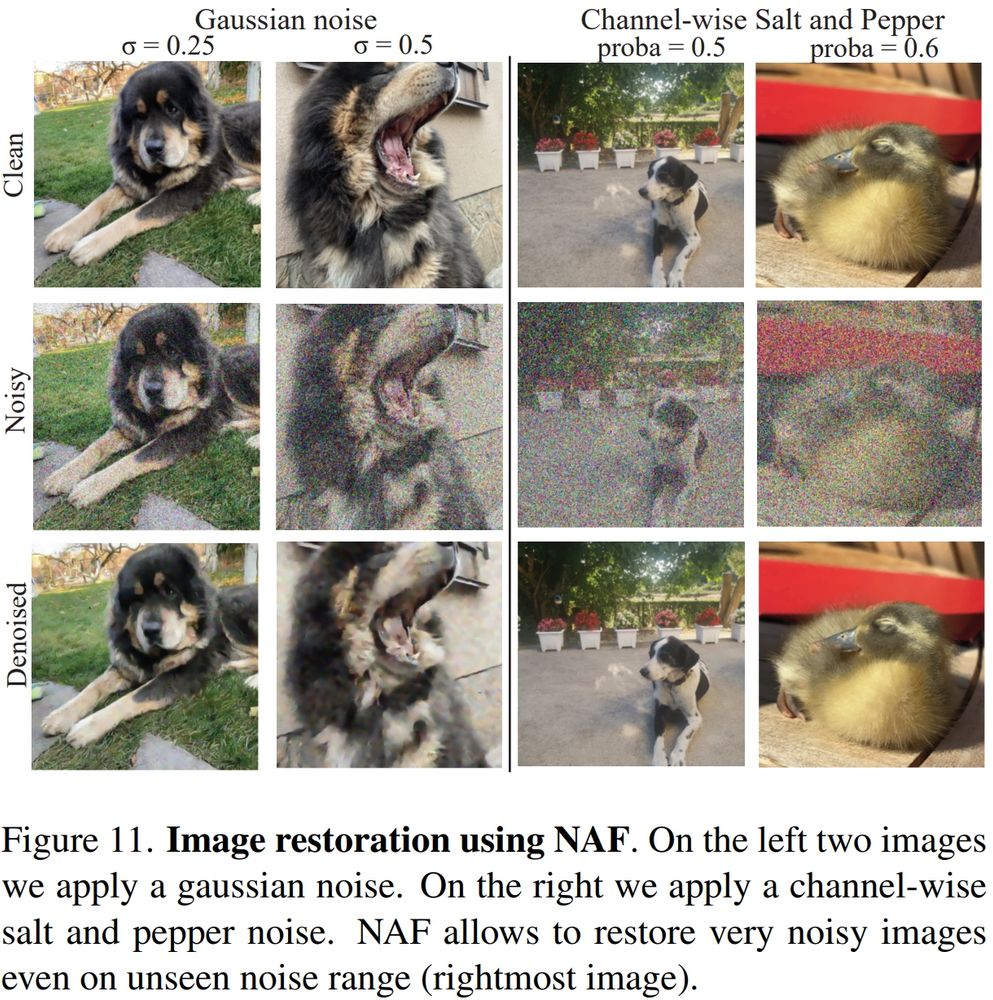

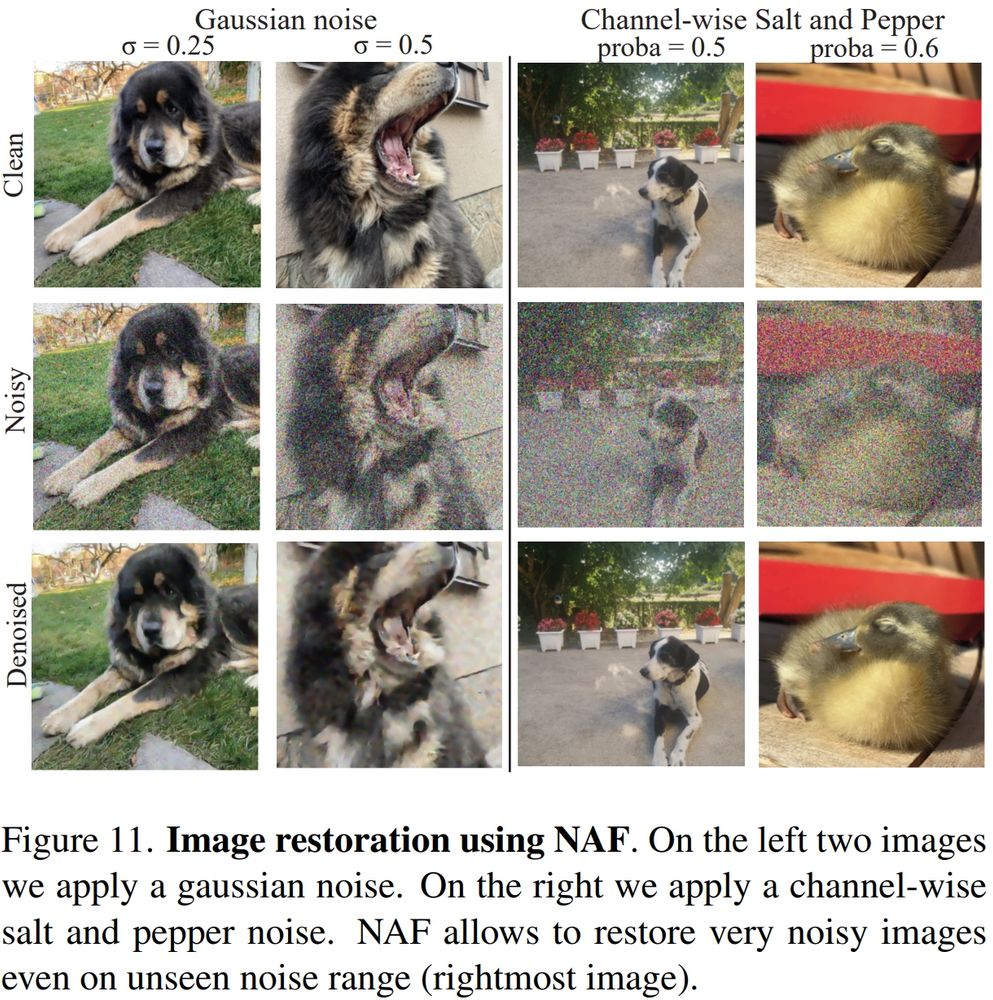

Check out NAF: an effective ViT feature upsampler to produce excellent (and eye-candy) pixel-level feature maps.

NAF outperform both VFM-specific upsamplers (FeatUp, JAFAR) and VFM-agnostic methods (JBU, AnyUp) over multiple downstream tasks 👇

25.11.2025 18:36 — 👍 14 🔁 2 💬 1 📌 0

📢 NAF is fully open-source!

The repo contains:

✅ Pretrained model

✅ Example notebooks

✅ Evaluation and training codes

Check it out & ⭐ the repo: github.com/valeoai/NAF

25.11.2025 10:44 — 👍 1 🔁 0 💬 1 📌 0

🛠️ Already have a complex, pre-trained pipeline?

If you are using bilinear interpolation anywhere, NAF acts as a strict drop-in replacement.

Just swap it in. No retraining required. It’s literally free points for your metrics.📈

25.11.2025 10:44 — 👍 3 🔁 1 💬 1 📌 0

🎯 NAF is versatile!

Not just zero-shot feature upsampling: it shines on image restoration too, delivering sharp, high-quality results across multiple applications. 🖼️

25.11.2025 10:44 — 👍 1 🔁 0 💬 1 📌 0

🔬 NAF meets theory.

Under the hood, NAF learns an Inverse Discrete Fourier Transform: revealing a link between feature upsampling, classical filtering, and Fourier theory.

✨ Feature upsampling is no longer a black box

25.11.2025 10:44 — 👍 1 🔁 0 💬 1 📌 0

💡 NAF is a super simple, universal architecture that reweights any features using only the high-resolution image:

🧬 Lightweight image encoder (600k params)

🔁 Rotary Position Embeddings (RoPE)

🔍 Cross-Scale Neighborhood Attention

First fully learnable VFM-agnostic reweighting!✅

25.11.2025 10:44 — 👍 1 🔁 0 💬 1 📌 0

🔥 NAF sets a new SoTA!

It beats both VFM-specific upsamplers (FeatUp, JAFAR) and VFM-agnostic methods (JBU, AnyUp) across downstream tasks:

- 🥇Semantic Segmentation

- 🥇Depth Estimation

- 🥇Open-Vocabulary

- 🥇Video Propagation, etc.

Even for massive models like: DINOv3-7B !

25.11.2025 10:44 — 👍 1 🔁 0 💬 1 📌 0

kashyap7x.github.io

Postdoc at NVIDIA. Previously at the University of Tübingen and CMU. Robot Learning, Autonomous Driving.

Principal research scientist at Naver Labs Europe, I am interested in most aspects of computer vision, including 3D scene reconstruction and understanding, visual localization, image-text joint representation, embodied AI, ...

🇪🇺 ELLIOT is a Horizon Europe project developing the next generation of open and trustworthy Multimodal Generalist Foundation Models — advancing open, general-purpose AI rooted in European values. 🤖📊

PhD student at Sorbonne University

International Conference on Learning Representations https://iclr.cc/

Assistant Professor at Ecole Polytechnique, IP_Paris// Before: Oxford_VGG, Inria Grenoble // multimodality, genAI enthusiast // happy mum+dog_mum // opinions: mine

Computer Vision research group @ox.ac.uk

Anti-cynic. Towards a weirder future. Reinforcement Learning, Autonomous Vehicles, transportation systems, the works. Asst. Prof at NYU

https://emerge-lab.github.io

https://www.admonymous.co/eugenevinitsky

RL Research Engineer, working in autonomous driving at Valeo

github.com/vcharraut

AI & CV scientist, CEO at @kyutai-labs.bsky.social

Professor, University of Tübingen @unituebingen.bsky.social.

Head of Department of Computer Science 🎓.

Faculty, Tübingen AI Center 🇩🇪 @tuebingen-ai.bsky.social.

ELLIS Fellow, Founding Board Member 🇪🇺 @ellis.eu.

CV 📷, ML 🧠, Self-Driving 🚗, NLP 🖺

Research Scientist at Meta | AI and neural interfaces | Interested in data augmentation, generative models, geometric DL, brain decoding, human pose, …

📍Paris, France 🔗 cedricrommel.github.io

PhD student in visual representation learning at Valeo.ai and Sorbonne Université (MLIA)

PhD Student at Valeo.ai and Telecom Paris