Some text in heuristics/separation of results appear twice? Good post otherwise

14.12.2025 18:38 — 👍 2 🔁 0 💬 1 📌 0

Thank you for coming and for the great discussion!!

07.12.2025 22:58 — 👍 1 🔁 0 💬 1 📌 0

The paper looks cool! And it seems well-written :). I did not expect these exponents in Theorem 4.2 😆

26.10.2025 14:56 — 👍 3 🔁 0 💬 1 📌 0

Very excited to share our preprint: Self-Speculative Masked Diffusions

We speed up sampling of masked diffusion models by ~2x by using speculative sampling and a hybrid non-causal / causal transformer

arxiv.org/abs/2510.03929

w/ @vdebortoli.bsky.social, Jiaxin Shi, @arnauddoucet.bsky.social

07.10.2025 22:09 — 👍 13 🔁 6 💬 0 📌 0

Percepta | A General Catalyst Transformation Company

Transforming critical institutions using applied AI. Let's harness the frontier.

We're finally out of stealth: percepta.ai

We're a research / engineering team working together in industries like health and logistics to ship ML tools that drastically improve productivity. If you're interested in ML and RL work that matters, come join us 😀

02.10.2025 15:35 — 👍 118 🔁 18 💬 7 📌 2

News 🎉 We’re thrilled to announce our final panelist: David Silver!

Don’t miss David and our amazing lineup of speakers—submit your latest RL work to our NeurIPS workshop.

📅 Extended deadline: Sept 2 (AoE)

28.08.2025 19:55 — 👍 3 🔁 2 💬 0 📌 0

Call for Papers | ARLET

A simple, whitespace theme for academics. Based on [*folio](https://github.com/bogoli/-folio) design.

We've extended the deadline for our workshop's calls for papers/ideas! Submit your work by August 29 AoE. Instructions on the website: arlet-workshop.github.io/neurips2025/...

18.08.2025 10:46 — 👍 3 🔁 2 💬 0 📌 0

The OpenReview link for our calls (for papers and ideas) is available, submit here: openreview.net/group?id=Neu...

We look forward to receiving your submissions!

05.08.2025 15:03 — 👍 2 🔁 1 💬 0 📌 0

last year's edition was so much fun I'm really looking forward to this one!! join us in San Diego :))

28.07.2025 18:06 — 👍 4 🔁 0 💬 0 📌 0

Was it recorded? 🤔

19.06.2025 15:08 — 👍 1 🔁 0 💬 1 📌 0

Join us for Nneka's presentation tomorrow! Last talk before the summer break.

09.06.2025 17:43 — 👍 9 🔁 3 💬 0 📌 0

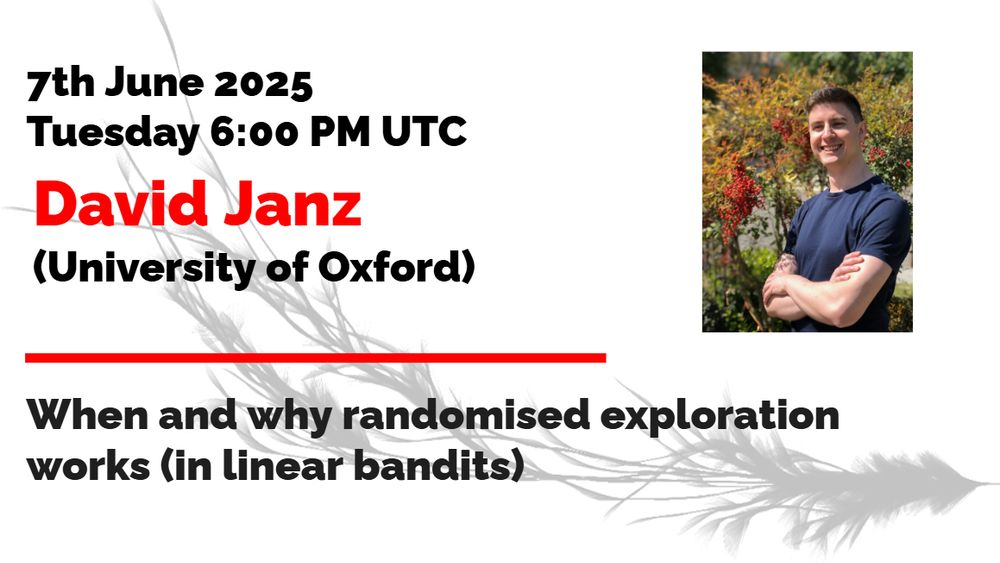

Join us tomorrow for Dave's talk! He will present his recent work on randomised exploration, which received an outstanding paper award at ALT 2025 earlier this year.

02.06.2025 14:55 — 👍 4 🔁 1 💬 0 📌 0

new preprint with the amazing @lviano.bsky.social and @neu-rips.bsky.social on offline imitation learning! learned a lot :)

when the expert is hard to represent but the environment is simple, estimating a Q-value rather than the expert directly may be beneficial. lots of open questions left though!

27.05.2025 07:12 — 👍 18 🔁 3 💬 1 📌 1

Dhruv Rohatgi will be giving a lecture on our recent work on comp-stat tradeoffs in next-token prediction at the RL Theory virtual seminar series (rl-theory.bsky.social) tomorrow at 2pm EST! Should be a fun talk---come check it out!!

26.05.2025 19:19 — 👍 11 🔁 5 💬 1 📌 0

new work on computing distances between stochastic processes ***based on sample paths only***! we can now:

- learn distances between Markov chains

- extract "encoder-decoder" pairs for representation learning

- with sample- and computational-complexity guarantees

read on for some quick details..

1/n

26.05.2025 13:26 — 👍 37 🔁 10 💬 1 📌 0

Discrete Diffusion: Continuous-Time Markov Chains

A tutorial explaining some key intuitions behind continuous time Markov chains for machine learners interested in discrete diffusion models: alternative representations, connections to point processes...

A new blog post with intuitions behind continuous-time Markov chains, a building block of diffusion language models, like @inceptionlabs.bsky.social's Mercury and Gemini Diffusion. This post touches on different ways of looking at Markov chains, connections to point processes, and more.

22.05.2025 15:14 — 👍 22 🔁 5 💬 1 📌 0

oh the inference blog posts are back 🥰

22.05.2025 15:19 — 👍 0 🔁 0 💬 1 📌 0

Mattes Mollenhauer, Nicole M\"ucke, Dimitri Meunier, Arthur Gretton: Regularized least squares learning with heavy-tailed noise is minimax optimal https://arxiv.org/abs/2505.14214 https://arxiv.org/pdf/2505.14214 https://arxiv.org/html/2505.14214

21.05.2025 06:14 — 👍 6 🔁 6 💬 1 📌 1

Later today, Sikata and Marcel will talk about their recent work on oracle-efficient RL with ensembles. Join us!

20.05.2025 15:48 — 👍 6 🔁 4 💬 0 📌 0

Excited to share what I've been up to: bringing text diffusion to Gemini!

Diffusion models are _fast_, and hold immense promise to challenge autoregressive models as the de facto standard for language modeling.

20.05.2025 18:52 — 👍 15 🔁 2 💬 1 📌 0

omg thanks

16.05.2025 00:00 — 👍 0 🔁 0 💬 0 📌 0

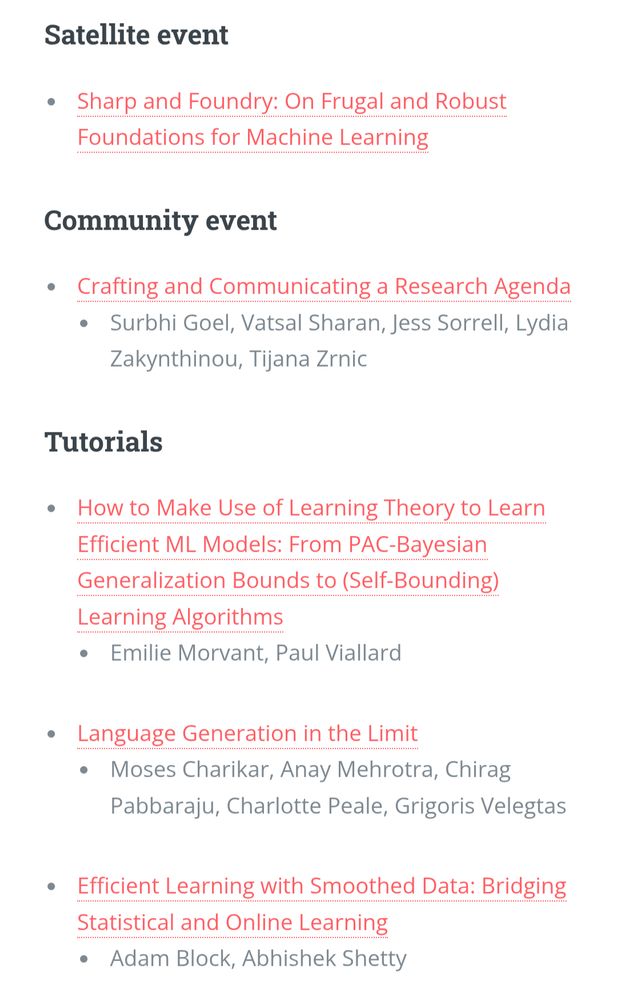

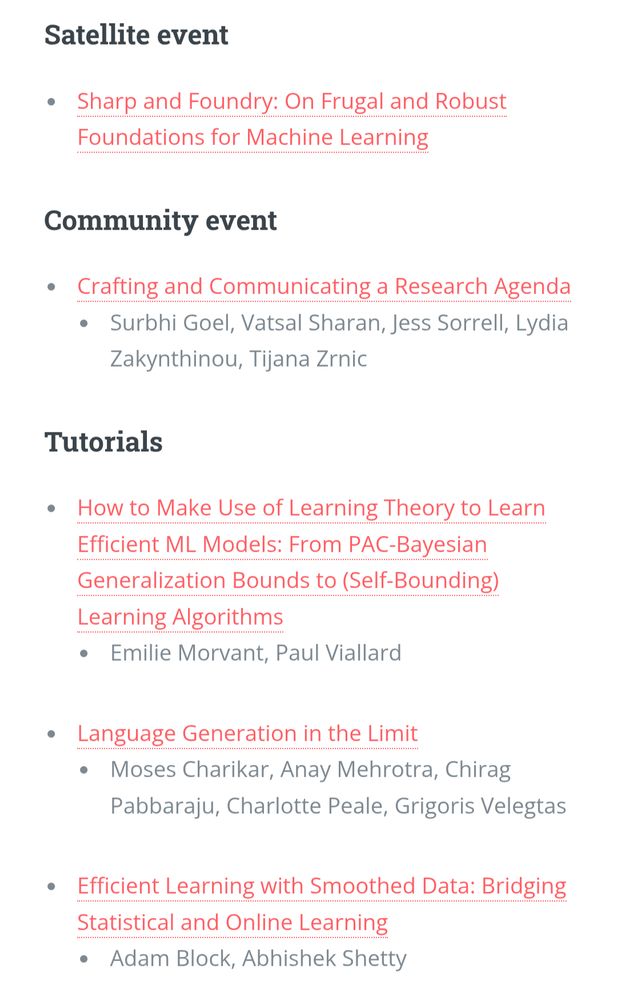

Community events and tutorials, list from the website

Workshops, list from the website

The tutorials, workshops, and community events for #COLT2025 have been announced!

Exciting topics, and impressive slate of speakers and events, on June 30! The workshops have calls for contributions (⏰ May 16, 19, and 25): check them out!

learningtheory.org/colt2025/ind...

10.05.2025 01:51 — 👍 20 🔁 7 💬 2 📌 0

Announcing the first workshop on Foundations of Post-Training (FoPT) at COLT 2025!

📝 Soliciting abstracts/posters exploring theoretical & practical aspects of post-training and RL with language models!

🗓️ Deadline: May 19, 2025

09.05.2025 17:09 — 👍 17 🔁 6 💬 1 📌 1

looking forward to giving a talk at the 2025 GHOST day in the beautiful city of Poznan (Poland) --- hope to see you there this weekend!

06.05.2025 19:06 — 👍 7 🔁 1 💬 1 📌 0

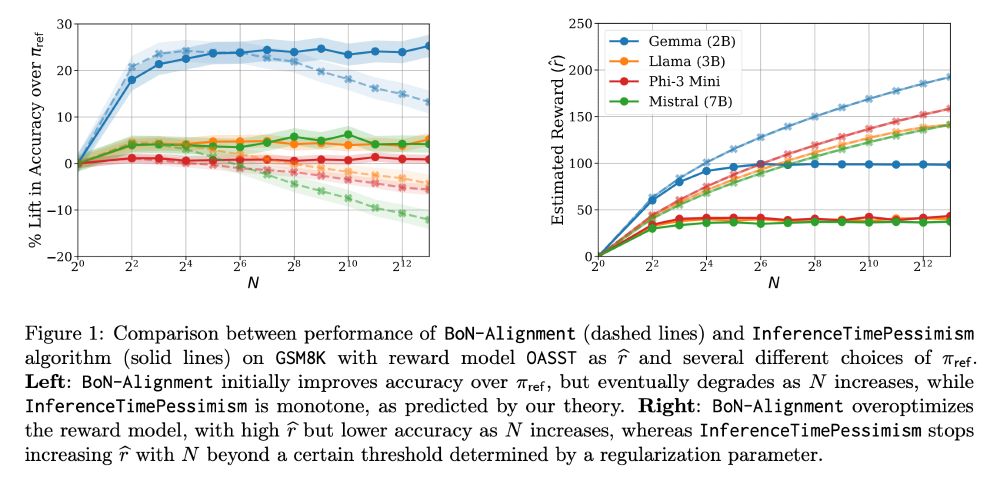

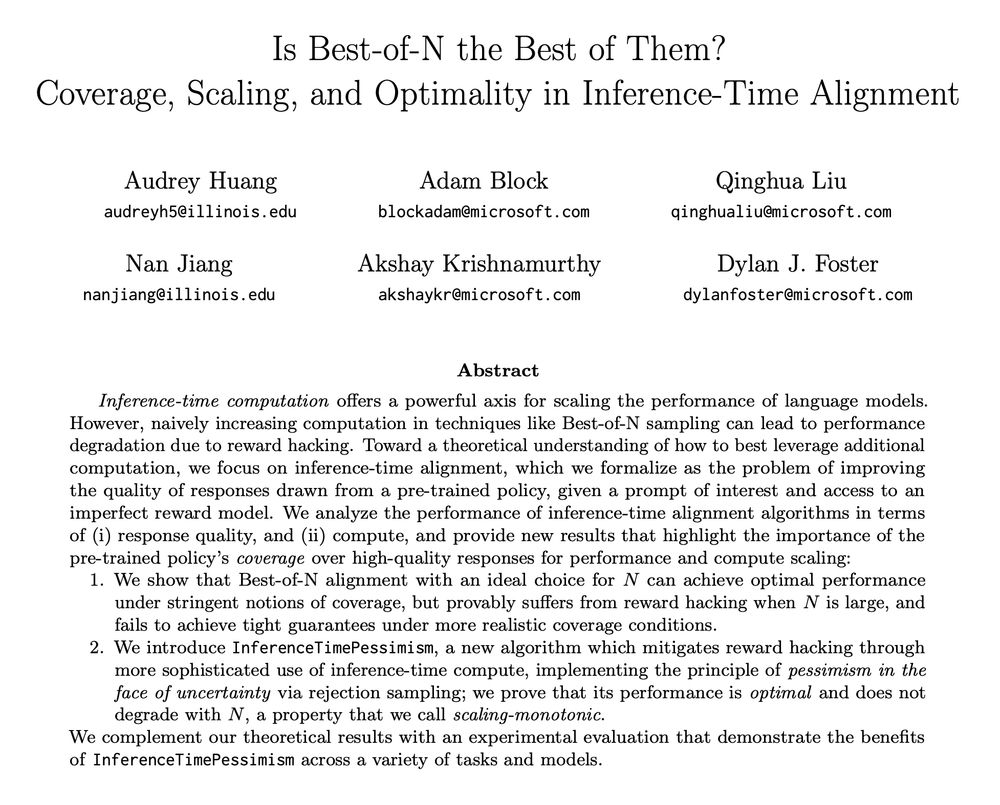

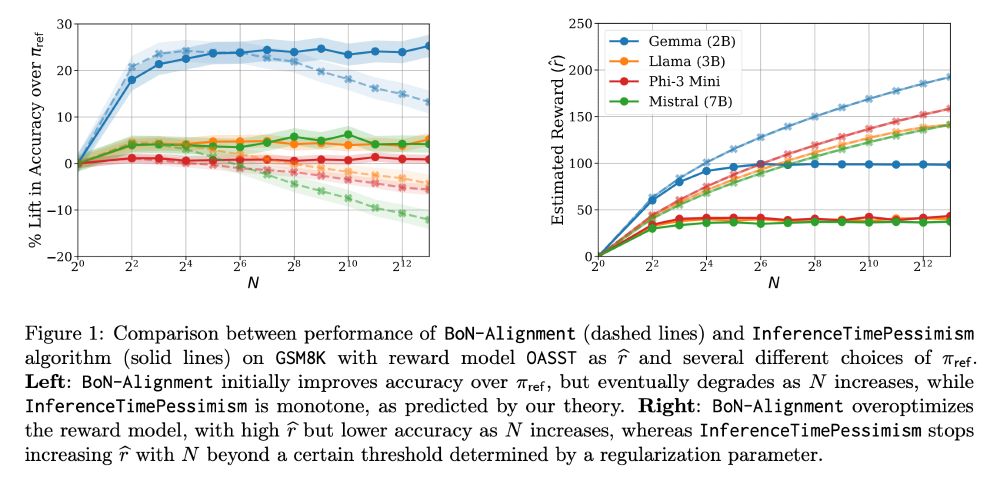

Is Best-of-N really the best we can do for language model inference?

New paper (appearing at ICML) led by the amazing Audrey Huang (ahahaudrey.bsky.social) with Adam Block, Qinghua Liu, Nan Jiang, and Akshay Krishnamurthy (akshaykr.bsky.social).

1/11

03.05.2025 17:40 — 👍 22 🔁 5 💬 1 📌 1

Spectral Representation for Causal Estimation with Hidden Confounders

at #AISTATS2025

A spectral method for causal effect estimation with hidden confounders, for instrumental variable and proxy causal learning

arxiv.org/abs/2407.10448

Haotian Sun, @antoine-mln.bsky.social, Tongzheng Ren, Bo Dai

02.05.2025 12:36 — 👍 3 🔁 3 💬 0 📌 0

RS DeepMind. Works on Unsupervised Environment Design, Problem Specification, Game/Decision Theory, RL, AIS. prev CHAI_Berkeley

Postdoctoral researcher,

Human-in-the-loop Decision making

Aalto University, Finland

PhD: Inria Lille, France

Postdoc @ INRIA

Nonlinear numerical optimisation & optimal control (for robotics). C/C++ for real-time control applications. 3D graphics enthusiast.

PhD student at NYU | Building human-like agents | https://www.daphne-cornelisse.com/

AI Scientist at Xaira Therapeutics. Previously Machine Learning PhD student - Dept. Statistics University of Oxford

PhD student at École Polytechnique and Inria working on optimal transport, machine learning and their applications to neuroscience

Researcher on MDPs and RL. Retired prof. #orms #rl

Aligning Reinforcement Learning Experimentalists and Theorists workshop.

> https://arlet-workshop.github.io

We study the mathematical principles of learning, perception & action in brains & machines. Funded by the Gatsby Charitable Foundation. Based at UCL. www.ucl.ac.uk/life-sciences/gatsby

Assistant Professor / Faculty Fellow @nyudatascience.bsky.social studying cognition in mind & brain with neural nets, Bayes, and other tools (eringrant.github.io).

elsewhere: sigmoid.social/@eringrant, twitter.com/ermgrant @ermgrant

https://generalstrikeus.com/

PhD student in computational linguistics at UPF

chengemily1.github.io

Previously: MIT CSAIL, ENS Paris

Barcelona

Research Scientist at Apple Machine Learning Research. Previously ServiceNow and Element AI in Montréal.

Chief Models Officer @ Stealth Startup; Inria & MVA - Ex: Llama @AIatMeta & Gemini and BYOL @GoogleDeepMind

So far I have not found the science, but the numbers keep on circling me.

Views my own, unfortunately.

Postdoc @oxfordstatistics.bsky.social

PhD student in Machine Learning at Gatsby U it, UCL. Causal inference, Symmetry, Probabilistic ML, Kernel methods.

I lead Cohere For AI. Formerly Research

Google Brain. ML Efficiency, LLMs,

@trustworthy_ml.

Co-founder and CEO, Mistral AI

PhD student at Université Côte d’Azur

This is the official account of EWRL18 - European Workshop on Reinforcement Learning

Official website: https://euro-workshop-on-reinforcement-learning.github.io/ewrl18/