Our next paper on comparing dynamical systems (with special interest in artificial and biological neural networks) is out!! Joint work with @annhuang42.bsky.social, as well as @satpreetsingh.bsky.social, @leokoz8.bsky.social, Ila Fiete, and @kanakarajanphd.bsky.social: arxiv.org/pdf/2510.25943

10.11.2025 16:16 — 👍 67 🔁 23 💬 4 📌 3

8/8

TL;DR: Peak Selection is a novel mechanism for the emergence of modularity in a variety of systems. Applied to grid cells, it makes testable predictions at the molecular, circuit, and functional levels, and matches observed period ratios better than any existing model!

19.02.2025 23:22 — 👍 2 🔁 0 💬 0 📌 0

7/

Peak Selection applies broadly to module emergence:

The same mechanism can also explain:

🌱 Emergent ecological niches

🐠 Coral spawning synchrony

🤖 Modularity in optimization & learning

19.02.2025 23:22 — 👍 1 🔁 0 💬 1 📌 0

6b/ (cont'd)

Central results and predictions:

•Self-scaling with organism size

•Topologically robust: insensitive to almost all parameter variations and activity perturbations; also robust to weight heterogeneity! (no need for exactly symmetric interactions in CANs)

19.02.2025 23:22 — 👍 1 🔁 0 💬 1 📌 0

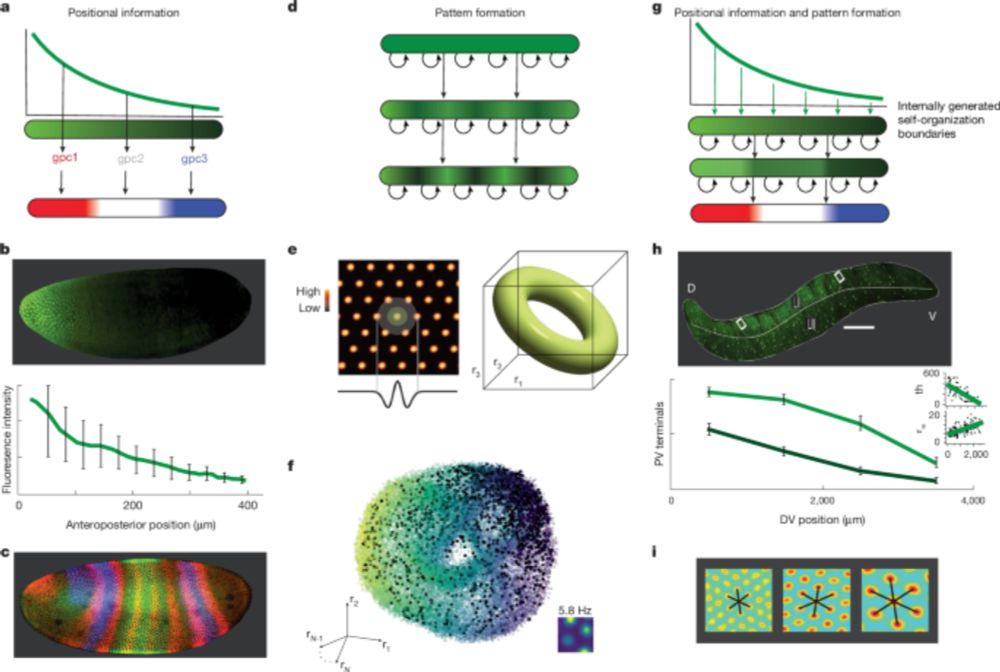

6/

Central results and predictions:

•Nearly **any** interaction shape can produce grid cell patterning (Mexican-hat kernels not needed!)

•Grid cells involve two scales of interactions, one spatially varying and one fixed.

•Functional modularity can emerge without molecular modularity.

19.02.2025 23:21 — 👍 1 🔁 0 💬 1 📌 0

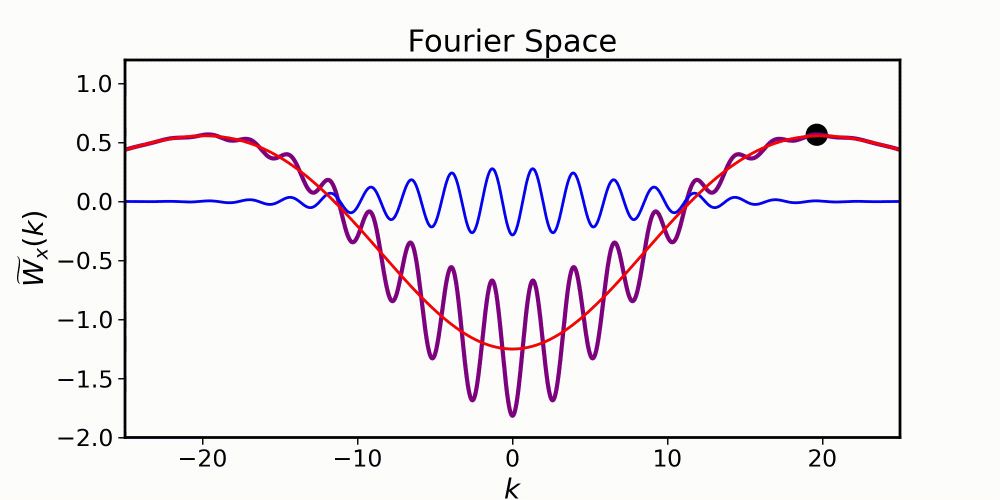

5b/ (cont’d)

Grid modularity from Peak Selection!

•Discrete jumps in grid period without discrete precursors.

•Novel period ratio prediction: ratios of adjacent periods vary as ratios of integers (3/2, 4/3, 5/4, …).

•Excellent agreement with data (R^2 ~0.99)!

19.02.2025 23:21 — 👍 1 🔁 0 💬 1 📌 0
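One way to read the period ratio prediction is to sort a set of module periods, take the ratios of neighbors, and snap each ratio to the nearest simple fraction. The sketch below does this with Python's `fractions.Fraction.limit_denominator`. The periods are made-up numbers chosen to land exactly on 3/2, 4/3, 5/4, not measurements from the paper, and the `max_den=10` cutoff is an arbitrary assumption.

```python
from fractions import Fraction

def adjacent_ratios(periods):
    """Ratio of each grid period to the next smaller one (periods sorted ascending)."""
    ps = sorted(periods)
    return [b / a for a, b in zip(ps, ps[1:])]

def nearest_fraction(x, max_den=10):
    """Closest fraction to x whose denominator is at most max_den (assumed cutoff)."""
    return Fraction(x).limit_denominator(max_den)

def is_consecutive_integer_ratio(f):
    """True if f has the form (n + 1) / n, like 3/2, 4/3, 5/4."""
    return f.numerator - f.denominator == 1

# Hypothetical module periods in cm, NOT data from the paper.
periods = [40.0, 60.0, 80.0, 100.0]
fracs = [nearest_fraction(r) for r in adjacent_ratios(periods)]
print(fracs)  # [Fraction(3, 2), Fraction(4, 3), Fraction(5, 4)]
print(all(is_consecutive_integer_ratio(f) for f in fracs))  # True
```

With noisy measured periods, the choice of `max_den` controls how aggressively each ratio gets rounded to a fraction.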

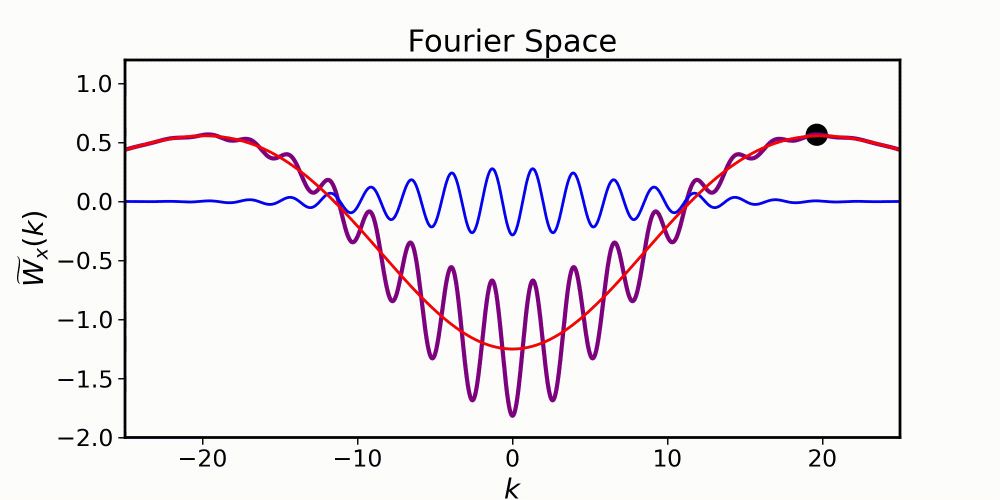

5/

Grid modularity from Peak Selection!

•Two forms of local interactions: one spatially varying smoothly in scale, the other held fixed.

•These spontaneously generate local patterning and global modularity!

19.02.2025 23:21 — 👍 1 🔁 0 💬 1 📌 0

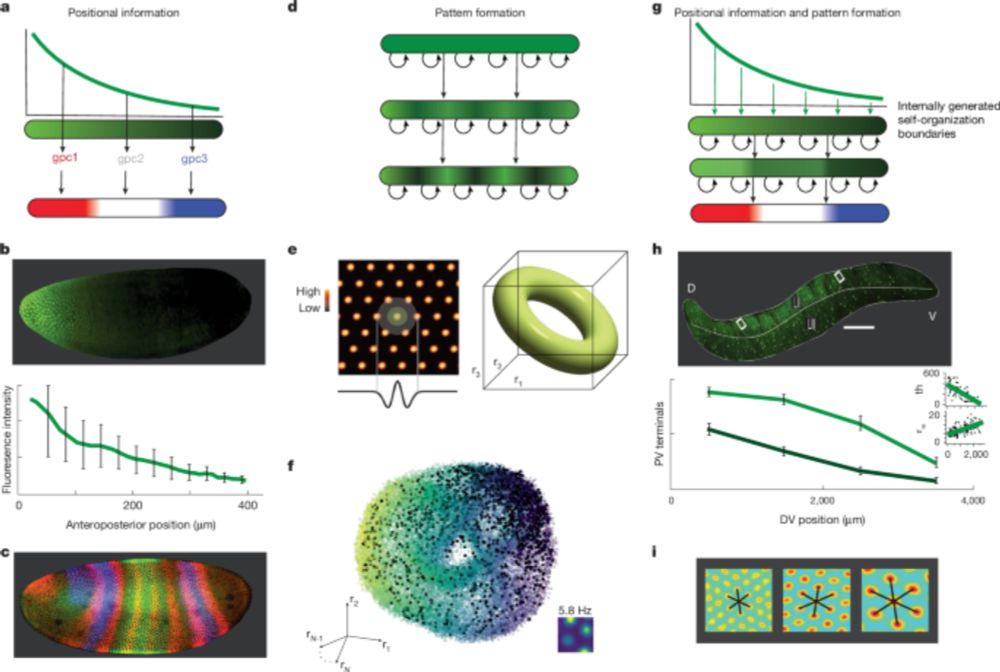

4/

2 classic ideas for the emergence of structure in biology:

•Positional hypothesis: genes apply discrete thresholds, but this requires discrete gene expression

•Turing hypothesis: local interactions drive patterns, but only at a single scale

But grid modules are multiscale and arise from presumably continuous precursors

19.02.2025 23:21 — 👍 1 🔁 0 💬 1 📌 0

3/

Various measured cellular and circuit properties vary smoothly across grid cells. Yet, grid cells are organized into discrete modules with different spatial periods. How does discrete organization arise from smooth gradients?

19.02.2025 23:21 — 👍 1 🔁 0 💬 1 📌 0

2/ The work introduces “Peak Selection”: a general mechanism by which local interactions and smooth gradients give rise to global modules. We first focus on a classic example of modularity, grid cells in the brain.

19.02.2025 23:21 — 👍 3 🔁 0 💬 1 📌 0

Global modules robustly emerge from local interactions and smooth gradients - Nature

The principle of peak selection is described, by which local interactions and smooth gradients drive self-organization of discrete global modules.

1/ Our paper appeared in @Nature today! www.nature.com/articles/s41... w/ Fiete Lab and @khonamikail.bsky.social .

Explains the emergence of multiple grid cell modules, w/ excellent match to data! A novel mechanism that applies across vast systems, from development to ecosystems. 🧵👇

19.02.2025 23:20 — 👍 97 🔁 32 💬 2 📌 2

Thanks! Yes, in its current form it doesn't have recency or primacy effects. We have some thoughts on including recency with some weight decay to reduce the importance of older memories. But how to build in primacy and other forms of memory salience in this model is something to think more about!

18.01.2025 07:50 — 👍 1 🔁 0 💬 0 📌 0

Thanks Sreeparna!

18.01.2025 07:45 — 👍 1 🔁 0 💬 0 📌 0

9b/ (cont’d)

Hippocampal cells remap by direction/context 📍➡️⬅️

Memory consolidation of multiple memory traces 📚

Model thus bridges experiments and theory!

17.01.2025 00:10 — 👍 4 🔁 0 💬 1 📌 0

9/ Experimental alignment: 🧠🔬

VectorHaSH mirrors entorhinal-hippocampal phenomena:

Grid cells show stable periodicity, rapid phase resets, and robust velocity integration 🌐

Recreates the correlation statistics of grid cells and place cells 📊

17.01.2025 00:10 — 👍 2 🔁 0 💬 1 📌 0

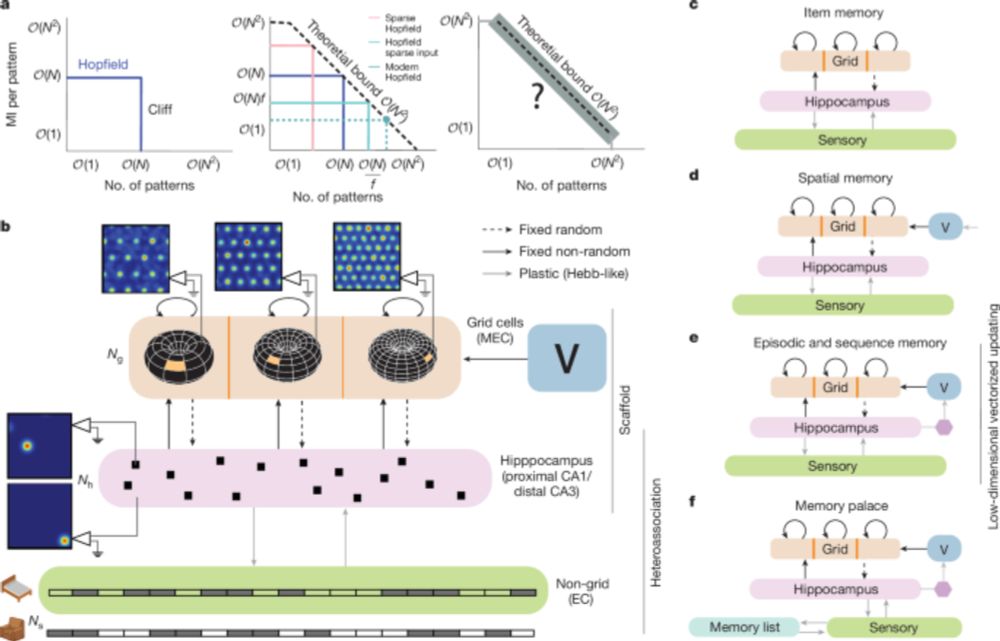

8/ 🏰 Memory palaces explained!

Why does imagining a spatial walk supercharge memory?

VectorHaSH shows how recall of familiar locations acts as a secondary scaffold.

Result: Even approximate recall of locations reliably supports one-shot, high-fidelity memories of arbitrary items. 💡

17.01.2025 00:10 — 👍 2 🔁 0 💬 2 📌 0

7/ How does VectorHaSH implement efficient episodic/sequence memory? Conventional models recall entire high-dim states ➡️ fail quickly. VectorHaSH reduces the problem to recalling low-dim velocity vectors on a scaffold. Result: Long sequences stored & recalled with precision! 🔥

17.01.2025 00:09 — 👍 2 🔁 0 💬 1 📌 0
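Here is a toy version of the sequence idea. It assumes 1-D grid modules with integer periods and positions. Only the small velocity steps between scaffold states are stored. Recall path-integrates those steps and reads out the item hooked at each state. The plain `dict` is a hypothetical stand-in for the learned hippocampal heteroassociation, and the periods, items, and positions are invented for illustration. This is not the VectorHaSH implementation.

```python
def grid_code(x, periods):
    # Toy 1-D grid code: phase of integer position x in each module.
    return tuple(x % p for p in periods)

def shift(code, v, periods):
    # Path integration: move every module's phase by velocity v.
    return tuple((c + v) % p for c, p in zip(code, periods))

periods = (3, 4, 5, 7)                    # hypothetical module periods
items = ["ace", "king", "queen", "jack"]  # hypothetical items to memorize
positions = [0, 2, 3, 7]                  # hypothetical scaffold location per item

# "Store": hook each item onto its scaffold state (the dict stands in for
# a learned heteroassociation) and keep only the low-dim velocities.
memory = {grid_code(x, periods): item for x, item in zip(positions, items)}
velocities = [b - a for a, b in zip(positions, positions[1:])]  # [2, 1, 4]

# "Recall": start at the first state, path-integrate, and read out each item.
code = grid_code(positions[0], periods)
recalled = [memory[code]]
for v in velocities:
    code = shift(code, v, periods)
    recalled.append(memory[code])
print(recalled)  # ['ace', 'king', 'queen', 'jack']
```

The sequence itself is carried by three small integers. The high-dimensional items never need to be chained to one another directly.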

6/ Spatial memory at scale? VectorHaSH links scaffold states to sensory cues via the hippocampus. This yields independent, non-interfering learned maps (landmark-location associations) across multiple rooms. The metric grid structure supports zero-shot inference along novel paths 🚶‍♀️

17.01.2025 00:09 — 👍 3 🔁 0 💬 1 📌 0

5a/ (cont’d)

VectorHaSH then stores memories by heteroassociating inputs with these scaffold states, so memory detail degrades gracefully as the number of stored memories grows, across a vast number of inputs

17.01.2025 00:09 — 👍 3 🔁 0 💬 1 📌 0

5/ Memory without cliffs? Hopfield and other models crash 📉 after reaching capacity, losing all previous memories. VectorHaSH avoids this by first using grid cells to create a scaffold of exponentially many large-basin fixed points

17.01.2025 00:09 — 👍 3 🔁 0 💬 1 📌 0
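Why can a few grid modules index so many scaffold states? A standard counting argument (the Chinese remainder theorem) gives a rough picture. It assumes toy 1-D modules with pairwise-coprime integer periods, where the code is the phase in each module. Under those assumptions, every position up to the product of the periods gets a distinct code, so the number of states grows multiplicatively while the number of phase units grows only additively. The periods below are hypothetical, and the sketch says nothing about basin size or the attractor dynamics themselves.

```python
from itertools import combinations
from math import gcd, prod

def grid_code(x, periods):
    """Toy 1-D grid code: the phase of integer position x in each module."""
    return tuple(x % p for p in periods)

periods = [3, 4, 5, 7]  # hypothetical module periods
assert all(gcd(a, b) == 1 for a, b in combinations(periods, 2)), "need pairwise coprime"

n_positions = prod(periods)  # positions before the joint code repeats
codes = {grid_code(x, periods) for x in range(n_positions)}
n_units = sum(periods)       # one unit per phase in each module

print(len(codes), n_positions, n_units)  # 420 420 19
```

Adding a fifth module with period 11 would cost 11 more units but multiply the number of distinct states by 11.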

4a/ (cont’d)

(3) Episodic memory, using low-dimensional transitions in grid space to support massive sequence capacity 🎞️ (4) Method of Loci, explaining the paradox of why adding to the memory task (associating items with spatial locations) boosts performance 🏰

17.01.2025 00:09 — 👍 2 🔁 0 💬 1 📌 0

4/

VectorHaSH supports: (1) Item memory, avoiding the memory cliffs of Hopfield nets; (2) Spatial memory, learning landmark-location associations over many maps 🌍 and minimizing catastrophic forgetting

17.01.2025 00:09 — 👍 2 🔁 0 💬 1 📌 0

3/ Key ideas 🔑 Hippocampal and grid cells create a fixed "scaffold" that serves as a robust, error-correcting memory foundation. External inputs are "hooked" onto the scaffold through heteroassociation. Low-dimensional transitions in grid space enable large sequence memory.

17.01.2025 00:08 — 👍 3 🔁 0 💬 1 📌 0

2/ Why are spatial & episodic memory co-localized in the hippocampus? How do memory palaces allow memorization of decks of cards?

Our model, VectorHaSH, shows how the hippocampus, together with grid cells, integrates these roles: memory storage, sequence recall, and memory palaces 🏰

17.01.2025 00:08 — 👍 3 🔁 0 💬 1 📌 0

PhD Student at MIT Brain and Cognitive Sciences studying Computational Neuroscience / ML. Prev Yale Neuro/Stats, Meta Neuromotor Interfaces

Computational neuroscientist interested in how we learn, and dad to twin boys

Asst prof at Baylor College of Medicine

https://www.henniglab.org/

PhD Student @MITEECS

https://jd730.github.io/

Neuroscientist at UChicago (Sheffield lab). Hippocampus, memory, computations, neural code. Computational modeling, data analysis, 2P imaging, patch-clamp.

Neuroscientist. Long-form opinions at https://markusmeister.com.

Father, husband, scientist

Computational Machinery of Cognition (CMC) lab focuses on understanding the computational and neural machinery of goal-driven behavior | PI: @neuroprinciplist.bsky.social | https://shervinsafavi.github.io/cmclab/

Postdoc at MIT in the Jazayeri lab. I study how cerebello-thalamocortical interactions support non-motor function.

gabrielstine.com

Eric and Wendy Schmidt Fellow @BroadInstitute || Harvard Biophysics PhD '23 || ND Physics ‘17 || 🇺🇸🇭🇺🇸🇪🇷🇴

Postdoc in brain and cognitive science at MIT.

Cognitive computational neuroscientist and vision scientist. NeuroAI. Professor at Harvard University.

|| assistant prof at University of Montreal || leading the systems neuroscience and AI lab (SNAIL: https://www.snailab.ca/) 🐌 || associate academic member of Mila (Quebec AI Institute) || #NeuroAI || vision and learning in brains and machines

Biophysics PhD student with Jan Drugowitsch at Harvard Univ. (he/him) | theoretical neuroscience | spatial navigation under uncertainty

Incoming assist. prof. JHU ECE | Koopman operators and hippocampi | He/Him | You know what the French c'est - "Hate baguettes hate"

https://wredman4.wixsite.com/wtredman

Professor, Department of Psychology and Center for Brain Science, Harvard University

https://gershmanlab.com/

Neuroscience, behaviour, computation, etc | ভাষা, immigration, সাম্য, history

Currently a postdoctoral researcher at the Paris-Saclay Institute of Neuroscience

At KITP on the UC Santa Barbara campus, researchers in theoretical physics and allied fields collaborate on questions at the leading edges of science.

www.kitp.ucsb.edu

PhD student and ICoN Fellow @mitofficial.bsky.social studying AI @csail.mit.edu & neuroscience @mitbcs.bsky.social

Previously @numentaofficial.bsky.social, NVIDIA, @carnegiemellon.bsky.social

Chronic research nomad. Likes all kinds of neural networks and math

Author (The Fault in Our Stars, The Anthropocene Reviewed, etc.)

YouTuber (vlogbrothers, Crash Course, etc.)

Football Fan (co-owner of AFC Wimbledon, longtime Liverpool fan)

Opposed to Tuberculosis