@ojdaniels.bsky.social, @jessicaji.bsky.social, @jacob-feldgoise.bsky.social, and @alicrawford.bsky.social weigh in on:

• Open questions raised by the Plan

• Security-related recommendations

• Export controls

• Workforce priorities

06.11.2025 18:23

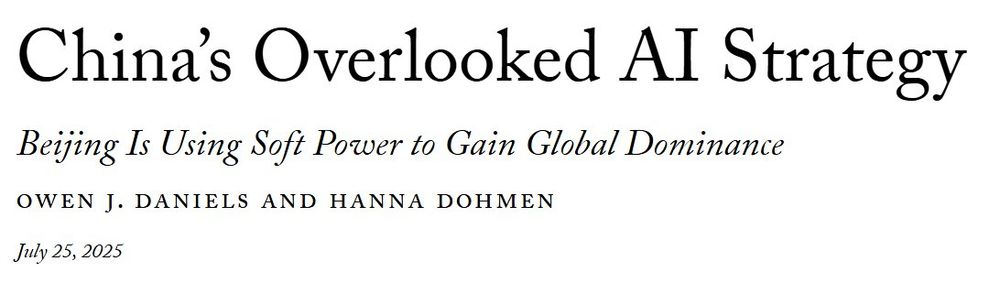

China’s Overlooked AI Strategy

Beijing is using soft power to gain global dominance.

“If Washington’s new AI strategy does not adequately account for open models, American AI companies, despite their world-leading models, will risk ceding international AI influence to China,” write @ojdaniels.bsky.social and @hannadohmen.bsky.social.

25.07.2025 18:44

The new AI Action Plan touts the strategic importance of open models.

That's not news to China, where developers like DeepSeek have made the PRC the leader in open-weights AI.

In @foreignaffairs.com, CSET's @ojdaniels.bsky.social & @hannadohmen.bsky.social explain how the U.S. can respond.

25.07.2025 14:12

I’m proud to share @csetgeorgetown.bsky.social’s Annual Report!

From Congressional testimony and groundbreaking research to essential data tools & translations, our team continues to shape critical emerging tech policy conversations.

Grateful to everyone who makes this work possible.

27.03.2025 16:26

The future of AI leadership requires thoughtful policy. @CSETGeorgetown just submitted our response to @NSF's RFI on the Development of an Artificial Intelligence Action Plan. Here's what we recommend: 🧵[1/]

17.03.2025 13:28

What does the EU's shifting strategy mean for AI?

CSET's @miahoffmann.bsky.social & @ojdaniels.bsky.social have a new piece out for @techpolicypress.bsky.social.

Read it now 👇

10.03.2025 14:17

🇪🇺🤖🐘

10.03.2025 14:05

U.S. AI firms, take note! An op-ed by @sambresnick.bsky.social and @colemcfaul.bsky.social in @barrons.com argues that China's "good enough" AI strategy (think DeepSeek) could disrupt the market.

It's about affordable tech. Huawei playbook 2.0? Open-source Chinese models are closing the gap, fast.

07.03.2025 16:19

Some Thoughts on AI Terminology: Safety and Regulation

A persistent challenge in the artificial intelligence policy space is agreement—or lack thereof—around terminology. Sometimes, this is due to the challenge of nailing down precise terms for evolving c...

Are definitional clarifications dramatically boring? Yes. Are they policy relevant? Also yes. Some thoughts on conflating AI governance, safety, & regulation, informed by @csetgeorgetown.bsky.social work.

TLDR: regulation != safety

safety != "hand-wringing" www.linkedin.com/pulse/some-t...

05.03.2025 15:36

DeepResearch prompt: "'The End of History and the Last Man' but make it AI"

20.02.2025 19:34

I've left a lot out: the profit opportunities and risks of increasingly agentic systems got a lot of air; emerging research on scheming, sabotage, and survival instincts of LLMs and frontier models was prominent; and practical ethics policy ideas abounded. Looking forward to sharing more ideas soon.

13.02.2025 16:41

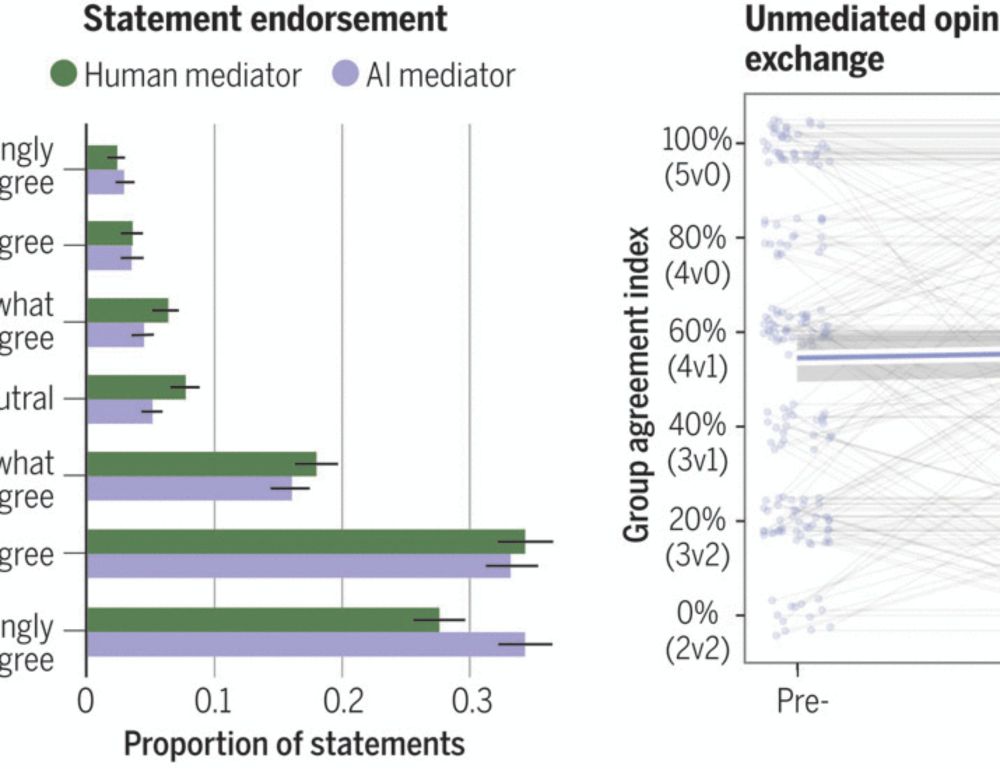

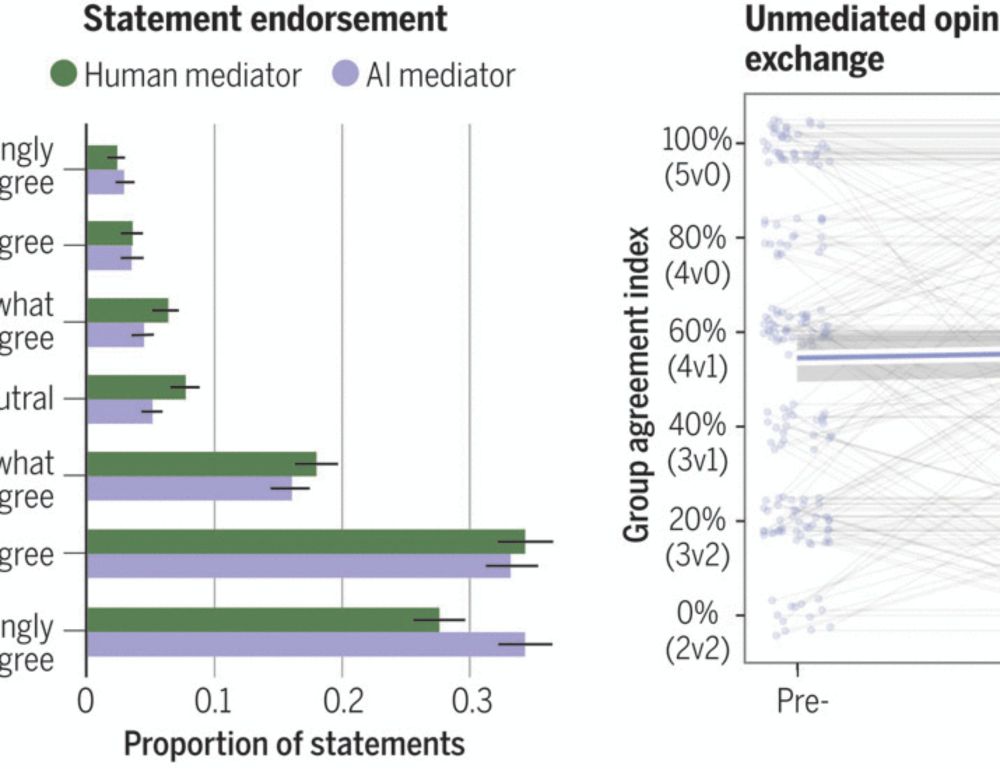

AI can help humans find common ground in democratic deliberation

Finding agreement through a free exchange of views is often difficult. Collective deliberation can be slow, difficult to scale, and unequally attentive to different voices. In this study, we trained a...

Across the risk spectrum, the question arose time and again: where do we actually need AI solutions? Is it actually helpful to have AI try to help us find common ground around political disagreements, for example? Do we want more tech in our democratic processes? www.science.org/doi/10.1126/...

13.02.2025 16:41

AI goes nuclear

Big tech is turning to old reactors (and new ones) to power the energy-hungry data centers that artificial intelligence systems need. But the downsides of nuclear power—like potential nuclear weapons ...

France’s announcement at the summit that it was tapping its nuclear power industry for data centers grabbed headlines, but nuclear power is not necessarily a panacea for all of AI’s energy issues. It remains a globally significant space to watch. thebulletin.org/2024/12/ai-g...

13.02.2025 16:41

Environmental and energy concerns will only continue to grow with scaling, and they rightfully drew much discussion. Even with model innovations like DeepSeek R1, which is cheaper and more efficient to train, energy consumption for inference will remain high.

13.02.2025 16:41

The AISIs have different structures and stakeholders and are attuned to particular research ecosystems, meaning they're not one-to-one matches from one nation to the next, but they can still facilitate exchange. They'll obviously face some geopolitical headwinds amid tech competition.

13.02.2025 16:41

Safety cases at AISI | AISI Work

As a complement to our empirical evaluations of frontier AI models, AISI is planning a series of collaborations and research projects sketching safety cases for more advanced models than exist today, ...

Despite disappointment at executive messaging, the AI Safety Institutes leading safety work at the national level could be ideal vehicles for developing and disseminating testing, evaluation, and safety best practices. Saw some impressive presentations at side events www.aisi.gov.uk/work/safety-...

13.02.2025 16:41

JD Vance's comments on Europe's "excessive regulation" were well covered, but EC Pres von der Leyen and Macron also championed getting out of the private sector's way. My colleague @miahoffmann.bsky.social wrote a thread about why this attitude could be troubling for Europe bsky.app/profile/miah...

13.02.2025 16:41

The AI Action Summit: Some Perspective on Paris

The recently concluded AI Action Summit in Paris, which comprised civil society and official government meetings, commenced last week with some ambitious goals. As the Center for Security and Emerging...

A few thoughts on the outcomes of the AI Action Summit in Paris. The summit laid out some grand goals for AI governance (and covered them at length in the civil society portion), but the government-led portion of the summit was largely about AI enthusiasm.

www.linkedin.com/pulse/ai-act...

13.02.2025 16:41

There have been a ton of AI policy developments coming out of the EU these past weeks, but a deeply concerning one is the withdrawal of the AI Liability Directive (AILD) by the European Commission. Here’s why:

13.02.2025 15:35

Next week, Paris will host world leaders, experts, and CEOs of some of the biggest AI developers at the #AIActionSummit.

Whether they can agree on a unified approach to AI governance remains to be seen, write @miahoffmann.bsky.social, @minanrn.bsky.social, and @ojdaniels.bsky.social.

06.02.2025 14:39

They also fail to advance more ambitious goals, like safety measures, energy efficiency, or labor standards in data supply chains. Legislators need to think more about how governments and firms can ensure voluntary steps entail real, significant investments toward harnessing AI responsibly.

06.02.2025 12:13

Last but not least, voluntary commitments: voluntary measures that simply reframe business practices as governance risk coming off as participation trophies.

06.02.2025 12:12

The EU AI Act has inspired some of this state legislation and is a global role model. As its provisions come into force imminently, it may also serve as a model for enforcing, not just developing, legislation.

06.02.2025 12:06