Apply - Interfolio

We're hiring 5 T/TT faculty in Neuroscience, including Computational Neuroscience, at U Notre Dame

We'll start reviewing applications very soon, so if you're thinking about applying, please apply now/soon!

apply.interfolio.com/173031

07.10.2025 16:14 — 👍 2 🔁 0 💬 0 📌 0

I'm posting this again for anyone who might have missed it last time:

Notre Dame is hiring 5 tenure or tenure-track professors in Neuroscience, including Computational Neuroscience, across 4 departments.

Feel free to reach out with any questions.

And please share!

apply.interfolio.com/173031

30.09.2025 17:25 — 👍 4 🔁 0 💬 0 📌 0

The University of Notre Dame is hiring 5 tenure or tenure-track professors in Neuroscience, including Computational Neuroscience, across 4 departments.

Come join me at ND! Feel free to reach out with any questions.

And please share!

apply.interfolio.com/173031

03.09.2025 17:26 — 👍 39 🔁 33 💬 0 📌 2

Fixed! (I think) Try again and let me know if you still have trouble. You might need to refresh the page.

03.06.2025 12:39 — 👍 0 🔁 0 💬 0 📌 0

Thank you for the feedback, I'll work on both of those!

03.06.2025 12:24 — 👍 0 🔁 0 💬 0 📌 0

Thanks for the suggestion, that makes sense. I am just trying to figure out the best implementation. It's difficult (for me) to combine email verification and profile creation on the same page. Maybe a link to a screenshot of an example profile on the registration page?

02.06.2025 17:25 — 👍 0 🔁 0 💬 0 📌 0

Most universities have generous "Conflict of Commitment" policies that allow faculty to devote a portion of their time to consulting work, but these policies are under-utilized.

Consulting work can provide valuable industry experience, and also extra cash.

02.06.2025 10:03 — 👍 1 🔁 0 💬 0 📌 0

Couldn't the same argument be made for conference presentations (which 90% of the time only describe published work)?

20.05.2025 19:05 — 👍 3 🔁 0 💬 0 📌 0

When _you_ publish a new paper, lots of people notice, lots of people read it. No explainer thread needed. Deservedly so, because you have a reputation for writing great papers.

When Dr. Average Scientist publishes a paper, nobody notices, and nobody reads it without some legwork to get it out there.

20.05.2025 19:04 — 👍 25 🔁 0 💬 0 📌 0

Thanks! Let us know if you have comments or questions

19.05.2025 16:13 — 👍 1 🔁 0 💬 0 📌 0

In other words:

Plasticity rules like Oja's let us go beyond studying how synaptic plasticity in the brain can _match_ the performance of backprop.

Now, we can study how synaptic plasticity can _beat_ backprop in challenging, but realistic learning scenarios.

19.05.2025 15:33 — 👍 4 🔁 1 💬 0 📌 0

Finally, we meta-learned pure plasticity rules with no weight transport, extending our previous work. When Oja's rule was included, the meta-learned rule _outperformed_ pure backprop.

19.05.2025 15:33 — 👍 2 🔁 0 💬 1 📌 0

We find that Oja's rule works, in part, by preserving information about inputs in hidden layers. This is related to its known properties in forming orthogonal representations. Check the paper for more details.

19.05.2025 15:33 — 👍 2 🔁 0 💬 1 📌 0
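
The "orthogonal representations" property can be seen in Oja's subspace rule (the multi-neuron form), dW = lr * (y x^T - y y^T W) with y = W x. This is a minimal pure-Python sketch under stated assumptions: zero-mean Gaussian inputs with hypothetical standard deviations (2.0, 1.5, 1.0, 0.5), two output neurons, and a hand-picked learning rate. It is not the network or setup from the preprint. After training, the rows of W should be close to orthonormal and concentrated on the two highest-variance input coordinates.

```python
import random


def oja_subspace(data, m, lr=0.001, seed=0):
    """Oja's subspace rule: W += lr * (y x^T - y y^T W), with y = W x.

    data: list of zero-mean input vectors (lists of floats)
    m:    number of output neurons (rows of W)
    """
    rng = random.Random(seed)
    n = len(data[0])
    # Small random initial weights, shape m x n
    W = [[rng.uniform(-0.1, 0.1) for _ in range(n)] for _ in range(m)]
    for x in data:
        y = [sum(W[i][j] * x[j] for j in range(n)) for i in range(m)]
        # back[j] = (y^T W)_j, computed before W changes
        back = [sum(y[k] * W[k][j] for k in range(m)) for j in range(n)]
        for i in range(m):
            for j in range(n):
                W[i][j] += lr * y[i] * (x[j] - back[j])
    return W


def gram(W):
    """Return W W^T (row-by-row dot products)."""
    return [[sum(a * b for a, b in zip(r1, r2)) for r2 in W] for r1 in W]


rng = random.Random(1)
# Hypothetical input statistics: coordinate variances decrease left to right
data = [[rng.gauss(0.0, s) for s in (2.0, 1.5, 1.0, 0.5)] for _ in range(30000)]
W = oja_subspace(data, m=2)
G = gram(W)
print([[round(g, 2) for g in row] for row in G])
```

The printed Gram matrix W W^T should be close to the 2x2 identity, i.e. the two learned weight vectors end up roughly unit-length and mutually orthogonal.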

Vanilla RNNs trained with pure BPTT fail on simple memory tasks. Adding Oja's rule to BPTT drastically improves performance.

19.05.2025 15:33 — 👍 2 🔁 0 💬 1 📌 0
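
One commonly cited difficulty for pure BPTT on memory tasks (the thread does not say this is the mechanism at play here) is that the gradient through T time steps contains a product of T Jacobians. For a hypothetical scalar linear recurrence h_t = w * h_{t-1} + x_t, that product is just w ** T, so it vanishes for |w| < 1 and explodes for |w| > 1. A vanilla RNN is nonlinear, but its BPTT gradient has the same product structure.

```python
def final_state(w, h0, xs):
    """Run the scalar linear recurrence h_t = w * h_{t-1} + x_t and return h_T."""
    h = h0
    for x in xs:
        h = w * h + x
    return h


def sensitivity(w, T):
    """Change in h_T per unit change in h_0, i.e. dh_T / dh_0.

    The recurrence is linear, so this difference equals w ** T exactly
    (up to floating-point rounding).
    """
    xs = [0.5] * T  # arbitrary hypothetical inputs; they cancel in the difference
    return final_state(w, 1.0, xs) - final_state(w, 0.0, xs)


for w in (0.9, 1.0, 1.1):
    print(w, sensitivity(w, 50))
```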

We often forget how important careful weight initialization is for training neural nets because our software initializes them for us. Adding Oja's rule to backprop also eliminates the need for careful weight initialization.

19.05.2025 15:33 — 👍 2 🔁 0 💬 1 📌 0
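
A property that plausibly relates to this: the single-neuron Oja rule, dw = lr * y * (x - y * w) with y = w . x, drives the weight norm toward 1 from a broad range of initial scales (given a small enough learning rate). The sketch below is a toy under hypothetical assumptions (input variances, learning rate, and initial vectors are all made up), not the preprint's experiment: it starts from a tiny and a large initial weight vector and checks that both end near unit norm, aligned with the highest-variance input direction.

```python
import math
import random


def oja_neuron(data, w0, lr=0.001):
    """Single-neuron Oja rule: w += lr * y * (x - y * w), with y = w . x."""
    w = list(w0)
    for x in data:
        y = sum(wi * xi for wi, xi in zip(w, x))
        w = [wi + lr * y * (xi - y * wi) for wi, xi in zip(w, x)]
    return w


def norm(v):
    return math.sqrt(sum(vi * vi for vi in v))


rng = random.Random(0)
# Hypothetical zero-mean inputs; the first coordinate has the largest variance
data = [[rng.gauss(0.0, s) for s in (1.0, 0.5, 0.25)] for _ in range(20000)]

results = {}
for scale in (0.01, 3.0):
    # Same direction, very different (hypothetical) initial magnitudes
    w0 = [scale * c for c in (0.3, 0.8, 0.52)]
    results[scale] = oja_neuron(data, w0)
    print(scale, round(norm(results[scale]), 3))
```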

We propose that plasticity rules like Oja's rule might be part of the answer. Adding Oja's rule to backprop improves learning in deep networks in an online setting (batch size 1).

19.05.2025 15:33 — 👍 2 🔁 0 💬 1 📌 0
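
Read literally, "adding Oja's rule to backprop" suggests summing the two weight updates. The sketch below is one hypothetical way to do that for a single linear layer with squared-error loss and batch size 1. The separate Oja learning rate `oja_lr` and the choice of Oja's subspace form are assumptions, not the preprint's formulation.

```python
def online_step(W, x, target, lr=0.01, oja_lr=0.001):
    """One batch-size-1 update of a linear layer y = W x (hypothetical combination).

    Plain SGD on L = 0.5 * ||y - target||^2, plus an additive Oja subspace
    term oja_lr * (y x^T - y y^T W). oja_lr is an assumed, separate knob.
    """
    m, n = len(W), len(x)
    y = [sum(W[i][j] * x[j] for j in range(n)) for i in range(m)]
    # back[j] = (y^T W)_j, used by the Oja term
    back = [sum(y[k] * W[k][j] for k in range(m)) for j in range(n)]
    new_W = []
    for i in range(m):
        err = y[i] - target[i]  # dL/dy_i
        row = []
        for j in range(n):
            grad = err * x[j]              # dL/dW_ij (backprop for one layer)
            oja = y[i] * (x[j] - back[j])  # (y x^T - y y^T W)_ij
            row.append(W[i][j] - lr * grad + oja_lr * oja)
        new_W.append(row)
    return new_W


W = [[1.0, 0.0]]
sgd_only = online_step(W, [1.0, 2.0], [0.0], lr=0.1, oja_lr=0.0)
with_oja = online_step(W, [1.0, 2.0], [0.0], lr=0.1, oja_lr=0.1)
print(sgd_only, with_oja)
```

Setting `oja_lr=0.0` recovers plain online SGD, which makes it easy to compare the two updates on the same example.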

For example, a 10-layer feedforward network trained on MNIST using online learning (batch size 1) performs poorly when trained with pure backprop. How does the brain learn effectively without all of these engineering hacks?

19.05.2025 15:33 — 👍 1 🔁 0 💬 1 📌 0

In our new preprint, we dug deeper into this observation. Our motivation is that modern machine learning depends on lots of engineering hacks beyond pure backprop: gradients averaged over batches, batchnorm, momentum, etc. These hacks don't have clear, direct biological analogues.

19.05.2025 15:33 — 👍 1 🔁 0 💬 1 📌 0
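
For reference, "gradients averaged over batches" means each step uses the mean of per-example gradients, whereas online learning (batch size 1) updates after every example. A minimal sketch on a hypothetical one-parameter regression problem:

```python
def grad(w, x, t):
    """Per-example gradient of 0.5 * (w * x - t) ** 2 with respect to w."""
    return (w * x - t) * x


def sgd(w, data, lr, batch_size):
    """Plain SGD: batch_size=1 is online learning; larger sizes average gradients."""
    for start in range(0, len(data), batch_size):
        batch = data[start:start + batch_size]
        g = sum(grad(w, x, t) for x, t in batch) / len(batch)
        w -= lr * g
    return w


data = [(1.0, 2.0), (2.0, 4.0)]  # hypothetical (input, target) pairs
w_online = sgd(0.0, data, lr=0.1, batch_size=1)
w_batch = sgd(0.0, data, lr=0.1, batch_size=2)
print(w_online, w_batch)
```

Both runs see the same two examples; the batched run takes one averaged step while the online run takes two sequential steps, so they end at different weights.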

In previous work on this question, we meta-learned linear combos of plasticity rules. In doing so, we noticed something interesting:

One plasticity rule improved learning, but its weight updates weren't aligned with backprop's. It was doing something different. That rule is Oja's plasticity rule.

19.05.2025 15:33 — 👍 2 🔁 0 💬 1 📌 0
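
A "linear combo of plasticity rules" can be sketched as dW = lr * sum_k theta_k * R_k(W, x, y), where each R_k is a candidate local rule and the coefficients theta_k are what a meta-learning outer loop would tune. The candidate set here (Hebbian, Oja, weight decay) and every name in the code are hypothetical; the outer meta-learning loop is not shown, and the previous work's actual rule set may differ.

```python
def hebb(W, x, y):
    """Hebbian term: y x^T."""
    return [[yi * xj for xj in x] for yi in y]


def oja(W, x, y):
    """Oja subspace term: y x^T - y y^T W."""
    back = [sum(y[k] * W[k][j] for k in range(len(y))) for j in range(len(x))]
    return [[yi * (xj - bj) for xj, bj in zip(x, back)] for yi in y]


def decay(W, x, y):
    """Weight decay term: -W."""
    return [[-w for w in row] for row in W]


# Hypothetical candidate set; a real study would choose its own rules
RULES = {"hebb": hebb, "oja": oja, "decay": decay}


def plasticity_update(W, x, theta, lr=0.01):
    """Return W + lr * sum_k theta[k] * R_k(W, x, y), with y = W x.

    theta maps rule names to coefficients. In a meta-learning setup an outer
    loop would tune theta; that loop is not shown here.
    """
    y = [sum(wij * xj for wij, xj in zip(row, x)) for row in W]
    # Evaluate every active term on the same (pre-update) W
    terms = {name: RULES[name](W, x, y) for name, c in theta.items() if c != 0.0}
    new_W = [row[:] for row in W]
    for name, term in terms.items():
        for i, row in enumerate(term):
            for j, v in enumerate(row):
                new_W[i][j] += lr * theta[name] * v
    return new_W


W = [[1.0, 0.0]]
x = [1.0, 2.0]
print(plasticity_update(W, x, {"hebb": 1.0}, lr=0.5))
print(plasticity_update(W, x, {"hebb": 1.0, "oja": -1.0}, lr=0.5))
```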

A lot of work in "NeuroAI," including our own, seeks to understand how synaptic plasticity rules can match the performance of backprop in training neural nets.

19.05.2025 15:33 — 👍 0 🔁 0 💬 1 📌 0

A scientific figure blueprint guide!

If this seems empty it's bcz I don't plan to use it anytime soon!

I made this figure panel size guide to avoid thinking about dimensions every time. Apparently this post is going to be a 🧵! So feel free to bookmark it and save yourself some time.

16.05.2025 09:50 — 👍 84 🔁 19 💬 7 📌 7

Interesting comment, but you need to define what you mean by "neuroanatomy." Does such a thing actually exist? As a thing in itself or as a phenomenon? What would Kant have to say? ;)

15.05.2025 14:44 — 👍 0 🔁 0 💬 1 📌 0

Sorry, I didn't mean to phrase that antagonistically.

I just think that unless we're talking just about anatomy and we're restricting to a direct synaptic pathway (which maybe you are), it's difficult to make this type of question precise without concluding that everything can query everything.

13.05.2025 15:02 — 👍 1 🔁 0 💬 1 📌 0

Unless we're talking about a direct synapse, I don't know how we can expect to answer this question meaningfully when a neuromuscular junction in my pinky toe can "readout" and "query" photoreceptors in my retina.

13.05.2025 14:27 — 👍 0 🔁 0 💬 1 📌 0

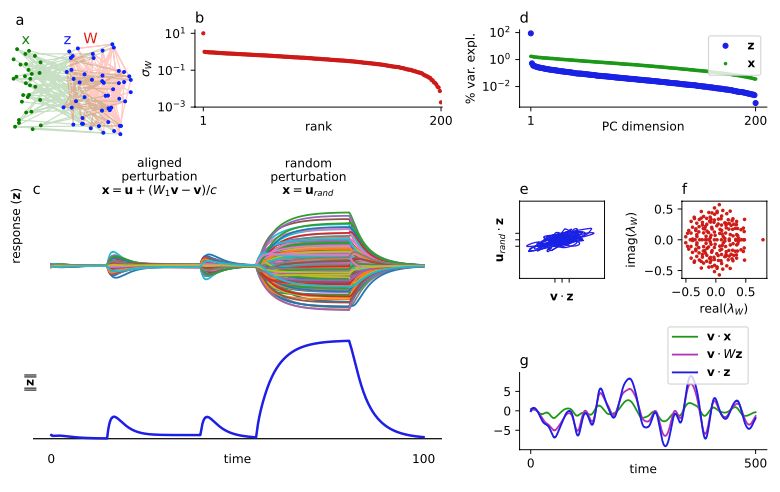

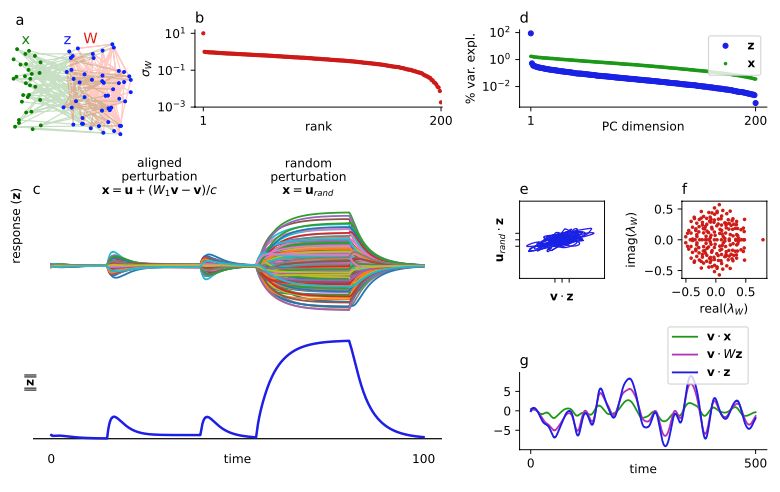

Thanks. Yeah, I think this example helps clarify 2 points:

1) large negative eigenvalues are not necessary for LRS, and

2) high-dim input and stable dynamics are not sufficient for high-dim responses.

Motivated by this conversation, I added eigenvalues to the plot and edited the text a bit, thx!

02.05.2025 16:51 — 👍 1 🔁 0 💬 0 📌 0
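
Point 2 can be illustrated with a hypothetical example (not the one in the figures above): a stable linear rate network whose steady-state response is r = (I - W)^{-1} u, driven by white, fully 100-dimensional input u. If W = a v v^T with |v| = 1 and a just below 1, the network is stable, yet it amplifies the v direction by 1 / (1 - a). Using the participation ratio as the dimensionality measure (an assumption; the thread does not name one), the response comes out nearly one-dimensional.

```python
def participation_ratio(eigs):
    """PR = (sum of eigenvalues)^2 / (sum of squared eigenvalues) of a covariance."""
    return sum(eigs) ** 2 / sum(e * e for e in eigs)


def response_eigs(n, a):
    """Covariance eigenvalues of r = (I - W)^{-1} u, with W = a v v^T, |v| = 1,
    and white input u ~ N(0, I_n).

    (I - a v v^T)^{-1} = I + a / (1 - a) v v^T, so the v direction has gain
    1 / (1 - a) and every orthogonal direction has gain 1. The dynamics
    dr/dt = -r + W r + u are stable for a < 1.
    """
    gain = 1.0 / (1.0 - a)
    return [gain ** 2] + [1.0] * (n - 1)


n = 100
print(participation_ratio([1.0] * n))               # white input
print(participation_ratio(response_eigs(n, 0.95)))  # stable, but nearly 1-D response
```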

Well deserved. Congratulations, Adrienne!

01.05.2025 01:56 — 👍 2 🔁 0 💬 0 📌 0

^ I feel like this is a problem you'd be good at tackling

26.04.2025 13:51 — 👍 3 🔁 0 💬 0 📌 0

One thing I tried to work out, but couldn't: We assumed a discrete number of large sing vals of W, but what if there's a continuous but slow decay (eg, power law)?

How to derive the decay rate of the var expl vals in terms of the sing val decay rate and the overlap matrix?

26.04.2025 13:51 — 👍 2 🔁 0 💬 1 📌 0
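
Only the trivial part of this question is easy: if the response's own singular values decay as a power law s_i ~ i^(-alpha), the variance-explained fractions s_i^2 / sum_j s_j^2 decay as i^(-2 alpha). How the singular value decay of W propagates through the overlap matrix, which is the actual open question, is not addressed by this sketch; alpha and the number of singular values are arbitrary choices.

```python
import math


def variance_explained(svals):
    """Fraction of total variance captured by each singular value: s_i^2 / sum_j s_j^2."""
    total = sum(s * s for s in svals)
    return [s * s / total for s in svals]


def loglog_slope(values, i, j):
    """Slope of log(values) vs log(rank) between ranks i and j (1-indexed)."""
    return (math.log(values[j - 1]) - math.log(values[i - 1])) / (math.log(j) - math.log(i))


alpha = 0.75  # hypothetical decay exponent
svals = [k ** -alpha for k in range(1, 501)]
ve = variance_explained(svals)
print(round(loglog_slope(ve, 10, 100), 3))  # equals -2 * alpha for a pure power law
```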

Maybe it's possible to write this condition on sing vals of P in terms of eigenspectrum of W in a simple way, but I don't know how.

26.04.2025 12:38 — 👍 0 🔁 0 💬 1 📌 0

⛵️ Research Resident @ Midjourney

🇪🇺 Member @ellis.eu

🤖 Generative NNs, Deep Learning, ProbML, Simulation Intelligence

🎓 PhD+MSc Computer Science, MSc Psychology

🏡 https://marvin-schmitt.com

The Max Perutz Labs are a joint venture of the University of Vienna and the Medical University of Vienna, dedicated to a mechanistic understanding of fundamental biomedical processes.

Colorado RNA biologist & tRNA enthusiast exploring the wild frontiers of nanopore direct RNA sequencing at the intrepid Venn diagram of northern blots & machine learning.

Personality psych & causal inference @UniLeipzig. I like all things science, beer, & puns. Even better when combined! Part of http://the100.ci, http://openscience-leipzig.org

Predoc at Ai2 | prev. Princeton CS '24

Theoretical neuroscientist. PhD student in the Shine lab at Sydney Uni. Research associate at Monash M3CS. Interested in spikes, (nonlinear) neural dynamics, decisions, and consciousness.

www.ChristopherJWhyte.com

Comp neuro PhD, research scientist in motor control and neuromorphics, co-founder of Applied Brain Research where we build ultra low power edge AI chips and I've led a lot of advanced AI consulting. Sometimes I write blog posts at http://studywolf.com.

Everything Science and Education, especially everything Biology and Chemistry, Liberal by heart. Live by kindness

Social and Cultural Psychologist | Fresno State & UofA Alum | Class, Culture, Environmental Justice, Policing | Assistant Professor of Psychology @ Skidmore College

https://www.harrisonjschmitt.com/

Assistant Professor of Criminology, Law and Society and Political Science (by courtesy) at the University of California, Irvine. I study the political economy of security, crime, and law enforcement.

Ass. Prof. of Methods in Empirical Social Research at FU Berlin and EB member @methodsnet.bsky.social

Politics, methods, and occasionally memes

https://bcastanho.github.io/

Assistant Professor in Network Science at the University of Zurich

- Networks, Computational Social Science, Complex Systems, Data Science

He/Him

📍 Tübingen, DE ∆ DevComPsy Lab ∆🔬👨‍💻 🧪∆ decisions, motor control, (laminar) MEG, MRI ∆ not interested in oscillations ∆🤘🤡🚴🏔️ ∆ he/him ∆

Professor, Harvard Kennedy School | Speaking in a personal capacity | https://msen.scholars.harvard.edu/

Aspiring philosopher; tolerable human; "amusing combination of sardonic detachment & literally all the feelings felt entirely unironically all at once" [he/his]

Policy Professor, Ford School, University of Michigan.

Irish immigrant. Administrative burdens guy.

Free newsletter, Can We Still Govern?: https://donmoynihan.substack.com

Husband of C, Dad of J & L, CEO/Editor-The DSR Network, Host-Deep State Radio, Siliconsciousness, other pods, Columnist-The Daily Beast, author of many books, also see my "Need to Know" Substack (djrothkopf.substack.com)

Professor of Energy & Climate Policy at University of Oxford

Energy Programme Leader at ECI, University of Oxford

Senior Research Fellow at Oriel College, Oxford

Senior Associate Institute for Sustainability Leadership, University of Cambridge

NatGeo Explorer, Oceanographer, Climate Scientist; Env. Remote Sensing; Energy & Sustainability; husband, father #FirstGen, Views my own; Google scholar: https://scholar.google.com/citations?hl=en&user=qMBuSe4AAAAJ

the joker of candle-making @bugsrock.online, one of the greatest minds in web development (jennschiffer.com) and blogging (livelaugh.blog), nyc co-host of robot karaoke (robotkaraoke.live), hot and smart and talented