A huge thanks to my fantastic mentors, collaborators and advisors: Andrew Bolton, Vikash Mansinghka, @joshtenenbaum.bsky.social and @thiskevinsmith.bsky.social. Do check out our project page (arijit-dasgupta.github.io/jtap/) for more details and the code to run this experiment.

30.07.2025 22:09 — 👍 1 🔁 0 💬 0 📌 0

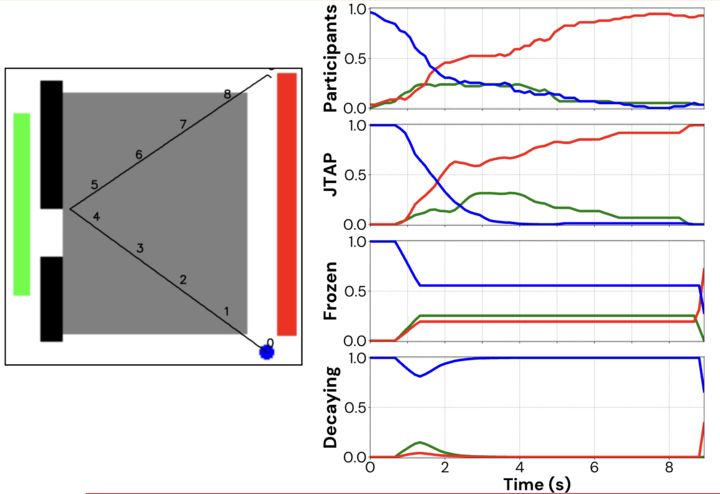

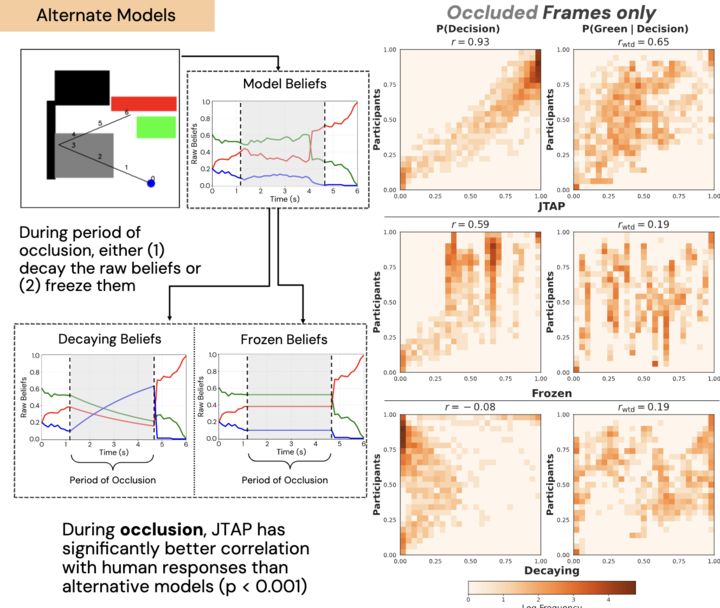

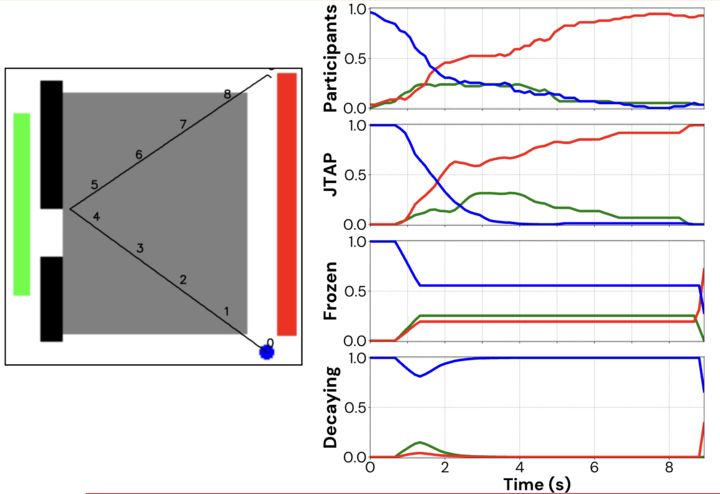

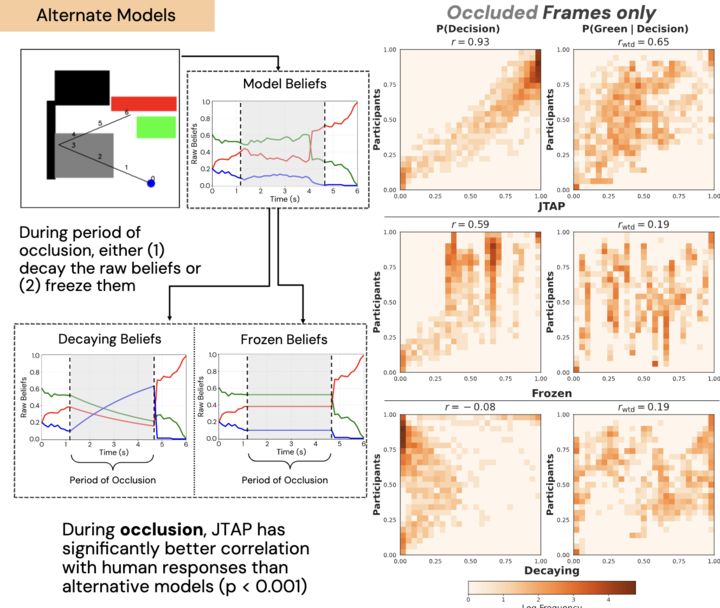

This can be explained with an intuitive example: if the object does not come out of the left side, it makes sense to think it might have bounced and will eventually hit red. Just like humans, JTAP makes this prediction, showing that the absence of new visual evidence is itself evidence.

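The "no new evidence is evidence" point can be made concrete with a two-hypothesis Bayes update. This is a toy sketch, not JTAP itself: the hypotheses ("left" means the ball kept going and should already have emerged, "bounced" means it turned back toward red), the 0.5 prior, and the per-frame probability `p_hidden_if_left` of still seeing nothing under "left" are all illustrative assumptions.

```python
# Toy Bayes update: each extra frame with no ball in view, past the moment it
# "should" have emerged on the left, shifts belief toward the bounce hypothesis.
# All numbers and hypothesis names here are hypothetical, for illustration only.

def posterior_bounced(prior_bounced: float, p_hidden_if_left: float, extra_frames: int) -> float:
    """P(bounced | ball still hidden `extra_frames` frames after expected emergence)."""
    like_bounced = 1.0                                # a bounced ball stays hidden (assumption)
    like_left = p_hidden_if_left ** extra_frames      # staying hidden is increasingly unlikely
    num = prior_bounced * like_bounced
    den = num + (1.0 - prior_bounced) * like_left
    return num / den


if __name__ == "__main__":
    for k in range(5):
        print(k, round(posterior_bounced(0.5, 0.2, k), 3))
```

Even though nothing visible changes, the posterior on "bounced" (and hence on red) climbs with every empty frame.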

To test whether humans update their beliefs during occlusion, we compared JTAP against two ablated baselines that either decay or freeze beliefs while the object is hidden. A targeted analysis of the occluded time steps shows that the full JTAP model captures human behavior much better.

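One way to picture the two ablations, as a hedged sketch: the thread does not say exactly how the baselines were built, so the representation (a per-frame belief P(red), with `None` on occluded frames) and the decay rule (relaxing toward an uninformative prior at a fixed hypothetical `rate`) are assumptions.

```python
from typing import List, Optional, Sequence

# Input: per-frame P(red) computed from visible frames; None marks occluded frames.

def freeze_baseline(visible_belief: Sequence[Optional[float]], prior: float = 0.5) -> List[float]:
    """While hidden, keep reporting the last belief formed from visible frames."""
    out, cur = [], prior
    for p in visible_belief:
        if p is not None:
            cur = p
        out.append(cur)
    return out


def decay_baseline(visible_belief: Sequence[Optional[float]], rate: float = 0.2,
                   prior: float = 0.5) -> List[float]:
    """While hidden, relax the belief toward the uninformative prior at a fixed rate."""
    out, cur = [], prior
    for p in visible_belief:
        cur = p if p is not None else cur + rate * (prior - cur)
        out.append(cur)
    return out


if __name__ == "__main__":
    stream = [0.9, 0.9, None, None, None, 0.95]
    print(freeze_baseline(stream))
    print(decay_baseline(stream))
```

Neither baseline runs any inference on hidden frames, whereas the full model keeps updating its beliefs while the object is occluded.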

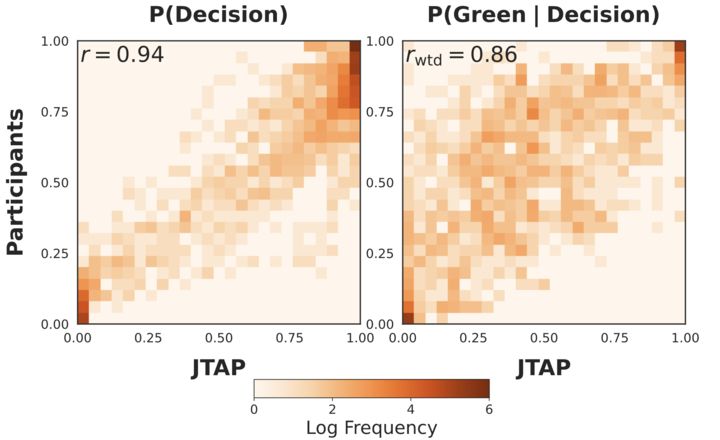

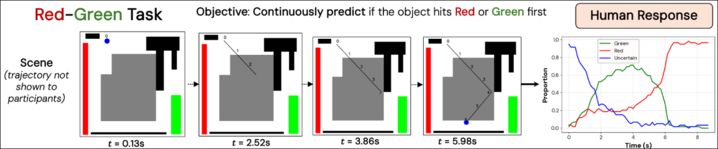

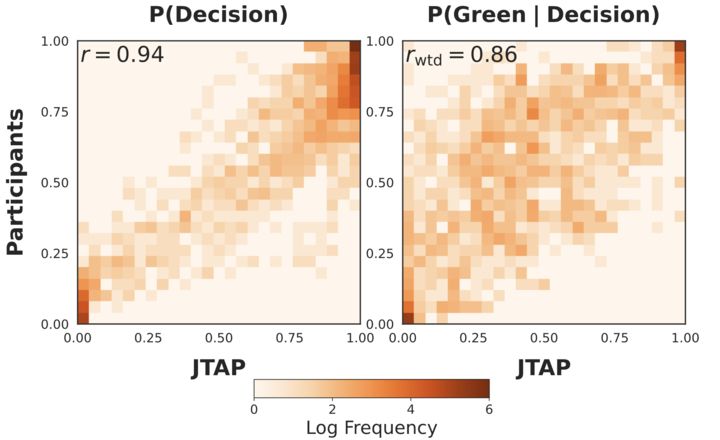

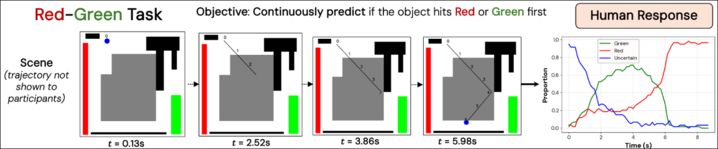

Doing this, we find that JTAP predicts overall time-aligned human behavior very well, both in when people make a decision and in which decision they make. It also captures moments of graded uncertainty, when humans don't fully agree.


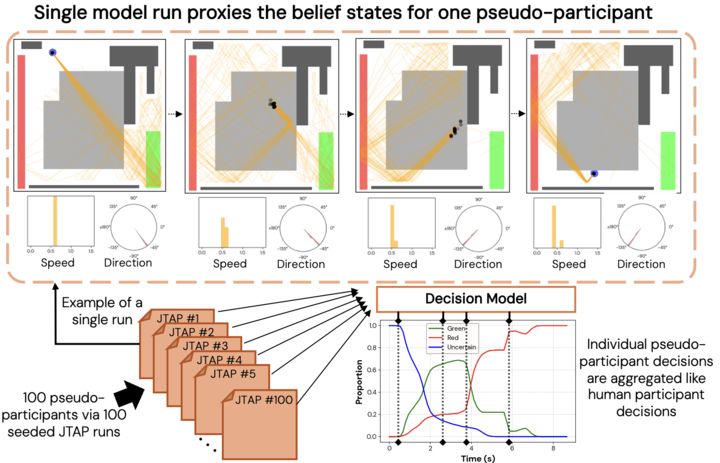

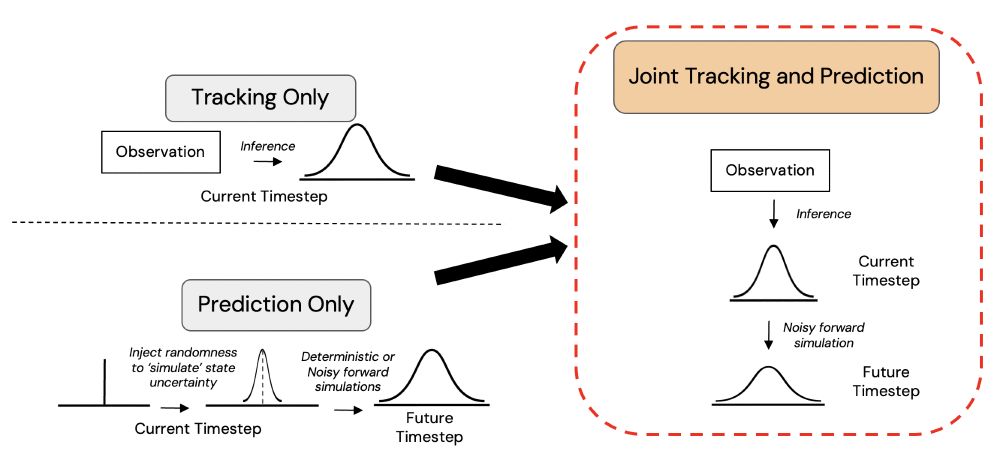

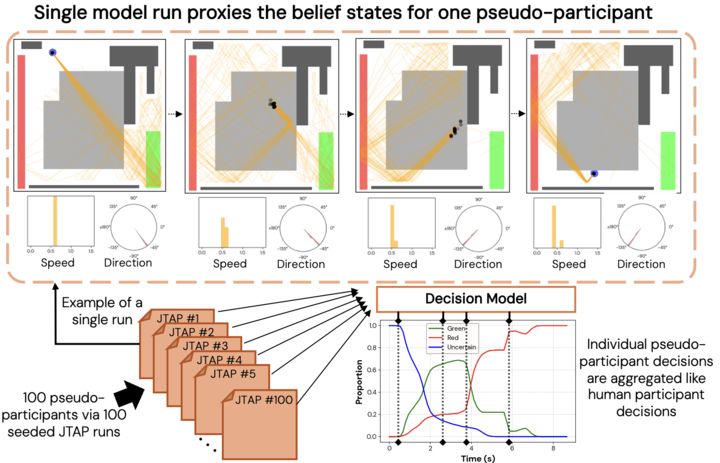

A single JTAP run acts as a proxy for one pseudo-participant's belief states over time. By feeding multiple randomly seeded runs into a decision model that accounts for time delay and hysteresis, we predict both individual-level human decisions and aggregate behavior.

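A minimal sketch of what a delay-plus-hysteresis decision rule could look like. The thread does not give the actual rule, so the function names, the `delay`, `enter` and `exit_` parameters, and their default values are hypothetical. Each run's per-frame P(red) becomes a response stream, and stacking many seeded runs gives aggregate response proportions.

```python
from collections import Counter
from typing import Dict, List, Sequence


def decide(p_red: Sequence[float], delay: int = 3, enter: float = 0.8,
           exit_: float = 0.6) -> List[str]:
    """Map one run's per-frame P(red) to responses: 'red', 'green' or 'none'.

    delay: frames between a belief and the response it drives (assumed).
    enter/exit_: hysteresis thresholds (assumed); a response starts once its
    probability reaches `enter` and is released only when it drops below `exit_`.
    """
    state, responses = "none", []
    for t in range(len(p_red)):
        if t >= delay:
            pr = p_red[t - delay]          # the response lags the belief by `delay` frames
            pg = 1.0 - pr
            # Release the current response only if its support fell below exit_
            if (state == "red" and pr < exit_) or (state == "green" and pg < exit_):
                state = "none"
            # Commit to a new response only on strong evidence
            if state == "none":
                if pr >= enter:
                    state = "red"
                elif pg >= enter:
                    state = "green"
        responses.append(state)
    return responses


def aggregate(runs: Sequence[Sequence[float]], **kw) -> List[Dict[str, float]]:
    """Proportion of pseudo-participants giving each response at every frame."""
    streams = [decide(r, **kw) for r in runs]
    n = len(streams)
    return [{k: Counter(frame)[k] / n for k in ("red", "green", "none")}
            for frame in zip(*streams)]


if __name__ == "__main__":
    runs = [[0.5, 0.7, 0.85, 0.9, 0.7], [0.5, 0.4, 0.3, 0.15, 0.1]]
    print(aggregate(runs, delay=1))
```

The gap between `enter` and `exit_` is what keeps a simulated participant from flickering between responses when a belief hovers near a single threshold.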

JTAP produces current and future beliefs over a single object's position, speed, & direction. In this example, its beliefs about whether the ball will hit red or green first spread out under occlusion and then sharpen toward red once the ball is visible again, while in the visible case they stay confidently red.

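To illustrate how beliefs over position, speed and direction can be turned into a red-vs-green prediction, here is a hedged sketch that rolls each (equally weighted) particle forward with wall bounces and counts which region it reaches first. The rectangular box, the region layout, `dt`, `max_steps` and all names are hypothetical simplifications; the real scenes also contain occluders and black barriers, which this sketch ignores.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Rect = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


@dataclass
class Particle:
    x: float
    y: float
    speed: float
    direction: float  # heading in radians


def _inside(x: float, y: float, r: Rect) -> bool:
    return r[0] <= x <= r[2] and r[1] <= y <= r[3]


def rollout(p: Particle, width: float, height: float, red: Rect, green: Rect,
            dt: float = 0.05, max_steps: int = 2000) -> str:
    """Simulate one particle forward; report which region it reaches first."""
    x, y = p.x, p.y
    vx, vy = p.speed * math.cos(p.direction), p.speed * math.sin(p.direction)
    for _ in range(max_steps):
        x, y = x + vx * dt, y + vy * dt
        # Elastic reflection off the outer walls of the scene
        if x < 0.0:
            x, vx = -x, -vx
        elif x > width:
            x, vx = 2.0 * width - x, -vx
        if y < 0.0:
            y, vy = -y, -vy
        elif y > height:
            y, vy = 2.0 * height - y, -vy
        if _inside(x, y, red):
            return "red"
        if _inside(x, y, green):
            return "green"
    return "none"  # neither region reached within the simulation horizon


def outcome_probs(particles: List[Particle], **scene) -> Dict[str, float]:
    """Fraction of equally weighted particles whose rollout reaches each region first."""
    counts = {"red": 0, "green": 0, "none": 0}
    for p in particles:
        counts[rollout(p, **scene)] += 1
    return {k: v / len(particles) for k, v in counts.items()}
```

With a broad spread of particles (as under occlusion) the red/green split is graded; when the particles agree (as when the ball is visible) it collapses toward one outcome.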

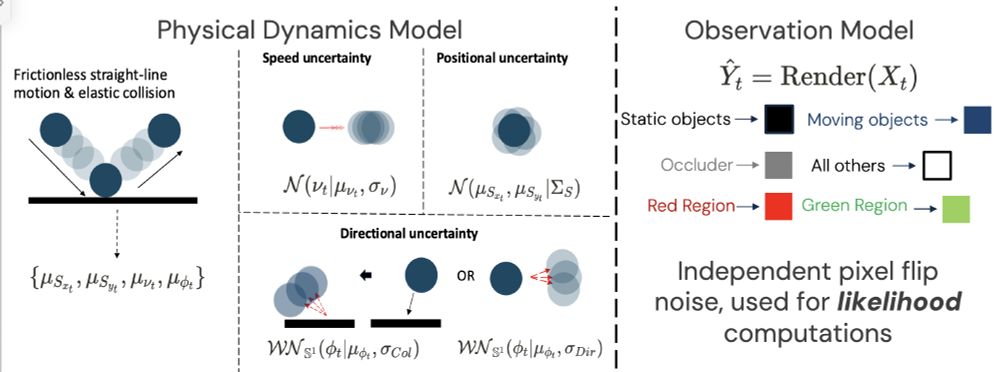

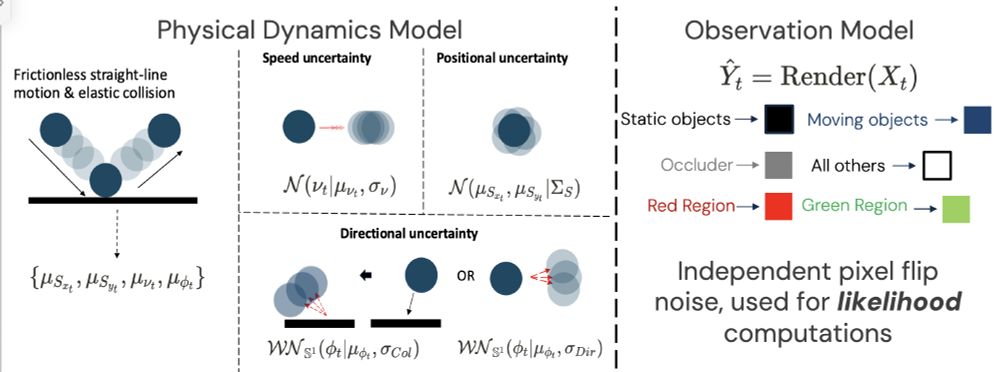

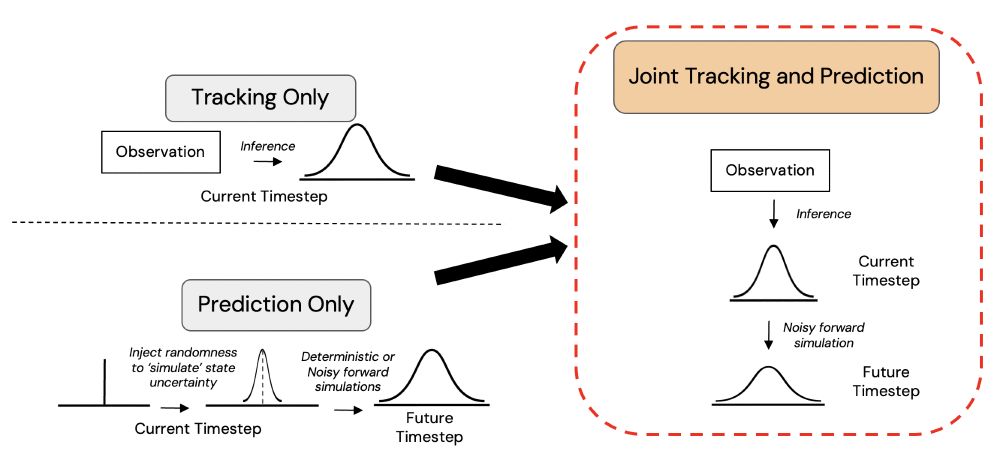

We approach this with the Joint Tracking and Prediction (JTAP) model: a structured probabilistic program that combines 2D physical dynamics with an observation model. Posterior beliefs are inferred via a particle-based approximation using a Sequential Monte Carlo algorithm.

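For readers new to Sequential Monte Carlo, here is a minimal bootstrap particle filter on a 1D toy problem. It is not JTAP's inference code: the occluder interval, noise levels, prior and the `None`-means-not-visible observation convention are all hypothetical. The key detail is the likelihood for an empty observation, which penalizes particles that predict the object should be visible, so "seeing nothing" still moves the beliefs.

```python
import math
import random
from statistics import fmean
from typing import List, Optional, Tuple

State = Tuple[float, float]  # (position x, velocity v)

# Hypothetical 1D scene and noise settings
OCC_LO, OCC_HI = 4.0, 6.0          # occluder covers OCC_LO <= x <= OCC_HI
DT, VEL_SD, OBS_SD, EPS = 0.1, 0.05, 0.1, 1e-6


def occluded(x: float) -> bool:
    return OCC_LO <= x <= OCC_HI


def transition(s: State, rng: random.Random) -> State:
    """Constant-velocity dynamics with small random velocity perturbations."""
    x, v = s
    v += rng.gauss(0.0, VEL_SD)
    return (x + v * DT, v)


def likelihood(s: State, obs: Optional[float]) -> float:
    """obs is the seen position, or None when nothing is seen."""
    x, _ = s
    if obs is None:
        return 1.0 if occluded(x) else EPS   # seeing nothing fits only hidden states
    if occluded(x):
        return EPS                           # the object was seen, so it is not hidden
    return EPS + math.exp(-0.5 * ((obs - x) / OBS_SD) ** 2)


def particle_filter(observations: List[Optional[float]], n: int = 500,
                    seed: int = 0) -> List[Tuple[float, float]]:
    """Bootstrap SMC: propagate, weight, resample; returns (mean x, mean v) per step."""
    rng = random.Random(seed)
    particles = [(rng.gauss(3.0, 0.2), rng.gauss(1.0, 0.3)) for _ in range(n)]  # assumed prior
    history = []
    for obs in observations:
        particles = [transition(p, rng) for p in particles]
        weights = [likelihood(p, obs) for p in particles]
        particles = rng.choices(particles, weights=weights, k=n)
        history.append((fmean(p[0] for p in particles), fmean(p[1] for p in particles)))
    return history


if __name__ == "__main__":
    true_x = [3.0 + 0.1 * t for t in range(1, 16)]   # ball moves right at speed 1
    obs = [None if occluded(x) else x for x in true_x]
    for t, (mx, mv) in enumerate(particle_filter(obs), start=1):
        print(t, obs[t - 1] is None, round(mx, 2), round(mv, 2))
```

Resampling at every step keeps the sketch short; practical filters often resample only when the effective sample size drops.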

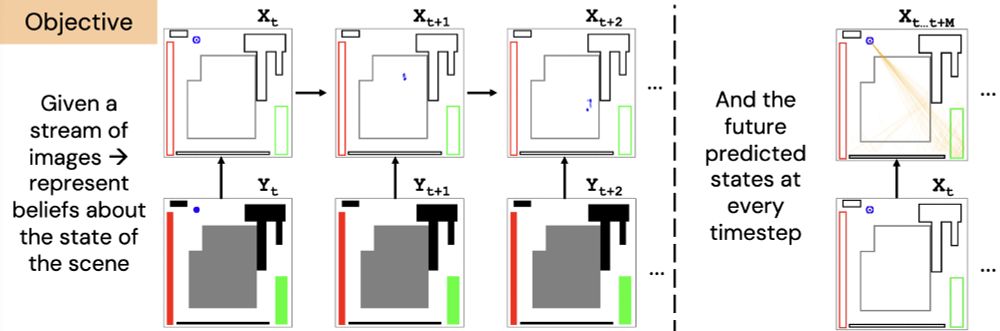

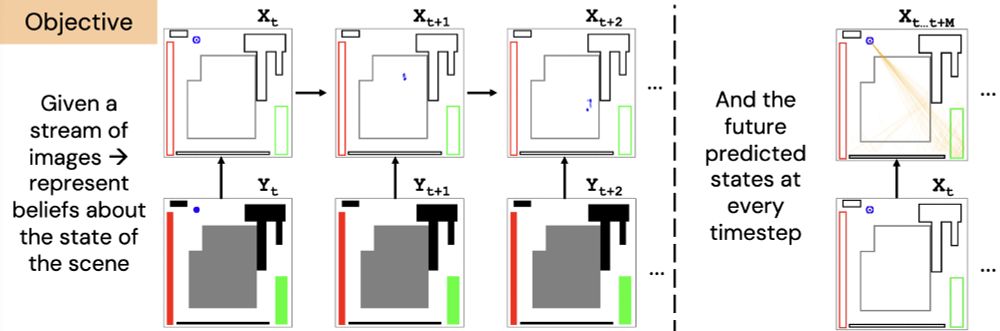

Computationally, we frame this as a Bayesian inference problem: jointly inferring an observer’s beliefs about an object’s current and future state from a stream of images.

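In generic filtering notation (the symbols are our assumption, not the paper's): let $z_t$ be the object's latent state (position, speed, direction), $o_{1:t}$ the images seen so far, and $c \in \{\text{red}, \text{green}\}$ the outcome. Tracking is the usual recursive posterior, and prediction averages the outcome probability over that posterior, which a set of $N$ weighted particles $z_t^{(i)}$ with weights $w_t^{(i)}$ approximates:

```latex
\begin{aligned}
p(z_t \mid o_{1:t}) &\propto p(o_t \mid z_t) \int p(z_t \mid z_{t-1})\, p(z_{t-1} \mid o_{1:t-1})\, dz_{t-1} \\
P(c \mid o_{1:t}) &= \int P(c \mid z_t)\, p(z_t \mid o_{1:t})\, dz_t \;\approx\; \sum_{i=1}^{N} w_t^{(i)}\, P\big(c \mid z_t^{(i)}\big)
\end{aligned}
```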

We started by asking participants to continuously predict whether a ball moving in a 2.5D scene with gray occluders and black barriers would hit the red or green region first. People's responses reflected varying levels of uncertainty, yet were highly reliable (ICC1k = 0.952).

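For reference, ICC1k is the one-way random-effects intraclass correlation for the mean of k raters (Shrout & Fleiss's ICC(1,k)). Below is a minimal stdlib sketch of the standard formula, (MS_between − MS_within) / MS_between. How the thread's data were arranged into targets (rows) and raters (columns) is not stated, so any such mapping, e.g. participants as columns, is an assumption.

```python
from statistics import fmean
from typing import Sequence


def icc1k(ratings: Sequence[Sequence[float]]) -> float:
    """ICC(1,k): one-way random effects, reliability of the k-rater mean.

    Rows are targets (n >= 2), columns are raters (k >= 2).
    """
    n, k = len(ratings), len(ratings[0])
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need an n x k table with n >= 2 and k >= 2")
    grand = fmean(x for row in ratings for x in row)
    row_means = [fmean(row) for row in ratings]
    # Between-target mean square (one-way ANOVA)
    ms_between = k * sum((m - grand) ** 2 for m in row_means) / (n - 1)
    # Within-target mean square: disagreement among raters on the same target
    ms_within = sum((x - m) ** 2 for row, m in zip(ratings, row_means) for x in row) / (n * (k - 1))
    return (ms_between - ms_within) / ms_between


if __name__ == "__main__":
    print(icc1k([[1, 2], [3, 4], [5, 6]]))
```

Values near 1 mean that averaged responses are highly consistent across raters relative to how much targets differ from one another.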

State uncertainty from occlusion and uncertainty about future physical dynamics are often studied separately. We explore whether people jointly integrate the two to form intuitive beliefs about what will happen next. Can machines capture this kind of physically grounded reasoning?


One remarkable aspect of human physical reasoning and perception is our ability to track and predict the motion of hidden objects. Come see my poster at #CogSci2025 (P1-D-44), where I show how people “see through occlusion”, and how we model it using Bayesian inference. @cogscisociety.bsky.social

