CroCoDL Workshop at #ICCV, next talk coming up in Room 301B:

16.30 - 17.00: @sattlertorsten.bsky.social on Vision Localization Across Modalities

full schedule: localizoo.com/workshop

@hermannblum.bsky.social

Assistant professor for ML & CV for robot perception @ Uni Bonn & Lamarr Institute. Interested in self-learning & autonomous robots; likes all the messy hardware problems of real-world experiments. https://rpl.uni-bonn.de/ https://hermannblum.net/

In 30 mins! CroCoDL Poster Session (posters #248-#257), during the official coffee break 3pm - 4pm.

Contributed works cover Visual Localization, Visual Place Recognition, Room Layout Estimation, Novel View Synthesis, and 3D Reconstruction.

CroCoDL Workshop at #ICCV, next talk coming up in Room 301B:

14.15 - 14.45: @ayoungk.bsky.social on Bridging heterogeneous sensors for robust and generalizable localization

full schedule: localizoo.com/workshop/

CroCoDL Workshop at #ICCV, next talk coming up in Room 301B:

13.15 - 13.45: @gabrielacsurka.bsky.social on Privacy Preserving Visual Localization

full schedule: buff.ly/kM1Ompf

Attending #ICCV? Join the CroCoDL workshop this afternoon!

localizoo.com/workshop

Speakers: @gabrielacsurka.bsky.social, @ayoungk.bsky.social, David Caruso, @sattlertorsten.bsky.social

w/ @zbauer.bsky.social @mihaidusmanu.bsky.social @linfeipan.bsky.social @marcpollefeys.bsky.social

Today a delivery arrived that marks an exciting milestone for my lab: our first research grant!

05.09.2025 19:58

📰Paper: arxiv.org/abs/2501.04597

🔥Code: github.com/cvg/Frontier...

🌍Page: boysun045.github.io/FrontierNet-...

w/ Boyang Sun, Hanzhi Chen, Stefan Leutenegger, Cesar Cadena, @marcpollefeys.bsky.social

We just released code, models, and data for FrontierNet!

Key idea 💡 Instead of detecting frontiers in a map, we directly predict them from images. Hence, FrontierNet can implicitly learn visual semantic priors to estimate information gain, which speeds up exploration compared to geometric heuristics.

Honestly, ICCV 2025 was the first time I had great papers on my review pile that matched my interests & expertise. WAY better matching in my case than for the last 2 CVPRs.

ofc my standards are low coming from robotics, where matching is based on keywords like "vision for robotics" 🙈

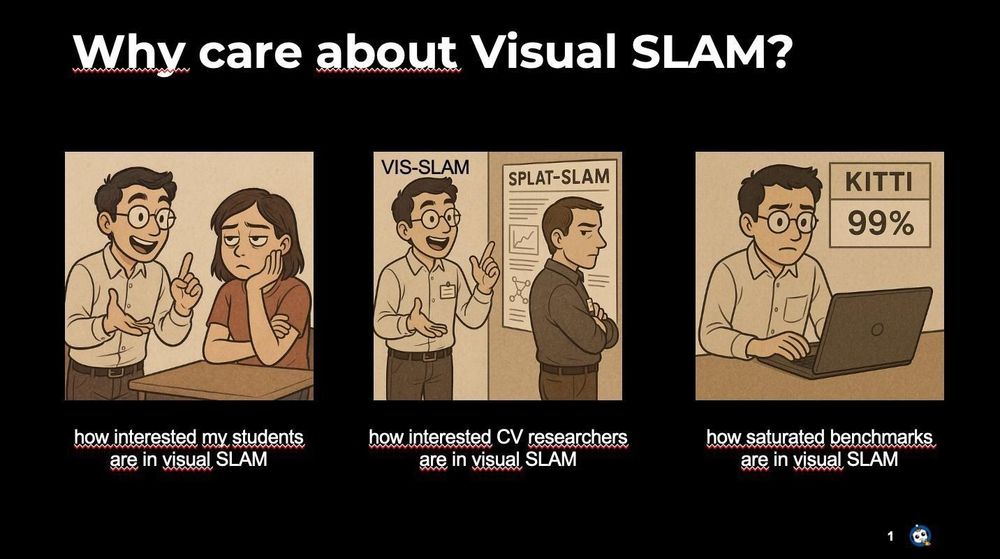

This is the first time in a while I am creating a new talk. This will be fun!

I'll be up later today at the Visual SLAM workshop at @roboticsscisys.bsky.social

buff.ly/ADHxPsX

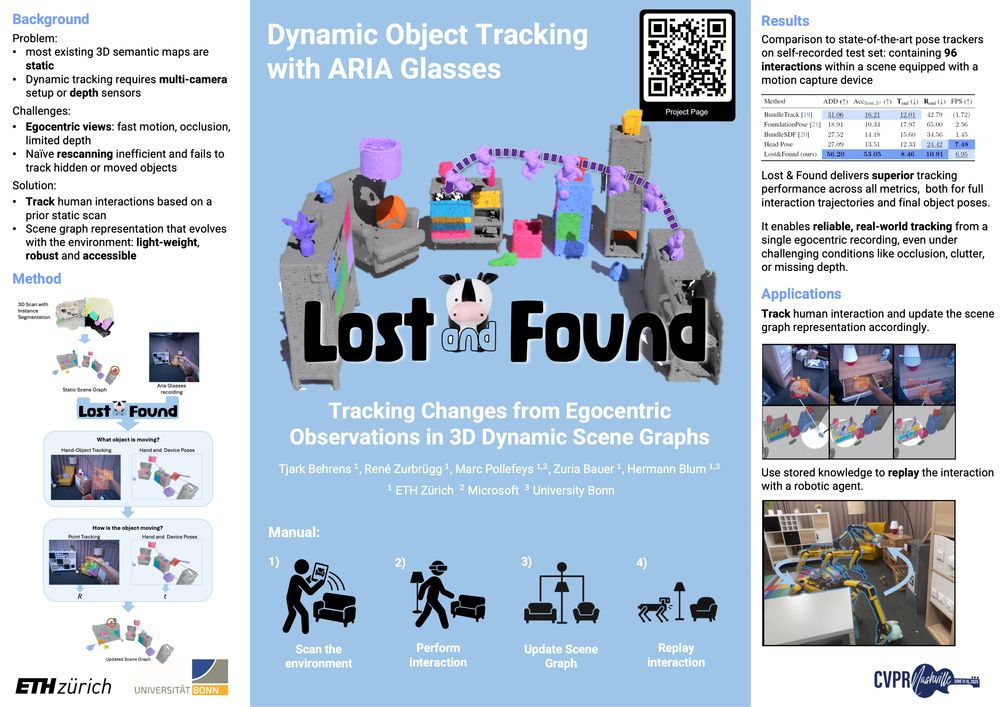

Special thanks to Tjark for creating this beautiful Lost & Found poster that we presented at the #CV4MR workshop.

19.06.2025 14:58

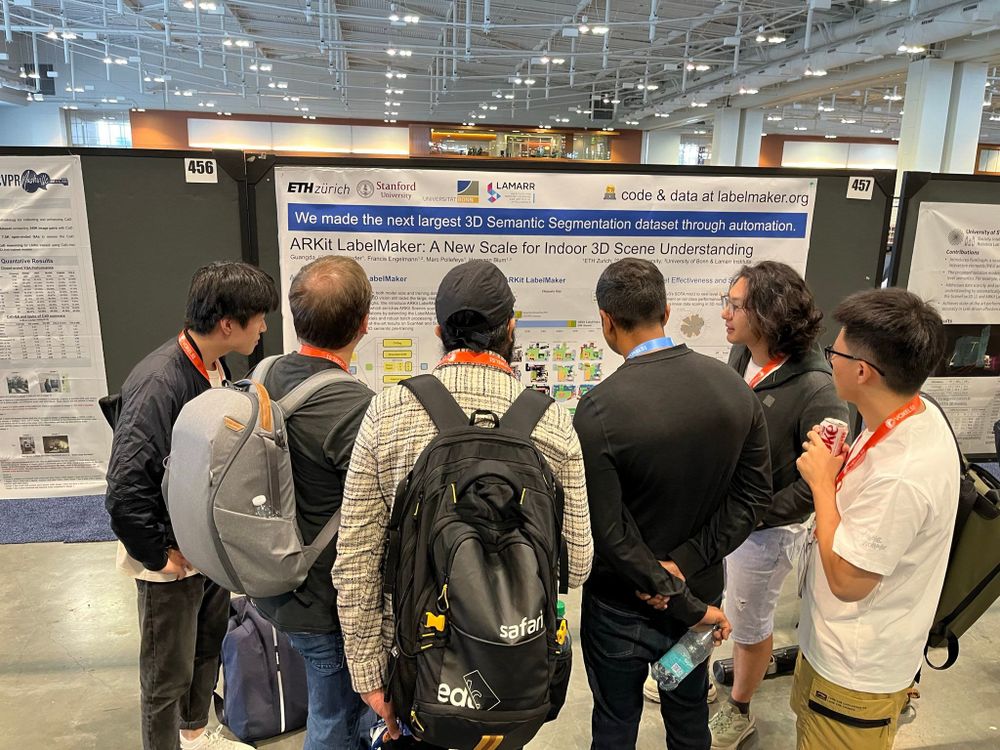

Posters presented:

Guangda presented ARKitLabelMaker buff.ly/XcJHcz2

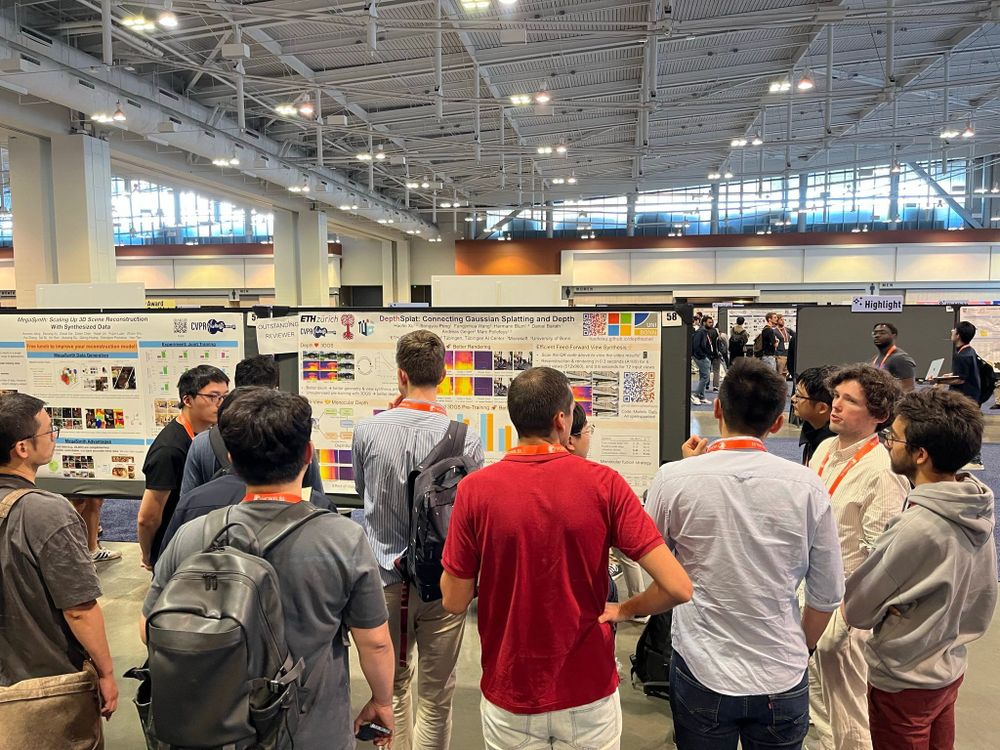

@haofeixu.bsky.social presented DepthSplat buff.ly/T0oWIdi

Dennis presented FunGraph, now accepted to IROS buff.ly/UvgZUzP

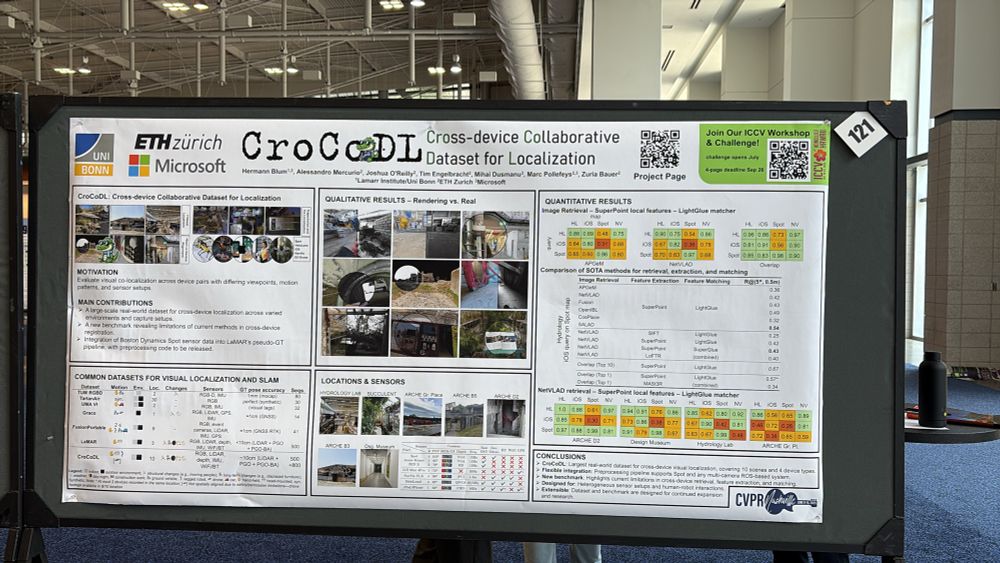

@zbauer.bsky.social, @mihaidusmanu.bsky.social and I presented CroCoDL buff.ly/ZHN2Ir4

Finally arriving home today after attending @cvprconference.bsky.social. This was the first #CVPR that I could attend in person! I expected it to be super crowded but was surprised: there was lots of time and space for chats at the poster session, and the 15-min talks could really go into detail.

19.06.2025 14:58

Do you want to learn more about our novel dataset for cross-device localization? Come by poster 121 and meet CroCoDL 🐊

cc @marcpollefeys.bsky.social @hermannblum.bsky.social @mihaidusmanu.bsky.social @cvprconference.bsky.social @ethz.ch

We just extended our submission deadline for 8-page paper submissions to June 30.

Accepted submissions go into ICCV WS proceedings 📄

all set for our poster lineup at the cv4mr.github.io #cvpr workshop

11.06.2025 15:32

I'll be at CVPR this week and I am actively looking for PhD students (the job announcement will go out the week after). Just send me a message if you are interested in meeting up.

09.06.2025 17:19

Excited to present our #CVPR2025 paper DepthSplat next week!

DepthSplat is a feed-forward model that achieves high-quality Gaussian reconstruction and view synthesis in just 0.6 seconds.

Looking forward to great conversations at the conference!

looking forward to it!

04.06.2025 19:21

If you're watching #eurovision tonight, look out for the robots from ETH!

Really cool to see something I got to work with during my PhD featured as a Swiss highlight 🤖

We are organizing the 1st Workshop on Cross-Device Visual Localization at #ICCV #ICCV2025

Localizing multiple phones, headsets, and robots to a common reference frame is still a real problem in mixed-reality applications. Our new challenge will track progress on this issue.

⏰ paper deadline: June 6

🏠 Introducing DepthSplat: a framework that connects Gaussian splatting with single- and multi-view depth estimation. This enables robust depth modeling and high-quality view synthesis with state-of-the-art results on ScanNet, RealEstate10K, and DL3DV.

🔗 haofeixu.github.io/depthsplat/

Exciting news for LabelMaker!

1️⃣ ARKitLabelMaker, the largest annotated 3D dataset, was accepted to CVPR 2025! This was an amazing effort of Guangda Ji 👏

🔗 labelmaker.org

📄 arxiv.org/abs/2410.13924

2️⃣ Mahta Moshkelgosha extended the pipeline to generate 3D scene graphs:

👩💻 github.com/cvg/LabelMak...

We are thinking about a somewhat similar setup, and it seems you can record RGB-D quite easily with record3d.app. What is the advantage of Final Cut Pro?

18.03.2025 18:55

*Please repost* @sjgreenwood.bsky.social and I just launched a new personalized feed (*please pin*) that we hope will become a "must use" for #academicsky. The feed shows posts about papers filtered by *your* follower network. It's become my default Bluesky experience bsky.app/profile/pape...

10.03.2025 18:14

RA-L is a great model IMO: submit anytime, rapid-publishing (max 6 months including 1 month for revision), journal-style review process yields much better papers, accepted papers are automatically presented at the next ICRA/IROS.

www.ieee-ras.org/publications...

Open-source code now available for MASt3R-SLAM: the best dense visual SLAM system I've ever seen. Real-time and monocular, and easy to run with a live camera or on videos without needing to know the camera calibration. Brilliant work from Eric and Riku.

25.02.2025 17:34

We have an excellent opportunity for a tenured, flagship AI professorship at @unibonn.bsky.social and lamarr-institute.org

The application deadline is the end of March.

www.uni-bonn.de/en/universit...

I don't doubt humanoids will get to where quadrupeds are right now, but my impression is that there is a gap of some years.

21.02.2025 22:35