BayesFlow released version 2.0.4, presented numerous findings at the MathPsych/ICCM 2025 conference at Ohio State University, and expanded its contributor list to 25 active members! Congrats to BayesFlow on all these huge new accomplishments!

13.08.2025 15:08 — 👍 11 🔁 3 💬 0 📌 0

I'm putting together a visualization workshop for PhD students 🧪📊

Looking for examples of the good, the bad, and the ugly.

Do you have examples of a great (or awful) figure? Plots and overview/explainer figures are welcome.

Thanks 🧡

03.06.2025 05:39 — 👍 36 🔁 9 💬 11 📌 0

Introduction – Amortized Bayesian Cognitive Modeling

🧠 Check out the classic examples from Bayesian Cognitive Modeling: A Practical Course (Lee & Wagenmakers, 2013), translated into step-by-step tutorials with BayesFlow!

Interactive version: kucharssim.github.io/bayesflow-co...

PDF: osf.io/preprints/ps...

30.05.2025 14:28 — 👍 29 🔁 14 💬 0 📌 0

OSF

New preprint!

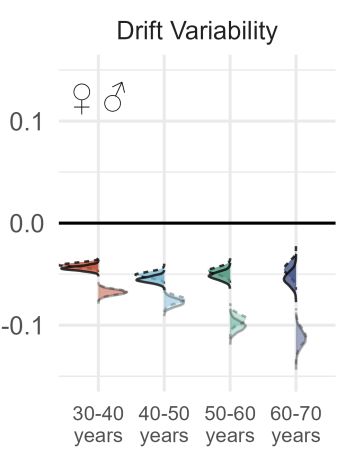

Individual differences in neurophysiological correlates of post-response adaptation: A model-based approach

osf.io/preprints/ps...

This work seeks to extract the effects of response monitoring on decision-making using model-based CogNeuro and methods to study individual differences.

06.03.2025 14:21 — 👍 6 🔁 5 💬 1 📌 0

Congrats, really impressive work (especially providing the many additional resources on the website)!

06.03.2025 14:42 — 👍 0 🔁 0 💬 0 📌 0

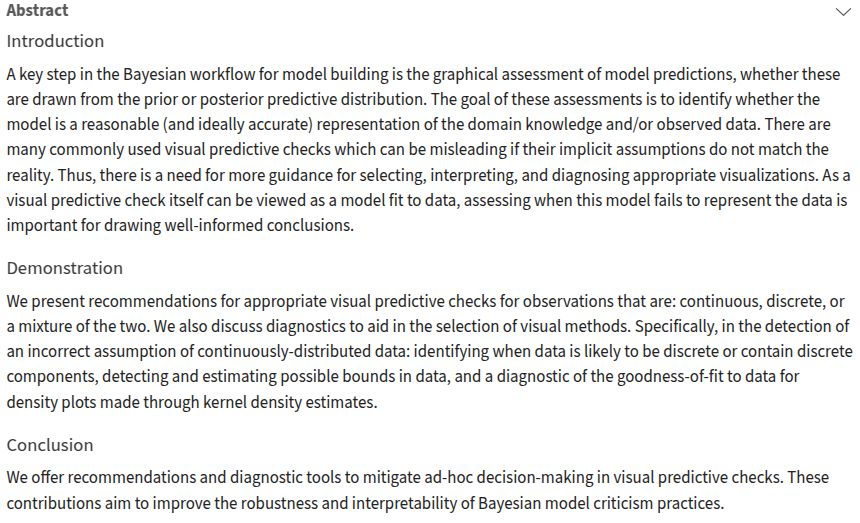

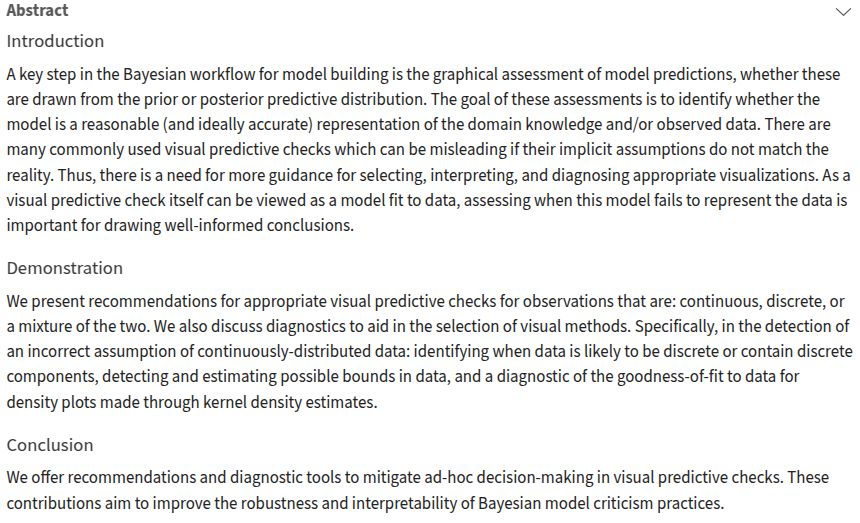

Abstract

Introduction

A key step in the Bayesian workflow for model building is the graphical assessment of model predictions, whether these are drawn from the prior or posterior predictive distribution. The goal of these assessments is to identify whether the model is a reasonable (and ideally accurate) representation of the domain knowledge and/or observed data. There are many commonly used visual predictive checks which can be misleading if their implicit assumptions do not match the reality. Thus, there is a need for more guidance for selecting, interpreting, and diagnosing appropriate visualizations. As a visual predictive check itself can be viewed as a model fit to data, assessing when this model fails to represent the data is important for drawing well-informed conclusions.

Demonstration

We present recommendations for appropriate visual predictive checks for observations that are: continuous, discrete, or a mixture of the two. We also discuss diagnostics to aid in the selection of visual methods. Specifically, in the detection of an incorrect assumption of continuously-distributed data: identifying when data is likely to be discrete or contain discrete components, detecting and estimating possible bounds in data, and a diagnostic of the goodness-of-fit to data for density plots made through kernel density estimates.

Conclusion

We offer recommendations and diagnostic tools to mitigate ad-hoc decision-making in visual predictive checks. These contributions aim to improve the robustness and interpretability of Bayesian model criticism practices.
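One of the diagnostics mentioned above, spotting data that is discrete or has discrete components before drawing a density plot, can be illustrated with a rough sketch. This is not the paper's method: the `tied_fraction` and `looks_discrete` helpers and the 5% threshold are hypothetical choices made only for illustration, on the assumption that truly continuous data almost never contains exactly repeated values.

```python
import numpy as np


def tied_fraction(y):
    """Share of observations whose exact value occurs more than once."""
    y = np.asarray(y, dtype=float)
    _, counts = np.unique(y, return_counts=True)
    return counts[counts > 1].sum() / y.size


def looks_discrete(y, threshold=0.05):
    """Hypothetical rule of thumb: flag data when many values are tied.

    The threshold is an assumption for this sketch, not a recommended value.
    """
    return tied_fraction(y) > threshold


rng = np.random.default_rng(0)

# Continuous draws: exact ties are practically impossible.
continuous = rng.normal(size=1000)

# Count data: nearly every value is repeated.
counts = rng.poisson(3.0, size=1000)

# Mixture: a point mass at zero plus a continuous positive part.
zero_inflated = np.where(rng.random(1000) < 0.3, 0.0, rng.gamma(2.0, size=1000))

for name, data in [("continuous", continuous),
                   ("counts", counts),
                   ("zero_inflated", zero_inflated)]:
    print(name, round(tied_fraction(data), 3), looks_discrete(data))
```

A flag from a check like this would suggest using a bar plot or a mixed display instead of a plain kernel density estimate, which smooths over point masses.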

New paper: Säilynoja, Johnson, Martin, and Vehtari, "Recommendations for visual predictive checks in Bayesian workflow" teemusailynoja.github.io/visual-predi... (also arxiv.org/abs/2503.01509)

04.03.2025 13:15 — 👍 64 🔁 20 💬 4 📌 0

A study with 5M+ data points explores the link between cognitive parameters and socioeconomic outcomes: The stability of processing speed was the strongest predictor.

BayesFlow facilitated efficient inference for complex decision-making models, scaling Bayesian workflows to big data.

🔗Paper

03.02.2025 12:21 — 👍 19 🔁 6 💬 0 📌 0

A reminder of our talk this Thursday (30th Jan) at 11am GMT. Paul Bürkner (TU Dortmund University) will talk about "Amortized Mixture and Multilevel Models". Sign up at listserv.csv.warwick... to receive the link.

27.01.2025 09:04 — 👍 18 🔁 6 💬 0 📌 1

Scholar inbox is the best paper recommender and I cannot recommend it enough as a conference companion. I don’t know how people do poster sessions without it.

16.01.2025 21:39 — 👍 29 🔁 1 💬 1 📌 0

1️⃣ An agent-based model simulates a dynamic population of professional speed climbers.

2️⃣ BayesFlow handles amortized parameter estimation in the SBI setting.

📣 Shoutout to @masonyoungblood.bsky.social & @sampassmore.bsky.social

📄 Preprint: osf.io/preprints/ps...

💻 Code: github.com/masonyoungbl...

10.12.2024 01:34 — 👍 42 🔁 6 💬 0 📌 0

The meme that never dies ✨

07.12.2024 10:03 — 👍 4 🔁 0 💬 0 📌 0

06.12.2024 12:52 — 👍 3 🔁 0 💬 0 📌 0


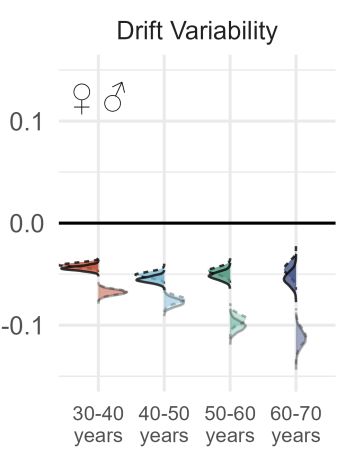

Check out this project on modeling stationary and time-varying parameters with BayesFlow.

The family of methods is called "neural superstatistics". How can it not be cool!? 😎

👨‍💻 Led by @schumacherlu.bsky.social

06.12.2024 12:25 — 👍 11 🔁 3 💬 0 📌 0

I can definitely relate to looking up your own writing to figure out how you actually did things 😅

27.11.2024 09:42 — 👍 1 🔁 0 💬 0 📌 0

@dtfrazier.bsky.social

26.11.2024 08:56 — 👍 1 🔁 0 💬 1 📌 0

Stellar TL;DR of our recent work by our team! ✨

26.11.2024 08:47 — 👍 2 🔁 0 💬 0 📌 0

To celebrate the new beginnings on Bluesky, let's reminisce about one of our highlights from the old days:

The unexpected shout-out by @fchollet.bsky.social that made everyone go crazy on the BayesFlow Slack server and led to a 15% increase in GitHub stars.

22.11.2024 22:37 — 👍 11 🔁 3 💬 0 📌 0

GitHub - bayesflow-org/bayesflow at dev

A Python library for amortized Bayesian workflows using generative neural networks.

The beta version of BayesFlow 2.0 is becoming more powerful and stable by the day. If you are curious about Amortized Bayesian Inference, give BayesFlow a try!

github.com/bayesflow-or...

22.11.2024 08:52 — 👍 118 🔁 25 💬 5 📌 1

Seminar on Advances in Probabilistic Machine Learning

This seminar series aims to provide a platform for young researchers (PhD student or post-doc level) to give invited talks about their research, intending to have a diverse set of talks & speakers on ...

For those who don’t know yet, I am organising an online talk series together with Arno Solin on “Advances in Probabilistic Machine Learning (APML)”.

It’s free for everyone to join and support early career researchers!

You can register and check out the schedule here: aaltoml.github.io/apml/

20.11.2024 20:33 — 👍 93 🔁 30 💬 2 📌 1

The first list filled up, so here's a second list of AI for Science researchers on bluesky.

Let me know if I missed you / if you'd like to join!

bsky.app/starter-pack...

20.11.2024 08:56 — 👍 70 🔁 29 💬 58 📌 0

I'm making a list of AI for Science researchers on bluesky — let me know if I missed you / if you'd like to join!

go.bsky.app/AcP9Lix

10.11.2024 00:11 — 👍 246 🔁 90 💬 160 📌 5

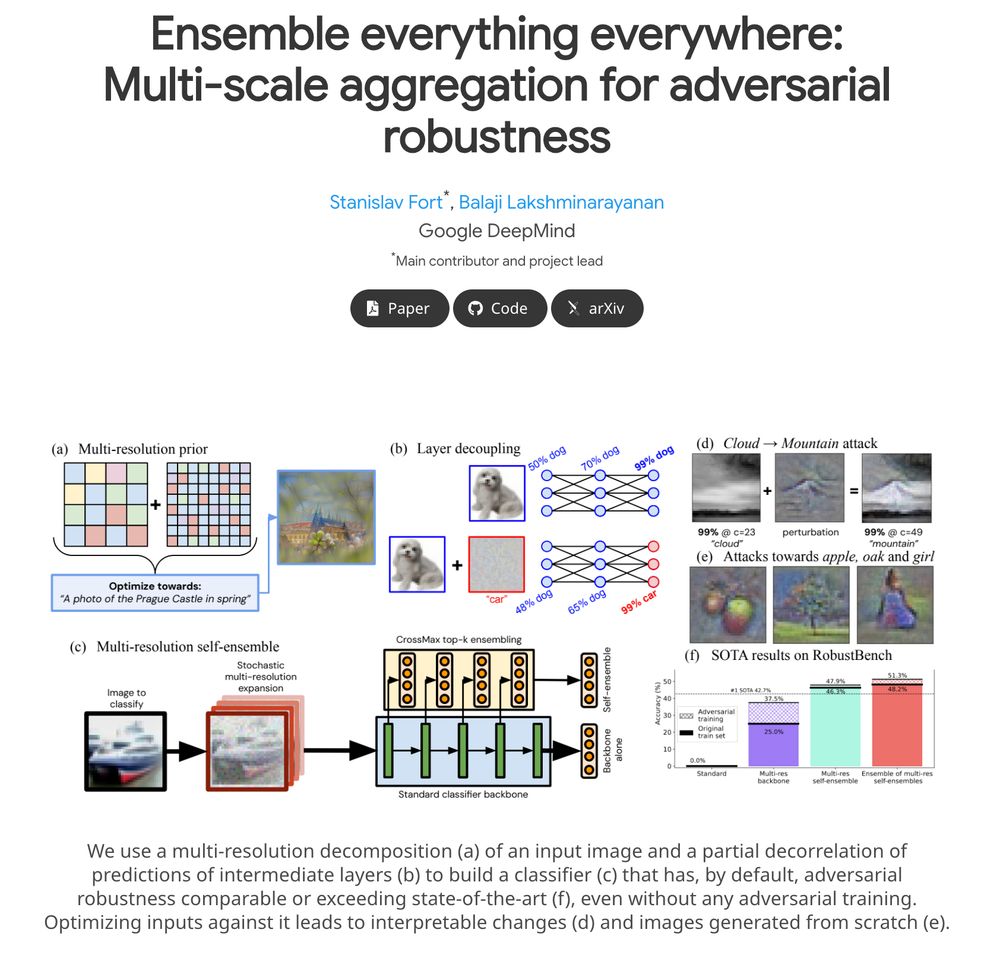

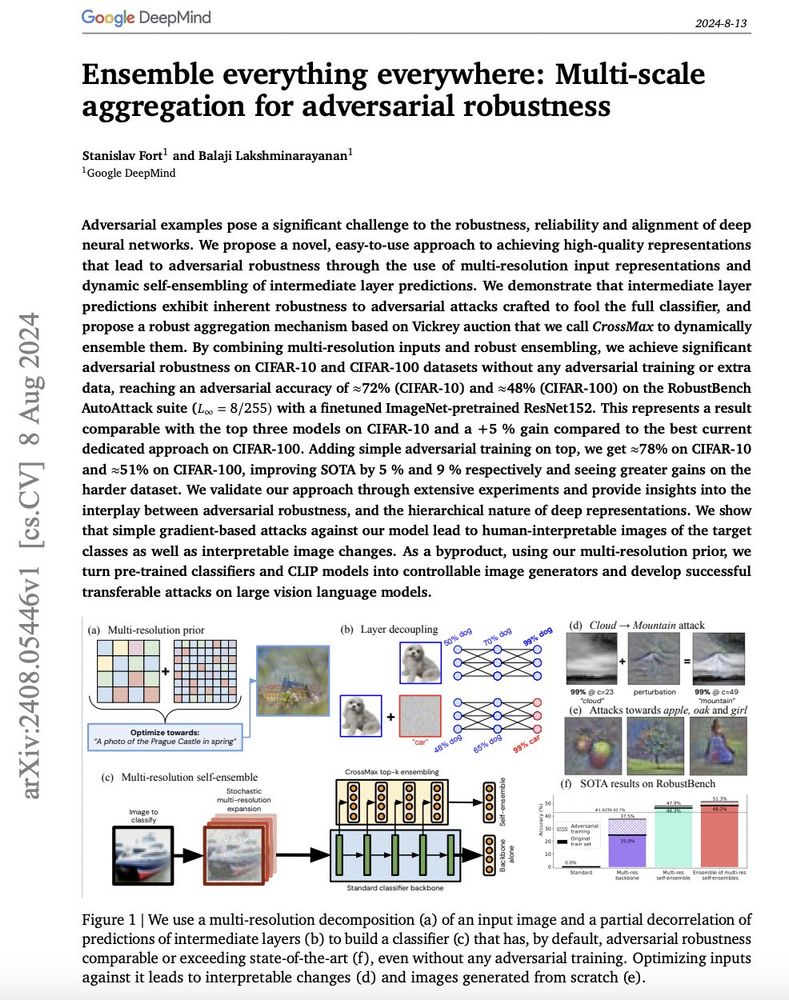

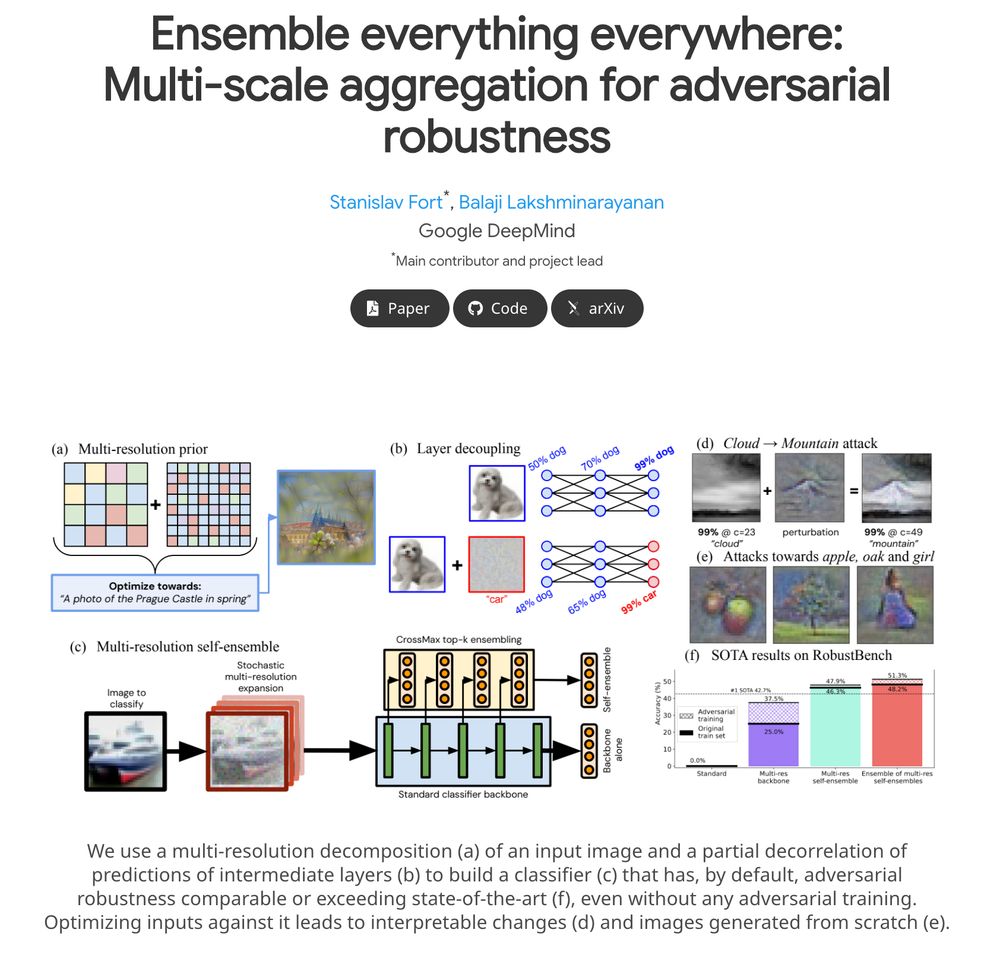

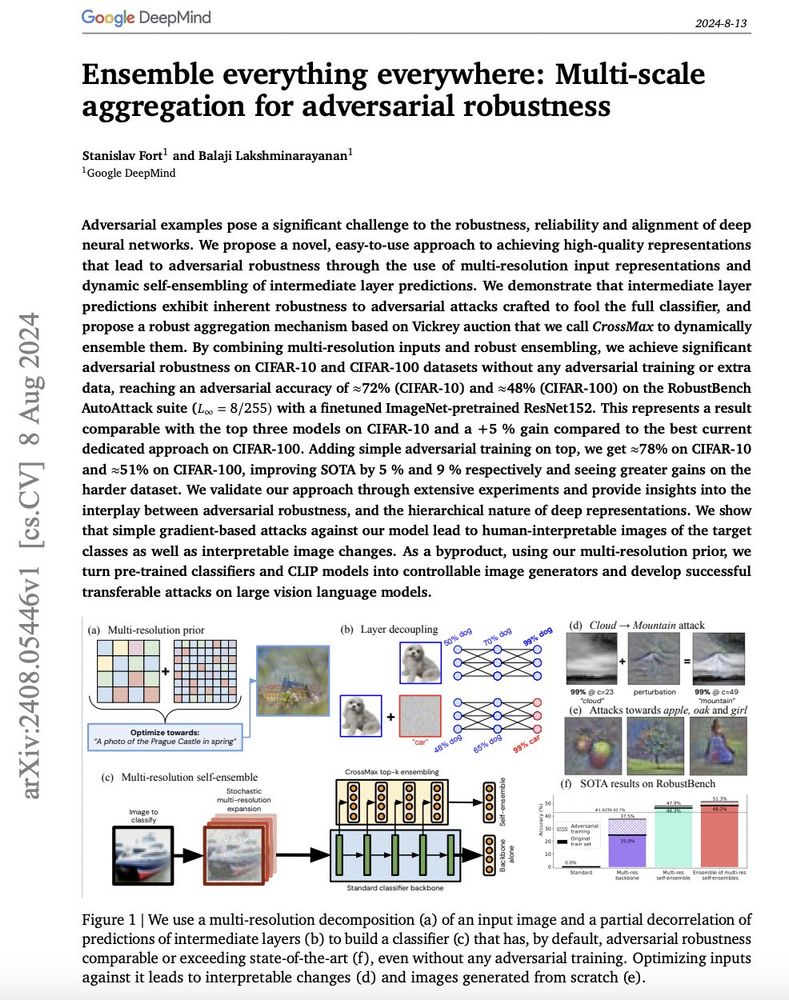

✨ Super excited to share our paper **Ensemble everything everywhere: Multi-scale aggregation for adversarial robustness** arxiv.org/abs/2408.05446 ✨

Inspired by biology we 1) get adversarial robustness + interpretability for free, 2) turn classifiers into generators & 3) design attacks on GPT-4

19.11.2024 18:03 — 👍 32 🔁 5 💬 2 📌 1

Bluesky now has over 20M people!! 🎉

We've been adding over a million users per day for the last few days. To celebrate, here are 20 fun facts about Bluesky:

19.11.2024 18:19 — 👍 131074 🔁 16191 💬 3089 📌 1407

🙋

19.11.2024 11:53 — 👍 0 🔁 0 💬 1 📌 0

Same here :)

19.11.2024 08:55 — 👍 1 🔁 0 💬 0 📌 0

🙋‍♂️ Working on deep learning for taming complex cognitive models.

19.11.2024 08:49 — 👍 0 🔁 0 💬 0 📌 0

Would be great if you could add me!

19.11.2024 08:30 — 👍 1 🔁 0 💬 0 📌 0

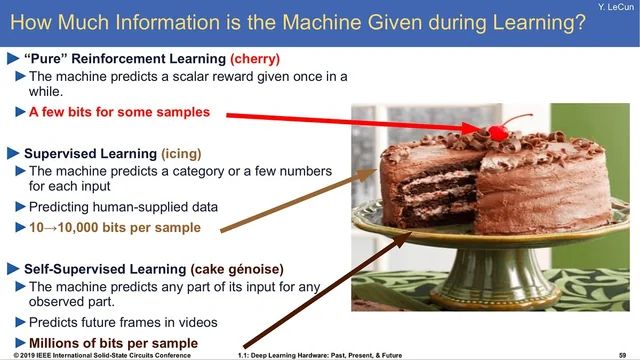

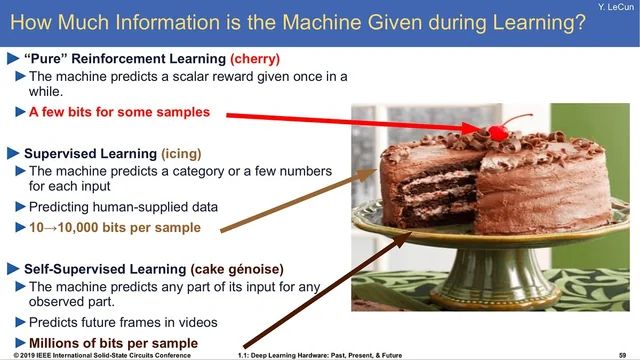

Yann LeCun’s analogy of intelligence being a cake of self-supervised, supervised, and RL

Eight years later, Yann LeCun’s cake 🍰 analogy was spot on: self-supervised > supervised > RL

> “If intelligence is a cake, the bulk of the cake is unsupervised learning, the icing on the cake is supervised learning, and the cherry on the cake is reinforcement learning (RL).”

17.11.2024 16:02 — 👍 94 🔁 12 💬 10 📌 2

Well, at least in the long run... 😄

17.11.2024 17:08 — 👍 1 🔁 0 💬 0 📌 0

I'm faculty at CSU Stan, interested in #rstats #healthyAging #quartoPub #python #statistics. I might share content related to quantitative methods from a diverse range of fields.

Bioinformatics Scientist @ BioNTech

#bioinformatics #AI #immunology #cancer #vaccines

Opinions my own, if you don't like them I have others

Expressive probabilistic programming language for writing statistical models. Fast Bayesian inference. Interfaces for Python, Julia, R, and the Unix shell. A rich ecosystem of tools for validation and visualization.

Home https://mc-stan.org/

Author of Mickey7, Mal Goes to War, and Three Days in April, among other things. I also make maple syrup.

University of Cambridge and

Max Planck Institute for Intelligent Systems

I'm interested in amortized inference/PFNs/in-context learning for challenging probabilistic and causal problems.

https://arikreuter.github.io/

Associate Professor of Quantitative Psychology at Florida International University (FIU).

Web: timhayesquant.com

PostDoc at TU Dortmund

Bayesian stats | Eye-tracking

He/him

Scientific AI/ machine learning, dynamical systems (reconstruction), generative surrogate models of brains & behavior, applications in neuroscience & mental health

Astronomer here! I’m a Hungarian-American radio astronomer, alias /r/Andromeda321 on Reddit. Assistant professor at the University of Oregon

PhD student in CompNeuro - 🇲🇽@🇩🇪

AI professor at Caltech. General Chair ICLR 2025.

http://www.yisongyue.com

AI for humans and for science.

https://danmackinlay.name

Research scientist @ CSIRO 🇦🇺

I'm that blogger from your search result sources list. Research areas: ml for physics, causal inference, AI safety and economic

phd student @KU Leuven, VIB.AI

comp neuro and machine learning

goncalveslab.sites.vib.be/en

🧙🏻‍♀️ scientist at Meta NYC | http://bamos.github.io

computational cognitive scientist @tuebingen

lenarddome.github.io

Astronomer, writer and zookeeper. Oxford, Gresham and the Zooniverse. The human half of the Dog Stars podcast. New book: 'Our Accidental Universe' (UK/rest of world) and 'Accidental Astronomy' (US) now out.

Cognitive scientist seeking to reverse engineer the human cognitive toolkit. Asst Prof of Psychology at Stanford. Lab website: https://cogtoolslab.github.io

Portland-based mathematician and software engineer. Building a homomorphic encryption compiler at Google.

https://jeremykun.com

https://pimbook.org

https://pmfpbook.org

https://buttondown.email/j2kun

https://heir.dev
