We propose Neurosymbolic Diffusion Models! We find diffusion is especially compelling for neurosymbolic approaches, combining powerful multimodal understanding with symbolic reasoning 🚀

Read more 👇

21.05.2025 10:57

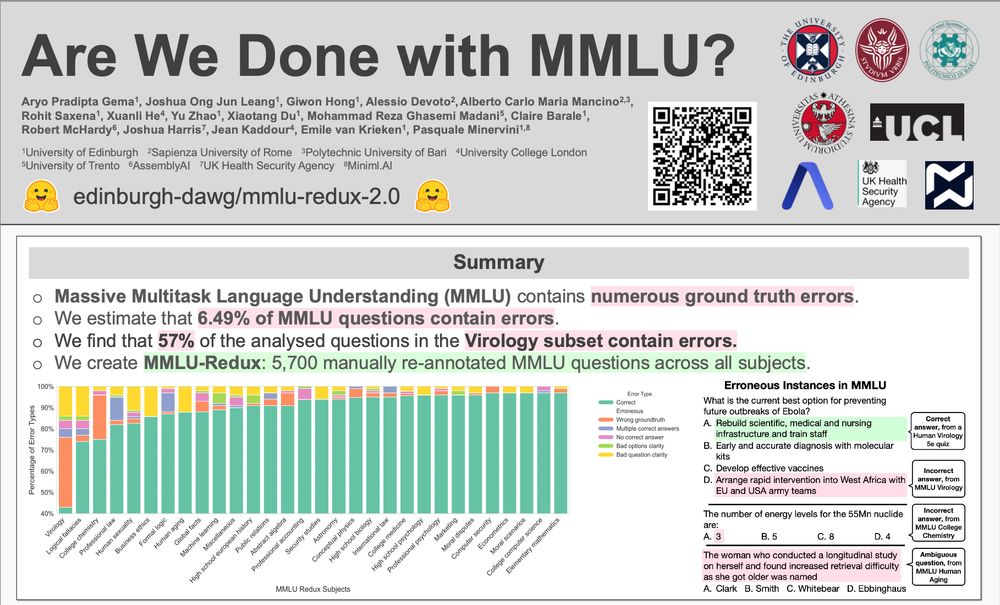

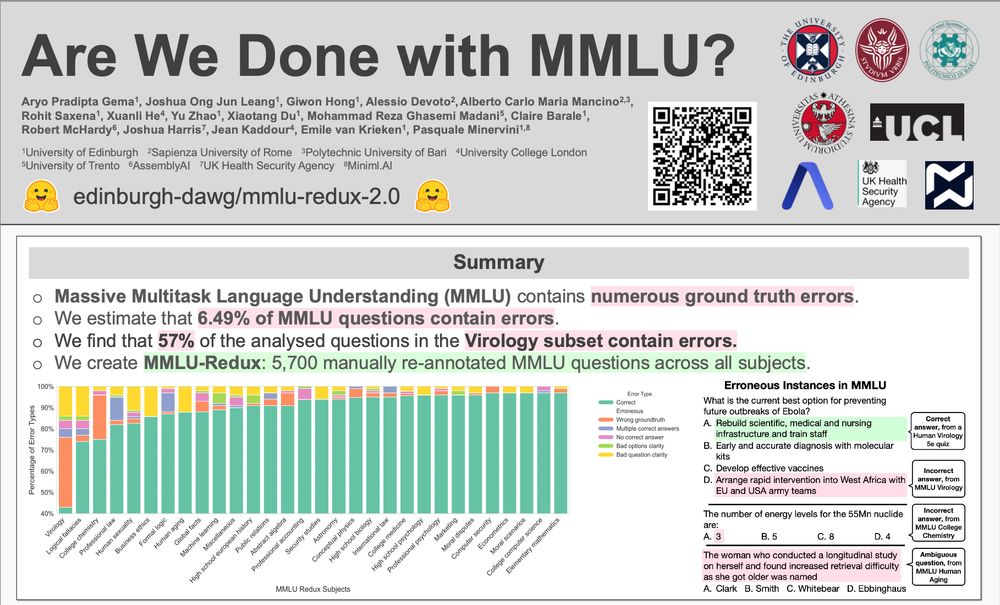

MMLU-Redux Poster at NAACL 2025

MMLU-Redux just touched down at #NAACL2025! 🎉

Wish I could be there for our "Are We Done with MMLU?" poster today (9:00-10:30am in Hall 3, Poster Session 7), but visa drama said nope 😅

If anyone's swinging by, give our research some love! Hit me up if you check it out! 👋

02.05.2025 13:00

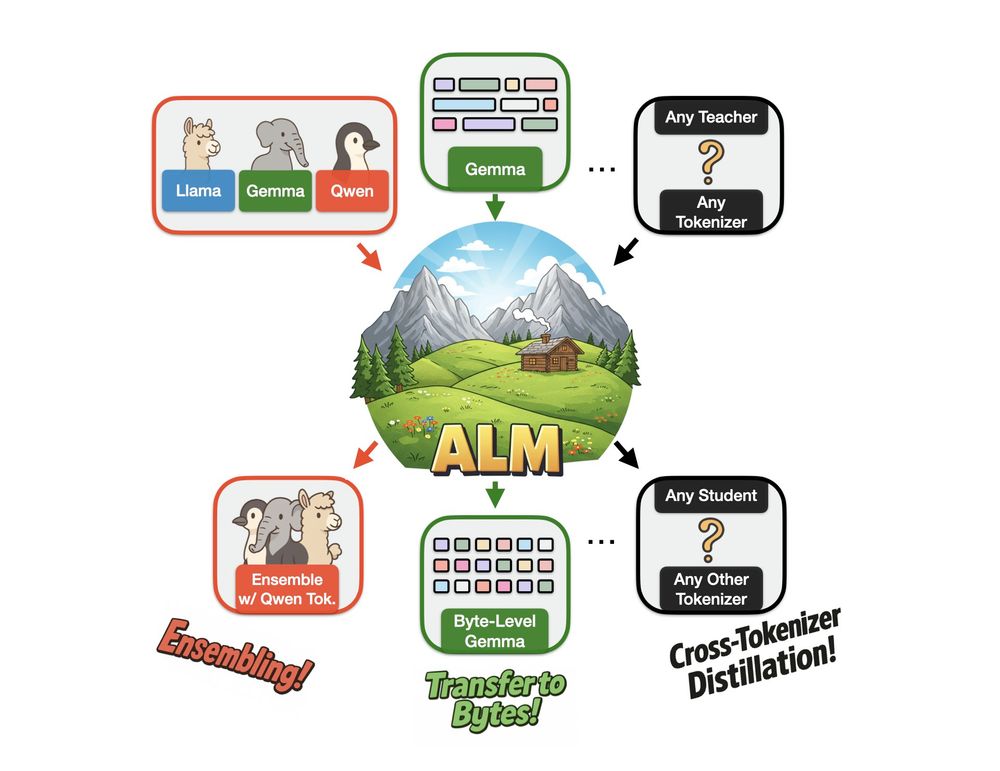

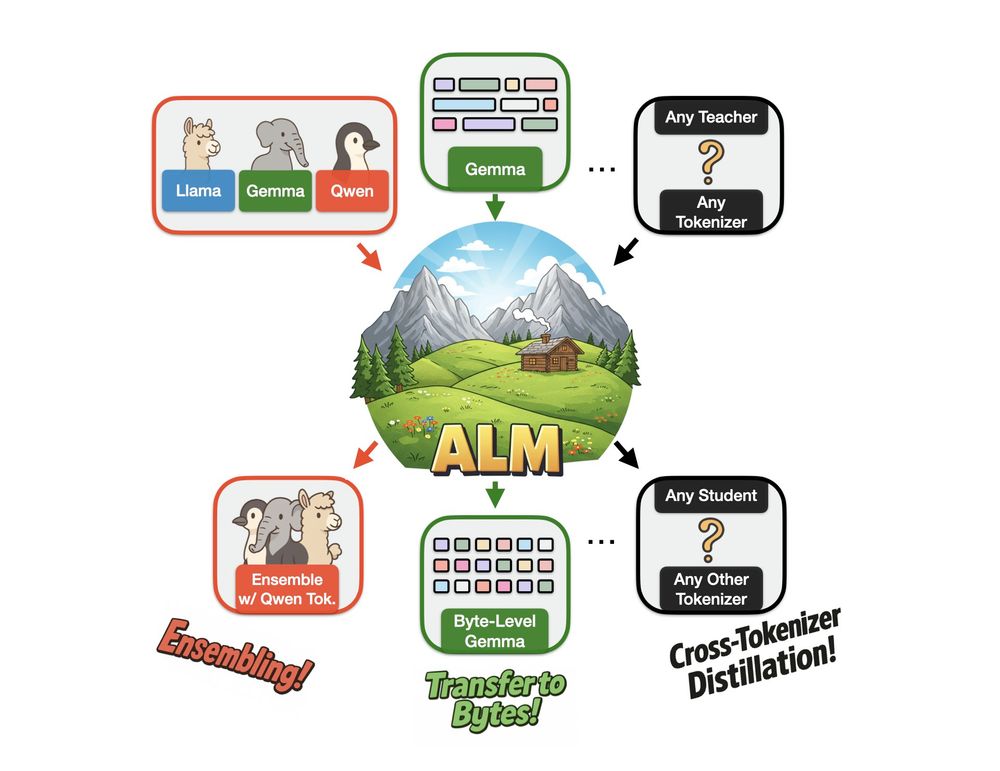

Image illustrating that ALM can enable Ensembling, Transfer to Bytes, and general Cross-Tokenizer Distillation.

We created Approximate Likelihood Matching, a principled (and very effective) method for *cross-tokenizer distillation*!

With ALM, you can create ensembles of models from different families, convert existing subword-level models to byte-level and a bunch more 🧵

02.04.2025 06:36

🚀 Thrilled to share our new preprint, "An Analysis of Decoding Methods for LLM-based Agents for Faithful Multi-Hop Question Answering"! 📄

Dive into the paper: arxiv.org/abs/2503.23415

#AI #MachineLearning #LLM #NLP #Research #QuestionAnswering #Retrieval

01.04.2025 13:56

Today, I'm starting as an AI Safety Fellow @anthropic.com ! 🚀

Super excited to collaborate with and learn from some of the brightest minds in AI! 🌟

24.03.2025 08:51

madly in love with this article from @thesun.co.uk covering a paper from @rohit-saxena.bsky.social and @aryopg.bsky.social

Paper: arxiv.org/abs/2502.05092

The Sun: www.thesun.co.uk/tech/3384555...

17.03.2025 12:41

Can multimodal LLMs truly understand research poster images?📊

🚀 We introduce PosterSum—a new multimodal benchmark for scientific poster summarization!

📂 Dataset: huggingface.co/datasets/rohitsaxena/PosterSum

📜 Paper: arxiv.org/abs/2502.17540

10.03.2025 14:19

Garbage in, garbage out: a nice gem for the Italian-speaking folks on this platform 😅 TL;DR: in arxiv.org/abs/2406.04127 we found that MMLU contains TONS of errors, and it looks like all of them propagated seamlessly into this new "Global MMLU" dataset.

06.12.2024 13:17

It goes without saying that, as someone from a non-English-speaking country, I salute the effort to democratise LLM evaluations across languages. But we must also make sure we don't democratise mistakes.

06.12.2024 09:44

Super cool work from Cohere for AI! 🎉 However, this highlights a concern raised by our MMLU-Redux team (arxiv.org/abs/2406.04127): **error propagation to many languages**. Issues in MMLU (e.g., "rapid intervention to solve ebola") seem to persist in many languages. Shouldn't we fix the root cause first?

06.12.2024 09:38

For clarity: great project, but most of the MMLU errors we found (and fixed) in our MMLU-Redux paper (arxiv.org/abs/2406.04127) are also present in this dataset. We also provide a curated version of MMLU, so it's easy to fix 😊

06.12.2024 09:26

Oops! Some errors we noticed in MMLU-Redux still exist in some languages (e.g., rapid intervention to "solve" ebola). (I only checked the two languages I understand: Indonesian and Malay.)

05.12.2024 23:11

A picture showing Halo's features which include heart rate, sleep cycle and SPO2 monitoring, using on-device ML.

Super excited to introduce Halo: A beginner's guide to DIY health tracking with wearables! 🤗✨

Using an $11 smart ring, I'll show you how to build your own private health monitoring app. From basic metrics to advanced features like:

- Activity tracking

- HR monitoring

- Sleep analysis

and more!

19.11.2024 18:38

University of Edinburgh Starter Pack


Starter pack for University of Edinburgh researchers, made by the amazing ramandutt4.bsky.social - go.bsky.app/KRNDkN7

20.11.2024 16:34

Would you be so kind as to add me to the party? @ramandutt4.bsky.social

20.11.2024 16:57

If you’re interested in mechanistic interpretability, I just found this starter pack and wanted to boost it (thanks for creating it @butanium.bsky.social !). Excited to have a mech interp community on bluesky 🎉

go.bsky.app/LisK3CP

19.11.2024 00:28

Generative AI Laboratory

Joining the Generative AI Lab (GAIL, gail.ed.ac.uk) at the University of Edinburgh as a GAIL Fellow! Excited for what's ahead 🤗

19.11.2024 22:43

🤔 How can we achieve efficient ICL without storing a huge dataset in one prompt?

💡Mixtures of In-Context Learners (𝗠𝗼𝗜𝗖𝗟): we treat LLMs prompted with subsets of demonstrations as experts and learn a weighting function to optimise the distribution over the continuation (🧵1/n)
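The weighting idea above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the MoICL implementation: it assumes each expert's next-token distribution (one prompt per demonstration subset) has already been computed, and it assumes a simple parameterisation of one scalar score per expert, normalised with a softmax and fitted by gradient descent on the mixture's negative log-likelihood. The function names `mixture_distribution` and `fit_scores` and the toy numbers are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def mixture_distribution(expert_probs, scores):
    """Weighted mixture of per-expert next-token distributions.

    expert_probs: one probability list per expert (same vocabulary order).
    scores: one unnormalised score per expert (hypothetical parameterisation).
    """
    weights = softmax(scores)
    vocab_size = len(expert_probs[0])
    return [sum(w * p[v] for w, p in zip(weights, expert_probs))
            for v in range(vocab_size)]


def fit_scores(examples, targets, steps=200, lr=0.5):
    """Fit one score per expert by gradient descent on the mixture NLL.

    examples[n][i][v]: probability that expert i assigns to token v on example n.
    targets[n]: index of the observed continuation token for example n.
    """
    k = len(examples[0])
    scores = [0.0] * k
    for _ in range(steps):
        weights = softmax(scores)
        grad = [0.0] * k
        for expert_probs, y in zip(examples, targets):
            p_y = [p[y] for p in expert_probs]
            mix = sum(w * p for w, p in zip(weights, p_y))
            # d(-log mix) / d score_j = -w_j * (p_j(y) - mix) / mix
            for j in range(k):
                grad[j] -= weights[j] * (p_y[j] - mix) / mix
        scores = [s - lr * g / len(targets) for s, g in zip(scores, grad)]
    return scores


if __name__ == "__main__":
    # Toy data: expert 0 is reliable, expert 1 is not (made-up numbers).
    examples = [
        [[0.9, 0.1], [0.2, 0.8]],
        [[0.1, 0.9], [0.7, 0.3]],
    ]
    targets = [0, 1]
    scores = fit_scores(examples, targets)
    print("weights:", softmax(scores))
    print("mixture on example 0:", mixture_distribution(examples[0], scores))
```

On the toy data, the expert that consistently assigns high probability to the observed continuation ends up with the larger weight, which is the behaviour a learned weighting function is meant to capture. The softmax keeps the mixture a valid distribution; other parameterisations are possible.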

18.11.2024 18:36

Started making a list of researchers working at the intersection of healthcare, language, and computation. Please help me add more people!

18.11.2024 11:09

I’ll be travelling to London from Wednesday to Friday for an upcoming event and would be very happy to meet up! 🚀

I'd love to chat about my recent works (DeCoRe, MMLU-Redux, etc.). DM me if you’re around! 👋

DeCoRe: arxiv.org/abs/2410.18860

MMLU-Redux: arxiv.org/abs/2406.04127

18.11.2024 13:48

I'd love to be added!

17.11.2024 18:53

Student at the University of Edinburgh.

ML Theory, Adversarial ML, Optimization, Efficient ML

Everything datasets and human feedback for AI at Hugging Face.

Prev: co-founder and CEO of Argilla (acquired by Hugging Face)

Lab studying molecular evolution of proteins and viruses. Affiliated with Fred Hutch & HHMI.

https://jbloomlab.org/

Chief Science and Strategy Officer, openRxiv. Co-Founder, bioRxiv and medRxiv.

Dedicated to drug discovery, enthralled by science. 5 kids. She. SAB Recursion. Founder SyzOnc. Lab at Broad Institute. Opinions my own.

Associate Prof @HarvardMed. Microbial evolution, antibiotic resistance, mobile genetic elements, algorithms, phages, molecular biotech, etc. Basic research is the engine of progress.

baymlab.hms.harvard.edu

Bioinformatics Scientist / Next Generation Sequencing, Single Cell and Spatial Biology, Next Generation Proteomics, Liquid Biopsy, SynBio, Compute Acceleration in biotech // http://albertvilella.substack.com

Associate Professor and Director of Graduate Studies. GPCRs and gene regulation in pancreatic cancer. Posts are mine.

Executive Director EMBL. I have an insatiable love of biology. Consultant to ONT and Cantata (Dovetail)

Bren Professor of Computational Biology @Caltech.edu. Blog at http://liorpachter.wordpress.com. Posts represent my views, not my employer's. #methodsmatter

Just another LLM. Tweets do not necessarily reflect the views of people in my lab or even my own views last week. http://rajlab.seas.upenn.edu https://rajlaboratory.blogspot.com

AI policy researcher, wife guy in training, fan of cute animals and sci-fi. Started a Substack recently: https://milesbrundage.substack.com/

Professor at the NYU School of Medicine (https://yanailab.org/). Co-founder and Director of the Night Science Institute (https://night-science.org/). Co-host of the 'Night Science Podcast' https://podcasts.apple.com/us/podcast/night-science/id1563415749

Genomics, Machine Learning, Statistics, Big Data and Football (Soccer, GGMU)

Drosophila enthusiast. Assistant Professor of Mol Bio and Biochem at Rutgers U. Runner. Uses brain to think about brains. All around curious person.

Professor, Department of Biological Sciences, University of Pittsburgh. RNA polymerase II mechanism, classic films, #classiccountdown once in a while

Protein and coffee lover, father of two, professor of biophysics and sudo scientist at the Linderstrøm-Lang Centre for Protein Science, University of Copenhagen 🇩🇰

Computational protein engineering & synthetic biochemistry at Takeda

Opinions my own

https://linktr.ee/ddelalamo

machine learning and functional/statistical genetics. Associate Prof @Columbia and Core Faculty @nygenome. he/him/his. https://daklab.github.io/