It’s a very exciting time to be thinking about the interaction of vision and language, and what we can find in (and learn from) VLMs. Looking forward to talking to people about this at COLM, and thanks to everyone doing awesome research on this topic!

17.09.2025 19:12 — 👍 1 🔁 0 💬 1 📌 0

Lastly, we didn’t just go blindly into batchtopk SAEs: we tried other SAEs and a semi-NMF, but they don’t work as well. batchtopk dominates the reconstruction-sparsity tradeoff.

17.09.2025 19:12 — 👍 1 🔁 0 💬 1 📌 0
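For anyone unfamiliar with batchtopk: the general idea is that instead of keeping the top k latents for every input, the SAE keeps the k × batch_size largest (ReLU'd) activations across the whole batch, so individual inputs can use more or fewer latents. Below is a minimal pure-Python sketch of just that sparsifying step, under that assumption. It is not our training code: the encoder and decoder are omitted, and `batch_topk` is a hypothetical helper name.

```python
import heapq


def batch_topk(pre_acts, k):
    """Sparsify a batch of SAE pre-activations the BatchTopK way (sketch).

    pre_acts: list of rows, one row of latent pre-activations per input.
    k: average number of active latents allowed per input.
    Keeps the k * batch_size largest ReLU'd values across the WHOLE batch
    and zeroes everything else.
    """
    batch_size = len(pre_acts)
    # Flatten to (relu_value, row, col) triples so we can rank globally.
    flat = [
        (max(v, 0.0), i, j)
        for i, row in enumerate(pre_acts)
        for j, v in enumerate(row)
    ]
    budget = k * batch_size
    # Positions that survive the batch-level cutoff (ignore zeros after ReLU).
    keep = {(i, j) for v, i, j in heapq.nlargest(budget, flat) if v > 0.0}
    return [
        [max(v, 0.0) if (i, j) in keep else 0.0 for j, v in enumerate(row)]
        for i, row in enumerate(pre_acts)
    ]
```

With `k=1` and two inputs, the budget is two activations for the whole batch, so one input can take both: `batch_topk([[3.0, 2.5, 0.1], [0.2, 0.3, 0.1]], 1)` keeps 3.0 and 2.5 in the first row and zeroes the second row entirely.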

Check out our interactive demo (by the amazing @napoolar), where bridges illustrate our BridgeScore metric: a combination of geometric alignment (cosine) and statistical alignment (coactivation on image-caption pairs): vlm-concept-visualization.com

17.09.2025 19:12 — 👍 2 🔁 0 💬 1 📌 0

And they’re stable ~across training data mixtures~! If we train the SAEs with a 5:1 ratio of text to images, we get a lot more text concepts (makes sense!). But if we weight the points by activation scores (bottom), we see basically the same concepts across very different mixtures

17.09.2025 19:12 — 👍 1 🔁 0 💬 1 📌 0

But are the SAEs even stable? It wouldn’t be very enlightening if we were just analyzing a fluke of the SAE seed. Across seeds, we find that frequently used concepts (the ones that account for 99% of the activation weight) are remarkably stable, but the rest are pretty darn unstable.

17.09.2025 19:12 — 👍 0 🔁 0 💬 1 📌 0

How can this be? Because of the projection effect in SAEs! When we impose sparsity, the inputs that actually activate a concept don’t necessarily tell the whole story of which inputs align with that direction. Here, the batchtopk cutoff (dotted line) hides a multimodal story.

17.09.2025 19:12 — 👍 1 🔁 0 💬 1 📌 0

At first blush, however, the concepts look pretty single-modality: see here their modality scores (how many of the top-activating inputs are images vs. text). The classifier results above show us that the actual geometry is often much closer to modality-agnostic.

17.09.2025 19:12 — 👍 1 🔁 0 💬 1 📌 0
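As a rough illustration of a modality score, here is a sketch that takes a concept's activations over a pool of inputs, looks at its `top_n` highest-activating inputs, and returns the fraction that are images (1.0 = all images, 0.0 = all text). The function name, the `top_n` cutoff, and the "image"/"text" labels are assumptions for illustration; the paper's exact definition may differ.

```python
def modality_score(activations, modalities, top_n):
    """Fraction of a concept's top_n activating inputs that are images (sketch).

    activations: one activation value per input for a single SAE concept.
    modalities: matching labels, each "image" or "text".
    top_n: how many top-activating inputs to inspect (assumed >= 1).
    """
    ranked = sorted(zip(activations, modalities), key=lambda p: p[0], reverse=True)
    top = ranked[:top_n]
    return sum(1 for _, m in top if m == "image") / len(top)
```

For example, with activations `[0.9, 0.1, 0.8, 0.7, 0.0]` labelled image, text, image, text, image and `top_n=3`, the top three are image, image, text, giving a score of 2/3.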

In fact, they often can’t even act as good modality classifiers: if we take the SAE concept direction and see how well projecting onto that direction separates the two modalities, many of the concepts don’t get great accuracy.

17.09.2025 19:12 — 👍 1 🔁 0 💬 1 📌 0
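One simple way to turn a concept direction into a modality classifier is to project every embedding onto the unit-normalized direction and score the best single threshold on that 1-D projection. The sketch below does exactly that, in-sample and in pure Python; the function name and the best-threshold protocol are our assumptions for illustration, and the paper's evaluation (e.g. held-out data or a fitted probe) may differ.

```python
import math


def projection_modality_accuracy(embeddings, is_image, direction):
    """Best accuracy of a 1-D threshold on projections onto `direction` (sketch).

    embeddings: list of equal-length vectors (image and text embeddings).
    is_image: matching booleans, True for image embeddings.
    direction: the SAE concept direction (need not be unit length).
    """
    norm = math.sqrt(sum(d * d for d in direction))
    unit = [d / norm for d in direction]
    # Scalar projection of each embedding onto the concept direction.
    proj = [sum(e * u for e, u in zip(emb, unit)) for emb in embeddings]
    n = len(proj)
    best = 0.0
    # Try a threshold at every observed projection value, in both orientations
    # (predict "image" above the threshold, or predict "image" below it).
    for t in proj:
        correct = sum((p >= t) == label for p, label in zip(proj, is_image))
        best = max(best, correct / n, (n - correct) / n)
    return best
```

A direction that cleanly separates the modalities scores 1.0, while one orthogonal to the modality gap collapses every projection to the same value and scores only chance (0.5 on a balanced set), which is the sense in which many concepts "don’t get great accuracy."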

We trained SAEs on the embedding spaces of four VLMs, and analyzed the resulting dictionaries of concepts. Even though image and text concepts lie on separate anisotropic cones, the SAE concepts don’t lie within those cones.

17.09.2025 19:12 — 👍 0 🔁 0 💬 1 📌 0

Are there conceptual directions in VLMs that transcend modality? Check out our COLM oral spotlight 🔦 paper! We use SAEs to analyze the multimodality of linear concepts in VLMs

with @chloesu07.bsky.social, @thomasfel.bsky.social, @shamkakade.bsky.social and Stephanie Gil

arxiv.org/abs/2504.11695

17.09.2025 19:12 — 👍 25 🔁 6 💬 1 📌 1

@avzaagzonunaada.bsky.social

17.09.2025 18:17 — 👍 1 🔁 0 💬 0 📌 0

@antararb.bsky.social is applying for PhDs this fall! She’s super impressive and awesome to work with, and conceived of this project independently and carried it out very successfully! Keep an eye out 🙂

18.06.2025 18:31 — 👍 1 🔁 0 💬 0 📌 0

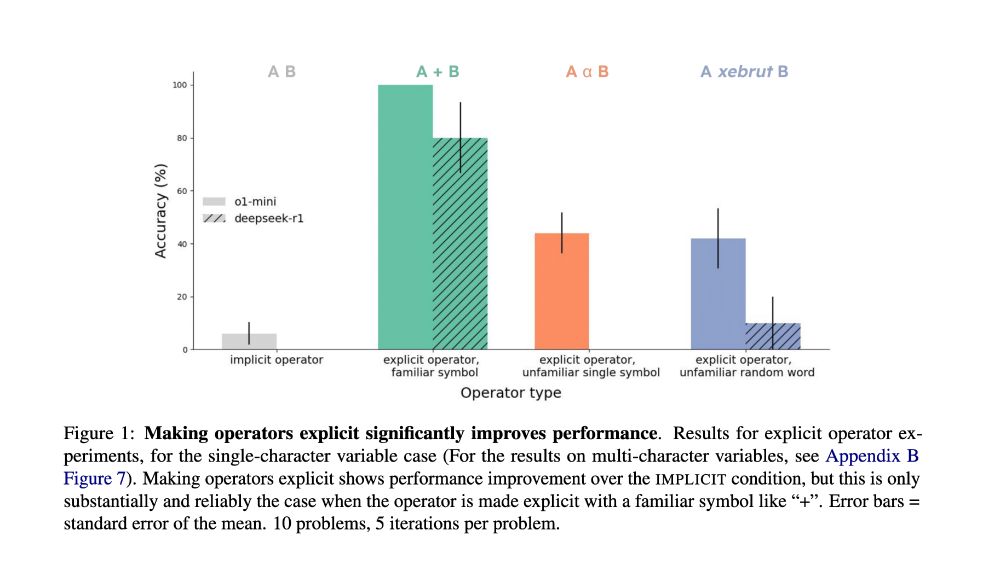

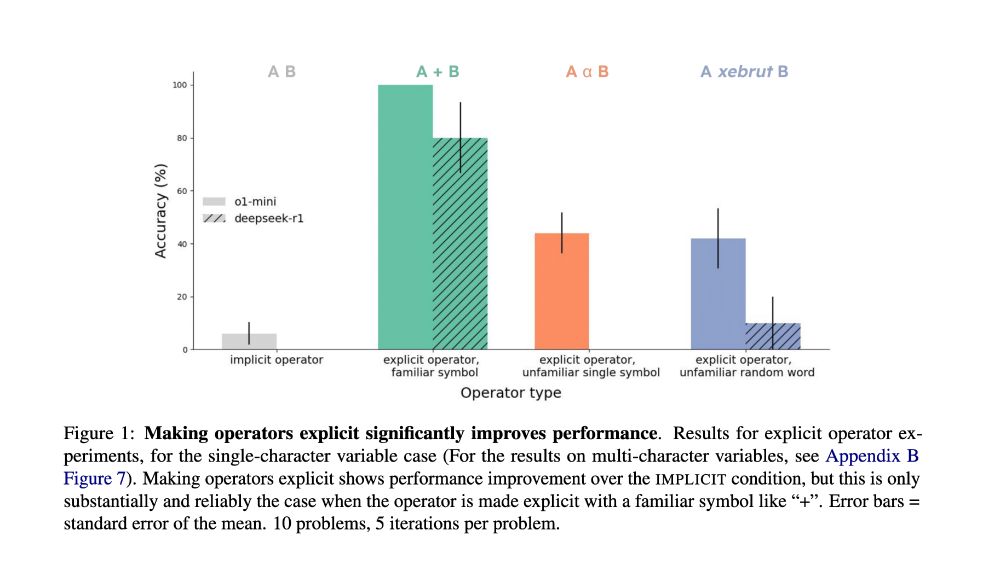

So is it really this implicit operators thing that’s tripping them up? We try many other ablations, looking at the effect of giving extra context in the prompt, using numbers vs words, left-to-right ordering, and subtractive systems, and none of them seem to affect the models that much.

18.06.2025 18:31 — 👍 1 🔁 0 💬 1 📌 0

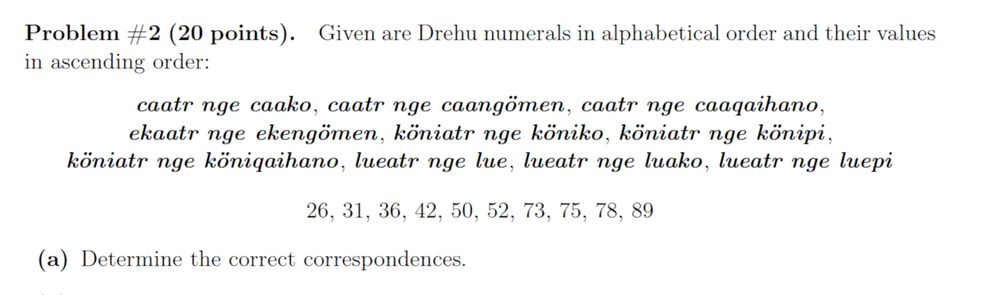

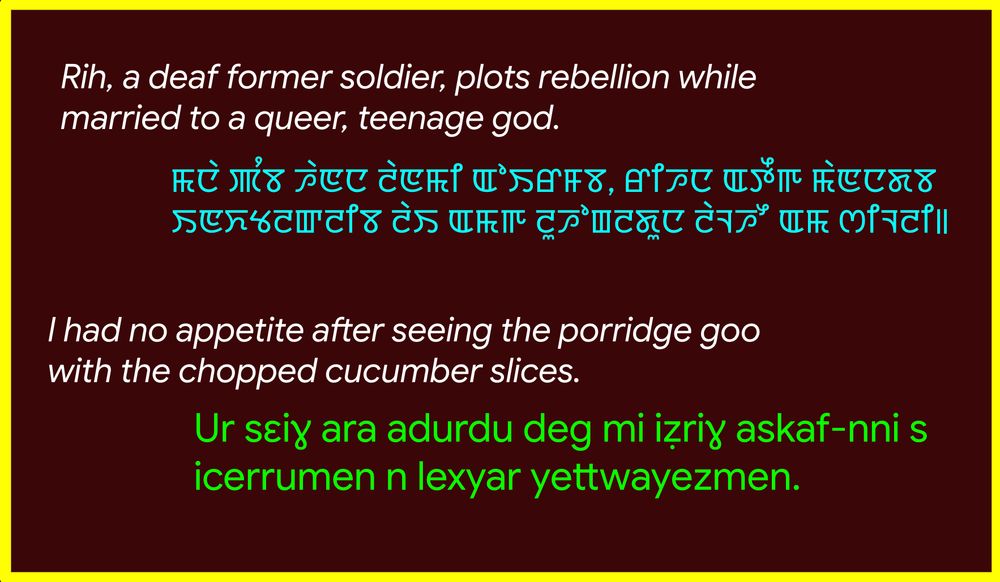

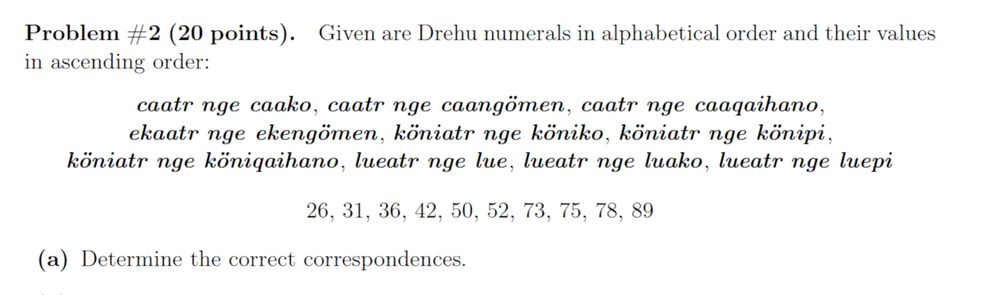

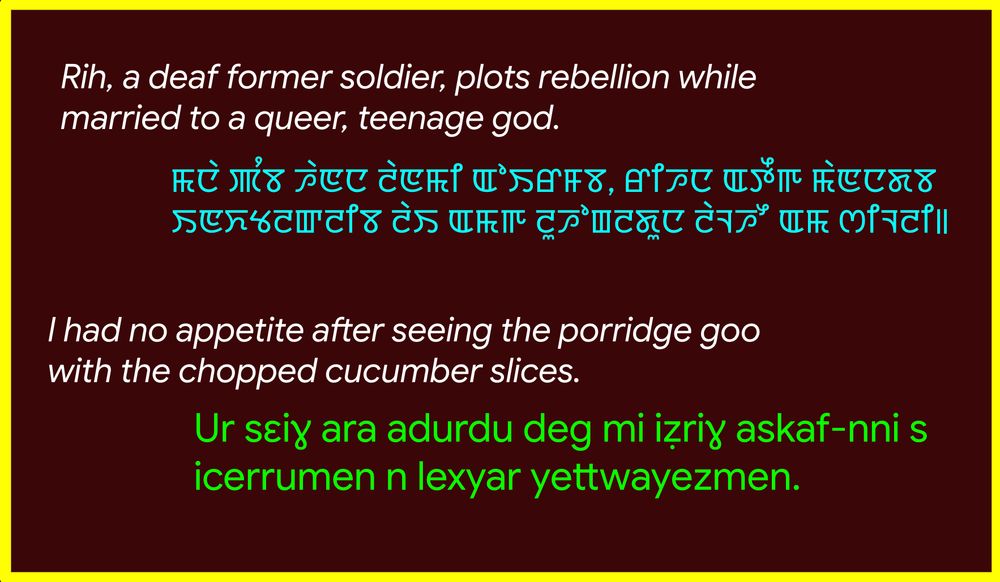

Our experiments are based on Linguistics Olympiad problems that deal with number systems, like the one here. We created additional hand-standardized versions of each puzzle in order to be able to do all of the operator ablations.

18.06.2025 18:31 — 👍 1 🔁 0 💬 1 📌 0

This shows the types of reasoning and variable binding jumps that are hard for LMs. It’s hard to go one level up, and bind a variable to have the meaning of an operator, or to understand that an operator is implicit.

18.06.2025 18:31 — 👍 1 🔁 0 💬 1 📌 0

If we alter the problems to make the operators explicit, the models can solve these problems pretty easily. But it’s still harder to bind a random symbol or word to mean an operator like +. It’s much easier when we use the familiar symbols for the operators, like + and x.

18.06.2025 18:31 — 👍 1 🔁 0 💬 1 📌 0

Our main finding: LMs find it hard when *operators* are implicit. We don’t say “5 times 100 plus 20 plus 3”, we say “five hundred and twenty-three”. The Linguistics Olympiad puzzles are pretty simple systems of equations that an LM should solve – but the operators aren’t explicit.

18.06.2025 18:31 — 👍 1 🔁 0 💬 1 📌 0

Why can’t LMs solve puzzles about the number systems of languages, when they can solve really complex math problems? Our new paper, led by @antararb.bsky.social, looks at why this intersection of language and math is difficult, and what this means for LM reasoning! arxiv.org/abs/2506.13886

18.06.2025 18:31 — 👍 29 🔁 5 💬 4 📌 1

ACL paper alert! What structure is lost when using linearizing interp methods like Shapley? We show the nonlinear interactions between features reflect structures described by the sciences of syntax, semantics, and phonology.

12.06.2025 18:56 — 👍 55 🔁 12 💬 3 📌 1

Committee selfie!

Congrats to Veronica Boyce on her dissertation defense! That’s three amazing talks by three great students in 8 days!

30.05.2025 18:33 — 👍 31 🔁 1 💬 0 📌 0

(the unfortunate truth is that I am really enjoying this mac and its battery life oops)

06.03.2025 21:54 — 👍 2 🔁 0 💬 0 📌 0

This work Mac (my first ever) is great because every time something seriously breaks, instead of becoming distressed and despondent like I usually do, it's just like "ooooooh yeahhh, yet another win for team Linux 😎😎😎🎉🐧"

06.03.2025 21:53 — 👍 7 🔁 0 💬 1 📌 0

😼SMOL DATA ALERT! 😼 Announcing SMOL, a professionally translated dataset for 115 very low-resource languages! Paper: arxiv.org/pdf/2502.12301

Huggingface: huggingface.co/datasets/goo...

19.02.2025 17:36 — 👍 14 🔁 8 💬 2 📌 1

New paper in Psychological Review!

In "Causation, Meaning, and Communication" Ari Beller (cicl.stanford.edu/member/ari_b...) develops a computational model of how people use & understand expressions like "caused", "enabled", and "affected".

📃 osf.io/preprints/ps...

📎 github.com/cicl-stanfor...

🧵

12.02.2025 18:25 — 👍 57 🔁 17 💬 1 📌 0

Where are all of the phoneticians of the Boston area, and why isn't there a storied subfield of fieldwork studying the Cambridge shopkeeper who seems to have a mix of a West Country (rhotic English!) and a Boston (non-rhotic American!) accent?

Apparently the shop's been open for decades, smh

16.01.2025 19:32 — 👍 2 🔁 0 💬 0 📌 0

Quanta write-up of our Mission: Impossible Language Models work, led by @juliekallini.bsky.social. As the photos suggest, Richard, @isabelpapad.bsky.social, and I do all our work sitting together around a single laptop and pointing at the screen.

13.01.2025 20:59 — 👍 27 🔁 4 💬 0 📌 0

My most controversial take is that you should never use commit -m, just let it open the damn vim file, let yourself think for a second, and then write something descriptive

17.12.2024 17:28 — 👍 11 🔁 1 💬 1 📌 0

Postdoc in psychology and cognitive neuroscience mainly interested in conceptual combination, semantic memory and computational modeling.

https://marcociapparelli.github.io/

☀️ Assistant Professor of Computer Science at CU Boulder 👩‍💻 NLP, cultural analytics, narratives, online communities 🌐 https://maria-antoniak.github.io 💬 books, bikes, games, art

CS PhD student @ Harvard/Kempner Institute

CS PhD student at UT Austin in #NLP

Interested in language, reasoning, semantics and cognitive science. One day we'll have more efficient, interpretable and robust models!

Other interests: math, philosophy, cinema

https://www.juandiego-rodriguez.com/

Co-founder of Reka & Honorary Researcher @hitz-zentroa.bsky.social (University of the Basque Country) | Past: Research Scientist at FAIR (Meta AI)

PhD @stanfordnlp.bsky.social

PhD @Stanford working w Noah Goodman

Studying in-context learning and reasoning in humans and machines

Prev. @UofT CS & Psych

https://scholar.harvard.edu/kathryndavidson

Associate professor in NLP, engaged citizen. Tweeting about work, life and stuffs that I care about. All my tweets can be used freely. @zehavoc@mastodon.social @zehavoc (@twitter)

The Kempner Institute for the Study of Natural and Artificial Intelligence at Harvard University.

linguistics + computer science @ harvard!

PhD student in Speech and Hearing at Harvard/MIT. Building ANNs to study how humans perceive/produce speech and voice.

Working with @joshhmcdermott.bsky.social

https://gasserelbanna.github.io/

MSc. at EPFL

BSc. at Cairo University

ex Logitech and IDIAP

Senior Director of AI/ML Research Engineering, Kempner Institute @Harvard , views are my own

Senior Lecturer (Associate Professor) in Natural Language Processing, Queen's University Belfast. NLProc • Cognitive Science • Semantics • Health Analytics.

Assistant Professor of CS, University of Southern California. NLP / ML.

Parisienne Buffalonian linguist spoonie mom. http://bcopley.com "The line separating good and evil passes...right through every human heart." -Solzhenitsyn

Internet linguist. Wrote Because Internet, NYT bestseller about internet language. Co-hosts @lingthusiasm.bsky.social, a podcast that's enthusiastic about linguistics.

she/her 🌈

Montreal en/fr 🇨🇦

gretchenmcculloch.com

Computational psycholinguistics PhD student @NYU linguistics | first gen!

A podcast that's enthusiastic about linguistics! By @gretchenmcc.bsky.social and @superlinguo.bsky.social

"Fascinating" -NYT

"Joyously nerdy" -Buzzfeed

lingthusiasm.com

Not sure where to start? Try our silly personality quiz: bit.ly/lingthusiasmquiz

Linguist, cognitive scientist at University of Stuttgart. I study language and how we understand it one word at a time.