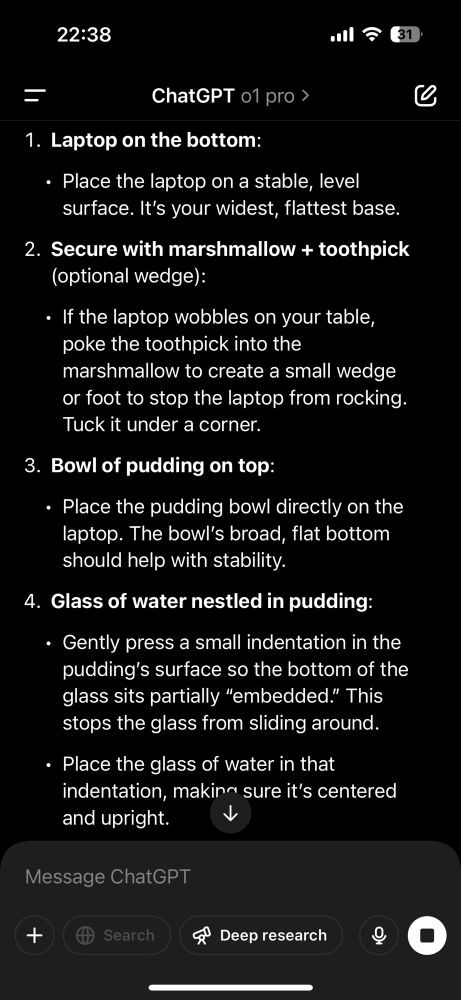

o1 pro mode is pretty hilarious as well, especially step 2 (aka “optional wedge”)

06.03.2025 21:39 — 👍 1 🔁 0 💬 0 📌 0

@matthiasplappert.com.bsky.social

I sometimes like AI and computers. I always like dogs. Ex OpenAI and @github.com Website: matthiasplappert.com

RL always finds a way

03.03.2025 21:53 — 👍 1 🔁 0 💬 0 📌 0

How sure are we that the problem is only a software problem and not also a hardware limitation?

28.02.2025 04:19 — 👍 2 🔁 1 💬 3 📌 0

This man is asking the right questions. It's very much a hardware problem still, especially wrt reliability, power efficiency, and cost.

In that sense, this humanoid robot hype is much worse than the self-driving car one, because at least we already knew how to build cars.

I'm actually very confused by the GPT-4.5 release

28.02.2025 11:26 — 👍 0 🔁 0 💬 0 📌 0

We still don’t have broadly available self-driving cars but somehow humanoid robots for our homes are imminent. Yeah sure 🙄

22.02.2025 09:47 — 👍 3 🔁 0 💬 1 📌 0

Also, it has an unusual number of non-fungible experiences to offer that I think will retain (and probably increase) their value because they cannot be automated.

06.02.2025 03:23 — 👍 0 🔁 0 💬 1 📌 0

I’m actually quite bullish on Europe: I think it’s culturally well equipped to deal with the onset of increasing automation due to AI. We already have strong social security systems that can buffer the disruptions, and people’s lives are less focused on work.

06.02.2025 03:23 — 👍 1 🔁 0 💬 1 📌 0

This is cool, congrats! But the code benchmarks you use are non-standard and make it unnecessarily hard to compare these models to other ones. Why don't you report HumanEval, Codeforces, or SWE-bench performance?

05.02.2025 23:56 — 👍 0 🔁 0 💬 0 📌 0

What made you a fan? I've heard of multiple people switching recently but I’m not sure why.

31.01.2025 10:29 — 👍 1 🔁 0 💬 1 📌 0

So, even though Dario claims this to be expected, I think this changes the economics of the whole thing quite significantly, and not in favor of those who rely on massive outside investments (i.e. OpenAI and Anthropic). (6/6)

30.01.2025 08:31 — 👍 0 🔁 0 💬 0 📌 0

So how valuable really is the second part? Surely you have to discount this now as well.

(Side note: DeepSeek also intends to build AGI so the first point gets indirectly attacked as well because there’s more competition; Dario realizes this and wants more export control for this reason) (5/n)

The second angle is what DeepSeek very directly attacked: they give you a recipe for how to train a frontier model for $5.5M and the weights themselves for free.

They also became VERY popular with consumers VERY quickly: their app is still the most downloaded app on the App Store. (4/n)

So you have two possible angles: we’ll build AGI and this will be very valuable and/or what we’re building on the way is also very valuable.

The problem is that the first angle is very risky because you don’t know who will build AGI and when. So even though it would be valuable, you need to discount it. (3/n)

(This is a key difference from Google / Amazon / Microsoft: they have massive revenue and sizable balance sheets and can finance their AI projects this way.)

But because they require massive outside investments, investors reasonably expect a return on investment. (2/n)

Essays like the one from Dario (darioamodei.com/on-deepseek-...) miss the point of why DeepSeek matters so much to investors. The key issue for OpenAI and Anthropic (and any other VC-funded model company in this space) is that they absolutely require outside investments. (1/n)

30.01.2025 08:31 — 👍 0 🔁 0 💬 1 📌 0

Yeah sure, but the "foreign" phrasing is still misleading, as it makes this sound like some foreign adversary somehow undermined the US govt, which is clearly not the case. I think the issue is that you can purchase that much influence regardless of whether or not the purchaser was born in the US.

29.01.2025 14:04 — 👍 1 🔁 0 💬 3 📌 0

I agree but the foreign part is strange; Musk is a US citizen and has been for a long time.

29.01.2025 10:06 — 👍 7 🔁 0 💬 5 📌 0

I don’t know, NVIDIA revenue is mostly driven by a very small number of big spender customers and if this cools the risk appetite of those companies (because someone can come along and do what you’ve been doing but with orders of magnitude less capex), that’s a problem for NVIDIA.

27.01.2025 22:28 — 👍 0 🔁 0 💬 0 📌 0

DeepSeek R1 appears to be a VERY strong model for coding. Examples for both C and Python here: https://simonwillison.net/2025/Jan/27/llamacpp-pr/

27.01.2025 18:33 — 👍 41 🔁 14 💬 2 📌 0

OpenAI in particular still has an advantage of course because of its ChatGPT user base and brand.

These open-weight models are also still lagging behind in some features (lack of multi-modality and advanced voice mode, for example).

It also increasingly looks to me like these models will get commoditized very rapidly.

They also depreciate in value extremely quickly: arguably the value of a frontier model is now only a few million dollars, but it likely cost OpenAI et al. many times more than that only a few months ago.

I think these models likely caused a panic at places like OpenAI, Anthropic, Google and Meta: one of their main moats (having access to the biggest computers) seems weakened significantly.

It is also proof that much less well-capitalized players can be competitive. Others will notice and compete.

Finally got around to reading the DeepSeek-V3 and R1 papers in detail and I’m very impressed. They keep things simple and pragmatic but go deep and optimize when worthwhile.

I think access to compute remains key but they demonstrate how much more can be squeezed out when faced with constraints.

It’s almost as if, when you build a toxic hell-hole of misinformation, conspiracy theories and crypto scams, people don’t want to be there. Who would’ve thought 🤷♂️

24.01.2025 21:54 — 👍 3 🔁 0 💬 0 📌 1

Ladies and gentlemen: we did it

24.01.2025 20:52 — 👍 24687 🔁 2722 💬 1029 📌 212

Oh, I guess the KL is against the ref = base model, not the previous iteration. I guess that makes more sense if you want to keep this model interpretable / understandable to humans.

20.01.2025 17:21 — 👍 2 🔁 0 💬 1 📌 0

Bit weird that they have a KL penalty in there when the clipping part already ensures a trust region though (per the original PPO paper)
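The two posts above contrast two ways of keeping an RL-trained policy from drifting: PPO's clipped probability ratio, which bounds each update relative to the previous iterate, and a KL penalty against a fixed reference (base) model. Below is a minimal pure-Python sketch of an objective that combines both, assuming per-sample log-probabilities and advantages are already computed. The function names, `eps=0.2` and `beta=0.04` are illustrative assumptions, not values taken from the paper under discussion, and the KL term uses the common `r - log r - 1` estimator rather than an exact KL.

```python
import math


def clipped_surrogate(logp_new, logp_old, advantages, eps=0.2):
    """PPO clipped surrogate (to be maximized), averaged over samples.

    The ratio pi_theta / pi_old is clipped to [1 - eps, 1 + eps], which keeps
    the update close to the *previous iteration* of the policy.
    """
    total = 0.0
    for ln, lo, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(ln - lo)  # pi_theta(a|s) / pi_old(a|s)
        clipped = max(min(ratio, 1.0 + eps), 1.0 - eps)
        # Pessimistic (lower) bound of the clipped and unclipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


def kl_to_reference(logp_new, logp_ref):
    """Estimate of KL(pi_theta || pi_ref) via r - log r - 1, r = pi_ref / pi_theta.

    Each term is nonnegative; the average is unbiased when the samples are
    drawn from pi_theta. This keeps the policy close to a *fixed* reference
    model (e.g. the base model), not to the previous iteration.
    """
    total = 0.0
    for ln, lr in zip(logp_new, logp_ref):
        log_r = lr - ln
        total += math.exp(log_r) - log_r - 1.0
    return total / len(logp_new)


def objective(logp_new, logp_old, logp_ref, advantages, eps=0.2, beta=0.04):
    """Clipped surrogate minus a beta-weighted KL penalty (hypothetical defaults)."""
    return (clipped_surrogate(logp_new, logp_old, advantages, eps)
            - beta * kl_to_reference(logp_new, logp_ref))
```

The design point the posts raise shows up directly: clipping only ever compares against `logp_old`, so it cannot stop slow drift away from the base model over many iterations; the KL term anchored on `logp_ref` is what does that.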

20.01.2025 17:20 — 👍 1 🔁 0 💬 1 📌 1

(To be clear, this doesn’t change that the Trump coin thing is incredibly dystopian)

20.01.2025 14:21 — 👍 1 🔁 0 💬 0 📌 0