31.05.2025 10:31 — 👍 10152 🔁 1464 💬 162 📌 72

Andrea Panizza

@andreapanizza.bsky.social

ML, trekking, enjoying life

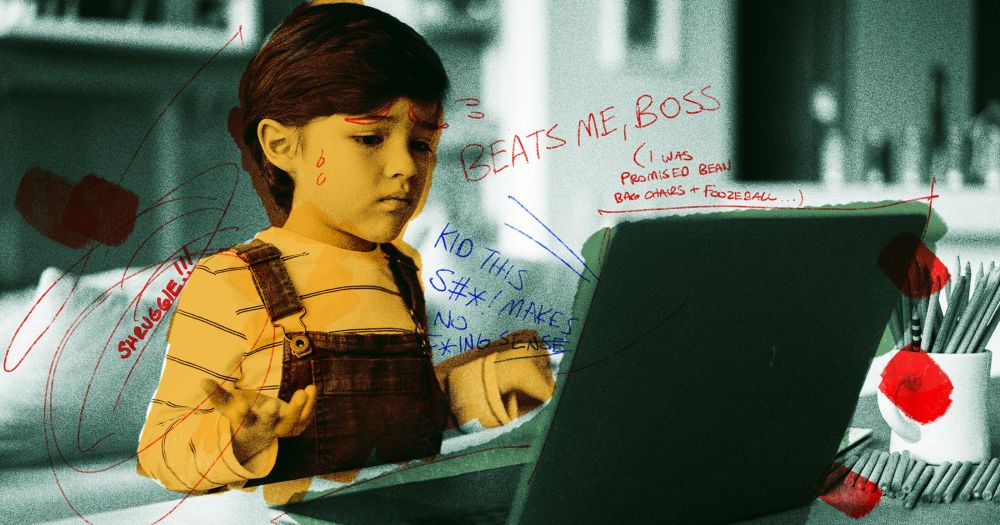

Interviewer: Can you explain this gap in your resume?

LM researcher: You're right to wonder about the gaps in my resume! They are more common than people think, and there are many valid reasons why someone might have them. Here are some of the most frequent reasons you might see a gap:

Finally found the time to watch it in full: one of the most interesting and thought-provoking LLM conferences I’ve seen in a while. Once you see LLMs as latent attention graphs, many structural oddities suddenly fall into place. www.youtube.com/watch?v=J1YC...

12.04.2025 06:45 — 👍 40 🔁 4 💬 1 📌 0

This is beautiful 🤩 I would have paid for a similar treatment from my team

10.04.2025 19:49 — 👍 1 🔁 0 💬 1 📌 0

Parents Gently Explain To Child That Their Money In Heaven Now

04.04.2025 21:30 — 👍 18524 🔁 3483 💬 162 📌 189

PS I forgot an important caveat: the compute budget should be the same for both methods being HPO'ed. If the nihilist feels like being a PITA, I'll add a curve of the performance of the two methods as a function of the compute budget. If they still don't give up, I give up 🤷‍♂️ life goes on regardless.

01.04.2025 12:23 — 👍 0 🔁 0 💬 0 📌 0

cross-validation precisely to answer this kind of objection. If HPO is infeasible, I agree that the result is just preliminary evidence. I note that since HPO is costly, there's merit in methods which show superior performance with default HP, but I don't insist b/c it's a valid objection. /2

01.04.2025 12:17 — 👍 0 🔁 0 💬 1 📌 0

I start by acknowledging the validity of their objection, because in general it's true that with different HP, results may have been different (even random seeds may be considered HP, see arxiv.org/abs/2210.13393). Secondly, if we're in a situation where HPO is feasible, I use nested 1/

01.04.2025 12:13 — 👍 0 🔁 0 💬 1 📌 0
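
For readers unfamiliar with the nested cross-validation mentioned in the thread above, here is a minimal sketch in plain Python. Everything in it is an illustrative assumption rather than the poster's setup: the toy 1D data, the k-NN regressor, the candidate values of `k`, and all function names. The point is only the structure: the inner loop tunes the hyperparameter, and the outer loop scores the whole "tune, then fit" procedure on data the tuning never saw.

```python
import random
from statistics import mean

def k_folds(n, n_folds):
    """Split indices 0..n-1 into n_folds contiguous (train, test) index pairs."""
    idx = list(range(n))
    size = n // n_folds
    folds = []
    for f in range(n_folds):
        start = f * size
        stop = n if f == n_folds - 1 else start + size
        folds.append((idx[:start] + idx[stop:], idx[start:stop]))
    return folds

def knn_predict(train, x, k):
    """Predict y at x as the mean target of the k nearest training points."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return mean(y for _, y in nearest)

def mse(train, test, k):
    """Mean squared error of k-NN fitted on `train`, evaluated on `test`."""
    return mean((knn_predict(train, x, k) - y) ** 2 for x, y in test)

def select_k(data, candidates, n_folds):
    """Inner loop: pick the k with the lowest cross-validated MSE on `data`."""
    def cv_score(k):
        return mean(
            mse([data[i] for i in tr], [data[i] for i in te], k)
            for tr, te in k_folds(len(data), n_folds)
        )
    return min(candidates, key=cv_score)

def nested_cv(data, candidates, outer_folds=5, inner_folds=3):
    """Outer loop: score the full 'tune then fit' procedure on held-out folds."""
    scores, chosen = [], []
    for tr, te in k_folds(len(data), outer_folds):
        train = [data[i] for i in tr]
        test = [data[i] for i in te]
        k = select_k(train, candidates, inner_folds)  # tuning never sees `test`
        chosen.append(k)
        scores.append(mse(train, test, k))
    return scores, chosen

# Toy data (assumption): noisy line y = 2x, generated in random order.
rng = random.Random(0)
data = [(x, 2.0 * x + rng.gauss(0.0, 0.5))
        for x in (rng.uniform(0, 10) for _ in range(60))]

scores, chosen = nested_cv(data, candidates=[1, 3, 5, 9])
print("outer-fold MSE:", [round(s, 3) for s in scores])
print("chosen k per fold:", chosen)
```

To compare two methods in the spirit of the thread, one would run `nested_cv` once per method with the same outer folds and the same search budget (here, the same number of candidates), then compare the outer-fold scores.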

More than 250 people have already enrolled in the Causal Secrets Mini-Course!

All but one review so far are 5-star.

It's free for everyone!

Share it with your friend!

https://bit.ly/4ic4VK4

#CausalSky

One of the first papers I've seen with RLVR / reinforcement finetuning of vision language models

Looks about as simple as we would expect it to be, lots of details to uncover.

Liu et al. Visual-RFT: Visual Reinforcement Fine-Tuning

buff.ly/DbGuYve

(posted a week ago, oops)

Here's the handout for my "Cutting-edge web scraping techniques" workshop at #NICAR2025 this morning github.com/simonw/nicar...

Plus some extra notes on the custom software I built to support the workshop: simonwillison.net/2025/Mar/8/c...

This thing now deserves its own name

06.03.2025 21:04 — 👍 6 🔁 3 💬 0 📌 0

Nicholas Carlini moves to Anthropic.

nicholas.carlini.com/writing/2025...

Ah, during the time of the big excitement about L5! I remember talking to people in Houston, expecting FSD to be solved soon...things proved to be harder, but a lot of progress has been made!

05.03.2025 17:20 — 👍 1 🔁 0 💬 0 📌 0

I already advertised this document when I posted it on arXiv, and later when it was published.

This week, with the agreement of the publisher, I uploaded the published version on arXiv.

Fewer typos, more references, and additional sections, including PAC-Bayes Bernstein.

arxiv.org/abs/2110.11216

Thanks!

05.03.2025 07:42 — 👍 0 🔁 0 💬 0 📌 0

Very well written! Did you work in the sector?

05.03.2025 07:42 — 👍 2 🔁 0 💬 2 📌 0

My self driving car writeup from December (needs an update) open.substack.com/pub/itcanthi...

05.03.2025 05:25 — 👍 10 🔁 1 💬 1 📌 0

Looks like a book or a very long review paper! Can you share the link?

04.03.2025 08:53 — 👍 1 🔁 0 💬 1 📌 0

This is revised down to -2.8%, and partly precipitated the big flush today. Probably some sovereign wealth fund exited positions across Nasdaq and S&P given the volume of sales.

03.03.2025 21:40 — 👍 4 🔁 1 💬 1 📌 0

If you look at most of the models we've received from OpenAI, Anthropic, and Google in the last 18 months you'll hear a lot of "Most of the improvements were in the post-training phase."

Here's a simple analogy for how so many gains can be made on mostly the same base model:

More evidence of the importance of training analysis for interp! Induction heads might serve as *preliminary* function vector heads (which directly compute in-context learning tasks). Ultimately, LMs rely on FV heads more than IH heads for ICL. from @kayoyin.bsky.social

03.03.2025 16:51 — 👍 15 🔁 2 💬 2 📌 0

I'm sure this is fine

futurism.com/young-coders...

Impressive piece of work by Soumya Mukherjee and Bharath Sriperumbudur: arxiv.org/abs/2502.20755

Minimax optimal kernel two-sample tests with random Fourier features.

Our Workshop on Uncertainty Quantification for Computer Vision goes to @cvprconference.bsky.social this year!

We have a super line-up of speakers and a call for papers.

This is a chance for your paper to shine at #CVPR2025

⏲️ Submission deadline: 14 March

💻 Page: uncertainty-cv.github.io/2025/

A new paper by Vovk that continues exploring properties of so-called "randomness predictors" (compared to "conformal predictors").

www.arxiv.org/abs/2502.19254

I am happy to announce that the Kakeya set conjecture, one of the most sought after open problems in geometric measure theory, has now been proven (in three dimensions) by Hong Wang and Joshua Zahl! arxiv.org/abs/2502.17655 I discuss some ideas of the proof at terrytao.wordpress.com/2025/02/25/t...

26.02.2025 04:49 — 👍 156 🔁 36 💬 0 📌 4