Another intern opening on our team, for a project I’ll be involved in (deadline soon!)

25.02.2025 02:05 — 👍 8 🔁 3 💬 0 📌 0

Last month I co-taught a class on diffusion models at MIT during the IAP term: www.practical-diffusion.org

In the lectures, we first introduced diffusion models from a practitioner's perspective, showing how to build a simple but powerful implementation from the ground up (L1).

(1/4)

20.02.2025 19:19 — 👍 5 🔁 1 💬 1 📌 0

Our main results study when projective composition is achieved by linearly combining scores.

We prove it suffices for particular independence properties to hold in pixel-space. Importantly, some results extend to independence in feature-space... but new complexities also arise (see the paper!) 5/5

11.02.2025 05:59 — 👍 2 🔁 0 💬 0 📌 0
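A toy illustration of the 5/5 post. Everything here is an assumption chosen for illustration, not the paper's construction: two-pixel "images" with Gaussian pixels, a variance-exploding forward process, and the linear rule s1 + s2 - s_b (a product-of-experts-style combination with a background score subtracted). Each distribution differs from the background p_b only in its own pixel, so the distributions are independent in pixel-space. Under that independence, the combined score equals the exact score of the distribution that has both "key features". Langevin sampling with it then puts pixel 0 near MU1 and pixel 1 near MU2. All names (MU1, score_p1, langevin_sample, ...) are hypothetical.

```python
import math
import random

# Hypothetical toy: 2-pixel "images" with independent Gaussian pixels.
MU1, MU2 = 2.0, -3.0   # key feature of p1 (pixel 0) and of p2 (pixel 1)
SIGMA = 0.1            # std of each key feature; background pixels are N(0, 1)


def gaussian_score(x, mean, var):
    """Score d/dx log N(x; mean, var) of a single coordinate."""
    return -(x - mean) / var


def noised_var(var, t):
    # Assumed variance-exploding forward process x_t = x_0 + sqrt(t) * eps:
    # an independent Gaussian coordinate just gains variance t.
    return var + t


def score_p1(x, t):
    # p1: pixel 0 carries the key feature, pixel 1 looks like background.
    return [gaussian_score(x[0], MU1, noised_var(SIGMA ** 2, t)),
            gaussian_score(x[1], 0.0, noised_var(1.0, t))]


def score_p2(x, t):
    # p2: pixel 1 carries the key feature, pixel 0 looks like background.
    return [gaussian_score(x[0], 0.0, noised_var(1.0, t)),
            gaussian_score(x[1], MU2, noised_var(SIGMA ** 2, t))]


def score_background(x, t):
    # p_b: both pixels are background N(0, 1).
    return [gaussian_score(x[0], 0.0, noised_var(1.0, t)),
            gaussian_score(x[1], 0.0, noised_var(1.0, t))]


def composed_score(x, t):
    """Linear combination s1 + s2 - s_b (assumed composition rule)."""
    s1, s2, sb = score_p1(x, t), score_p2(x, t), score_background(x, t)
    return [a + b - c for a, b, c in zip(s1, s2, sb)]


def langevin_sample(score_fn, rng, n_steps=300, step=1e-3, t=0.0):
    """Unadjusted Langevin dynamics targeting the density whose score is score_fn."""
    x = [rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]
    for _ in range(n_steps):
        s = score_fn(x, t)
        x = [xi + step * si + math.sqrt(2 * step) * rng.gauss(0.0, 1.0)
             for xi, si in zip(x, s)]
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    samples = [langevin_sample(composed_score, rng) for _ in range(300)]
    # Projecting onto each pixel should recover that distribution's key feature.
    print(sum(s[0] for s in samples) / len(samples))  # close to MU1
    print(sum(s[1] for s in samples) / len(samples))  # close to MU2
```

Projecting the samples onto pixel 0 recovers p1's key feature N(MU1, SIGMA²), and projecting onto pixel 1 recovers p2's. That is the sense of "projective composition" in the 4/ post. If p1 also shifted pixel 1, the background terms would no longer cancel, and the same linear rule would generally not match p2's pixel-1 marginal.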

We formalize this idea with a definition called Projective Composition — based on projection functions that extract the “key features” for each distribution to be composed. 4/

11.02.2025 05:59 — 👍 6 🔁 0 💬 1 📌 0

What does it mean for composition to "work" in these diverse settings? We need to specify which aspects of each distribution we care about, i.e., the “key features” that characterize a hat, dog, horse, or object-at-a-location. The "correct" composition should have all the features at once. 3/

11.02.2025 05:59 — 👍 2 🔁 0 💬 1 📌 0

Part of the challenge is that we may want compositions to be OOD w.r.t. the distributions being composed. For example, in this CLEVR experiment, we trained diffusion models on images of a *single* object conditioned on location, and composed them to generate images of *multiple* objects. 2/

11.02.2025 05:59 — 👍 2 🔁 0 💬 1 📌 0

Paper🧵 (cross-posted on X): When does composition of diffusion models "work"? Intuitively, the reason dog+hat works and dog+horse doesn’t has something to do with independence between the concepts being composed. The tricky part is to formalize exactly what this means. 1/

11.02.2025 05:59 — 👍 39 🔁 15 💬 2 📌 2

finally managed to sneak my dog into a paper: arxiv.org/abs/2502.04549

10.02.2025 05:03 — 👍 62 🔁 4 💬 1 📌 1

Credit to: x.com/sjforman/sta...

09.02.2025 04:54 — 👍 1 🔁 0 💬 0 📌 0

nice idea actually lol: “Periodic cooking of eggs” : www.nature.com/articles/s44...

09.02.2025 04:53 — 👍 7 🔁 2 💬 1 📌 0

Reminder of a great dictum in research, one of 3 drilled into us by my PhD supervisor: "Don't believe anything obtained only one way", for which the actionable dictum is "immediately do a 2nd independent test of something that looks interesting before in any way betting on it". It's a great activity!

05.02.2025 20:17 — 👍 12 🔁 3 💬 0 📌 0

I’ve been in major denial about how powerful LLMs are, mainly bc I know of no good reason for it to be true. I imagine this was how deep learning felt to theorists the first time around 😬

04.02.2025 17:25 — 👍 22 🔁 0 💬 0 📌 0

Last year, we funded 250 authors and other contributors to attend #ICLR2024 in Vienna as part of this program. If you or your organization want to directly support contributors this year, please get in touch! Hope to see you in Singapore at #ICLR2025!

21.01.2025 15:52 — 👍 37 🔁 14 💬 1 📌 0

Happy for you Peli!!

18.01.2025 23:32 — 👍 1 🔁 0 💬 0 📌 0

The thing about "AI progress is hitting a wall" is that AI progress (like most scientific research) is a maze, and the way you solve a maze is by constantly hitting walls and changing directions.

18.01.2025 15:45 — 👍 96 🔁 11 💬 4 📌 0

for example I never trust an experiment in a paper unless (a) I know the authors well or (b) I’ve reproduced the results myself

11.01.2025 22:43 — 👍 6 🔁 0 💬 0 📌 0

imo most academics are skeptical of papers? It’s well-known that many accepted papers are overclaimed or just wrong; there are only a few papers people really pay attention to despite the volume

11.01.2025 22:42 — 👍 8 🔁 0 💬 1 📌 0

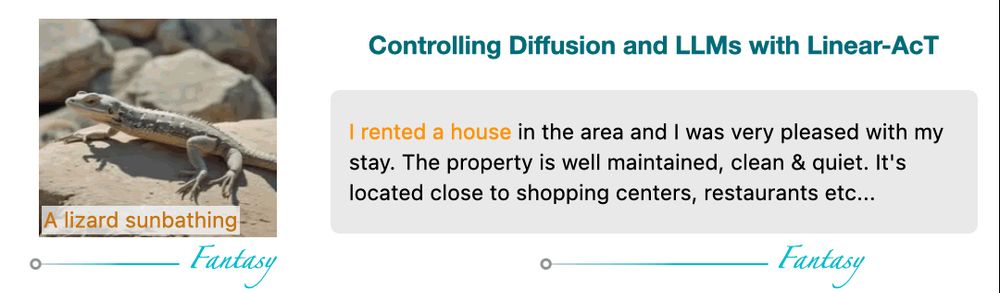

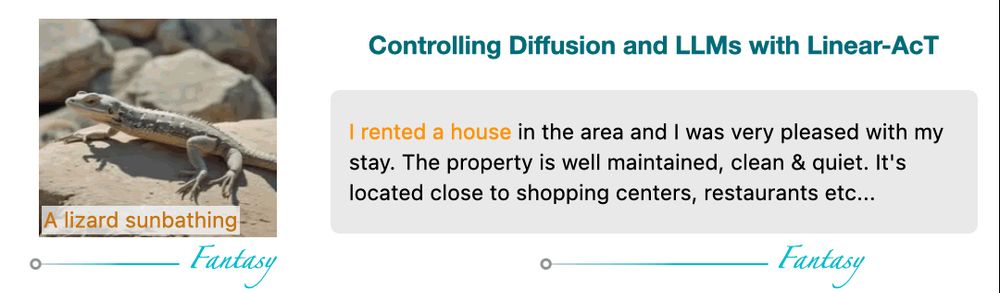

Thrilled to share the latest work from our team at @Apple where we achieve interpretable and fine-grained control of LLMs and Diffusion models via Activation Transport 🔥

📄 arxiv.org/abs/2410.23054

🛠️ github.com/apple/ml-act

0/9 🧵

10.12.2024 13:09 — 👍 47 🔁 15 💬 3 📌 5

Postdoctoral Researcher, Fundamental AI Research (PhD)

📢 My team at Meta (including Yaron Lipman and Ricky Chen) is hiring a postdoctoral researcher to help us build the next generation of flow, transport, and diffusion models! Please apply here and message me:

www.metacareers.com/jobs/1459691...

06.01.2025 17:37 — 👍 53 🔁 12 💬 1 📌 3

Giving a short talk at JMM soon, which might finally be the push I needed to learn Lean…

03.01.2025 18:30 — 👍 1 🔁 0 💬 1 📌 0

This optimal denoiser has a closed-form for finite train sets, and notably does not reproduce its train set; it can sort of "compose consistent patches." Good exercise for reader: work out the details to explain Figure 3.

01.01.2025 02:46 — 👍 10 🔁 0 💬 0 📌 0

Just read this, neat paper! I really enjoyed Figure 3 illustrating the basic idea: Suppose you train a diffusion model where the denoiser is restricted to be "local" (each pixel i only depends on its 3x3 neighborhood N(i)). The optimal local denoiser for pixel i is E[ x_0[i] | x_t[ N(i) ] ]...cont

01.01.2025 02:46 — 👍 39 🔁 2 💬 1 📌 0
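A minimal sketch of the closed form mentioned in this thread. It assumes a forward process x_t = alpha * x_0 + sigma * eps and a uniform empirical prior over a finite set of training images. Under those assumptions, the posterior over which training image produced a noisy patch is a softmax of negative squared patch distances. The denoised pixel i is then the posterior-weighted average of pixel i across training images. Border pixels use a clipped neighborhood, which is also an assumption, and the sketch ignores any further architectural constraints. The function names are hypothetical.

```python
import math


def neighborhood(h, w, i, j, r=1):
    """Coordinates of the (2r+1)x(2r+1) patch around (i, j), clipped at the borders."""
    return [(a, b)
            for a in range(max(0, i - r), min(h, i + r + 1))
            for b in range(max(0, j - r), min(w, j + r + 1))]


def optimal_local_denoiser(x_t, train, sigma, alpha=1.0, r=1):
    """E[x_0[i] | x_t[N(i)]] for every pixel i, under a uniform prior on `train`.

    Assumes x_t = alpha * x_0 + sigma * eps with eps ~ N(0, I); images are
    lists of rows of floats, all with the same shape.
    """
    h, w = len(x_t), len(x_t[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            patch = neighborhood(h, w, i, j, r)
            # Gaussian log-likelihood (up to a constant) of the observed noisy
            # patch under each training image.
            logits = [
                -sum((x_t[a][b] - alpha * img[a][b]) ** 2 for a, b in patch)
                / (2 * sigma ** 2)
                for img in train
            ]
            m = max(logits)  # subtract the max for numerical stability
            weights = [math.exp(l - m) for l in logits]
            z = sum(weights)
            # Posterior mean of the center pixel only.
            out[i][j] = sum(wt * img[i][j] for wt, img in zip(weights, train)) / z
    return out


# Usage: 1x5 images, so each 3x3 neighborhood is clipped to a 1x3 window.
A = [[1, 1, 0, 0, 0]]
B = [[0, 0, 0, 1, 1]]
print(optimal_local_denoiser([[1, 1, 0, 1, 1]], [A, B], sigma=0.05))
# -> [[1.0, 1.0, 0.0, 1.0, 1.0]]
```

In the usage example the output matches neither A nor B. The left pixels follow patches of A and the right pixels follow patches of B, a small instance of "composing consistent patches" rather than reproducing a training image.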

Neat, I’ll take a closer look! (I think I saw an earlier talk you gave on this as well)

31.12.2024 20:15 — 👍 1 🔁 0 💬 0 📌 0

LLMs don't have motives, goals or intents, and so they won't lie or deceive in order to obtain them. But they are fantastic at replicating human culture, and there, goals, intents and deceit abound. So yes, we should also care about such "behaviors" (outputs) in deployed systems.

26.12.2024 19:05 — 👍 62 🔁 10 💬 7 📌 1

One #postdoc position is still available at the National University of Singapore (NUS) to work on sampling, high-dimensional data-assimilation, and diffusion/flow models. Applications are open until the end of January. Details:

alexxthiery.github.io/jobs/2024_di...

15.12.2024 14:46 — 👍 41 🔁 18 💬 0 📌 0

“Should you still get a PhD given o3” feels like a weird category error. Yes, obviously you should still have fun and learn things in a world with capable AI. What else are you going to do, sit around on your hands?

22.12.2024 04:29 — 👍 88 🔁 6 💬 5 📌 1

sites.google.com/view/m3l-202...

14.12.2024 06:57 — 👍 1 🔁 0 💬 0 📌 0

Catch our talk about CFG at the M3L workshop Saturday morning @ NeurIPS! I’ll also be at the morning poster session, happy to chat

14.12.2024 06:56 — 👍 14 🔁 0 💬 1 📌 0

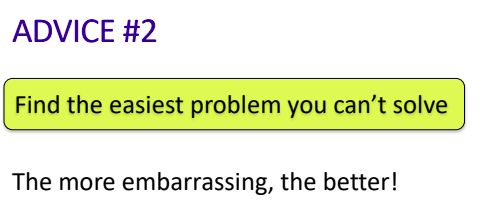

Found slides by Ankur Moitra (presented at a TCS For All event) on "How to do theoretical research." Full of great advice!

My favourite: "Find the easiest problem you can't solve. The more embarrassing, the better!"

Slides: drive.google.com/file/d/15VaT...

TCS For all: sigact.org/tcsforall/

13.12.2024 20:31 — 👍 132 🔁 29 💬 3 📌 4

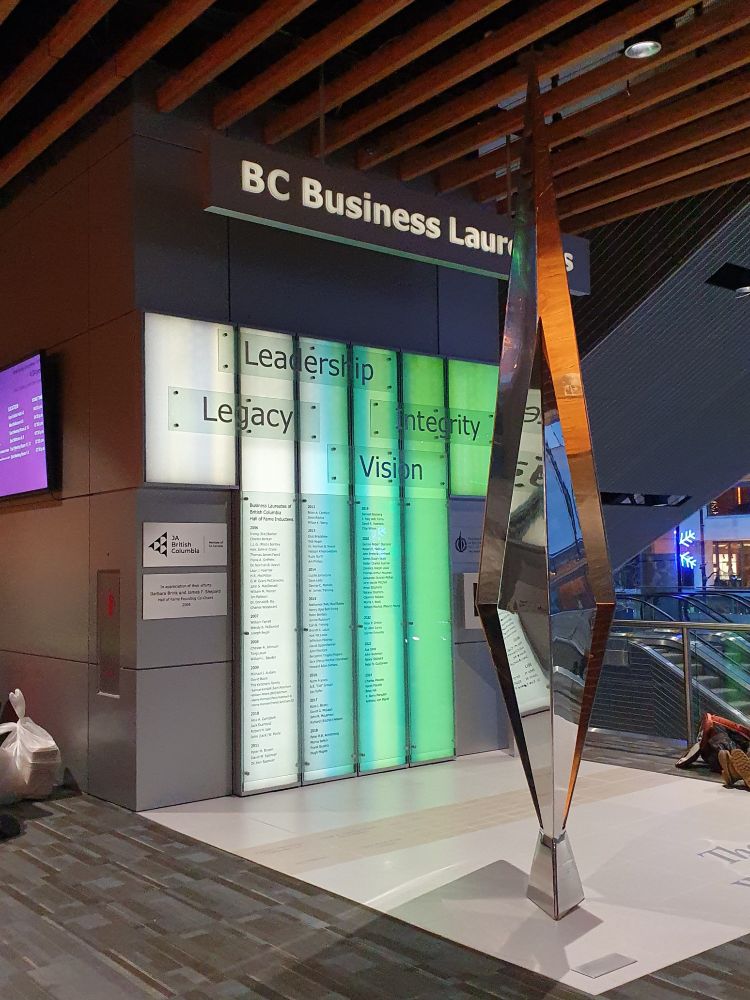

It's located near the west entrance to the west side of the conference center, on the first floor, in case that helps!

When a bunch of diffusers sit down and talk shop, their flow cannot be matched😎

It's time for the #NeurIPS2024 diffusion circle!

🕒Join us at 3PM on Friday December 13. We'll meet near this thing, and venture out from there and find a good spot to sit. Tell your friends!

12.12.2024 01:15 — 👍 42 🔁 7 💬 1 📌 1
