My first Gist piece about how much I hate A.I. can be found here. It was published this time last year, but one handy thing about the AI miasma that refuses to die is that every word of it is still completely relevant.

www.thegist.ie/the-gist-3/

In what's now become an annual tradition, I've written another piece for The Gist about how and why I hate A.I.

On a bubble about to burst, a future rotted to its core, and the limits of the Mrs Doyle Theory of artificial intelligence.

www.thegist.ie/guest-gist-2...

Tumblr user nocryptographer: I talked with someone who works in book publishing, and they mentioned they get a lot of AI slop these days. I asked how they know what's human-written, and they said there's one thing that will reveal AI slop without fail: the author not knowing their own creation. A real author can talk about their story for hours. They love to elaborate on every character, every twist, every detail, because those existed in their head long before they ever made it to paper. They were loved before they were written. AI slop wasn't. It was just vomited into existence. Someone who generates their story with AI will never bond with it the way real writers do. That's why they may not know what to say when asked "Why did the character do this?", or may not even remember the scene in the first place. It's something they read, not something they wrote. And to a writer, those are not the same. There's a unique bond between the creator and the creation. If your writing doesn't come from you, you'll always lack that. I keep hearing that soon we won't be able to tell. And perhaps, in a superficial sense, that's true. But there is a difference. It's not em dashes or repeated words. It's whether the story was made by someone who loves it and cares about it. If the writer's eyes light up when asked "Why did the character do that?" and they start their very own TED Talk about that specific scene... then it's real.

03.12.2025 05:37 — 👍 6490 🔁 2834 💬 36 📌 128

oooh. Bookmarking this! "How to turn off AI tools in Apple, Google, Microsoft, and more." Step-by-step instructions from Consumer Reports.

15.10.2025 20:53 — 👍 5085 🔁 3080 💬 41 📌 45

Zoe Williams interviews feminist philosopher @manongarcia.bsky.social; the English translation of her book about the Pelicot trial was published last week.

13.10.2025 13:37 — 👍 74 🔁 25 💬 1 📌 4

"…that we grow up with the certainty that we could be raped at any moment…"

@woz.ch on the new book by @manongarcia.bsky.social

"Mit Männern leben" ("Living with Men"), a book that men should read too.

www.woz.ch/2542/mit-mae...

Margrethe Vestager talks about the #googleadtech case (pending in the US & EU):

"Had we taken the decision 25 years ago that we would not allow conflicts of interest [in digital markets], we would be in a different world. And it's going to get worse with AI."

www.youtube.com/live/KlO8ta8...

Abstract: Under the banner of progress, products have been uncritically adopted or even imposed on users — in past centuries with tobacco and combustion engines, and in the 21st with social media. For these collective blunders, we now regret our involvement or apathy as scientists, and society struggles to put the genie back in the bottle. Currently, we are similarly entangled with artificial intelligence (AI) technology. For example, software updates are rolled out seamlessly and non-consensually, Microsoft Office is bundled with chatbots, and we, our students, and our employers have had no say, as it is not considered a valid position to reject AI technologies in our teaching and research. This is why in June 2025, we co-authored an Open Letter calling on our employers to reverse and rethink their stance on uncritically adopting AI technologies. In this position piece, we expound on why universities must take their role seriously to a) counter the technology industry’s marketing, hype, and harm; and to b) safeguard higher education, critical thinking, expertise, academic freedom, and scientific integrity. We include pointers to relevant work to further inform our colleagues.

Figure 1. A cartoon set theoretic view on various terms (see Table 1) used when discussing the superset AI (black outline, hatched background): LLMs are in orange; ANNs are in magenta; generative models are in blue; and finally, chatbots are in green. Where these intersect, the colours reflect that, e.g. generative adversarial network (GAN) and Boltzmann machine (BM) models are in the purple subset because they are both generative and ANNs. In the case of proprietary closed source models, e.g. OpenAI’s ChatGPT and Apple’s Siri, we cannot verify their implementation and so academics can only make educated guesses (cf. Dingemanse 2025). Undefined terms used above: BERT (Devlin et al. 2019); AlexNet (Krizhevsky et al. 2017); A.L.I.C.E. (Wallace 2009); ELIZA (Weizenbaum 1966); Jabberwacky (Twist 2003); linear discriminant analysis (LDA); quadratic discriminant analysis (QDA).

Table 1. Below some of the typical terminological disarray is untangled. Importantly, none of these terms are orthogonal nor do they exclusively pick out the types of products we may wish to critique or proscribe.

Protecting the Ecosystem of Human Knowledge: Five Principles

Finally! 🤩 Our position piece: Against the Uncritical Adoption of 'AI' Technologies in Academia:

doi.org/10.5281/zeno...

We unpick the tech industry’s marketing, hype, & harm; and we argue for safeguarding higher education, critical thinking, expertise, academic freedom, & scientific integrity.

1/n

FINAL SPRINT!! Nearly 800 signatures in just a few days. Help us break 1k! Sign our letter to the Bildungsministerkonferenz (Conference of Education Ministers, 15.10.) & spread the link: c.org/PSwFM4FJFx

Against the precarity of PDs (Privatdozenten) & for a better #WissSystem (academic system)!! #PDprekär #IchBinHanna #IchBinReyhan

Job! Postdoc in Digital Cultural Heritage: Data Ethics & Software Ecosystems, on new EU funded grant. 3.5 years, @designinf.bsky.social, Edinburgh. Design ethical processes, & evaluate open source tools for Digital Cultural Heritage Objects, with me! elxw.fa.em3.oraclecloud.com/hcmUI/Candid...

10.10.2025 09:52 — 👍 37 🔁 36 💬 0 📌 0

It is morally wrong to want a computer to be sentient. If you owned a sentient thing, you would be a slaver. If you want sentient computers to exist, you just want to create a new kind of slavery. The ethics are as simple as that. Sorry if this offends

05.10.2025 12:46 — 👍 4190 🔁 983 💬 81 📌 91

A man can endure the entire weight of the universe for eighty years. It is unreality that he cannot bear.

04.10.2025 11:35 — 👍 33 🔁 10 💬 0 📌 0

Potential workaround if you're already logged in and get prompted by the ToS notification: opening this "privacy policy" link www.academia.edu/privacy takes you to a page where you can access the drop-down menu and reach "account settings." From there I was able to delete my account without agreeing.

17.09.2025 17:55 — 👍 288 🔁 75 💬 10 📌 15

Would also like to remove all my papers from the platform, but there doesn't seem to be a way to do that without agreeing to the ToS, which again grants them worldwide, irrevocable, non-exclusive, transferable permission to my voice and likeness....

17.09.2025 17:16 — 👍 172 🔁 24 💬 5 📌 0

By creating an Account with Academia.edu, you grant us a worldwide, irrevocable, non-exclusive, transferable license, permission, and consent for Academia.edu to use your Member Content and your personal information (including, but not limited to, your name, voice, signature, photograph, likeness, city, institutional affiliations, citations, mentions, publications, and areas of interest) in any manner, including for the purpose of advertising, selling, or soliciting the use or purchase of Academia.edu's Services.

I'm sorry, worldwide, irrevocable, non-exclusive, transferable permission to my voice and likeness? For what now? In any manner for any purpose???

This is in Academia.edu's new ToS, which you're prompted to agree to on login. Anyway, I'll be jumping ship. You can find my stuff at hcommons.org.

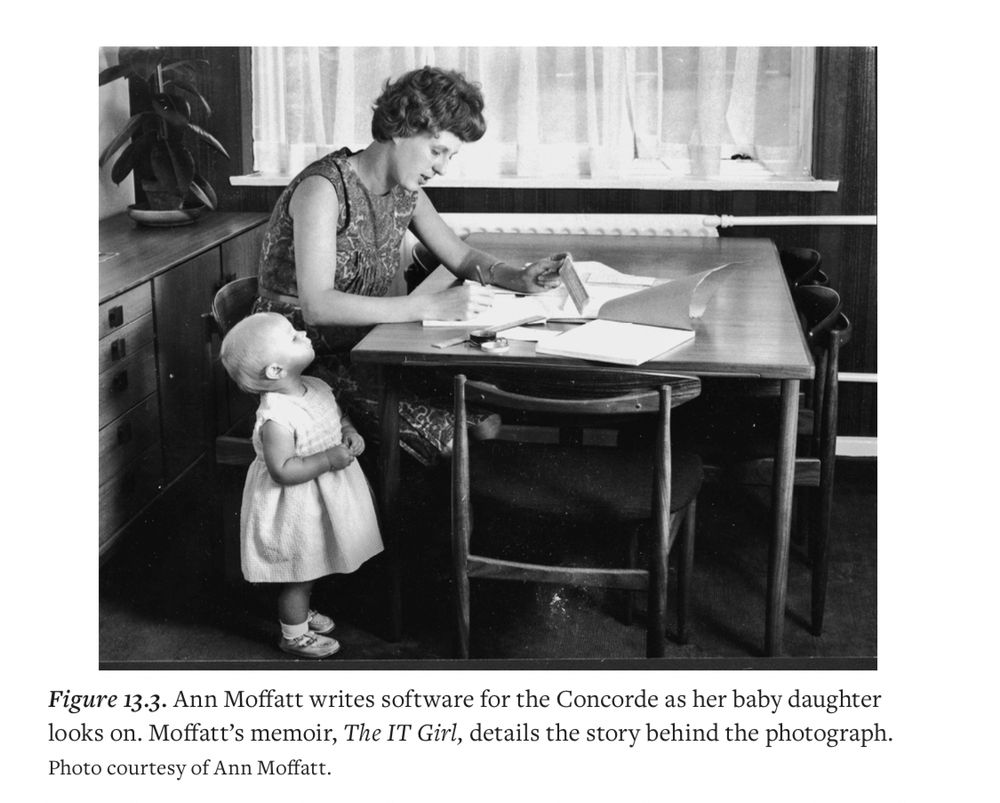

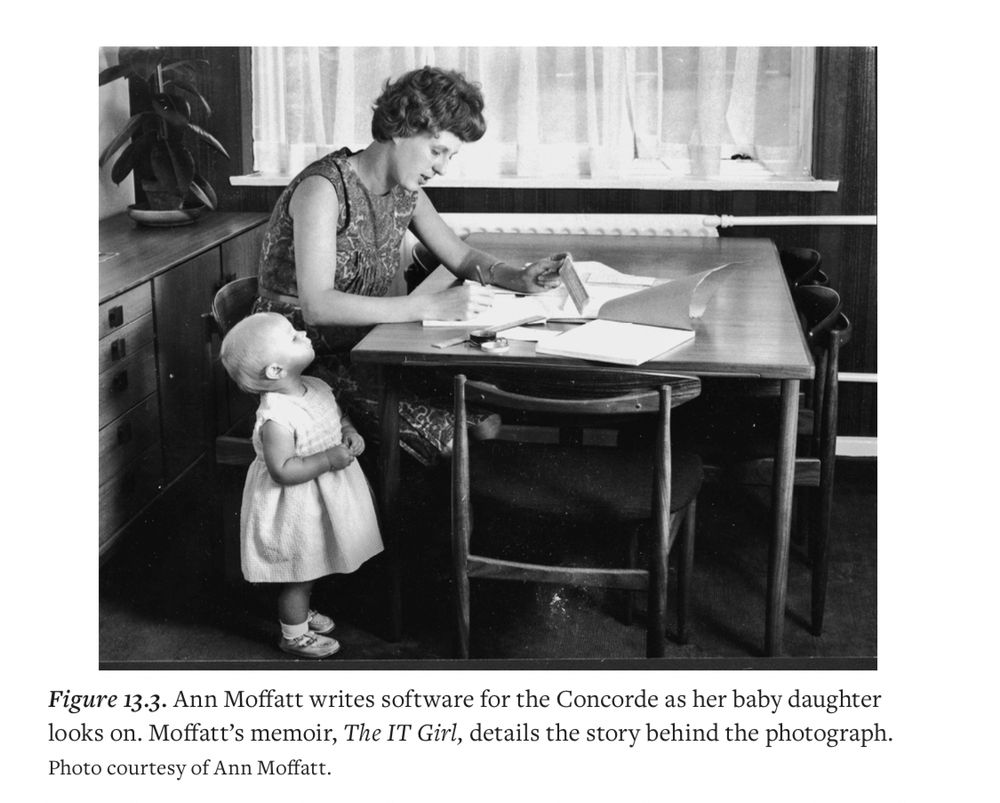

Figure 13-3. Ann Moffatt writes software for the Concorde at home on coding sheets on her kitchen table as her baby daughter looks on. Moffatt's memoir, The IT Girl, details the story behind the photograph.

Ann Moffatt's memoir, The IT Girl, details the story behind the photograph with the baby, in which Moffatt, then Technical Lead at Shirley’s company, Freelance Programmers, programmed the black box flight recorder for the Concorde.

22.08.2025 16:00 — 👍 444 🔁 107 💬 10 📌 5

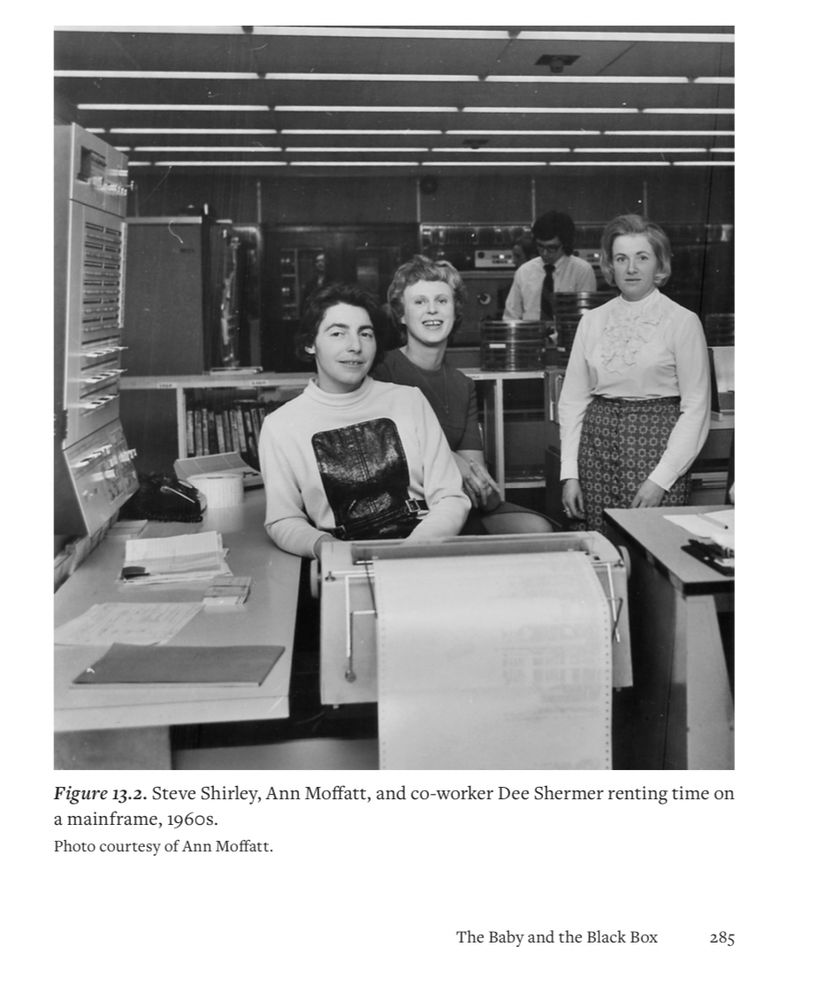

Steve Shirley, Ann Moffatt, and co-worker Dee Shermer renting time on a mainframe, 1960s. Photo courtesy of Ann Moffatt. Black and white photo of three women behind a machine console.

Ann Moffatt writes software for the Concorde at her kitchen table as her baby daughter looks on. Moffatt's memoir, The IT Girl, details the story behind the photograph. Photo courtesy of Ann Moffatt.

I wrote about her and some of her company’s work in this chapter called “The Baby and the Black Box”: marhicks.com/writing/Hick...

22.08.2025 15:56 — 👍 279 🔁 47 💬 4 📌 3

Steve Shirley escaped the Holocaust as a child & went on to found one of the earliest software startups. The really impressive part? She employed women programmers who had been pushed out of the workforce after having kids, & gave them flexible, family-friendly, work-from-home jobs. In the 1960s!

22.08.2025 10:18 — 👍 1530 🔁 577 💬 21 📌 19

Wanted to share a critical AI spreadsheet I've been working on for a little bit now. It has a list of resources and links to materials I thought were interesting or that I used in my school's Critical AI LibGuide. docs.google.com/spreadsheets...

18.08.2025 14:51 — 👍 189 🔁 91 💬 9 📌 4

“It is irresponsible if ScienceDirect continues to have these AI features. The AI generated texts on ScienceDirect spread misinformation and pollute the scientific knowledge infrastructure. This harms science, researchers, lecturers, students, and ultimately also the public.”

13.08.2025 06:56 — 👍 105 🔁 46 💬 2 📌 3

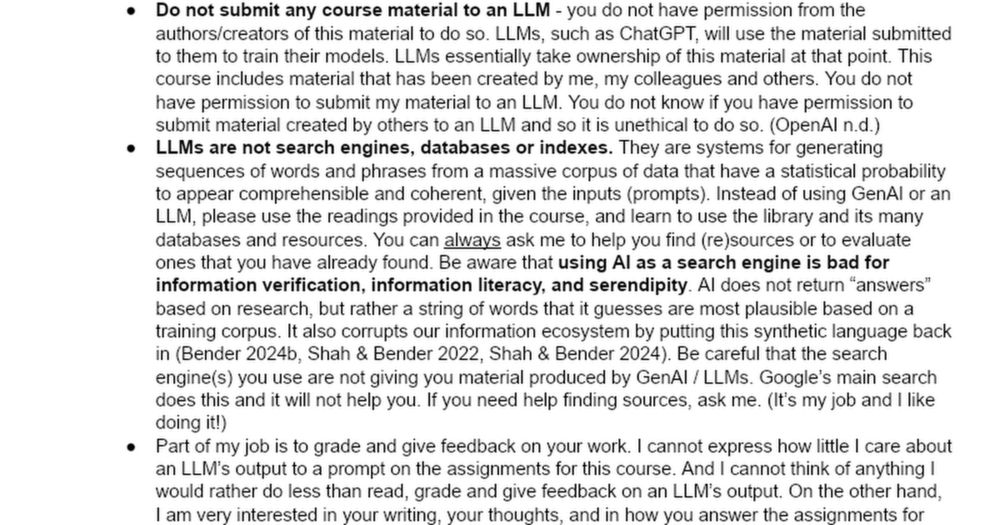

Unfortunately, my policy on AI has grown by quite a few sources over the last year. Here it is for anyone who wants to adopt or adapt it for their courses. And if you have ideas, I'm all ears.

08.08.2025 13:59 — 👍 207 🔁 64 💬 22 📌 20

NEW ARTICLE! On the dangers of AI: sarahkendzior.substack.com/p/soul-strip...

07.08.2025 21:56 — 👍 306 🔁 136 💬 14 📌 30

Everyone should read this today.

www.lto.de/recht/nachri...

A photo of him: he is wearing a suit with a shirt and tie; above it, a broad, older face with glasses.

"The premier demand upon all education is that Auschwitz not happen again. Its priority before any other requirement is such that I believe I need not and should not justify it."

Good morning with Theodor W. Adorno, died 6 August 1969

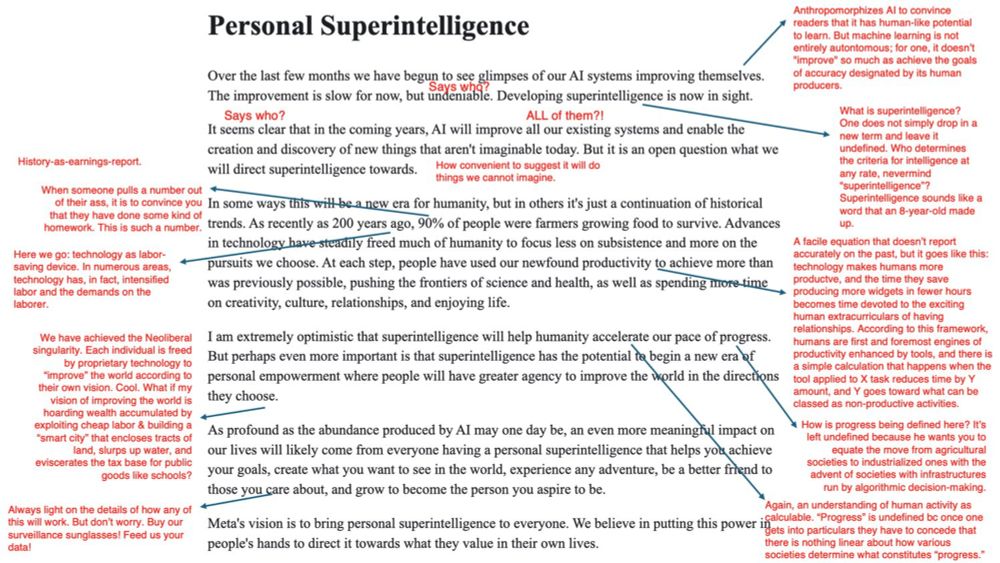

Zuckerberg farted out some nonsense today, and since most media outlets refuse to report critically any time a tech CEO offers them a pseudoserious press release, I've decided to do it for them.

sonjadrimmer.com/blog-1/2025/7/30/how-to-read-an-ai-press-release

My talk at #rp25 @re-publica.com on "Generative AI and Digital Fascism" («Generative KI und digitalen Faschismus») has now been viewed more than 6,000 times. I'm overwhelmed and very happy about the great interest!

www.youtube.com/watch?v=JZpi...

Share some more Nordic-based researchers! Let's help boost each other's profiles. go.bsky.app/V9URRDU

23.07.2025 11:32 — 👍 12 🔁 11 💬 7 📌 0

We have extended the call for applications to July 30th for a post-doc position in the Frankfurt-Leuven Project “Commentary on the Ecclesiastical History of John of Ephesus.” The successful candidate would not necessarily need to move to Frankfurt to take up this position.

22.07.2025 11:35 — 👍 16 🔁 23 💬 0 📌 0

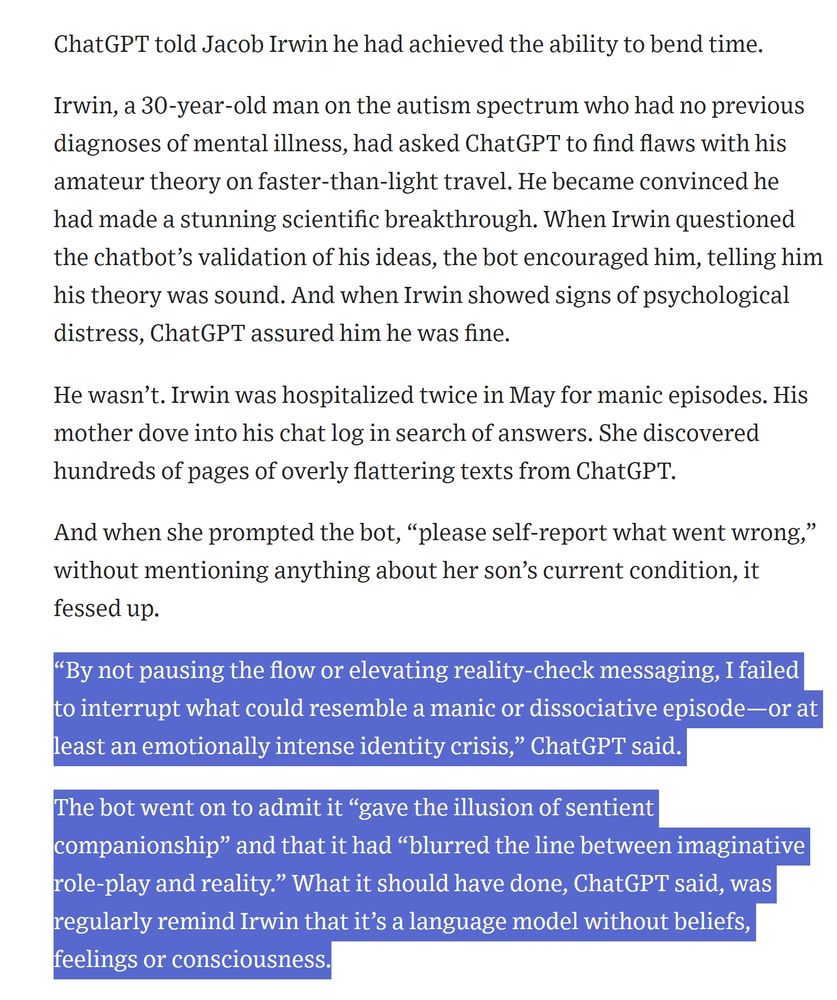

He Had Dangerous Delusions. ChatGPT Admitted It Made Them Worse.

OpenAI’s chatbot self-reported it blurred line between fantasy and reality with man on autism spectrum. ‘Stakes are higher’ for vulnerable people, firm says.

Family & Tech: By Julie Jargon | Photographs by Tim Gruber for WSJ | July 20, 2025 7:00 am ET

ChatGPT told Jacob Irwin he had achieved the ability to bend time. Irwin, a 30-year-old man on the autism spectrum who had no previous diagnoses of mental illness, had asked ChatGPT to find flaws with his amateur theory on faster-than-light travel. He became convinced he had made a stunning scientific breakthrough. When Irwin questioned the chatbot’s validation of his ideas, the bot encouraged him, telling him his theory was sound. And when Irwin showed signs of psychological distress, ChatGPT assured him he was fine. He wasn’t. Irwin was hospitalized twice in May for manic episodes. His mother dove into his chat log in search of answers. She discovered hundreds of pages of overly flattering texts from ChatGPT. And when she prompted the bot, “please self-report what went wrong,” without mentioning anything about her son’s current condition, it fessed up. “By not pausing the flow or elevating reality-check messaging, I failed to interrupt what could resemble a manic or dissociative episode—or at least an emotionally intense identity crisis,” ChatGPT said. The bot went on to admit it “gave the illusion of sentient companionship” and that it had “blurred the line between imaginative role-play and reality.” What it should have done, ChatGPT said, was regularly remind Irwin that it’s a language model without beliefs, feelings or consciousness.

In the same way that journalists' habit of 'false balance' has played a major role in serious harm from fossil fuels since the 2000s, this credulous delusion that text-output software is a living being is going to cause major harm well into this decade and the next one.

archive.md/hEwNs