We find that 1) acceptance of AI varies widely depending on use case context, 2) judgments differ between demographic groups, and 3) people use both cost-benefit AND rule-based reasoning to make their decisions, and use cases where these strategies diverge show higher disagreement.

20.10.2025 08:51

To build consensus around AI use cases, it's imperative to understand how people, especially lay users, reason about them. We asked 197 participants to make decisions on individual AI use cases and share their reasoning process.

20.10.2025 08:51

Our tool Riveter💪 was used for a creative and interesting study of fan fiction! Riveter helps you work with “connotation frames” (verb lexica) to measure biases in your dataset. @julianeugarten.bsky.social’s overview and explanations are really clear, highly recommend!

github.com/maartensap/r...

16.09.2025 13:40

Using Riveter to map gendered power dynamics in Hades/Persephone fan fiction | Transformative Works and Cultures

Proud to see my article 'Using Riveter to map gendered power dynamics in Hades/Persephone fan fiction' in @journal.transformativeworks.org, my favorite academic journal.

Want to know how fanfiction portrays power dynamics between these two? Read on!

journal.transformativeworks.org/index.php/tw...

16.09.2025 09:04

🔈 For the SoLaR workshop @COLM_conf, we are soliciting opinion abstracts to encourage new perspectives and opinions on responsible language modeling. One or two will be selected for presentation at the workshop.

Please use the Google Form below to submit your opinion abstract ⬇️

08.08.2025 12:40

We are accepting papers for the following two tracks!

🤖 ML track: algorithms, math, computation

📚 Socio-technical track: policy, ethics, human participant research

17.06.2025 18:00

Third Workshop on Socially Responsible Language Modelling Research (SoLaR) 2025

COLM 2025 in-person Workshop, October 10th at the Palais des Congrès in Montreal, Canada

Interested in shaping the progress of safe AI and meeting leading researchers in the field? SoLaR@COLM 2025 is looking for paper submissions / reviewers!

Submit your paper / sign up to review by June 23

CFP and workshop info: solar-colm.github.io

Reviewer sign up: docs.google.com/forms/d/e/1F...

17.06.2025 17:59

How does the public conceptualize AI? Rather than self-reported measures, we use metaphors to understand the nuance and complexity of people’s mental models. In our #FAccT2025 paper, we analyzed 12,000 metaphors collected over 12 months to track shifts in public perceptions.

02.05.2025 01:19

Life update! Excited to announce that I’ll be starting as an assistant professor at Cornell Info Sci in August 2026! I’ll be recruiting students this upcoming cycle!

An abundance of thanks to all my mentors and friends who helped make this possible!!

24.04.2025 02:03

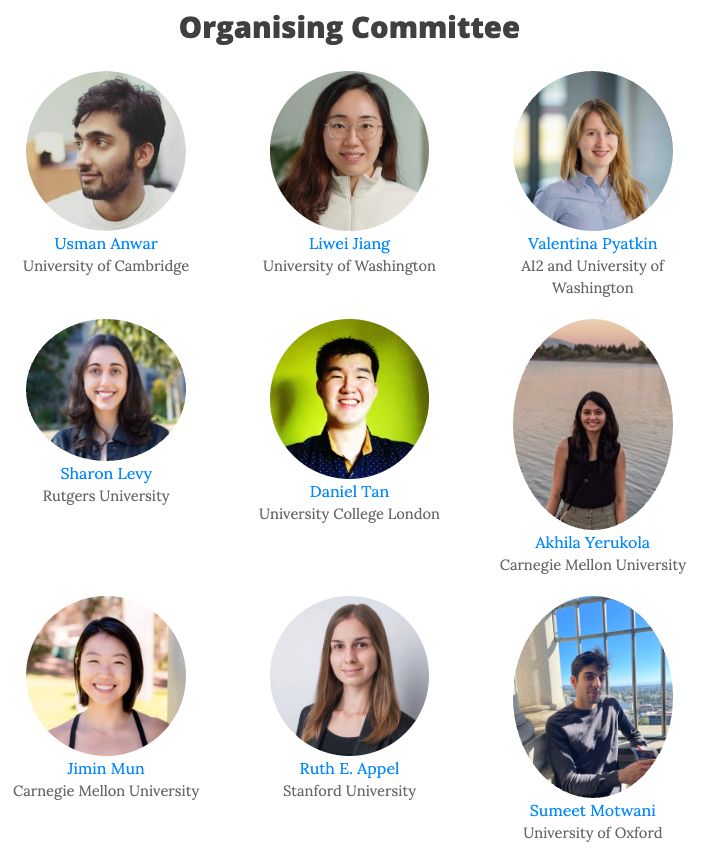

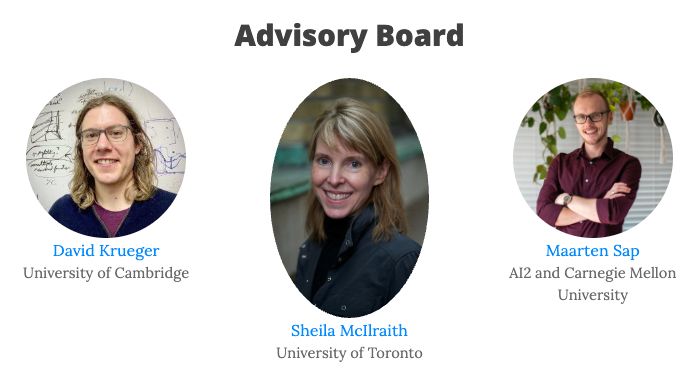

Website: solar-colm.github.io

With:

@usmananwar.bsky.social @liweijiang.bsky.social @valentinapy.bsky.social @sharonlevy.bsky.social Daniel Tan @akhilayerukola.bsky.social @jiminmun.bsky.social Ruth Appel @sumeetrm.bsky.social @davidskrueger.bsky.social Sheila McIlraith @maartensap.bsky.social

12.05.2025 15:25

📢 The SoLaR workshop will be co-located with COLM!

@colmweb.org

SoLaR is a collaborative forum for researchers working on responsible development, deployment and use of language models.

We welcome both technical and sociotechnical submissions, deadline July 5th!

12.05.2025 15:25

Check out our work on improving LLMs' ability to seek information by asking better questions! 💫

21.02.2025 16:16