I think it's much more important that we get a better scoring system for matching reviewers and papers. High affinity scores on OpenReview are often misleading. A lot of reviewers have complained to me that they get random papers from TMLR, and they don't enjoy reviewing as a consequence.

13.12.2024 16:15 — 👍 2 🔁 0 💬 1 📌 0

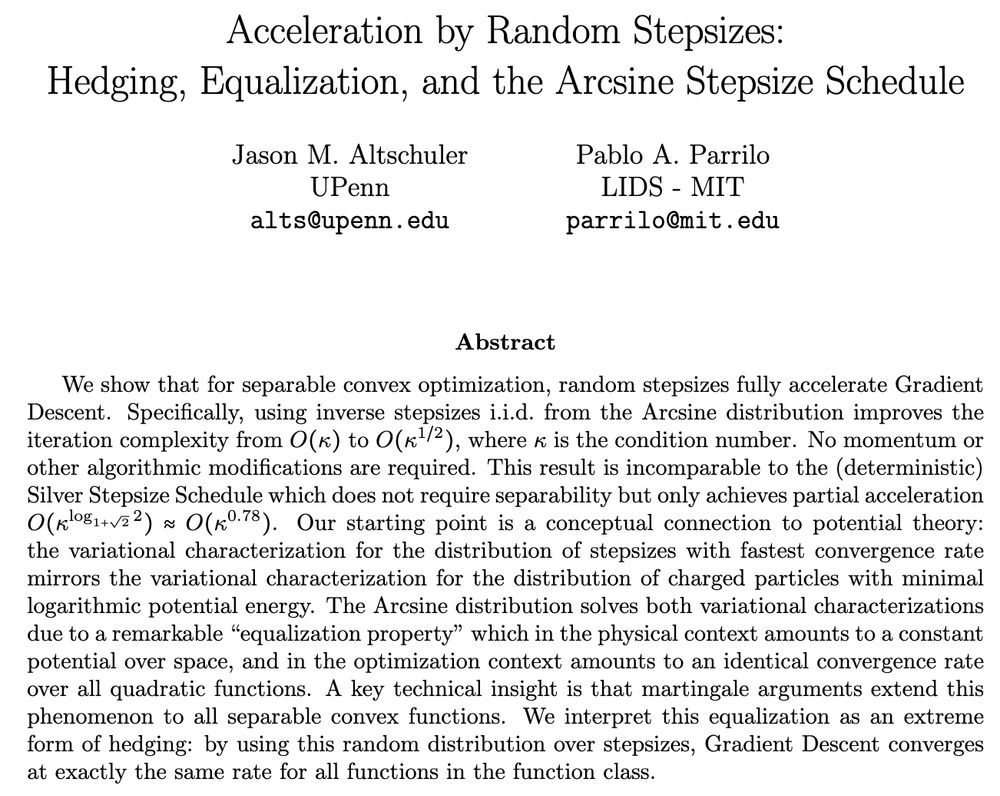

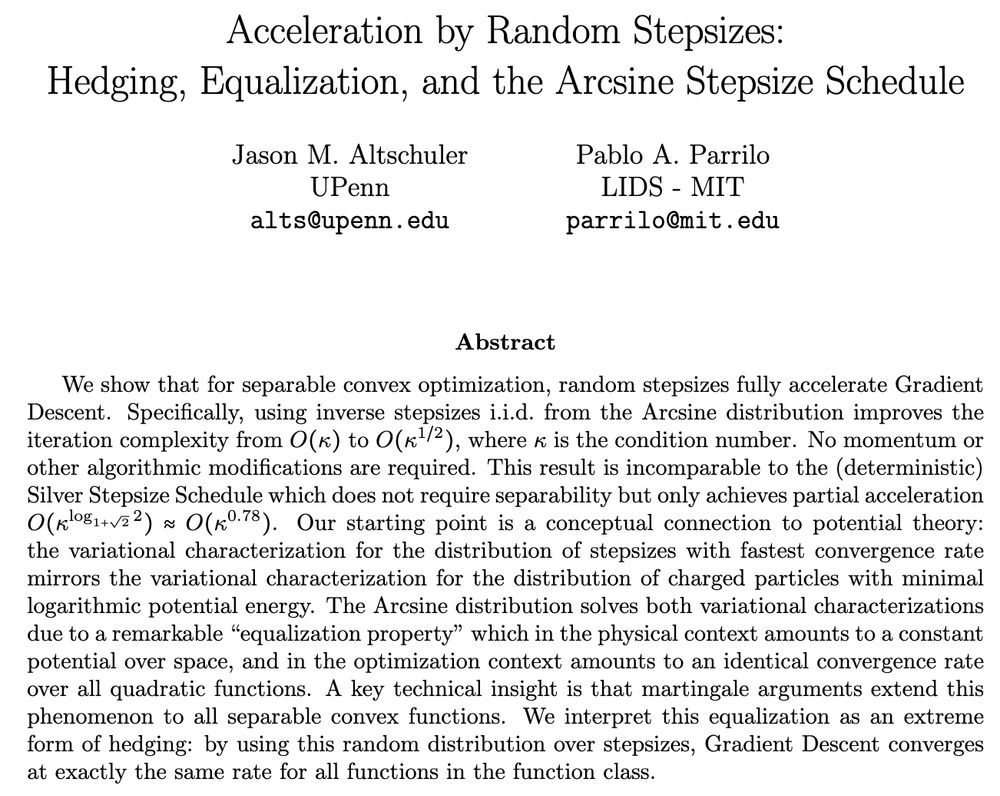

Cool new result: random arcsine stepsize schedule accelerates gradient descent (no momentum!) on separable problems. The separable class is clearly very limited, and it remains unclear if acceleration using stepsizes is possible on general convex problems.

arxiv.org/abs/2412.05790

10.12.2024 13:04 — 👍 3 🔁 0 💬 0 📌 0

The idea that one needs to know a lot of advanced math to start doing research in ML seems so wrong to me. Instead of reading books for weeks and forgetting most of them a year later, I think it's much better to try to do things, see what knowledge gaps prevent you from doing them, and only then read.

06.12.2024 14:26 — 👍 9 🔁 2 💬 4 📌 0

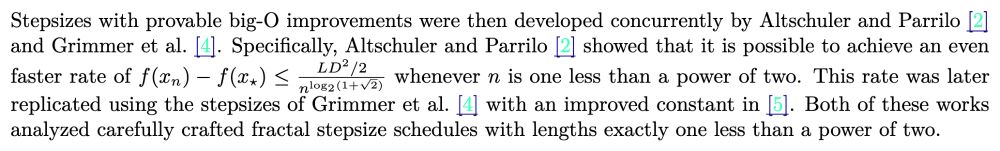

It's a bit hard to say because these kinds of results are still quite new, but one of the most recent papers on the topic, arxiv.org/abs/2410.16249, mentions a conjecture on the optimality of its 1/n^{log₂(1+√2)} rate (not for the last iterate though).

27.11.2024 23:01 — 👍 1 🔁 0 💬 0 📌 0

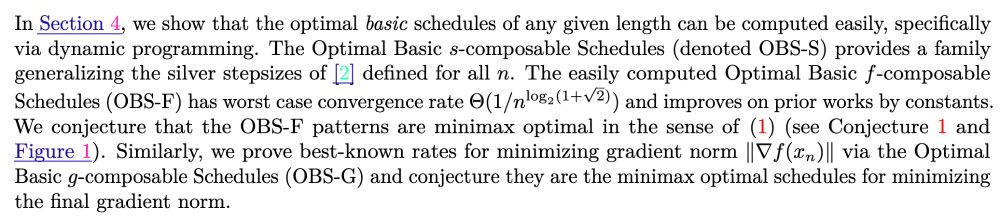

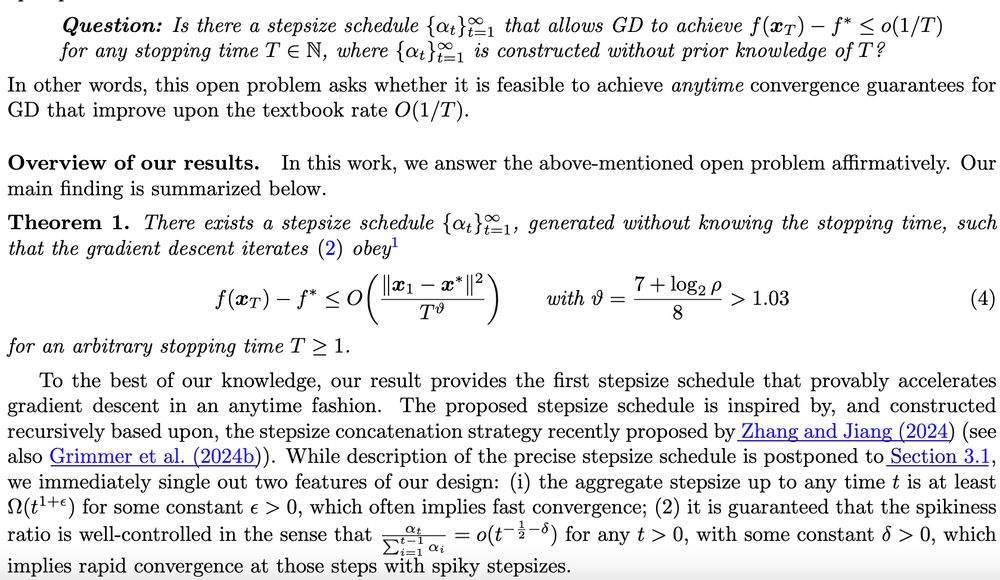

Gradient Descent with large stepsizes converges faster than O(1/T), but this was previously shown only for the *best* iterate. Cool to see new results showing we can also get an improvement for the last iterate:

arxiv.org/abs/2411.17668

I am still waiting to see a version with adaptive stepsizes though 👀

27.11.2024 15:02 — 👍 10 🔁 0 💬 1 📌 0

Co-founder and CEO, Mistral AI

prev: @BrownUniversity, @uwcse/@uw_wail phd, ex-@cruise, RS @waymo. 0.1x engineer, 10x friend.

spondyloarthritis, cars ruin cities, open source

Every evening: trying to take over the world. The rest of the time: MCF (Paris).

@GuillaumeG_ on X

🇨🇵 🇬🇧 🇪🇸 🇮🇹

Co-founder @forecastingco. Previously PhD UC Berkeley @berkeley_ai, @ENS_ParisSaclay (MVA) and @polytechnique 🇫🇷 🇺🇸 | 👊🥋

Doing mathematics, also as a job. Now at Uni Bremen, was at TU Braunschweig. Only here for the math. Optimization, inverse problems, imaging, learning - stuff like that.

Research Scientist at FAIR, Meta. 💬 My opinions are my own.

Group Leader in Tübingen, Germany

I’m 🇫🇷 and I work on RL and lifelong learning. Mostly posting on ML related topics.

ML researcher in bio 🧬 at inceptive.life. PhD from EPFL🇨🇭Mountain lover 🏔

Associate Prof. in ML & Statistics at NUS 🇸🇬

MonteCarlo methods, probabilistic models, Inverse Problems, Optimization

https://alexxthiery.github.io/

🧙🏻♀️ scientist at Meta NYC | http://bamos.github.io

PhD student @Imperial designing efficient NNs | EEE MEng '22 @Imperial

Senior Lecturer, Visual Computing, University of Bath

🔗 https://vinaypn.github.io/

UC Berkeley Professor working on AI. Co-Director: National AI Institute on the Foundations of Machine Learning (IFML). http://BespokeLabs.ai cofounder

Principal scientist @ TII

Visit my research blog at https://alexshtf.github.io

Researcher in machine learning and optimization. Open source enthusiast. Parody songwriter (aka PianoHamster). OCD survivor.

Assistant Professor at the University of Alberta. Amii Fellow, Canada CIFAR AI chair. Machine learning researcher. All things reinforcement learning.

📍 Edmonton, Canada 🇨🇦

🔗 https://webdocs.cs.ualberta.ca/~machado/

🗓️ Joined November, 2024

Llama Farmer

Ex CLO Hugging Face, Xoogler

Director, Princeton Language and Intelligence. Professor of CS.