Computer-mediated carcinisation

20.05.2025 01:19 — 👍 1 🔁 0 💬 1 📌 0

This includes many of my papers, too. The point I am making is that the findings in careful academic research likely represent a lower bound of AI capabilities at this point.

15.05.2025 22:16 — 👍 51 🔁 4 💬 3 📌 1

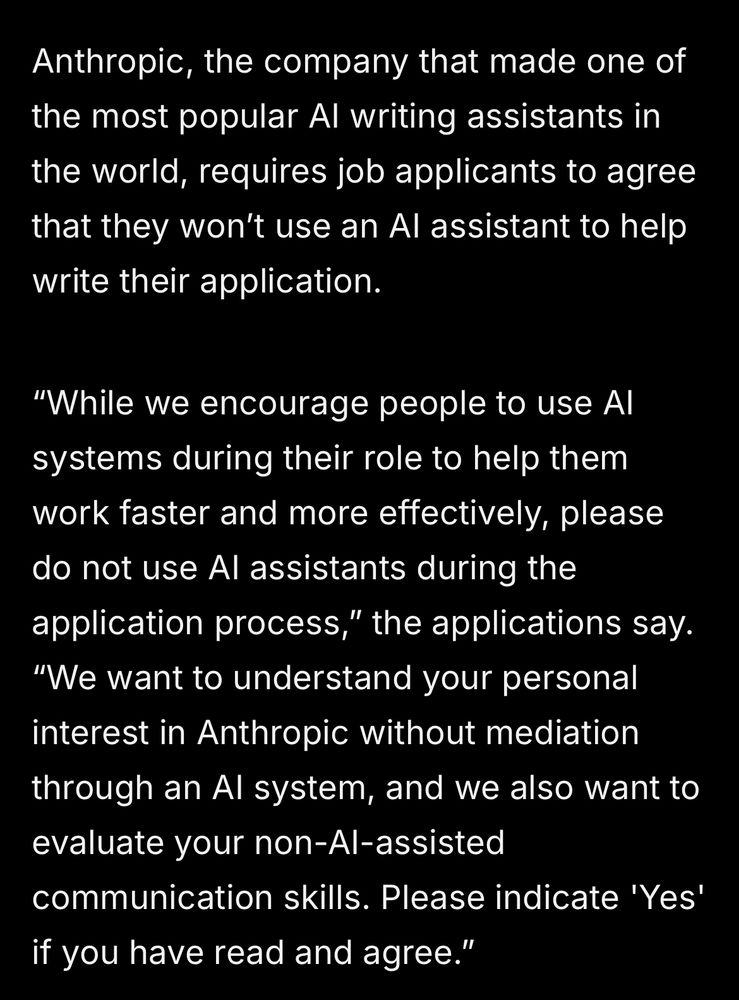

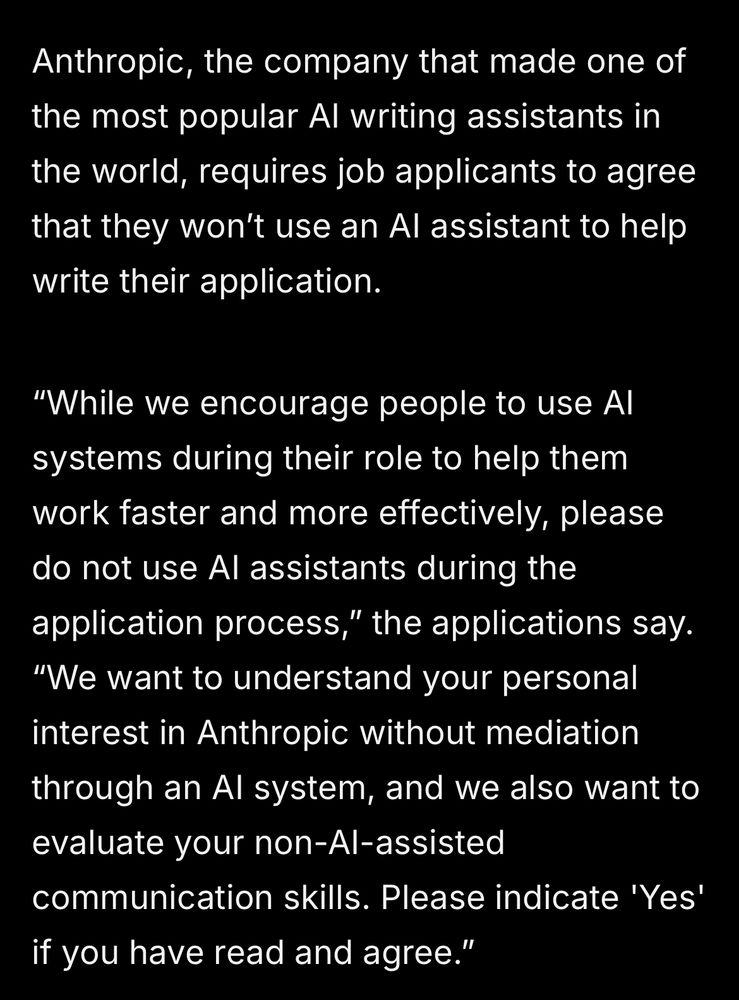

I can’t

i just …

i can’t

www.404media.co/anthropic-cl...

04.02.2025 13:30 — 👍 1062 🔁 323 💬 35 📌 91

I bet if someone *has* succeeded, it's via spinning up an elicitation-GPT that just drilled you for critical intel, wouldn't let you weasel out via under/overspecified output, then dumped it all back to you in standardized format so you could think faster - basically exporting your extraction algo.

30.01.2025 20:34 — 👍 1 🔁 0 💬 0 📌 0

Exactly. If we overheard Dario, Sam, and Demis chatting about certain well known AI critics, I'd be willing to bet they'd be expressing gratitude. Proving a grouch wrong is a real motivator.

29.01.2025 19:05 — 👍 0 🔁 0 💬 0 📌 0

Hi Everyone!

We're hosting our Wharton AI and the Future of Work Conference on 5/21-22. Last year was a great event with some of the top papers on AI and work.

Paper submission deadline is 3/3. Come join us! Submit papers here: forms.gle/ozJ5xEaktXDE...

29.01.2025 18:46 — 👍 16 🔁 15 💬 2 📌 2

Exciting new hobby project in the offing related to AI and skill. Involves a childhood passion, a wild leap into the unknown, made real via an order from Amazon just now. Will be 100% cool, I will be documenting things, sharing eventually. Feels like April 2023 again!

15.01.2025 05:07 — 👍 2 🔁 0 💬 0 📌 0

The Silo is so good. Just superb. This generation's answer to the BSG remake.

13.01.2025 01:44 — 👍 2 🔁 0 💬 0 📌 0

My hobby horse. You can simulate a rocket all you want, and use more energy on computation than the actual rocket would, but you won't get to orbit until you ignite rocket fuel. What if all the energy we are spending on simulating learning is not the juice we really need to make intelligence?

09.01.2025 08:49 — 👍 58 🔁 11 💬 8 📌 0

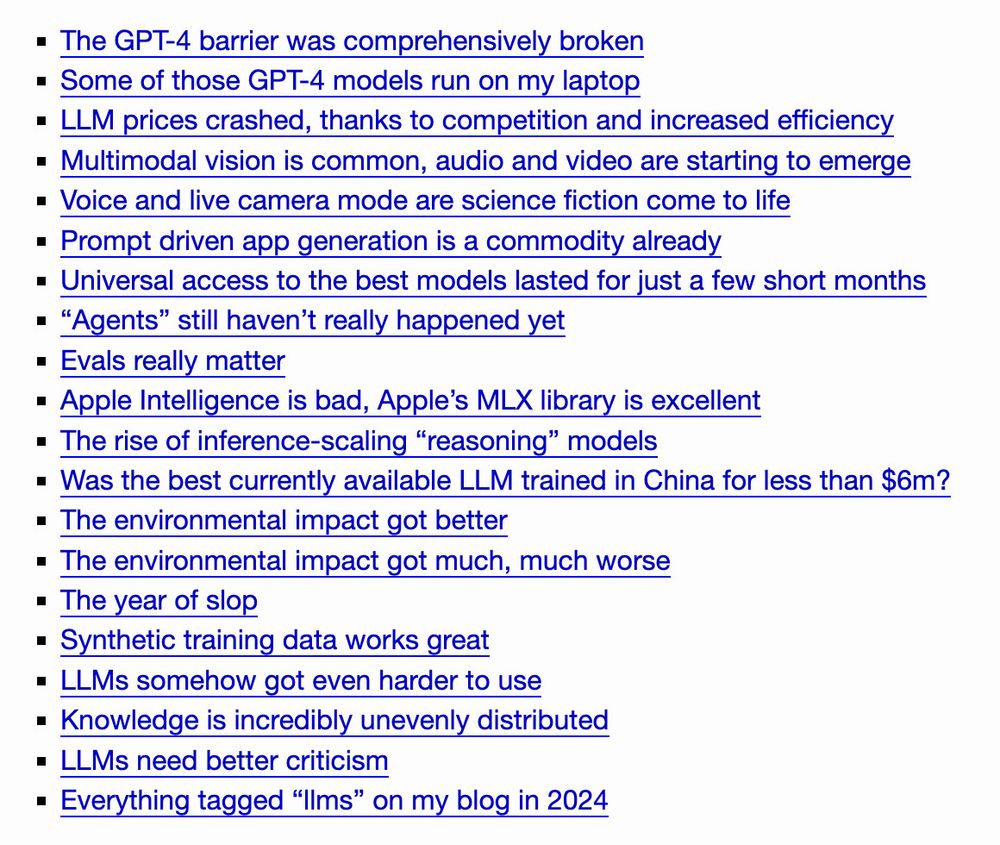

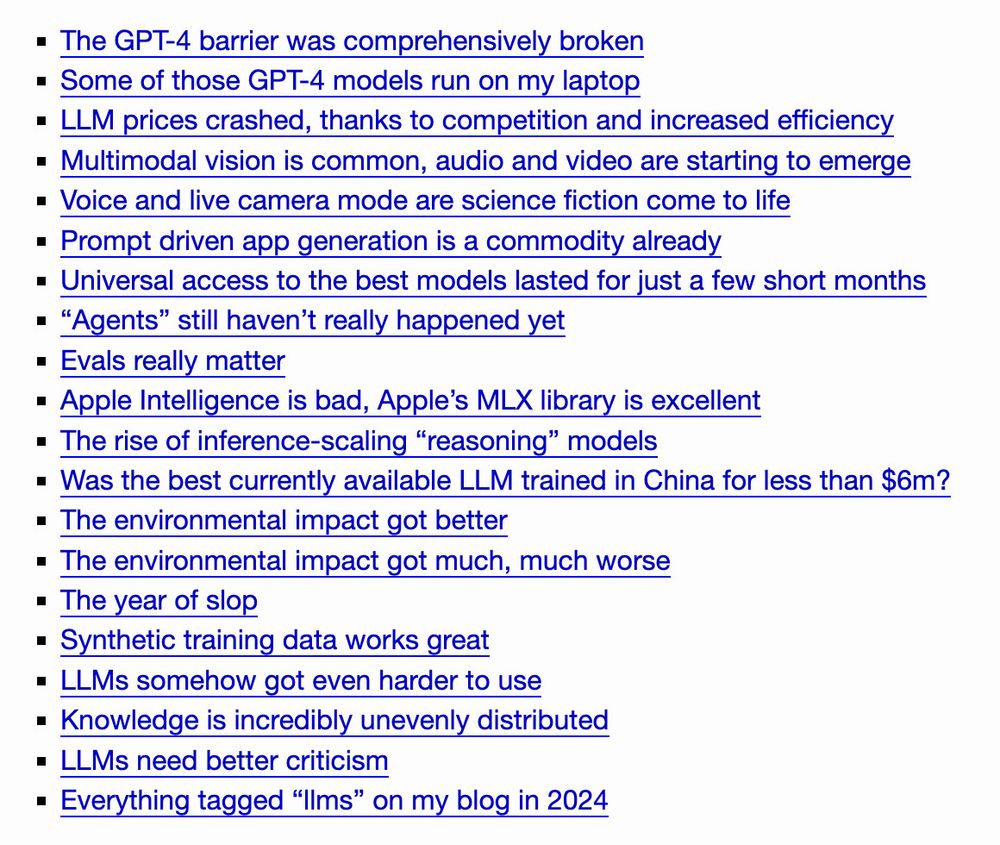

The GPT-4 barrier was comprehensively broken

Some of those GPT-4 models run on my laptop

LLM prices crashed, thanks to competition and increased efficiency

Multimodal vision is common, audio and video are starting to emerge

Voice and live camera mode are science fiction come to life

Prompt driven app generation is a commodity already

Universal access to the best models lasted for just a few short months

“Agents” still haven’t really happened yet

Evals really matter

Apple Intelligence is bad, Apple’s MLX library is excellent

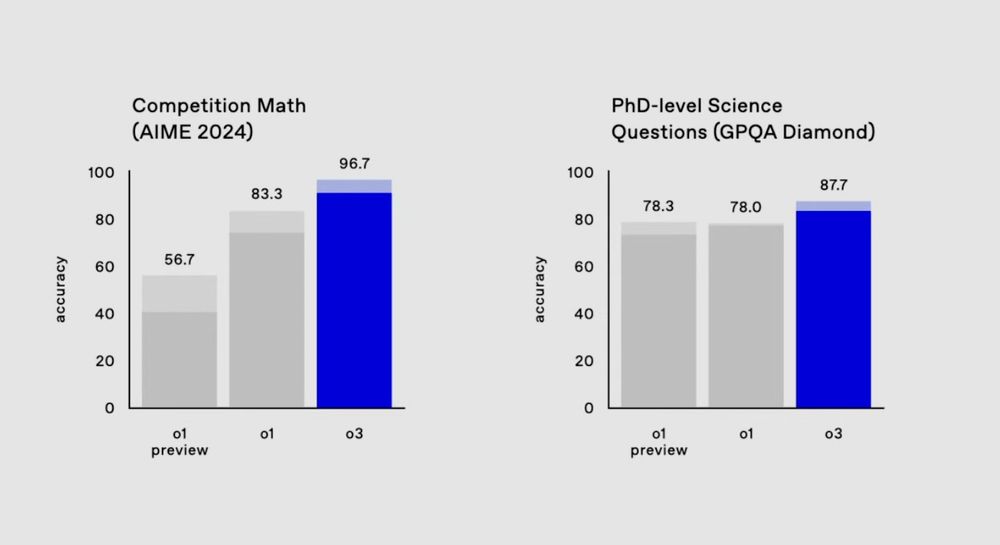

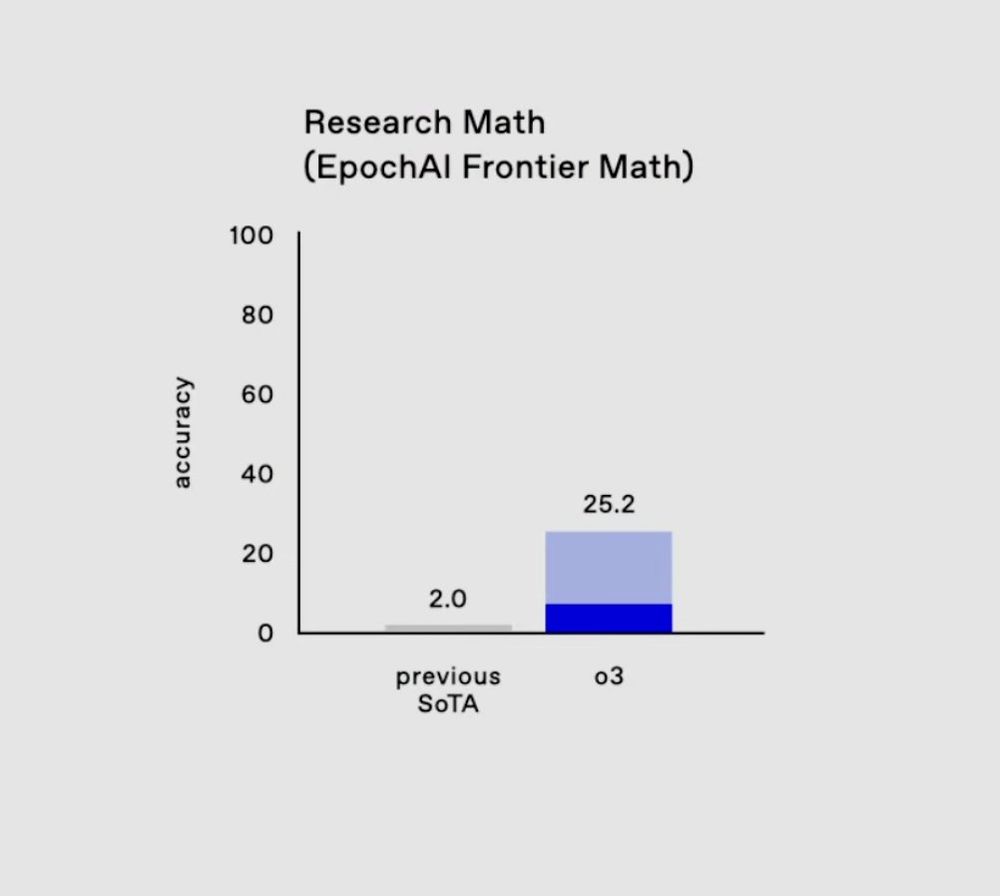

The rise of inference-scaling “reasoning” models

Was the best currently available LLM trained in China for less than $6m?

The environmental impact got better

The environmental impact got much, much worse

The year of slop

Synthetic training data works great

LLMs somehow got even harder to use

Knowledge is incredibly unevenly distributed

LLMs need better criticism

Everything tagged “llms” on my blog in 2024

Here's my end-of-year review of things we learned out about LLMs in 2024 - we learned a LOT of things simonwillison.net/2024/Dec/31/...

Table of contents:

31.12.2024 18:10 — 👍 652 🔁 148 💬 28 📌 47

In 2024 we learned a lot about how AI is impacting work. People report that they're saving 30 minutes a day using AI (aka.ms/nfw2024), and randomized controlled trials reveal they’re creating 10% more documents, reading 11% fewer e-mails, and spending 4% less time on e-mail (aka.ms/productivity...).

31.12.2024 19:39 — 👍 16 🔁 4 💬 1 📌 0

Just *one* of the reasons that Blindsight was ahead of its time. Way ahead.

20.12.2024 16:36 — 👍 1 🔁 0 💬 1 📌 0

Massive congrats!! So excited to check it out.

14.12.2024 14:42 — 👍 3 🔁 0 💬 3 📌 1

Wow!

10.12.2024 20:54 — 👍 0 🔁 0 💬 0 📌 0

Join me by the fireside this Friday with Matt Beane as we dive into one of today’s biggest workforce challenges: upskilling at scale. 📈

Link below to hear the full discussion on Friday, December 13 at 11 am EST!

linktr.ee/RitaMcGrath

@mattbeane.bsky.social

09.12.2024 18:45 — 👍 4 🔁 2 💬 1 📌 0

I propose a workshop.

Most engineers/CS working on AI presume away well-established, profound brakes on AI diffusion.

Most social scientists presume away how AI use could reshape those brakes.

Let's gather these groups, examine these brakes 1-by-1, make grounded predictions.

07.12.2024 19:12 — 👍 2 🔁 0 💬 0 📌 0

Models like o1 suggest that people won’t generally notice AGI-ish systems that are better than humans at most intellectual tasks, but which are not autonomous or self-directed

Most folks don’t regularly have a lot of tasks that bump up against the limits of human intelligence, so won’t see it

07.12.2024 00:49 — 👍 155 🔁 26 💬 8 📌 2

Grateful for the opportunity to visit and learn from the professionals at the L&DI conference. And very glad to hear you found my talk so valuable, Garth! Means a lot.

04.12.2024 14:02 — 👍 1 🔁 1 💬 2 📌 0

I made an HRI Starter Pack!

If you are a Human-Robot Interaction or Social Robotics researcher and I missed you while scrolling through bsky's suggestions, just ping me and I'll add ya.

go.bsky.app/CsnNn3s

03.12.2024 18:37 — 👍 42 🔁 14 💬 11 📌 2

The Avatar Economy

Are remote workers the brains inside tomorrow’s robots?

Wrote a little something on this in 2012, though I didn't anticipate the main reason for hiring such workers - training data.

www.technologyreview.com/2012/07/18/1...

03.12.2024 13:23 — 👍 1 🔁 0 💬 0 📌 0

Ohmydeargod.

03.12.2024 10:55 — 👍 0 🔁 0 💬 0 📌 0

David Meyer (v.) /ˈdeɪvɪd ˈmaɪ.ər/

To attribute complex, intentional design or deeper meaning to simple emergent behaviors of large language models, especially when such behaviors are more likely explained by straightforward technical constraints or training artifacts.

03.12.2024 10:53 — 👍 2 🔁 0 💬 0 📌 0

They did NOT. Wow. Sign of the times.

And I can verify on your rule! I was so flabbergasted and honored. Your feedback was rich and so helpful. Remain grateful.

03.12.2024 01:19 — 👍 1 🔁 0 💬 0 📌 0

I remember *treasuring* the previews. I'd fight to get there on time. Was part of the thrill.

But ads? F*ck that noise. Seriously, straight up evil.

30.11.2024 20:04 — 👍 0 🔁 0 💬 1 📌 0

Never occurred to me there'd be an algo under the hood that could reliably learn to provide content I'd value more than a straight read of my hand-curated list of people. My solution has been following people if they post high signal stuff all the time.

30.11.2024 18:12 — 👍 2 🔁 0 💬 1 📌 0

I have never used the feed page. What a horror, can't quite understand why folks would try.

Only/ever the "following" page. Even there things got pretty intolerable towards/around the election, now settled down.

30.11.2024 17:51 — 👍 1 🔁 0 💬 1 📌 0

Kurt Vonnegut, Joe Heller, and How to Think Like a Mensch

This story remains my favorite Thanksgiving message; it reminds me to be grateful for what I have and of the evils of jealousy and destructive competition. I first posted it on my work matters blog mo...

My Thanksgiving post. A Kurt Vonnegut poem. He talks with Joe Heller (of Catch-22 fame) about a billionaire. Key part:

Joe said, "I've got something he can never have"

And I said, "What on earth could that be, Joe?"

And Joe said, "The knowledge that I've got enough"

www.linkedin.com/pulse/kurt-v...

27.11.2024 19:40 — 👍 12 🔁 2 💬 0 📌 1

Oh my dear god this is an incredible study.

27.11.2024 19:04 — 👍 0 🔁 0 💬 0 📌 0

I think there's likely an effect there!

25.11.2024 22:13 — 👍 0 🔁 0 💬 0 📌 0

Economic sociologist, assistant professor at MIT Sloan, I study regulatory failure, market failures, and platform design - from an organizational perspective.

Serial fintech operator & investor. Prev: MD at M12 (Microsoft’s VC fund); ran Visa Ventures; early exec at VGS; Twitter: https://twitter.com/peter

Technologist, artist, and founder. CTO at Replicant AI. Originator of Ruse Hacker Collective, maître d'Pup's Pool Party, and producer at Sublimate NYC.

currently: investing in technology and films

creator: Breadwinner, Emoji Dick, 🦪

formerly: kickstarter, creative commons, y combinator

into: bread, data, surfing, literature, art

'not an artist per se' - The Guardian

20+ year serial tech entrepreneur with multiple exits. Currently working on an AI-first incubator. Fan of JTBD, Elixir, puzzles, my fam, and trail running

Reporter at The Information

idk tbd \\ marseille \\ pizza \\ grouchy

www.vaughntan.org

The Roots of Progress (rootsofprogress.org)

AI/ML Practitioner. Formerly VP of ML @ Tegus. Cameo, ShopRunner, Civis Analytics, Braintree/Venmo, Obama 2012. Views are all mine.

Respawning. Former CEO of HumanFirst (acquired by ICON plc). Curious about Irish poetry and life sciences/biotech. I like birds.

🌏 I forecast water & climate risks. AMA☔️

🌱Senior TED Fellow, Truman Fellow, RAND Pardee fellow, Harvard adjunct🦫

☀️SoCal-based, MN-born, 1st gen Punjabi🥁

💧Water Canary Founder🐤

Climate is the message but #water is the medium: go.ted.com/sonaarluthra

Professor, Stanford University

Just Giving: Why Philanthropy is Failing Democracy

System Error: Where Big Tech Went Wrong

Intellectual mutt, making AI useful. Former SRI International, APL, ISI Foundation, Stanford, Johns Hopkins. 4x founder. Decamped to Tahoe. Here to serve.

five out of eight computers on a scale of computers

😬 Man of the internet; generalist.

🆕 App updates and snark.

🫡 Twitter sober since April '23.

#️⃣ Invented the hashtag.

🚬 Non-smoker.

Trying to change how startups are built

formerly @ericries on the bird app

Bloomberg News Tech Reporter covering Microsoft and AI, Mom, Liverpool FC Fan. Opinions are my own... or my evil twin's.

post-normal person

harper.lol / harper.blog