"A framework for blaming willful ignorance" 🫣

-- New review paper with @rzultan.bsky.social and @tobigerstenberg.bsky.social now out in "Current Opinion in Psychology".

10.07.2025 05:07 — 👍 6 🔁 0 💬 0 📌 0

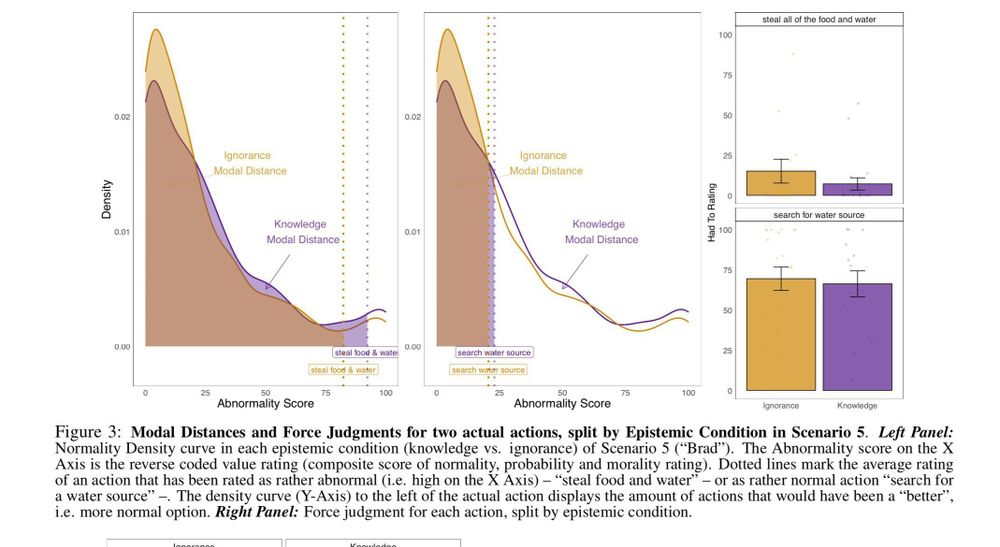

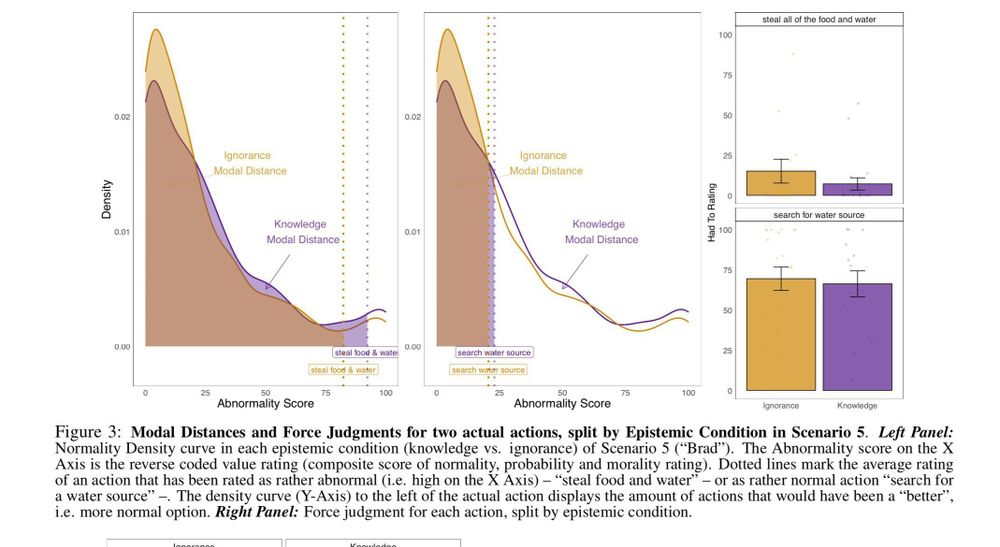

What makes people judge that someone was forced to take a particular action?

Research by @larakirfel.bsky.social et al. suggests one influence on this judgment is people’s representation of what an agent knows is possible:

buff.ly/Z0oaRNk

HT @xphilosopher.bsky.social

17.05.2025 15:09 — 👍 3 🔁 1 💬 0 📌 0

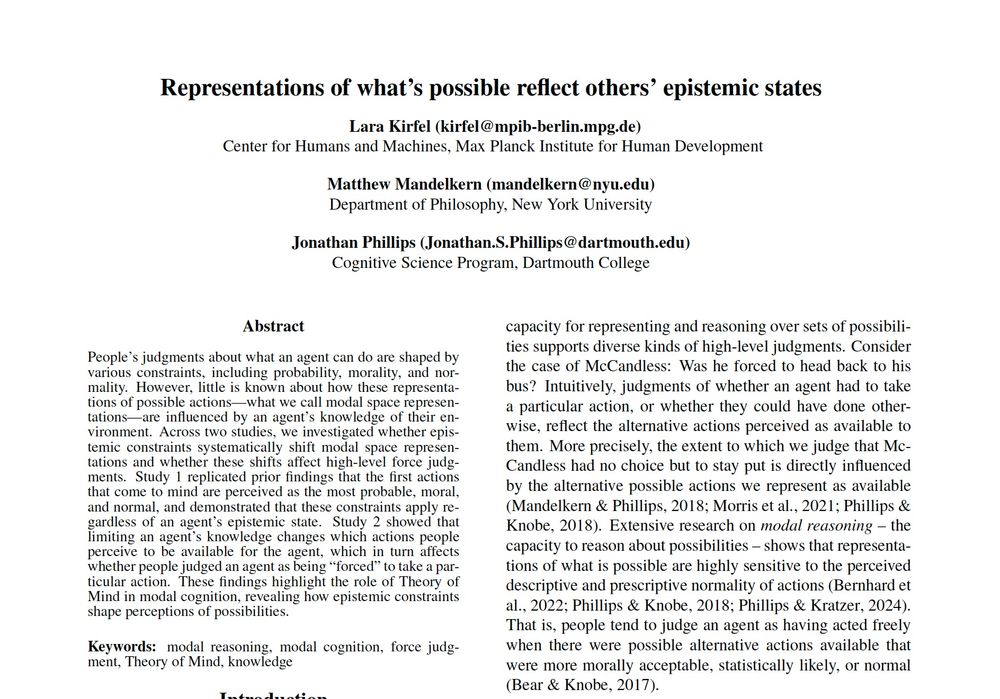

Title: Representations of what’s possible reflect others’ epistemic states

Authors: Lara Kirfel, Matthew Mandelkern, and Jonathan Scott Phillips

Abstract: People’s judgments about what an agent can do are shaped by various constraints, including probability, morality, and normality. However, little is known about how these representations of possible actions—what we call modal space representations—are influenced by an agent’s knowledge of their environment. Across two studies, we investigated whether epistemic constraints systematically shift modal space representations and whether these shifts affect high-level force judgments. Study 1 replicated prior findings that the first actions that come to mind are perceived as the most probable, moral, and normal, and demonstrated that these constraints apply regardless of an agent’s epistemic state. Study 2 showed that limiting an agent’s knowledge changes which actions people perceive to be available for the agent, which in turn affects whether people judged an agent as being “forced” to take a particular action. These findings highlight the role of Theory of Mind in modal cognition, revealing how epistemic constraints shape perceptions of possibilities.

🏔️ Brad is lost in the wilderness—but doesn’t know there’s a town nearby. Was he forced to stay put?

In our #CogSci2025 paper, we show that judgments of what’s possible—and whether someone had to act—depend on what agents know.

📰 osf.io/preprints/ps...

w/ Matt Mandelkern & @jsphillips.bsky.social

16.05.2025 12:04 — 👍 10 🔁 3 💬 0 📌 0

New paper for #CogSci2025: People cheat more when they delegate to AI. How can we stop this? We tested:

🧠 Explaining what the AI does (transparency)

🗣️ Calling cheating what it is (framing)

Only one worked.

w/ @larakirfel.bsky.social, Anne-Marie Nussberger, Raluca Rilla & @iyadrahwan.bsky.social

12.05.2025 10:20 — 👍 18 🔁 5 💬 0 📌 1

When AI meets counterfactuals: the ethical implications of counterfactual world simulation models

❗Now out in "AI and Ethics"❗

What are the consequences of AI that can reason counterfactually? Our new paper explores the ethical dimensions of AI-driven counterfactual world simulation. 🌎 🤖

With @tobigerstenberg.bsky.social, Rob MacCoun and Thomas Icard.

Link: shorturl.at/bHYEO

14.04.2025 08:50 — 👍 10 🔁 2 💬 0 📌 0

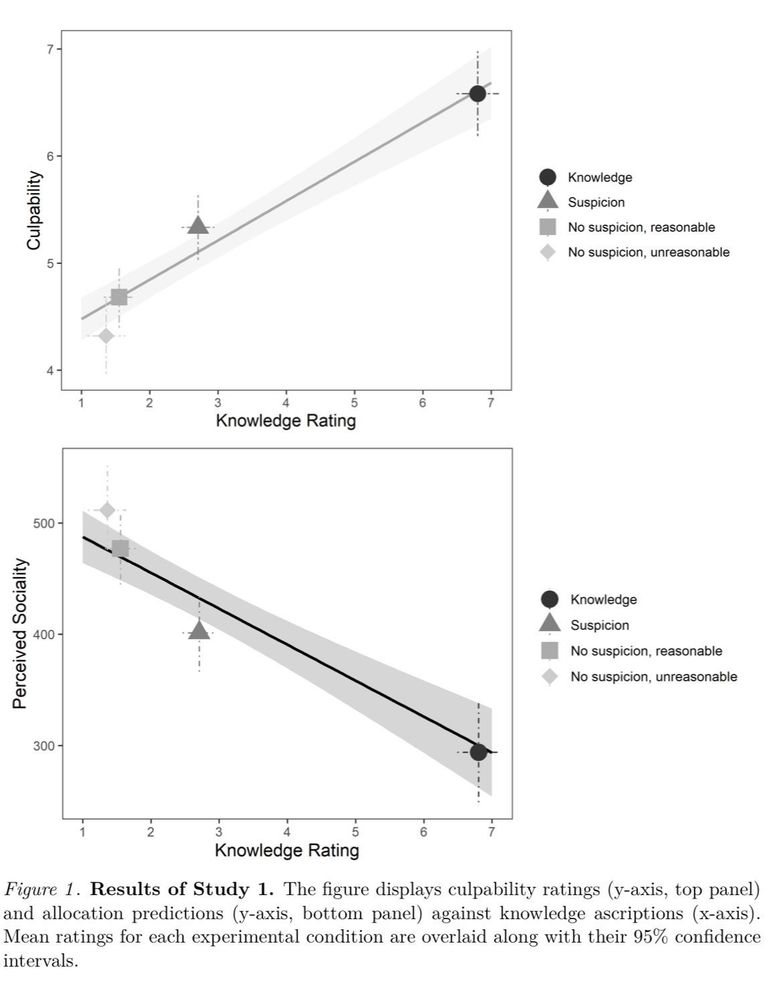

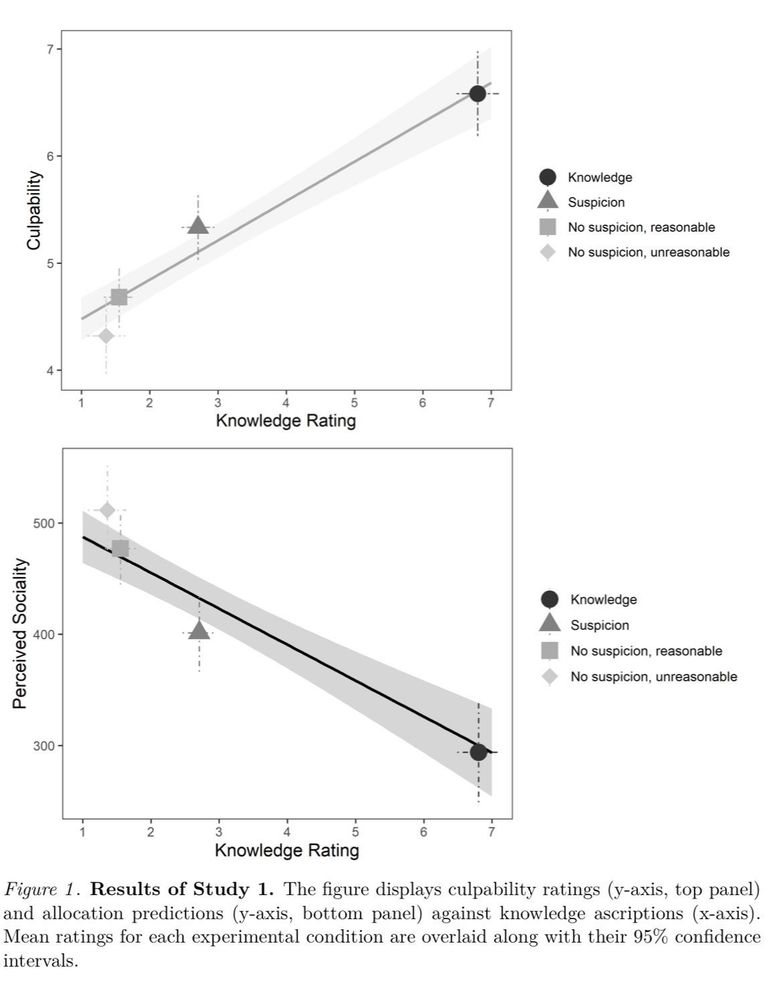

Does wilful ignorance—intentionally neglecting to ascertain you’re not implicated in criminal activity—make you culpable? Research by @larakirfel.bsky.social & Hannikainen suggests yes, as long as you suspected that may have been the case: https://buff.ly/3XDopzh

HT @xphilosopher.bsky.social

24.02.2025 16:09 — 👍 6 🔁 2 💬 0 📌 0

🗣️ People often select only a few events when explaining what happened. What drives people’s explanation selection?

🗞️ In our new paper, we propose a new model and show that people use explanations to communicate effective interventions. #Cogsci2024

🔗 Link to paper: osf.io/preprints/ps...

13.05.2024 08:14 — 👍 10 🔁 0 💬 0 📌 0

Human morality and what it means for AI! This week things get exciting again with @larakirfel.bsky.social on @realscide.bsky.social!

#wisskomm #scicomm

05.02.2024 06:23 — 👍 12 🔁 2 💬 0 📌 0

Political philosopher of AI. Assistant Prof @ UW-Madison. Previous: Harvard Tech & Human Rights Fellow, Princeton postdoc, Oxford DPhil 🤖 In Berlin May-August 📍

I used to scan brains, now I talk about science for a living. Science editor, science podcaster, and SPIEGEL-bestselling writer. Founder of @realscientists.de and trainer at @nawik.de. Looks even worse IRL.

🔗 https://jensfoell.de

Historian - Democracy and Its Discontents - Newsletter: Democracy Americana https://democracyamericana.com - Podcast: Is This Democracy https://podcasters.spotify.com/pod/show/is-this-democracy

I'm Nature's Deputy Editor for ecology, evolution and social science and handle papers in cog neuro, psych, and a variety of behavioral and social sciences. When I'm not working, I'm a mom (and sometimes even try to find time to play my harp or ski).

Chief Scientist at the UK AI Security Institute (AISI). Previously DeepMind, OpenAI, Google Brain, etc.

New and free open access journal promoting Open Science. We will probably start to accept submissions in October (2025). Submission guidelines and more information will be posted soon.

Postdoc @ CMU • Safeguarding scientific integrity in the age of AI scientists • Explainable multi-agent systems @ University of Edinburgh • gbalint.me • 🇭🇺🏴

PhD student at the ROCKWOOL Foundation and SODAS (University of Copenhagen), examining the use of artificial intelligence in the public sector.

Pursuing PhD at Center for Humans and Machines, Max Planck Institute for Human Development, Berlin / humanet3

Psych PhD student @Harvard

Building Impact, Responsible Design

📍Mannheim & Berlin

www.birdux.studio

#ExperienceDesign #UXDesign #ServiceDesign

i criticize the tech industry

🎙️ @techwontsave.us

📬 https://disconnect.blog

📖 https://roadtonowherebook.com

Cognitive scientist working at the intersection of moral cognition and AI safety. Currently: Google Deepmind. Soon: Assistant Prof at NYU Psychology. More at sites.google.com/site/sydneymlevine.

Assistant Prof. at Stanford GSB

Kempner Institute research fellow @Harvard interested in scaling up (deep) reinforcement learning theories of human cognition

prev: deepmind, umich, msr

https://cogscikid.com/

Director of Helmholtz Institute for Human-Centered AI in Munich.

Cognitive science, machine learning, large models.

https://hcai-munich.com

Currently a visiting researcher at Uni of Oxford. Normally at Uni of Bern.

Meta-scientist building tools to help other scientists. NLP, simulation, & LLMs.

Creator and developer of RegCheck (https://regcheck.app).

1/4 of @error.reviews.

🇮🇪