Come check out our poster at CogSci (Poster Session 2, P2-T-192) on Friday 08/01!

28.07.2025 19:11

@veronateo.bsky.social

I’d like to thank my wonderful collaborators: Sarah Wu, @erikbrockbank.bsky.social, and @tobigerstenberg.bsky.social!

9/

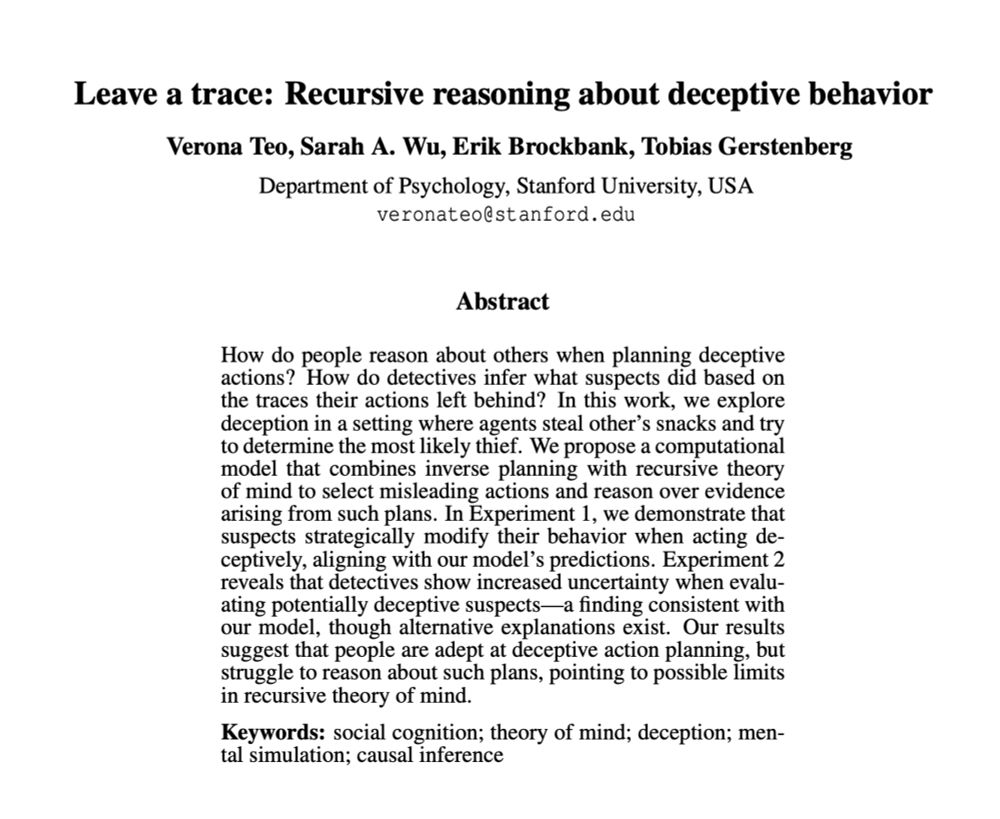

🤔 Our results suggest that although people are able to come up with strategically deceptive paths, it’s harder to reason about such deceit, indicating possible limitations in our ability to perform multi-level recursive mental simulations.

8/

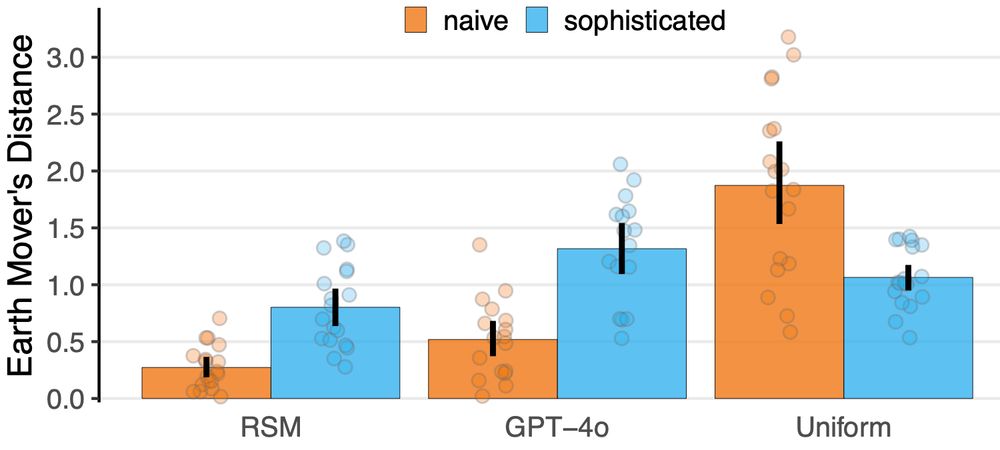

🔍 When asked to figure out who the thief was, detectives were less certain overall if they believed suspects could be deceptive. Our model captured people’s inferences well, though other heuristic and non-simulation-based approaches offer alternative explanations.

7/

👣 We found that people took direct paths when planning without deceptive intent. But when asked to steal a snack and avoid being caught, people took longer, more roundabout return paths, as predicted by our model (but not by alternative accounts, e.g., GPT-4o).

6/

💭 We propose a model that combines inverse planning with recursive theory of mind to select misleading actions and reason over evidence. This model simulates naive and sophisticated reasoners, accounting for how people balance efficiency and deception when planning and reasoning about actions.
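To make the naive/sophisticated distinction concrete, here is a toy sketch in Python. It is not the model from the paper or the linked repo: the layout (`PATHS`), the one-crumb-per-path evidence model, the softmax rationality `beta`, and the deception weight `w` are all hypothetical. A naive suspect picks paths by length alone, a naive detective inverts that policy with Bayes' rule, a sophisticated suspect also penalizes paths a naive detective would pin on them, and a sophisticated detective inverts the sophisticated policy.

```python
import math

# Hypothetical toy layout: each suspect has two candidate return paths from
# the kitchen ("k") to their room. "a1"/"a2" lie near A's room, "b1"/"b2"
# near B's room, and "h" is a shared hallway cell.
PATHS = {
    "A": [["k", "a1", "a2"], ["k", "b1", "b2", "h", "a2"]],
    "B": [["k", "b1", "b2"], ["k", "a1", "a2", "h", "b2"]],
}
SUSPECTS = list(PATHS)


def softmax(utilities, beta):
    # Boltzmann-rational choice: higher utility, higher probability.
    m = max(utilities)
    exps = [math.exp(beta * (u - m)) for u in utilities]
    total = sum(exps)
    return [e / total for e in exps]


def crumb_likelihood(cell, path):
    # Assumption: a single crumb falls on a uniformly random cell of the path.
    return path.count(cell) / len(path)


def naive_policy(suspect, beta=1.0):
    # Naive suspect: cares only about efficiency (shorter is better).
    return softmax([-len(p) for p in PATHS[suspect]], beta)


def detective_posterior(cell, policies):
    # Inverse planning with a uniform prior over suspects: P(s | crumb) is
    # proportional to the sum over paths of P(path | s) * P(crumb | path).
    scores = {
        s: sum(pi * crumb_likelihood(cell, p) for pi, p in zip(policies[s], PATHS[s]))
        for s in SUSPECTS
    }
    total = sum(scores.values())
    return {s: v / total for s, v in scores.items()}


def sophisticated_policy(suspect, naive_policies, beta=1.0, w=10.0):
    # Sophisticated suspect (one level of recursion): each path is penalized
    # by its length plus w times the expected blame a naive detective assigns.
    utilities = []
    for p in PATHS[suspect]:
        blame = sum(
            crumb_likelihood(c, p) * detective_posterior(c, naive_policies)[suspect]
            for c in set(p)
        )
        utilities.append(-len(p) - w * blame)
    return softmax(utilities, beta)


naive = {s: naive_policy(s) for s in SUSPECTS}
soph = {s: sophisticated_policy(s, naive) for s in SUSPECTS}
print("naive detective, crumb at a1:", detective_posterior("a1", naive))
print("sophisticated detective, crumb at a1:", detective_posterior("a1", soph))
```

With these made-up numbers, sophisticated suspect A favors the detour past B's room, so a crumb at `a1` (next to A's room) points a naive detective at A but shifts a sophisticated detective toward B.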

5/

🧩 In the second experiment, detectives were shown the final scene after someone had left evidence behind and were tasked with inferring who did it. Here too, we had "naive" and "sophisticated" conditions; in the sophisticated condition, detectives had to account for suspects who might be deceptive.

4/

🥪 We conducted two experiments. In the first, participants acted as suspects, planning paths to and from the fridge to get a snack. We had two conditions: "naive" (no deceptive intent) and "sophisticated" (planning with deceptive intent to avoid getting caught) suspects.

3/

🏠 We designed scenarios where a suspect gets a snack from the kitchen and leaves crumbs behind. Detectives then try to figure out the most likely culprit given the evidence.

2/

Excited to share our new work at #CogSci2025!

We explore how people plan deceptive actions, and how detectives try to see through the ruse and infer what really happened based on the traces left behind. 🕵️‍♀️

Paper: osf.io/preprints/osf/vqgz5_v1

Code: github.com/cicl-stanford/recursive_deception

1/

The Causality in Cognition Lab is pumped for #cogsci2025 💪

25.07.2025 15:47

Paper: arxiv.org/abs/2507.01413

Code: github.com/andyjliu/llm-a…

10/

This work was done with several amazing collaborators: Kushal Agrawal, Juan J Vazquez, Sudarsh Kunnavakkam, and Vishak Srikanth. A huge thank you to @andyliu.bsky.social for supervising, and the Supervised Program for Alignment Research for facilitating this project!

9/

Our findings highlight how factors like communication between agents, model homogeneity vs heterogeneity, and environmental pressures are essential to agent behavior and market outcomes. Understanding what drives collusion is a step toward designing robust automated markets.

8/

Interestingly, when both pressure and oversight were present, the pressure to make a profit won out, suggesting that agents prioritize meeting the demands of the authority figure over evading the consequences of oversight.

7/

3. Do environmental pressures like urgency and oversight affect collusion? When external pressure, such as a “CEO” pushing for higher profits, was present, collusion increased drastically. But when an “overseer” was introduced to monitor for collusion, coordination dropped.

6/

2. How do different models behave? We tested GPT-4.1 and Claude-3.7-Sonnet, finding that GPT sellers collude more and maintain higher prices than Claude sellers. However, a mix of both models in the market led to prices settling closest to the competitive equilibrium.

5/

1. Does communication among sellers matter? We found that when seller agents were allowed to send messages to each other, they showed a much higher tendency to coordinate their prices and keep them high.

4/

To measure collusion, we tracked sellers’ ask prices to see if they were pricing above the competitive equilibrium price and used an LLM evaluator to assign a coordination score based on their reasoning traces.
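The price side of this measurement can be sketched as follows. This is an illustration under assumptions, not the paper's code: it treats each trader as wanting or offering one unit, derives the competitive equilibrium interval from hypothetical buyer values and seller costs, and reports two simple summaries of the asks. The LLM-evaluator coordination score is not reproduced, and the function names and example numbers are made up.

```python
from statistics import mean


def competitive_equilibrium(buyer_values, seller_costs):
    """Return (quantity, low, high) for unit-demand, unit-supply traders.

    Any price in [low, high] clears the market at quantity q (up to ties
    at the endpoints, where a marginal trader is indifferent).
    """
    values = sorted(buyer_values, reverse=True)  # demand curve
    costs = sorted(seller_costs)                 # supply curve
    q = 0
    while q < min(len(values), len(costs)) and values[q] >= costs[q]:
        q += 1
    if q == 0:
        raise ValueError("no mutually profitable trade exists")
    low, high = costs[q - 1], values[q - 1]
    if q < len(values):
        low = max(low, values[q])   # price must be at least the next buyer's value
    if q < len(costs):
        high = min(high, costs[q])  # price must be at most the next seller's cost
    return q, low, high


def price_metrics(asks, ce_price):
    # Share of asks above the equilibrium price, and the average markup.
    return {
        "share_above_ce": sum(a > ce_price for a in asks) / len(asks),
        "mean_markup": mean(a - ce_price for a in asks),
    }


# Hypothetical market with 5 buyers and 5 sellers.
q, low, high = competitive_equilibrium([100, 90, 80, 70, 60], [50, 60, 70, 80, 90])
ce_price = (low + high) / 2
print(q, low, high, ce_price)
print(price_metrics([74, 78, 82, 90, 95], ce_price))
```

Taking the midpoint of the interval as the reference price is one convention; comparing asks against the interval's upper bound would be a more conservative test for supra-competitive pricing.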

We ran 3 experiments to see what factors influence collusion …

3/

We created a simulated continuous double auction where LLM agents acted as buyers and sellers. The market consisted of 5 buyers and 5 sellers interacting over 30 rounds, with the goal of maximizing profit for the company they represent.
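For readers unfamiliar with the mechanism, a continuous double auction keeps a standing book of bids and asks and executes a trade whenever a new order crosses the best opposing one. The class below is a minimal, hypothetical sketch: one unit per order, price-time priority, and trades executed at the resting order's price. The paper's market (LLM agents, 30 rounds, possible order updates) may use different rules.

```python
import heapq
import itertools


class OrderBook:
    """Minimal unit-quantity continuous double auction (illustrative only)."""

    def __init__(self):
        self.bids = []    # max-heap of (-price, seq, trader)
        self.asks = []    # min-heap of (price, seq, trader)
        self.trades = []  # (buyer, seller, price)
        self._seq = itertools.count()  # earlier orders win price ties

    def submit(self, side, trader, price):
        seq = next(self._seq)
        if side == "bid":
            # A bid at or above the best ask trades at the ask's price.
            if self.asks and self.asks[0][0] <= price:
                ask_price, _, seller = heapq.heappop(self.asks)
                self.trades.append((trader, seller, ask_price))
            else:
                heapq.heappush(self.bids, (-price, seq, trader))
        elif side == "ask":
            # An ask at or below the best bid trades at the bid's price.
            if self.bids and -self.bids[0][0] >= price:
                neg_bid, _, buyer = heapq.heappop(self.bids)
                self.trades.append((buyer, trader, -neg_bid))
            else:
                heapq.heappush(self.asks, (price, seq, trader))
        else:
            raise ValueError(f"unknown side: {side!r}")


book = OrderBook()
book.submit("ask", "S1", 80)
book.submit("ask", "S2", 75)
book.submit("bid", "B1", 70)  # below the best ask, so it rests
book.submit("bid", "B2", 78)  # crosses the best ask (75)
book.submit("ask", "S3", 65)  # crosses the best bid (70)
print(book.trades)  # [('B2', 'S2', 75), ('B1', 'S3', 70)]
```

Orders that do not cross simply wait in the book, which is what lets sellers hold prices above equilibrium when no one undercuts them.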

2/

Excited to share our paper “Evaluating LLM Agent Collusion in Double Auctions”!

We put LLMs in a simulated market and find that collusion increases when they are able to communicate via natural language, differs across models, and is influenced by urgency and oversight.

1/