Thanks! :)

17.10.2025 06:44 — 👍 0 🔁 0 💬 0 📌 0

Amr Farahat

@amr-farahat.bsky.social

MD/M.Sc/PhD candidate @ESI_Frankfurt and IMPRS for neural circuits @MpiBrain. Medicine, Neuroscience & AI https://amr-farahat.github.io/

Yes! Next Wednesday! 🙏

16.10.2025 16:33 — 👍 1 🔁 0 💬 1 📌 0

Congratulations! 🎉🎉 And welcome to Frankfurt!

16.10.2025 08:37 — 👍 1 🔁 0 💬 1 📌 0

🚨Our NeurIPS 2025 competition Mouse vs. AI is LIVE!

We combine a visual navigation task + large-scale mouse neural data to test what makes visual RL agents robust and brain-like.

Top teams: featured at NeurIPS + co-author our summary paper. Join the challenge!

Whitepaper: arxiv.org/abs/2509.14446

Psychological measurement of subjective visual experiences through image reconstruction. (a) Mapping of brain, stimulus, and mind. Dots represent instances of visual experience (e.g., an image, perception, and corresponding brain activity). Veridical perception assumes that the mind accurately represents stimuli. The brain–mind mapping is considered fixed, while the brain–stimulus relationship is empirically identified. (b) Nonveridical perception (e.g., mental imagery, attentional modulation, and illusions) occurs when perceived content diverges from physical properties. The fixed brain–mind mapping and decoders trained on brain activity under veridical conditions allow the reconstruction of mental content as an image. (c) Reconstruction of mental imagery is achieved using models trained on brain activity from natural images.

Visual image reconstruction from brain activity via latent representation www.annualreviews.org/content/jour... by @ykamit.bsky.social et al.; mental imagery, #neuroscience

18.09.2025 09:11 — 👍 9 🔁 1 💬 1 📌 0

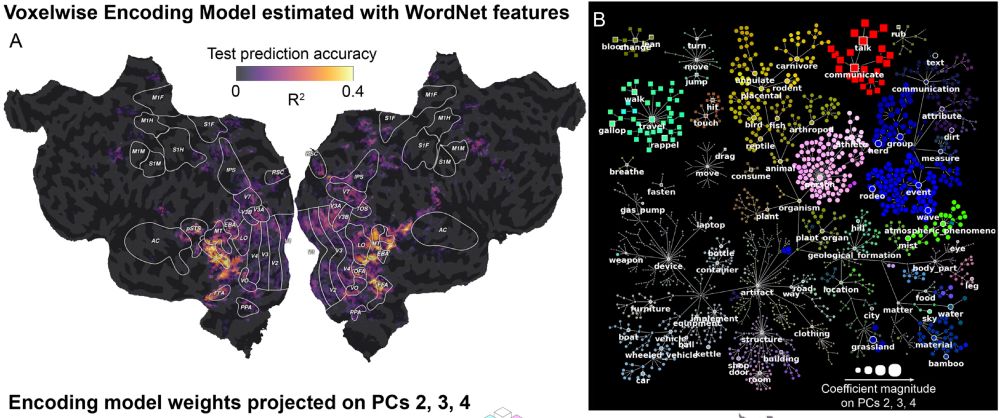

New paper in Imaging Neuroscience by Tom Dupré la Tour, Matteo Visconti di Oleggio Castello, and Jack L. Gallant:

The Voxelwise Encoding Model framework: A tutorial introduction to fitting encoding models to fMRI data

doi.org/10.1162/imag...
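For readers new to encoding models, here is a minimal sketch of the general idea. It assumes ridge regression (a common choice for such models) from one layer's stimulus features to recorded responses, scored by held-out Pearson correlation. The function names, the 80/20 split, and `alpha=1.0` are illustrative assumptions, not code from the tutorial. Running the same scoring for every layer of a network is one way to find the "most predictive layer" for an area.

```python
import numpy as np


def fit_ridge(X, Y, alpha):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def pearson_per_target(Y_true, Y_pred):
    """Pearson r between observed and predicted responses, one value per neuron/voxel."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    return (yt * yp).sum(axis=0) / np.sqrt((yt ** 2).sum(axis=0) * (yp ** 2).sum(axis=0))


def score_layer(features, responses, alpha=1.0, train_frac=0.8):
    """Fit on the first train_frac of stimuli, return mean held-out correlation."""
    n_train = int(train_frac * len(features))
    X_tr, X_te = features[:n_train], features[n_train:]
    Y_tr, Y_te = responses[:n_train], responses[n_train:]
    # z-score features with training-set statistics only (no test leakage)
    mu, sd = X_tr.mean(axis=0), X_tr.std(axis=0) + 1e-8
    X_tr, X_te = (X_tr - mu) / sd, (X_te - mu) / sd
    # center responses so the ridge model needs no intercept term
    y_mu = Y_tr.mean(axis=0)
    W = fit_ridge(X_tr, Y_tr - y_mu, alpha)
    return pearson_per_target(Y_te, X_te @ W + y_mu).mean()


def best_layer(layer_features, responses, alpha=1.0):
    """Score every layer (dict: name -> [n_stimuli, n_features]) and pick the best."""
    scores = {name: score_layer(F, responses, alpha) for name, F in layer_features.items()}
    return max(scores, key=scores.get), scores
```

In practice `alpha` would be chosen by cross-validation on the training set, and real stimulus order should be shuffled or split by stimulus identity before fitting.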

(1/6) Thrilled to share our triple-N dataset (Non-human Primate Neural Responses to Natural Scenes)! It captures thousands of high-level visual neuron responses in macaques to natural scenes using #Neuropixels.

11.05.2025 13:33 — 👍 122 🔁 42 💬 2 📌 1

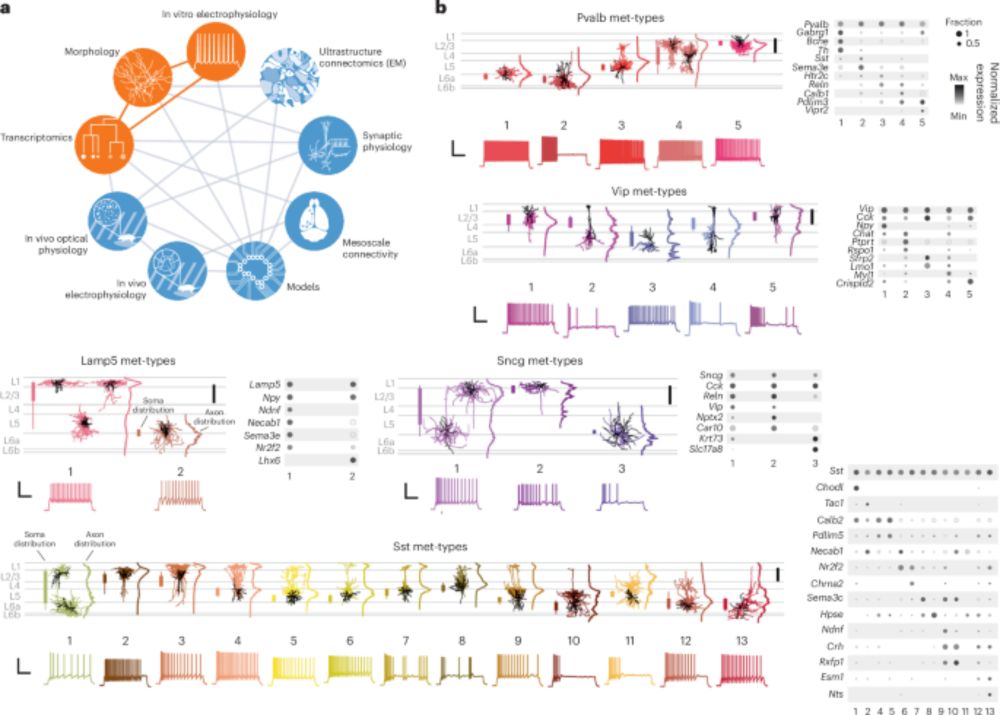

A Perspective on integrating multimodal data to understand cortical circuit architecture and function

@alleninstitute.bsky.social

www.nature.com/articles/s41...

Improvements to brain–computer interfaces are bringing the technology closer to natural conversation speed. www.nature.com/articles/d41...

01.04.2025 07:26 — 👍 4 🔁 1 💬 0 📌 0

Yes indeed. It probably has something to do with learning dynamics that favor increasing complexity gradually. Or it could be that the loss landscape has edges between high- and low-complexity volumes.

15.03.2025 13:31 — 👍 3 🔁 0 💬 0 📌 0

In AlexNet, however, the first layers are the most predictive. That's because AlexNet has bigger filters in its early layers (see Miao and Tong 2024).

15.03.2025 12:35 — 👍 1 🔁 0 💬 1 📌 0

V1 is usually better predicted by intermediate layers than by early layers, but this depends on the model architecture. In Cadena et al. 2019, block3_conv1 of VGG19 was the most predictive layer. Early layers in VGG have very small receptive fields, which makes it difficult for them to capture V1-like features.

15.03.2025 12:35 — 👍 1 🔁 0 💬 1 📌 0

This was the layer most predictive of V1 in the VGG16 model. For IT, it was block4_conv2.

15.03.2025 10:58 — 👍 0 🔁 0 💬 1 📌 0

and then starts increasing again with further training to fit the target function. This is the most likely explanation for the initial drop in V1 prediction.

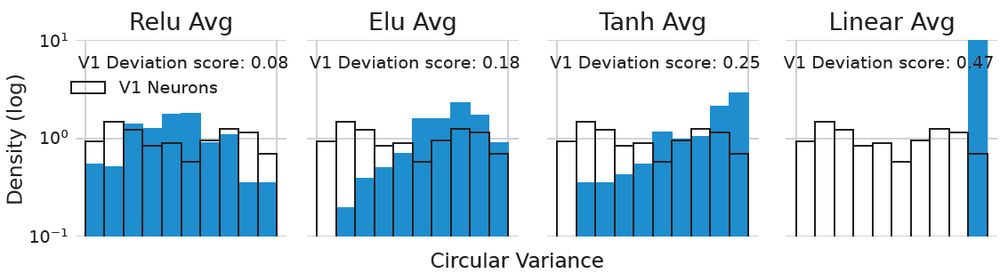

15.03.2025 10:57 — 👍 1 🔁 0 💬 1 📌 0

In separate experiments on the simple CNN models, we also observed that model complexity "resets" to a low value (lower than its random-weight complexity) after the first training epoch (likely because the units operate in the linear part of the activation function)

15.03.2025 10:57 — 👍 1 🔁 0 💬 1 📌 0

Thanks for your interest! Object recognition performance increases from the very first training epoch, yet V1 prediction drops considerably, so this drop supports the view that object recognition training is not significant for V1.

15.03.2025 10:57 — 👍 1 🔁 0 💬 1 📌 0

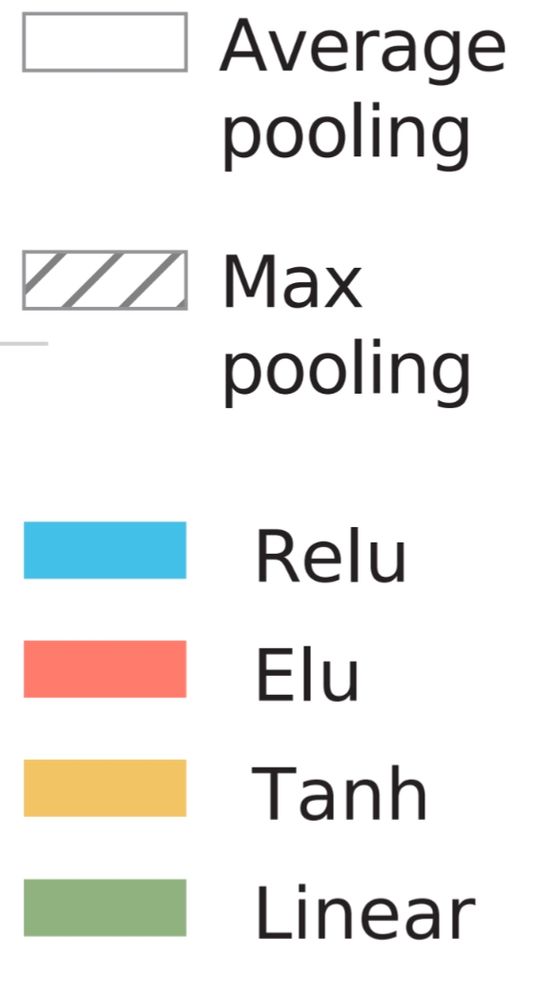

The legend of the left plot was missing!

14.03.2025 17:32 — 👍 0 🔁 0 💬 0 📌 0

read more here

www.biorxiv.org/content/10.1...

15/15

It is also important to use various ways to assess model strengths and weaknesses, not just one like prediction accuracy.

14/15

Our results also emphasize the importance of rigorous controls when using black-box models like DNNs in neural modeling. Such controls can show what makes a good neural model and help us generate hypotheses about brain computations.

13/15

Our results suggest that the architectural bias of CNNs is key to predicting neural responses in the early visual cortex. This aligns with results in computer vision showing that random convolutions suffice for several visual tasks.

12/15
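To make "random convolutions" concrete, here is a minimal sketch of a single untrained convolutional layer used as a fixed feature extractor: filters are drawn from a Gaussian and never trained, and only the nonlinearity is chosen. The function names, Gaussian initialization, filter size, and single-layer design are illustrative assumptions, not the architecture used in the paper. In a neural-prediction setting, these features would then feed a trained linear readout.

```python
import random


def relu(x):
    return max(0.0, x)


def random_filters(n_filters, k, seed=0):
    """Draw n_filters untrained k x k filters with i.i.d. N(0, 1) weights."""
    rng = random.Random(seed)
    return [[[rng.gauss(0.0, 1.0) for _ in range(k)] for _ in range(k)]
            for _ in range(n_filters)]


def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation (what DNN libraries call convolution)."""
    k = len(kernel)
    h, w = len(image), len(image[0])
    return [[sum(kernel[a][b] * image[i + a][j + b] for a in range(k) for b in range(k))
             for j in range(w - k + 1)]
            for i in range(h - k + 1)]


def random_conv_features(image, n_filters=8, k=3, seed=0, activation=relu):
    """Flattened activations of one random conv layer; swap `activation` to compare nonlinearities."""
    feats = []
    for f in random_filters(n_filters, k, seed):
        feats.extend(activation(v) for row in conv2d_valid(image, f) for v in row)
    return feats
```

Keeping `activation` as a parameter mirrors the comparison in this thread between random networks that differ only in their nonlinearity.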

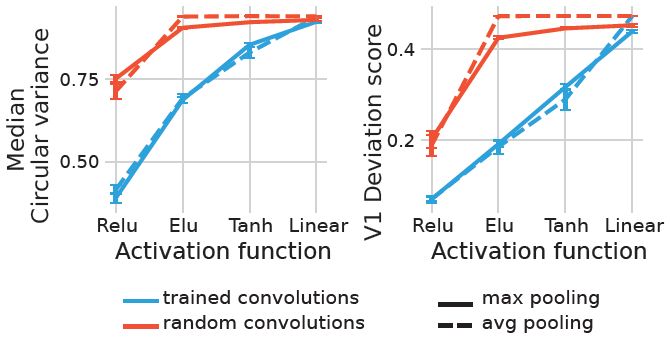

We found that random ReLU networks performed the best among random networks and only slightly worse than their fully trained counterparts.

11/15

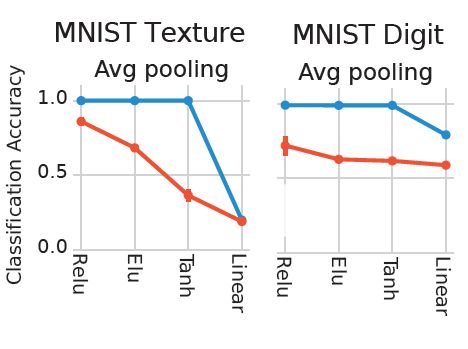

Then we tested the ability of random networks to support texture discrimination, a task known to involve the early visual cortex. We created Texture-MNIST, a dataset that supports training on two tasks: object (digit) recognition and texture discrimination.

10/15

We found that trained ReLU networks are the most V1-like with respect to OS. Moreover, random ReLU networks were the most V1-like among random networks and even on par with other fully trained networks.

9/15

We quantified the orientation selectivity (OS) of artificial neurons using circular variance and calculated how their distribution deviates from the distribution of an independent dataset of experimentally recorded V1 neurons.

8/15
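A minimal sketch of the two steps in the previous post. Circular variance uses the standard orientation form, where angles are doubled because orientation repeats every 180 degrees: 0 means the unit responds to a single orientation, 1 means it is untuned. The thread does not say which distance was used to compare distributions, so the two-sample Kolmogorov-Smirnov statistic here is an assumption chosen for illustration, and the function names are hypothetical.

```python
import math


def circular_variance(orientations_deg, responses):
    """1 - |sum r_k * exp(2i * theta_k)| / sum r_k (angles doubled for orientation)."""
    total = sum(responses)
    if total <= 0:
        raise ValueError("responses must have a positive sum")
    re = sum(r * math.cos(2 * math.radians(t)) for t, r in zip(orientations_deg, responses))
    im = sum(r * math.sin(2 * math.radians(t)) for t, r in zip(orientations_deg, responses))
    return 1.0 - math.hypot(re, im) / total


def ks_distance(a, b):
    """Two-sample KS statistic: largest gap between the two empirical CDFs."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # advance both samples past every value <= x
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d
```

Usage: compute `circular_variance` for every model unit and every recorded neuron, then `ks_distance(model_cvs, v1_cvs)`; a smaller value means a more V1-like OS distribution.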

ReLU was introduced into DNN models, inspired by the sparsity of biological neural systems and the input/output function of biological neurons.

To test its biological relevance, we looked at two characteristics of early visual processing: orientation selectivity and the capacity to support texture discrimination.

7/15

Importantly, these findings hold true both for firing rates in monkeys and human fMRI data, suggesting their generalizability.

6/15

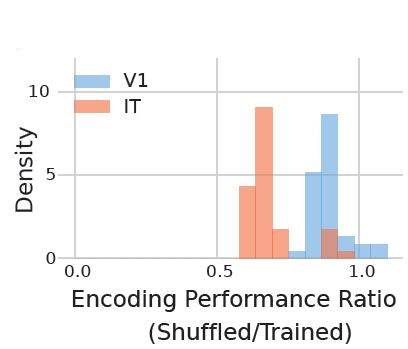

Even when we shuffled the trained weights of the convolutional filters, V1 models were far less affected than IT models.

5/15
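One way to picture this shuffling control is sketched below: the trained values of a layer's weights are kept, but their positions are randomly permuted, so the weight distribution survives while the spatial structure of each filter is destroyed. The thread does not specify the shuffling scope; permuting across the whole layer, the nested-list `[out][in][k][k]` layout, and the function name are all assumptions for illustration.

```python
import random


def shuffle_filter_weights(weights, seed=None):
    """Return a copy of a conv weight tensor [out][in][k][k] with all values
    permuted across the layer. The multiset of values is preserved."""
    rng = random.Random(seed)
    flat = [w for oc in weights for ic in oc for row in ic for w in row]
    rng.shuffle(flat)
    it = iter(flat)
    # refill in the same traversal order so the output shape matches the input
    return [[[[next(it) for _ in row] for row in ic] for ic in oc] for oc in weights]
```

A permutation rather than fresh random values isolates the role of the precise weight configuration, since weight statistics such as mean and variance stay identical.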

This means that, in contrast to V1, predicting responses in higher visual areas (e.g., IT, VO) strongly depends on precise weight configurations acquired through training, highlighting the functional specialization of those areas.